Virtualization has become a cornerstone technology in modern IT infrastructure, enabling organizations to optimize their resources, reduce costs, and improve scalability. At its core, virtualization refers to the abstraction of physical hardware to create virtual versions of servers, storage devices, networks, and other computing resources. This abstraction allows multiple operating systems (OS) and applications to run on a single physical machine, creating efficiencies and flexibility in how resources are used.

The growing demand for cloud services, increased data processing needs, and the shift towards more agile IT operations have all driven the rapid adoption of virtualization. In this blog, we will explore the different types of virtualization technologies, the key concepts behind them, and how they are applied in real-world environments.

1. History of Virtualization

Virtualization has been around since the 1960s, and its evolution has played a significant role in shaping modern computing. By decoupling software from the physical hardware it runs on, virtualization allows for better resource utilization, flexibility, and scalability.

The history of virtualization can be broken down into several key phases, each representing technological advancements that contributed to its current state. Here's a timeline of its development:

Table: Milestones in the History of Virtualization

| Year | Milestone | Description |
| --- | --- | --- |
| 1960s | Early Beginnings: Mainframe Virtualization | IBM develops the first virtualization technology in mainframe computers, allowing multiple users to share the same system without interference. |
| 1970s | IBM VM/370 System | IBM introduces the VM/370 operating system, one of the first true examples of virtualization for business computing on mainframes. |
| 1980s | Virtual Memory in Personal Computers | The concept of virtual memory, which allows the operating system to use hard drive space as "virtual" RAM, gains popularity, especially in personal computers. |
| 1990s | The Rise of x86 Virtualization | Virtualization software starts being developed for x86-based systems, allowing personal computers to run multiple OS instances on the same machine. |
| 2000s | Server Virtualization and VMware | VMware creates one of the first commercial x86 virtualization products, allowing IT administrators to run multiple OS instances on a single server. This leads to a surge in virtualization adoption in data centers. |
| 2005-2010 | Cloud Computing and Virtualization | The growth of cloud computing platforms like Amazon Web Services (AWS) brings virtualization into the mainstream for public and private cloud environments. |
| 2010s | Containerization and Docker | Containerization (via Docker) becomes a major trend, offering lightweight virtualization that is faster and more efficient than traditional virtual machines. |
| 2020s | AI and Machine Learning Integration with Virtualization | AI and machine learning technologies are integrated with virtualization platforms to improve resource management, automation, and efficiency in virtual environments. |
| 2020s (Ongoing) | Quantum Computing and Virtualization | Research into quantum computing impacts the future of virtualization, promising potentially transformative capabilities in computation. |

Detailed Overview of Key Milestones

- 1960s - Early Beginnings: Mainframe Virtualization

The concept of virtualization was first introduced in the 1960s by IBM. During this time, large, expensive mainframe computers were used by businesses, and there was a need to share the computer's resources between multiple users without having them interfere with each other. IBM's CP-40 (Control Program) system, developed in 1967, allowed users to run different programs in isolation, providing the foundation for what we know today as virtualization.

- 1970s - IBM VM/370 System

In 1972, IBM introduced the VM/370 operating system, which provided full virtualization for its mainframe computers. This system allowed multiple virtual machines (VMs) to run on a single physical machine, enabling businesses to maximize resource utilization and reduce costs. The VM/370 is considered one of the first practical implementations of virtualization.

- 1980s - Virtual Memory in Personal Computers

During the 1980s, the concept of virtual memory became popular in personal computing. Virtual memory allows operating systems to use hard disk space as if it were part of the computer's RAM. While this isn't virtualization in the sense we understand today, it laid the groundwork for creating software-driven systems that operate independently of physical hardware limitations.

- 1990s - The Rise of x86 Virtualization

The 1990s saw the development of virtualization technologies for x86-based computers. Companies began developing software solutions that allowed users to run multiple operating systems on a single x86-based machine. This marked the beginning of the widespread use of virtualization in personal and enterprise computing.

- 2000s - Server Virtualization and VMware

VMware, founded in 1998, developed the first successful x86 virtualization product, which transformed the data center landscape. VMware's platform allowed IT departments to consolidate hardware by running multiple virtual machines on a single physical server. This innovation greatly improved the efficiency of data centers, reducing hardware costs and energy consumption. VMware quickly became the leading provider of virtualization solutions for enterprises.

- 2005-2010 - Cloud Computing and Virtualization

As cloud computing gained traction in the 2000s, virtualization played a crucial role in enabling scalable and efficient cloud services. Cloud providers like Amazon Web Services (AWS) began using virtualization to offer on-demand, scalable resources to businesses worldwide. This period marked the shift from traditional on-premise data centers to cloud environments, where virtualization underpinned the infrastructure-as-a-service (IaaS) model.

- 2010s - Containerization and Docker

The 2010s introduced the concept of containerization, which provided a more lightweight alternative to traditional virtualization. Containers, popularized by Docker, allow developers to package applications with all their dependencies, making them portable and efficient. Unlike virtual machines, which emulate entire physical systems, containers run on a shared operating system, making them faster and more resource-efficient. This era marked the shift toward microservices architecture and DevOps practices.

- 2020s - AI and Machine Learning Integration

Today, AI and machine learning are being integrated with virtualization platforms to optimize resource allocation, improve automation, and enhance virtual environments. Virtualization tools now incorporate predictive analytics and self-healing mechanisms to better manage workloads and enhance performance.

- 2020s (Ongoing) - Quantum Computing and Virtualization

Quantum computing, while still in its early stages, could eventually influence virtualization by speeding up certain classes of problems, such as optimization, that are impractical on classical computers. Researchers are exploring how quantum resources might be integrated with traditional virtualization platforms, although practical applications remain speculative.

2. Core Concepts and Terminology

To understand virtualization, it is essential to familiarize yourself with the core concepts and terminology that underpin it. Here are some key terms and ideas:

- Hypervisor: A critical component in virtualization, the hypervisor is software that enables the creation and management of virtual machines (VMs). It sits between the hardware and the virtual machines, ensuring that each VM has access to the required resources while remaining isolated from others.

- Virtual Machine (VM): A VM is an emulation of a physical computer that runs an operating system and applications. Each VM behaves like a separate physical machine, but it is hosted on a hypervisor, sharing the resources of the physical server.

- Host and Guest: The "host" refers to the physical machine that runs the hypervisor, while the "guest" refers to the virtual machines running on that hypervisor. Each guest VM can have its own operating system and applications.

- Resource Pooling: One of the main advantages of virtualization is the ability to pool and allocate resources dynamically across multiple virtual environments. This helps in balancing workloads and improving the efficiency of resource utilization.

- Isolation: Virtualization allows for the isolation of VMs, ensuring that they operate independently. If one VM encounters an issue, it doesn't affect the others, making the system more resilient.

These foundational concepts form the bedrock of virtualization and provide the basis for more advanced technologies and applications.
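
To make the host/guest, resource pooling, and isolation ideas concrete, here is a minimal Python sketch. It is a toy model, not how any real hypervisor is implemented: the `Host` and `Guest` classes, their fields, and the memory-only admission check are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Guest:
    """A guest VM with its own resource reservation (hypothetical model)."""
    name: str
    vcpus: int
    memory_gb: int


@dataclass
class Host:
    """A physical host whose hypervisor hands out pooled memory to guests."""
    cpus: int
    memory_gb: int
    guests: dict = field(default_factory=dict)

    def free_memory_gb(self) -> int:
        # Pool size minus everything already reserved by running guests.
        return self.memory_gb - sum(g.memory_gb for g in self.guests.values())

    def start_guest(self, guest: Guest) -> bool:
        """Admit the guest only if the pool can still cover its memory."""
        if guest.memory_gb > self.free_memory_gb():
            return False
        self.guests[guest.name] = guest
        return True

    def stop_guest(self, name: str) -> None:
        """Removing one guest leaves the others untouched (isolation)."""
        self.guests.pop(name, None)


host = Host(cpus=16, memory_gb=64)
print(host.start_guest(Guest("web", vcpus=4, memory_gb=16)))    # True
print(host.start_guest(Guest("db", vcpus=8, memory_gb=32)))     # True
print(host.start_guest(Guest("cache", vcpus=4, memory_gb=32)))  # False
```

The third guest is rejected because only 16 GB of the 64 GB pool remains, while the two running guests are unaffected.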

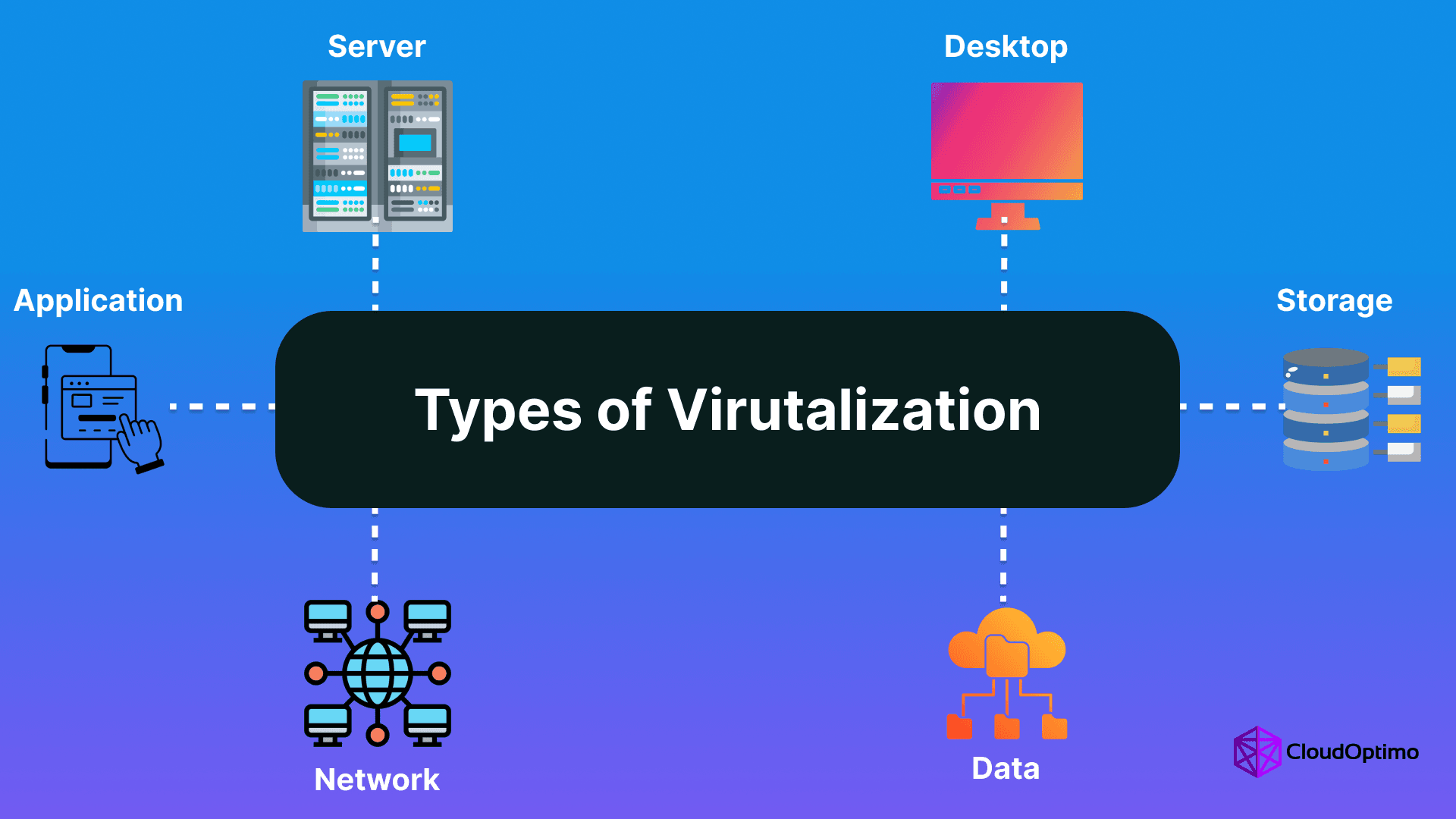

3. Types of Virtualization

Virtualization comes in various forms, each designed to address specific challenges and requirements in modern IT environments. Let’s explore the main types:

Hardware/Platform Virtualization

Hardware or platform virtualization is the most fundamental form, where the hypervisor creates virtual instances of a physical machine. This allows multiple operating systems to run on a single physical server. The hypervisor abstracts the physical hardware and allocates resources to each virtual machine independently. This type of virtualization is widely used in data centers and cloud environments to maximize hardware utilization and scalability.

Network Virtualization

Network virtualization involves the creation of virtual networks that are decoupled from the underlying physical network infrastructure. It enables organizations to abstract and pool network resources, such as bandwidth and IP addresses, into a virtual network that can be managed and provisioned independently. Network virtualization is essential for optimizing network performance, improving security, and enabling software-defined networking (SDN) capabilities.

Storage Virtualization

Storage virtualization abstracts the physical storage hardware to create a pool of storage resources that can be allocated dynamically to virtual machines. This allows administrators to manage storage more efficiently, improve utilization, and reduce costs. Virtualized storage solutions can span different types of storage devices, such as hard drives, SSDs, or network-attached storage (NAS), providing flexibility and scalability for organizations' data management needs.

Desktop and Application Virtualization

Desktop virtualization allows organizations to deliver desktop environments to users remotely, enabling them to access their desktops from virtually any device. It consolidates desktop management in a central server, improving security and simplifying administration. Similarly, application virtualization involves running applications on a central server while displaying them to the user on a remote device, ensuring that applications are isolated and easier to maintain.

Each of these virtualization types plays a crucial role in modernizing IT infrastructures, streamlining operations, and enabling the efficient use of resources.

4. Virtualization Architecture

Virtualization architecture refers to the underlying structure and components that support virtualized environments. Understanding this architecture is key to leveraging virtualization technologies effectively.

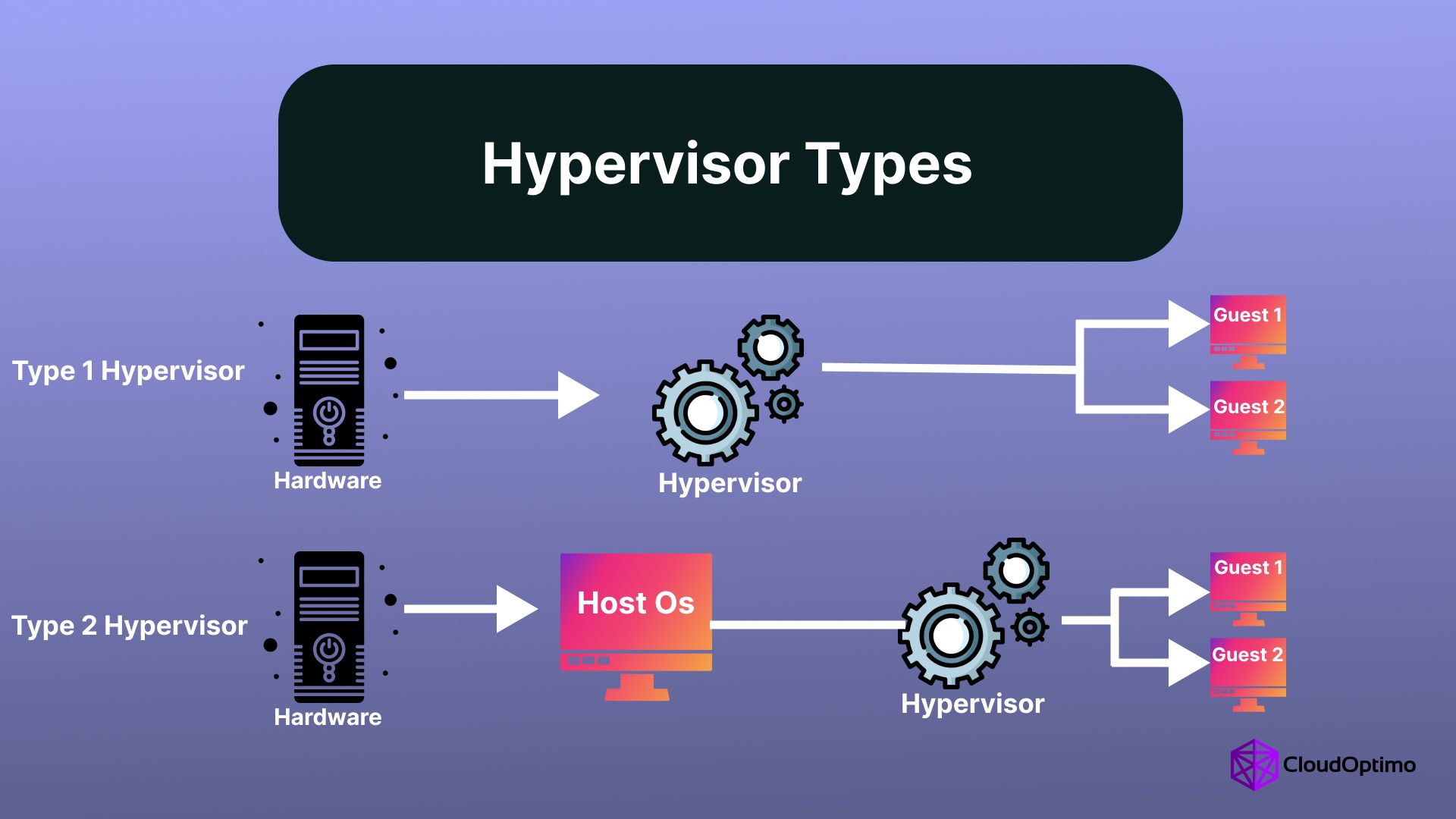

Hypervisors (Type 1 and Type 2)

At the heart of virtualization architecture lies the hypervisor, which is responsible for managing virtual machines. There are two main types of hypervisors:

- Type 1 Hypervisor: Also known as a “bare-metal” hypervisor, this type runs directly on the host’s hardware without the need for an underlying operating system. Type 1 hypervisors are typically used in enterprise and cloud data centers due to their efficiency and performance. Examples include VMware ESXi (the hypervisor at the core of vSphere), Microsoft Hyper-V, and Xen.

- Type 2 Hypervisor: This type runs on top of an existing operating system, such as Windows or Linux. Type 2 hypervisors are commonly used for personal or small-scale virtualization setups, such as testing or development environments. Examples include Oracle VirtualBox and VMware Workstation.

Virtual Machines and Resource Management

Virtual machines are the virtualized instances that run on top of a hypervisor. Each VM acts as a separate computer with its own OS and applications, but it shares the host's physical resources. Resource management is a key function of virtualization, as it involves allocating CPU, memory, storage, and network resources to each VM efficiently. This ensures optimal performance and prevents resource contention.
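
One common way to handle contention is to divide a scarce resource in proportion to per-VM weights, often called shares. The sketch below illustrates that idea only; the function name, the MHz unit, and the share values are assumptions for the example, not the scheduler of any specific hypervisor.

```python
def divide_by_shares(capacity_mhz: float, shares: dict) -> dict:
    """Split contended CPU capacity between VMs in proportion to their shares."""
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one VM must hold a positive share")
    return {vm: capacity_mhz * s / total for vm, s in shares.items()}


# The database VM holds twice the shares of the others, so it gets twice the CPU.
alloc = divide_by_shares(12000, {"web": 2000, "db": 4000, "batch": 2000})
print(alloc)  # {'web': 3000.0, 'db': 6000.0, 'batch': 3000.0}
```

Shares only matter when the host is busy; when there is spare capacity, a VM can usually consume more than its proportional slice.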

Hardware and Memory Virtualization

Hardware virtualization refers to the ability of a hypervisor to present virtualized hardware to the VMs. This includes the CPU, memory, storage, and network interfaces. Memory virtualization is particularly important, as it enables the dynamic allocation and sharing of memory resources between VMs. Technologies like Intel VT-x and AMD-V enhance the performance of hardware virtualization by providing hardware support for running virtual environments.

5. Setting Up Virtual Environments

Setting up a virtual environment requires careful attention to hardware, network, and storage. Let’s break down what you need.

Hardware and Network Requirements

The foundation of a solid virtual environment is the hardware. Here’s what you need to consider:

| Component | Requirements |
| --- | --- |
| CPU | Multi-core processors with virtualization support (Intel VT-x or AMD-V). |
| RAM | Enough memory to allocate to each VM, depending on workload. |
| Network | Reliable, fast network connections for VM communication. |
| Storage | Fast storage (SSDs) for better VM performance and scalability. |
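
On Linux, the CPU requirement can be checked by looking for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The following Python sketch parses that text; the function name and the sample input are made up for illustration, and a present flag only shows that the CPU is capable, since the feature may still be disabled in firmware.

```python
def virtualization_support(cpuinfo_text: str) -> str:
    """Return 'Intel VT-x', 'AMD-V', or 'none' based on the CPU flags line."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return "none"


# Abbreviated, hypothetical excerpt of /proc/cpuinfo used as sample input.
sample = "processor\t: 0\nflags\t\t: fpu vme de pse vmx ssse3\n"
print(virtualization_support(sample))  # Intel VT-x
```

On a real machine you would pass in the file contents, for example `virtualization_support(open("/proc/cpuinfo").read())`.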

Storage and Planning Considerations

Effective storage planning is critical. Here are the key factors to consider:

| Consideration | Description |
| --- | --- |
| Capacity | Ensure enough storage to handle multiple VMs with room to grow. |
| Redundancy | Use RAID or other methods to protect data from failure. |
| Performance | SSDs are ideal for better speed when managing multiple VMs. |
| Scalability | Plan for future expansion as your virtual environment grows. |
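
Capacity and redundancy interact: the RAID level determines how much of the raw disk space is actually usable. The sketch below applies the standard usable-capacity formulas for a few common RAID levels and then checks whether planned VM disks fit with room to grow. The function names and the 30% growth headroom are assumptions for the example, not a sizing recommendation.

```python
RAID_RULES = {
    # level: (minimum disks, usable-capacity formula)
    "raid1": (2, lambda n, size: size),
    "raid5": (3, lambda n, size: (n - 1) * size),
    "raid6": (4, lambda n, size: (n - 2) * size),
    "raid10": (4, lambda n, size: (n // 2) * size),
}


def usable_capacity_tb(disks: int, disk_tb: float, level: str) -> float:
    """Usable capacity of an array of identical disks at the given RAID level."""
    min_disks, formula = RAID_RULES[level]
    if disks < min_disks:
        raise ValueError(f"{level} needs at least {min_disks} disks")
    return formula(disks, disk_tb)


def fits(vm_disks_tb: list, usable_tb: float, growth_headroom: float = 0.3) -> bool:
    """True if the VM disks plus the growth headroom fit in the usable space."""
    return sum(vm_disks_tb) * (1 + growth_headroom) <= usable_tb


usable = usable_capacity_tb(disks=6, disk_tb=2.0, level="raid6")
print(usable)                         # 8.0
print(fits([0.5, 1.0, 2.0], usable))  # True
```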

6. Virtualization Management Tools

Once your environment is set up, management tools are essential for controlling and optimizing virtual resources. These tools allow administrators to streamline the deployment, monitoring, and scaling of virtual machines (VMs). Let’s dive into some of the most popular and widely used virtualization management tools.

VMware Suite

VMware is one of the most popular platforms for enterprise-level virtualization. It provides a robust suite of tools that help administrators manage virtual environments effectively. Below is a look at key tools within the VMware Suite:

| Tool | Key Features |
| --- | --- |
| vSphere | Manages VM creation, deployment, and monitoring. |
| vCenter | A centralized platform for managing large VMware environments. |
| vMotion | Enables live migration of VMs between hosts with zero downtime. |

VMware vSphere Example (Code Snippet for Deployment):

To create a virtual machine using vSphere PowerCLI (a powerful command-line interface), you can use the following script:

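```powershell
# Connect to the vSphere server
Connect-VIServer -Server your-vcenter-server

# Create a new VM from a template; -OSCustomizationSpec only applies
# when deploying from a template or cloning an existing VM
New-VM -Name "NewVM" -Template your-template -ResourcePool your-resource-pool -Datastore your-datastore -OSCustomizationSpec your-os-spec

# Power on the VM
Start-VM -VM "NewVM"
```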

VMware vMotion Example (Code Snippet for Migration):

To migrate a VM between hosts using vMotion:

```powershell
# Migrate VM using vMotion to another host
Move-VM -VM (Get-VM -Name "YourVM") -Destination (Get-VMHost -Name "NewHost")
```

These tools are essential for large-scale environments, offering features like high availability and automatic resource balancing.

Microsoft Hyper-V

Hyper-V is Microsoft’s virtualization platform, best suited for those using Windows Server. It integrates well into environments that rely heavily on Microsoft technologies.

| Feature | Description |
| --- | --- |
| Dynamic Memory | Automatically adjusts VM memory allocation based on demand and current usage. |
| Virtual Machine Manager | Provides centralized VM management for large-scale deployments. |

Example of Creating a Virtual Machine in Hyper-V (PowerShell Script):

To create a VM in Hyper-V, use the following PowerShell script:

```powershell
# Create a new Hyper-V VM
New-VM -Name "VM_Name" -MemoryStartupBytes 2GB -Generation 2 -Path "C:\VMs" -SwitchName "VirtualSwitch"

# Set the CPU count
Set-VMProcessor -VMName "VM_Name" -Count 2

# Start the VM
Start-VM -Name "VM_Name"
```

Hyper-V offers live migration, similar to vMotion in VMware, and is a great option for integrating with Windows Server and Active Directory.

KVM, QEMU, and Containers

For Linux-based environments, KVM (Kernel-based Virtual Machine) and QEMU (Quick Emulator) are popular open-source solutions. These tools allow for powerful full virtualization and emulation, respectively. Additionally, Docker has gained immense popularity for lightweight container-based virtualization.

| Tool/Platform | Description |
| --- | --- |
| KVM | Full virtualization solution for Linux, capable of running unmodified guest OSes. |
| QEMU | Machine emulator that emulates hardware for various architectures and can use KVM for acceleration. |
| Docker | Lightweight containerization platform, ideal for deploying microservices. |

KVM Example (Code Snippet to Create VM):

On a Linux system, you can use virt-install (a command-line tool for creating virtual machines) to create a VM with KVM:

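```bash
# Install virt-install if not already installed (Debian/Ubuntu)
sudo apt-get install virtinst

# Create a new VM with KVM: 2 vCPUs, 2048 MiB of RAM, and a 10 GiB disk.
# --os-type is omitted because recent virt-install releases deprecate it;
# --os-variant is enough to select guest defaults.
virt-install --name=MyVM --vcpus=2 --memory=2048 --disk size=10 --cdrom /path/to/iso --os-variant ubuntu20.04
```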

QEMU Example (Code Snippet for Virtual Machine Creation):

QEMU can emulate different hardware platforms. To run a virtual machine on QEMU:

```bash
# Run a VM with QEMU (2048 MiB of RAM, boot from the CD-ROM image, KVM acceleration)
qemu-system-x86_64 -m 2048 -hda /path/to/disk.img -cdrom /path/to/iso -boot d -enable-kvm
```

Docker Example (Code Snippet to Run a Container):

Docker provides lightweight virtualization and is great for running microservices. Here’s a simple example of running a container with Docker:

```bash
# Pull the Docker image
docker pull ubuntu

# Run the container interactively
docker run -it ubuntu /bin/bash
```

With Docker, instead of managing entire virtual machines, you manage containers that share the host OS kernel, making them more efficient.

Comparison of Virtualization Tools

| Tool | Platform | Type of Virtualization | Best Use Case |
| --- | --- | --- | --- |
| VMware vSphere | Enterprise (Windows/Linux) | Full Virtualization | Large enterprises needing comprehensive management tools |
| Microsoft Hyper-V | Windows Server | Full Virtualization | Windows-based environments, integration with Active Directory |
| KVM/QEMU | Linux-based systems | Full Virtualization & Emulation | Open-source solutions for Linux systems, hardware emulation |
| Docker | Cross-platform | Containerization | Lightweight virtualization for microservices, DevOps, and cloud-native apps |

7. Cloud Integration and Virtualization

Virtualization is key for maximizing the benefits of cloud environments. It allows businesses to scale efficiently and manage resources more effectively.

Private, Hybrid, and Multi-Cloud Solutions

With virtualization, businesses can choose from a range of cloud solutions:

| Cloud Type | Description |
| --- | --- |
| Private Cloud | Fully controlled infrastructure, ideal for sensitive data. |
| Hybrid Cloud | Combines private and public clouds, offering flexibility. |
| Multi-Cloud | Uses services from multiple cloud providers to avoid lock-in. |

Cloud Migration Strategies

When moving to the cloud, several strategies help ensure a smooth transition:

| Strategy | Description |
| --- | --- |
| Lift and Shift | Migrate applications with minimal changes. |
| Refactoring | Rebuild apps to optimize them for the cloud environment. |
| Replatforming | Modify some parts of the app to fit cloud infrastructure. |

8. Virtualization for Edge Computing

Edge computing processes data closer to where it is generated, reducing latency and enabling real-time decision-making. Virtualization plays a vital role by abstracting hardware resources, allowing efficient deployment of virtual machines (VMs) or containers on edge devices such as IoT gateways. This is especially valuable in applications like smart factories or autonomous vehicles, where real-time data analysis is critical.

How Virtualization Benefits Edge Computing

Virtualization enables efficient resource management at the edge by creating isolated environments for different workloads. Here’s how it helps:

- Isolation of workloads: Virtualized systems prevent interference between different tasks on edge devices.

- Lightweight virtualization: Containers and lightweight VMs keep resource usage low, which allows fast startup and minimal overhead on constrained edge hardware.

- Scalability: Virtual environments can easily scale based on demand, making it ideal for dynamic edge workloads.

- Centralized management: Despite being deployed on distributed edge devices, virtualized systems can be managed from a central platform.

Key Use Cases of Virtualization in Edge Computing

Below are examples where virtualization enhances edge computing performance:

Edge Computing Use Case: IoT Devices

IoT devices, such as sensors in smart cities or industrial machines, generate data that needs to be processed quickly for real-time decisions.

- How Virtualization Helps: Virtualizing IoT systems on edge devices reduces latency by processing data locally. This avoids delays from sending data to central data centers, enabling fast decision-making. For example, in a smart factory, virtualized control systems on edge devices can adjust operations based on live sensor data.

Example Code for Virtualizing IoT System with Docker:

```bash
# Run an IoT monitoring application in a Docker container on an edge device
docker run -d --name iot_sensor_monitor -p 8080:80 iot-monitor:latest
```

Edge Computing Use Case: Remote Data Centers

Edge computing often involves deploying data centers in remote locations like oil rigs, research stations, or field operations, where direct access to centralized data centers is limited.

- How Virtualization Helps: Virtualization allows organizations to deploy VMs or containers at remote locations. Local processing and storage reduce dependency on centralized resources, enabling real-time decision-making. For instance, a remote monitoring station can run virtualized applications locally, with minimal hardware.

Edge Computing Use Case: Mobile Networks

With the rise of 5G technology, mobile network functions such as firewalls, load balancers, and content delivery networks need to be distributed at the network edge to ensure low latency and high performance.

- How Virtualization Helps: Network functions virtualization (NFV) lets mobile network operators run these services as virtualized network functions (VNFs) closer to users, improving scalability and efficiency. For example, in a 5G network, virtualized network slices can prioritize data traffic from autonomous vehicles or other critical applications.

Example Code for Deploying VNFs on Edge Devices (OpenStack CLI):

```bash
# Create a virtual network function on an edge node using OpenStack
openstack server create --flavor m1.small --image ubuntu --nic net-id=edge-network --security-group default vnf_edge_node
```

Benefits of Virtualization for Edge Computing

Virtualization improves edge computing by offering:

- Efficient resource allocation: VMs and containers are provisioned with just the right amount of resources, ensuring optimized use of edge devices.

- Centralized management: Tools like VMware vSphere and Kubernetes provide centralized control over distributed edge environments.

- Rapid deployment: Automation tools streamline the process of deploying services on edge devices.

- Dynamic scaling: Containers can quickly scale based on workload demands, such as during peak production times in a smart factory.

9. Performance Optimization

Optimizing the performance of virtualized environments is crucial to ensure that resources are being used efficiently and that applications run smoothly.

Resource Allocation and Performance Tuning

One of the key aspects of performance optimization is resource allocation. This involves distributing CPU, memory, and storage to virtual machines (VMs) based on their needs. Proper allocation helps avoid resource contention, which can slow down performance. VMware’s Distributed Resource Scheduler (DRS) can automatically balance load across the hosts in a cluster, while Hyper-V Resource Metering tracks per-VM resource usage so that administrators can spot imbalances and adjust allocations.

Performance tuning involves adjusting the settings and configurations of your VMs to get the most out of your hardware. For example, adjusting the number of CPU cores allocated to a VM or fine-tuning memory settings can significantly boost performance. It's also important to regularly review resource usage and make adjustments to keep your virtual environment optimized as workloads change.

| Performance Factor | Optimization Tip |
| --- | --- |
| CPU | Allocate cores based on workload, avoid over-provisioning. |
| Memory | Adjust dynamic memory allocation for VMs based on usage. |
| Storage | Use fast SSDs for high-performance VMs and balance disk IO. |
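
A quick way to spot CPU over-provisioning is to compare the vCPUs assigned on each host with its physical cores. The sketch below computes that ratio and flags hosts above a limit. The function names, the host inventory, and the 4:1 threshold are assumptions for illustration; an acceptable ratio depends heavily on the workload.

```python
def vcpu_overcommit_ratio(vm_vcpus: list, physical_cores: int) -> float:
    """Ratio of vCPUs assigned to VMs versus physical cores on the host."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vm_vcpus) / physical_cores


def flag_hosts(hosts: dict, max_ratio: float = 4.0) -> list:
    """Return the names of hosts whose overcommit ratio exceeds max_ratio."""
    return [
        name
        for name, (vcpus, cores) in hosts.items()
        if vcpu_overcommit_ratio(vcpus, cores) > max_ratio
    ]


# Hypothetical inventory: host name -> (vCPUs per VM, physical cores)
hosts = {
    "host-a": ([4, 4, 8], 16),
    "host-b": ([8, 8, 8, 8, 8], 8),
}
print(vcpu_overcommit_ratio(*hosts["host-a"]))  # 1.0
print(flag_hosts(hosts))                        # ['host-b']
```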

Monitoring and Analytics

Monitoring is key to ensuring that your virtualized systems are running at peak performance. Tools like VMware vRealize Operations and Microsoft System Center can provide real-time analytics on resource consumption, helping you identify performance bottlenecks early. Regular performance audits and fine-tuning will help keep everything running smoothly and ensure that virtual environments scale efficiently as needed.

10. Security in Virtual Environments

Security is a critical consideration in any virtualized environment. Since multiple virtual machines share the same physical hardware, protecting data and preventing breaches requires a combination of strategies.

Security Challenges and Best Practices

Virtualization introduces new security challenges due to the abstraction of hardware resources. One challenge is the potential for VM escape, where an attacker gains access to the host system through a VM. Another concern is the isolation between VMs, which must be robust to prevent cross-VM attacks.

To mitigate these risks, best practices include implementing strong network segmentation, using encryption for data at rest and in transit, and regularly patching both the hypervisor and VMs. Additionally, role-based access control (RBAC) ensures that only authorized users can manage VMs and access sensitive data.

| Security Concern | Mitigation Strategy |
| --- | --- |
| VM Escape | Use hypervisor-based security features, regularly update. |
| Cross-VM Attacks | Enforce network segmentation and use firewalls between VMs. |
| Unauthorized Access | Implement RBAC and regularly audit user permissions. |
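
The core of role-based access control is a mapping from roles to permitted actions, with everything else denied by default. Here is a minimal sketch of that check; the role names and permission strings are invented for the example and do not correspond to any particular platform's built-in roles.

```python
# Hypothetical roles and permissions for managing VMs.
ROLE_PERMISSIONS = {
    "vm-admin": {"vm.create", "vm.delete", "vm.power", "vm.console"},
    "vm-operator": {"vm.power", "vm.console"},
    "auditor": {"vm.view"},
}


def is_allowed(user_roles: list, action: str) -> bool:
    """Allow the action only if one of the user's roles grants it (deny by default)."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed(["vm-operator"], "vm.power"))   # True
print(is_allowed(["vm-operator"], "vm.delete"))  # False
print(is_allowed(["unknown-role"], "vm.view"))   # False
```

Because unknown roles and unlisted actions fall through to a denial, a typo in a role name fails closed rather than open.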

Compliance and Regulations

Compliance is another major consideration. Many industries, like healthcare and finance, are subject to strict regulations (e.g., HIPAA, PCI-DSS). Virtualized environments must ensure that data is stored, processed, and transmitted securely to comply with these standards. Using encryption, monitoring, and implementing proper access controls helps organizations meet compliance requirements while maintaining security across virtual environments.

11. Disaster Recovery and High Availability

Virtualization has significantly improved disaster recovery (DR) and high availability (HA) strategies. By leveraging the flexibility of virtual environments, organizations can ensure their systems are resilient and can recover quickly in the event of an outage.

In the context of disaster recovery, virtualization allows for the replication of virtual machines across multiple locations. Tools like VMware Site Recovery Manager or Microsoft Hyper-V Replica help automate failover processes, ensuring that your workloads can quickly be moved to another location if something goes wrong. This reduces downtime and minimizes data loss during disruptions.

High availability can be achieved through features like VM clustering, which allows for the automatic restart of VMs on another host in case of a failure. By spreading workloads across different physical servers, you ensure that if one fails, the others can take over without affecting overall service.

| Strategy | Benefit |
| --- | --- |
| Disaster Recovery (DR) | Reduces downtime and data loss through VM replication. |
| High Availability (HA) | Ensures business continuity with automatic failover and recovery. |
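
The automatic-restart behavior described above boils down to a placement problem: when a host fails, each of its VMs needs a surviving host with enough spare capacity. The sketch below uses a simple heuristic (largest VM first, placed on the host with the most free memory). It is an illustrative assumption, not the algorithm used by VMware HA or Hyper-V failover clustering, and the host and VM names are made up.

```python
def plan_failover(failed_vms: dict, free_memory_gb: dict) -> tuple:
    """Place VMs from a failed host onto surviving hosts.

    failed_vms maps VM name -> memory needed (GB);
    free_memory_gb maps surviving host -> free memory (GB).
    Returns (placements, unplaced).
    """
    free = dict(free_memory_gb)  # work on a copy so the input is not modified
    placements, unplaced = {}, []
    # Place the largest VMs first, since they are the hardest to fit.
    for vm, need in sorted(failed_vms.items(), key=lambda kv: kv[1], reverse=True):
        target = max(free, key=free.get, default=None)  # host with most free memory
        if target is not None and free[target] >= need:
            placements[vm] = target
            free[target] -= need
        else:
            unplaced.append(vm)
    return placements, unplaced


placements, unplaced = plan_failover(
    {"db": 32, "web": 8, "cache": 16},
    {"host-b": 40, "host-c": 24},
)
print(placements)  # {'db': 'host-b', 'cache': 'host-c', 'web': 'host-b'}
print(unplaced)    # []
```

A non-empty `unplaced` list signals that the cluster lacks the spare capacity to absorb the failure, which is why HA designs usually reserve headroom on every host.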

Together, these strategies make virtual environments much more resilient to hardware failures, natural disasters, or other critical issues, ensuring minimal disruption to services.

12. Next-Generation Virtualization Technologies

Virtualization is continuously evolving, and the next-generation technologies are set to revolutionize how we manage IT infrastructure.

AI and Machine Learning Integration

One of the most exciting developments is the integration of Artificial Intelligence (AI) and Machine Learning (ML) with virtualization. AI can help automate resource allocation, detect anomalies, and predict workloads, optimizing how resources are distributed across virtual environments. For instance, AI-driven tools can predict when a VM will require more resources, allowing the system to automatically allocate additional CPU or memory before performance issues arise. This results in better system efficiency and a more dynamic approach to resource management.
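
As a rough illustration of predictive allocation, the sketch below fits a straight line to recent memory-usage samples and extrapolates one step ahead. A plain least-squares trend stands in here for a real ML model, and the function names, sample data, and 85% threshold are assumptions made for the example.

```python
def forecast_next(samples: list) -> float:
    """Extrapolate the next value from a least-squares line through the samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return intercept + slope * n


def needs_more_memory(usage_pct: list, threshold_pct: float = 85.0) -> bool:
    """True if the forecast for the next interval reaches the threshold."""
    return forecast_next(usage_pct) >= threshold_pct


history = [60, 65, 70, 75, 80]     # memory usage (%) over the last five intervals
print(forecast_next(history))      # 85.0
print(needs_more_memory(history))  # True
```

A production system would use richer models and more signals, but the control loop is the same: forecast demand, compare it with a limit, and adjust the allocation before the VM runs short.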

Quantum Computing Impact

Another emerging trend is quantum computing, which promises to solve certain classes of complex problems much faster than traditional computers. Quantum computing is still in its early stages, so its impact on virtualization remains speculative. One possibility is that virtualized environments could offload optimization problems, such as workload placement, to quantum processors. As quantum computing becomes more accessible, it could change the way we approach data center management and workload distribution.

| Technology | Potential Impact on Virtualization |
| --- | --- |
| AI/ML Integration | Automates resource management, improves efficiency, and predicts needs. |
| Quantum Computing | Revolutionizes problem-solving and workload distribution with immense processing power. |

These technologies are paving the way for more intelligent, efficient, and scalable virtual environments, making IT operations even more powerful and future-ready.

13. Container Technologies

Container technologies have become a crucial part of modern IT infrastructure, offering lightweight and efficient ways to run applications.

Docker and Kubernetes

Docker revolutionized software development by enabling containers to package applications and their dependencies into a single, portable unit. This allows applications to run consistently across various environments, from a developer’s laptop to a production server. Docker containers are lightweight, fast to deploy, and provide great resource efficiency, as they share the host OS's kernel.

However, managing hundreds or thousands of containers can be challenging. This is where Kubernetes comes in. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It helps ensure high availability, load balancing, and efficient resource utilization, making it the go-to solution for large-scale container management.

| Technology | Key Benefits |

| Docker | Lightweight, fast, and consistent application delivery. |

| Kubernetes | Automated orchestration, scaling, and high availability for containers. |
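
To make the idea of automated scaling concrete, here is a minimal Python sketch of the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the default bounds, and the sample numbers are assumptions made for illustration. The real autoscaler also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count suggested by the proportional rule, clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count by how far the observed metric is from target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never go below the floor or above the ceiling (illustrative defaults).
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90.0, 60.0))  # prints 6
```

When load drops, the same rule scales the application back in: 4 replicas at 30% CPU against a 60% target gives 2.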

Microservices Architecture

Container technologies are often paired with microservices architecture, a design pattern where applications are broken into smaller, independent services that can be developed, deployed, and scaled separately. This approach enhances flexibility and agility, as each service can be updated or deployed independently without affecting the entire application.

Microservices align well with containerization, as each service can run in its own container. This allows teams to update individual services with little or no downtime, and it improves system resilience by containing failures within specific microservices instead of letting them take down the entire application.

| Microservices | Benefits |

| Decoupled Services | Easier to develop, deploy, and scale independently. |

| Resilient Systems | Failure in one service doesn’t impact the entire system. |
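
Failure isolation does not happen automatically. The calling service has to be written to tolerate a broken dependency. One common way to do that is a circuit breaker, sketched below in Python. The service names (`catalog_service`, `recommendations_service`) and the failure threshold are hypothetical, and a production breaker would normally also retry after a cool-down period, which is omitted here.

```python
class CircuitBreaker:
    """Stops calling a failing dependency after repeated errors."""

    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.failures = 0

    @property
    def is_open(self) -> bool:
        # An open breaker means the dependency is treated as unavailable.
        return self.failures >= self.max_failures

    def call(self, func, fallback):
        if self.is_open:
            return fallback  # skip the call entirely
        try:
            result = func()
        except Exception:
            self.failures += 1
            return fallback
        self.failures = 0  # a success resets the failure count
        return result


def recommendations_service():
    # Hypothetical downstream service that is currently failing.
    raise ConnectionError("recommendations unavailable")


def catalog_service():
    # Hypothetical healthy service.
    return ["laptop", "monitor"]


breaker = CircuitBreaker(max_failures=2)


def render_product_page():
    # The page still renders when recommendations are down.
    return {
        "products": catalog_service(),
        "recommended": breaker.call(recommendations_service, fallback=[]),
    }


page = render_product_page()
print(page)
```

The product page still returns its catalog data with an empty recommendations list, so the outage stays confined to one feature.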

14. Real-World Applications of Virtualization

Virtualization has become the backbone of modern IT infrastructure, enabling businesses to run applications more efficiently and scale operations.

Data Center and VDI Implementations

In data centers, virtualization has led to dramatic improvements in server consolidation and resource utilization. By virtualizing physical servers, businesses can run multiple virtual machines (VMs) on a single physical server, reducing hardware costs and improving flexibility. Virtualization also allows for better disaster recovery and business continuity, as VMs can be moved across physical hosts and backed up more easily.

One common use of virtualization in the enterprise is Virtual Desktop Infrastructure (VDI). VDI allows organizations to centralize desktop management in the data center. Users access their virtual desktops from any device, which adds mobility and reduces endpoint management costs. VDI can also improve security, because data stays in the data center, where it is easier to protect, rather than on individual user devices.

| Application | Key Benefits |

| Data Center Virtualization | Reduced hardware costs, improved resource utilization, and easier management. |

| VDI | Centralized desktop management, improved security, and flexibility for remote work. |
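
Server consolidation is essentially a packing problem: fit the VMs onto as few physical hosts as possible. The Python sketch below uses the first-fit decreasing heuristic as one simple way to illustrate it. The VM names, the memory figures (assumed to be in GiB), and the single-resource model are all assumptions. Real placement engines also weigh CPU, storage, affinity rules, and failover headroom.

```python
def consolidate(vm_demands: dict[str, int], host_capacity: int) -> list[list[str]]:
    """Place VMs onto hosts using the first-fit decreasing heuristic."""
    hosts: list[list[str]] = []  # VM names placed on each host
    free: list[int] = []         # remaining capacity on each host
    # Placing the largest VMs first tends to leave fewer awkward gaps.
    ordered = sorted(vm_demands.items(), key=lambda kv: kv[1], reverse=True)
    for name, demand in ordered:
        if demand > host_capacity:
            raise ValueError(f"{name} does not fit on any host")
        for i, remaining in enumerate(free):
            if demand <= remaining:
                hosts[i].append(name)
                free[i] -= demand
                break
        else:
            # No existing host has room, so bring another host into use.
            hosts.append([name])
            free.append(host_capacity - demand)
    return hosts


# Hypothetical memory demands in GiB, with 32 GiB available per host.
vms = {"web": 8, "db": 16, "cache": 4, "batch": 12, "ci": 6, "mail": 2}
placement = consolidate(vms, host_capacity=32)
print(placement)  # [['db', 'batch', 'cache'], ['web', 'ci', 'mail']]
```

In this example, six workloads that might each have had a dedicated server fit on two hosts.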

Industry-Specific Use Cases (Healthcare, Financial Services, etc.)

Virtualization is not a one-size-fits-all solution. Industries adapt it to meet their own specific needs.

- Healthcare: Virtualization helps healthcare providers securely manage and store patient data, while making it easier to access and share information across departments. It also supports the rise of telemedicine by enabling healthcare systems to scale quickly as patient demand grows.

- Financial Services: In financial services, where security and performance are paramount, virtualization enables rapid provisioning of secure environments for trading platforms and regulatory compliance testing. Additionally, virtualization allows for better disaster recovery solutions, ensuring business continuity during system failures.

| Industry | Use Case |

| Healthcare | Secure management and sharing of patient data, scalability for telemedicine. |

| Financial Services | Secure trading environments, regulatory compliance, and disaster recovery. |

These industry-specific applications showcase how virtualization is not only about optimizing resources but also about enhancing business capabilities, improving security, and enabling growth.

Conclusion

Virtualization has evolved significantly from its early concepts to become a fundamental technology in modern computing. Starting from its history, we explored the core concepts, types, and architectures that form the backbone of virtualized environments. The integration of cloud technologies and the emergence of edge computing have further expanded the scope of virtualization, enhancing scalability and flexibility. Performance optimization and robust security measures are crucial for ensuring the reliability of virtual systems. Disaster recovery and high availability have become essential components, while newer technologies, such as containers, continue to shape the future. Virtualization's real-world applications are vast, offering benefits across industries, from improving efficiency to enabling innovative solutions.