In the cloud-native era, applications must meet high demands for reliability, availability, and scalability. These requirements are essential for delivering consistent and seamless user experiences. Among the key components to achieve these goals is a solid load-balancing strategy that can effectively manage fluctuating traffic loads.

Azure Load Balancer, Microsoft’s Layer 4 (TCP/UDP) load balancing solution, is designed to distribute network traffic across multiple backend resources, providing high availability and resilient application connectivity.

This blog dives into the specifics of Azure Load Balancer, covering its types, configurations, and best practices for implementing it in real-world cloud environments.

Layer 4 Load Balancing Explained

Azure Load Balancer operates at Layer 4 (Transport Layer) of the OSI model. Here's what this means:

Simple Explanation

- Layer 4 load balancing is like a mail sorting facility that only reads the address on the envelope

- It doesn't open the mail (packet contents) but efficiently routes based on:

- Source/destination IP addresses

- Port numbers

- Protocol type (TCP/UDP)

Technical Details

- Processes packets without inspecting content

- Maintains connection information

- Lower overhead than Layer 7 (Application Layer) processing, since payloads are not parsed

- Ideal for raw TCP/UDP traffic distribution
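To make the "address on the envelope" idea concrete, here is a minimal Python sketch. It extracts a flow's 5-tuple from a raw IPv4 packet by reading only the IP header and the first four bytes of the TCP/UDP header. It illustrates the information a Layer 4 device works with; it is not how Azure Load Balancer is implemented. It assumes IPv4 only and skips checksum validation. `build_ipv4_udp` is a hypothetical helper for building a test packet.

```python
import socket
import struct
from typing import NamedTuple

PROTOCOLS = {6: "TCP", 17: "UDP"}  # IANA protocol numbers


class FiveTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def extract_five_tuple(packet: bytes) -> FiveTuple:
    """Read the Layer 3/4 headers of an IPv4 packet; the payload is never parsed."""
    if packet[0] >> 4 != 4:
        raise ValueError("this sketch only handles IPv4")
    header_len = (packet[0] & 0x0F) * 4  # IHL field is counted in 32-bit words
    proto = PROTOCOLS.get(packet[9])
    if proto is None:
        raise ValueError(f"unsupported protocol number {packet[9]}")
    src_ip = socket.inet_ntoa(packet[12:16])
    dst_ip = socket.inet_ntoa(packet[16:20])
    # TCP and UDP headers both begin with 16-bit source and destination ports.
    src_port, dst_port = struct.unpack("!HH", packet[header_len:header_len + 4])
    return FiveTuple(src_ip, src_port, dst_ip, dst_port, proto)


def build_ipv4_udp(src: str, dst: str, sport: int, dport: int, payload: bytes) -> bytes:
    """Hypothetical helper: build a minimal IPv4/UDP packet (checksums left at 0)."""
    udp = struct.pack("!HHHH", sport, dport, 8 + len(payload), 0) + payload
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + len(udp), 0, 0, 64, 17, 0,
                     socket.inet_aton(src), socket.inet_aton(dst))
    return ip + udp


if __name__ == "__main__":
    pkt = build_ipv4_udp("10.0.0.4", "20.1.2.3", 50000, 53, b"opaque application data")
    print(extract_five_tuple(pkt))
```

Changing the payload bytes does not change the result. That property is what keeps Layer 4 balancing fast.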

What is Azure Load Balancer?

Azure Load Balancer is a fully managed service that automatically routes incoming network traffic across multiple resources, enhancing application performance and increasing fault tolerance. It supports both internal and external connections, allowing it to accommodate a wide variety of use cases.

Because it sends new connections only to backend instances that pass their health probes, it plays a crucial role in keeping applications hosted in Microsoft Azure highly available and reliable.

Key Features

- Automatic Failover: Ensures applications are always accessible by automatically rerouting traffic to healthy resources.

- High Throughput with Low Latency: Designed for high-performance environments, with low network latency and high availability.

- Real-Time Health Monitoring: Tracks backend resource health, making real-time adjustments based on their status.

- Flexible Configuration Options: Supports complex architectures, including virtual network (VNet) integration and multi-region setups for resilience.

- Robust Scaling Capabilities: As a managed service, it scales with traffic demand. It also integrates with VM Scale Sets, so the backend pool can grow and shrink automatically.

With Azure Load Balancer, organizations can ensure that their applications remain responsive, even during peak times, by automatically balancing the load across available resources.

Types of Azure Load Balancer: Basic vs. Standard

Azure Load Balancer offers two main SKUs—Basic and Standard—each designed for different levels of performance and functionality. Here’s a detailed comparison to help you choose the right SKU for your needs.

| Feature | Basic Load Balancer | Standard Load Balancer |
| --- | --- | --- |
| Target Audience | Non-production/small workloads | Production workloads with high performance needs |
| Max Backend Instances | Up to 300 | Up to 1,000 instances |
| Availability Zones | Not supported | Zonal and zone-redundant configurations |
| Health Probes | TCP, HTTP | TCP, HTTP, HTTPS |
| DDoS Protection | None | Compatible with Azure DDoS Protection (enabled separately) |
| Inbound Security | Open by default; NSG optional | Closed by default; an NSG must allow inbound traffic |

Basic Load Balancer

The Basic Load Balancer is suited for smaller workloads or non-production environments where advanced features aren’t necessary. It provides essential load-balancing capabilities with limitations on scalability and support.

Features:

- Cost-effective: Free except for outbound data transfer.

- Limited scalability: Supports up to 300 backend instances.

- Frontend options: Supports both public and internal frontends.

While it’s an effective option for small, cost-sensitive projects, the Basic Load Balancer may lack the resilience and scaling required for enterprise-level applications. Microsoft has also announced the retirement of the Basic SKU on 30 September 2025, so new deployments should use Standard.

Standard Load Balancer

Standard Load Balancer offers a broader feature set suitable for production environments where high performance, security, and scalability are essential.

Features:

- Scalable backend pool of up to 1,000 instances.

- Works with Azure DDoS Protection and supports Availability Zones.

- Flexible backend pools with integration into VM Scale Sets.

- Support for both internal and external IP configurations.

Standard Load Balancer is recommended for most production workloads due to its enhanced capabilities, including support for a broader range of use cases and robust failover options.

Core Concepts and Components

To understand how Azure Load Balancer works, it’s helpful to know its core components:

- Frontend IP Configuration:

- This configuration is the entry point for network traffic. Each Load Balancer can have multiple frontend IP configurations.

- Public vs. Private Frontend: Public IPs expose applications to the internet, while private IPs handle internal traffic within a VNet.

Example Configuration: To create a public frontend IP, navigate to Azure Portal → Load Balancer → Frontend IP Configuration and assign a public IP address.

- Backend Pool

- The backend pool is where backend resources (VMs or IPs) are registered. Traffic from the frontend IP is distributed across these resources.

- Azure Load Balancer supports dynamic scaling with VM Scale Sets, allowing automatic adjustments to backend pool size.

Configuration Tip: When setting up a backend pool, consider using Azure Availability Sets to achieve high availability by distributing VMs across multiple fault domains.

- Health Probes

- Health probes monitor the state of each backend resource and mark any failed resource as unhealthy.

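- Types of Probes: TCP, HTTP, and HTTPS (HTTPS requires the Standard SKU). HTTP/HTTPS probes send a request to a configured path and treat only a 200 OK response as healthy. TCP probes succeed when a TCP connection to the probe port can be established.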

- Advanced Settings: Define the probe interval and the number of consecutive failures (unhealthy threshold) for precise health monitoring.

For example, suppose you set the probe interval to 5 seconds and the unhealthy threshold to 2. The probe then marks a resource as unhealthy after two consecutive failed probes, so detecting a failure takes up to about 10 seconds.
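The probe arithmetic above can be modeled as a small state machine. The following sketch is illustrative only. `ProbeTracker` is a hypothetical class. Its `healthy_threshold` recovery rule (one successful probe by default) is an assumption, not documented Azure behavior.

```python
from dataclasses import dataclass, field


@dataclass
class ProbeTracker:
    """Illustrative model of one backend instance's probe state (not Azure's code)."""

    interval_s: int = 5
    unhealthy_threshold: int = 2
    healthy_threshold: int = 1  # assumption: recovery rule chosen for illustration
    healthy: bool = True
    _fail_streak: int = field(default=0, init=False, repr=False)
    _ok_streak: int = field(default=0, init=False, repr=False)

    def record(self, success: bool) -> bool:
        """Record one probe result and return the instance's resulting health."""
        if success:
            self._ok_streak += 1
            self._fail_streak = 0
            if not self.healthy and self._ok_streak >= self.healthy_threshold:
                self.healthy = True
        else:
            self._fail_streak += 1
            self._ok_streak = 0
            if self.healthy and self._fail_streak >= self.unhealthy_threshold:
                self.healthy = False
        return self.healthy

    def max_detection_seconds(self) -> int:
        """Upper bound from failure to mark-down: one probe per interval and
        `unhealthy_threshold` consecutive failures (probe timeouts ignored)."""
        return self.interval_s * self.unhealthy_threshold
```

With a 5-second interval and a threshold of 2, `record` returns False only after the second consecutive failure. `max_detection_seconds()` gives the 10-second upper bound from the example.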

Load Distribution Rules

Enterprise load balancing rules must account for various traffic patterns and application requirements:

- Session Persistence Configurations:

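- Hash-based distribution (default, 5-tuple: source IP, source port, destination IP, destination port, protocol)

- Client IP affinity (2-tuple: source IP and destination IP)

- Client IP and protocol affinity (3-tuple: source IP, destination IP, protocol)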

- Connection Management:

- Configurable idle timeout (4-30 minutes)

- TCP reset on idle timeout (Standard SKU)

- SNAT port allocation
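The three session persistence modes differ only in which packet fields feed the hash. The sketch below shows that idea. The SHA-256 hash and modulo mapping are stand-ins chosen for illustration, since Azure does not publish its hash function. The backend names and addresses are hypothetical.

```python
import hashlib
from typing import Sequence, Tuple

Flow = Tuple[str, int, str, int, str]  # (src_ip, src_port, dst_ip, dst_port, protocol)

# Which positions of the flow tuple feed the hash in each distribution mode.
MODES = {
    "5-tuple": (0, 1, 2, 3, 4),  # default hash-based distribution
    "2-tuple": (0, 2),           # client IP affinity: source and destination IP
    "3-tuple": (0, 2, 4),        # client IP and protocol affinity
}


def pick_backend(flow: Flow, backends: Sequence[str], mode: str = "5-tuple") -> str:
    """Map a flow to a backend by hashing the fields selected by `mode`.

    SHA-256 modulo pool size is a stand-in; Azure's real hash is not public.
    """
    if not backends:
        raise ValueError("backend pool is empty")
    key = "|".join(str(flow[i]) for i in MODES[mode])
    digest = hashlib.sha256(key.encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


if __name__ == "__main__":
    pool = ["vm-1", "vm-2", "vm-3"]  # hypothetical backend names
    for src_port in (40001, 40002, 40003):
        flow = ("203.0.113.7", src_port, "20.1.2.3", 443, "TCP")
        print(src_port, pick_backend(flow, pool), pick_backend(flow, pool, "2-tuple"))
```

In the 2-tuple and 3-tuple modes, every connection from the same client lands on the same backend, which is how source IP affinity provides stickiness. The trade-off is less even spreading when many users share one NAT address.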

Outbound Rules

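- Outbound rules (Standard SKU) control how backend instances reach the internet: their outbound connections are source-NATed (SNAT) to the load balancer’s public frontend IPs. This is particularly useful for private backend instances that need outbound internet access.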

- NAT and Port Mapping: Network Address Translation (NAT) options enable easy mapping between private and public IPs.
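SNAT port budgeting is simple arithmetic once you know that Azure provides 64,000 SNAT ports per frontend public IP. The sketch below splits that budget across a backend pool. The helper names are hypothetical. Rounding down to a multiple of 8 is an assumption about allocation granularity, so check it against the outbound rules documentation.

```python
PORTS_PER_FRONTEND_IP = 64_000  # SNAT ports Azure documents per frontend public IP


def ports_per_instance(frontend_ips: int, backend_instances: int) -> int:
    """Largest even split of SNAT ports across the backend pool.

    Assumption: the result is rounded down to a multiple of 8 to mirror the
    allocation granularity used for outbound rules.
    """
    if frontend_ips < 1 or backend_instances < 1:
        raise ValueError("need at least one frontend IP and one backend instance")
    raw = frontend_ips * PORTS_PER_FRONTEND_IP // backend_instances
    return raw - raw % 8


def max_instances(frontend_ips: int, ports_each: int) -> int:
    """How many backend instances can each receive `ports_each` SNAT ports."""
    if ports_each < 1:
        raise ValueError("ports_each must be positive")
    return frontend_ips * PORTS_PER_FRONTEND_IP // ports_each
```

For example, one frontend IP shared by 3 instances gives each instance 21,328 ports. Reserving 1,024 ports per instance caps a single IP at 62 instances.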

Configuration Scenarios for Azure Load Balancer

Azure Load Balancer can be configured to handle both internet-facing and internal traffic distribution.

Public Load Balancer Configuration (Internet-Facing Applications)

Public Load Balancers distribute incoming internet traffic across multiple resources within a backend pool. This is commonly used for web servers and public APIs.

- Create a Public Frontend IP:

- Go to Azure Portal → Load Balancer → Frontend IP configuration.

- Assign a public IP address for internet access.

- Add Backend Pool Resources:

- Choose VMs or VM Scale Sets as backend instances.

- Consider configuring VM Scale Sets to automate scaling based on load.

- Set Up Health Probes:

- Configure HTTP, TCP, or HTTPS probes depending on your application requirements.

- For HTTP probes, define a specific path, like /healthcheck, to ensure proper monitoring.

- Define Load Balancing Rules:

- Map the frontend IP and backend pool resources, and specify the port and protocol.

- Example: If your application runs on port 80, set the rule to forward traffic to backend pool resources on the same port.
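For the HTTP probe step, each backend needs an endpoint at the probe path. Azure HTTP probes treat only a 200 OK response as healthy. The minimal Python sketch below therefore returns 200 on `/healthcheck` and 503 when a hypothetical `DRAINING` flag is set, which lets you take an instance out of rotation deliberately. Port 8080 is an assumption; use whatever port the probe is configured with.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

HEALTH_PATH = "/healthcheck"   # must match the probe's configured path
DRAINING = threading.Event()   # hypothetical switch to fail the probe on purpose


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != HEALTH_PATH:
            self.send_error(404)
            return
        # Only 200 OK counts as healthy, so 503 takes the instance out of rotation.
        status, body = (503, b"draining\n") if DRAINING.is_set() else (200, b"ok\n")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep probe traffic (one request per interval) out of the logs


def make_server(host: str = "0.0.0.0", port: int = 8080) -> ThreadingHTTPServer:
    """Port 8080 is an assumption; use the port configured on the health probe."""
    return ThreadingHTTPServer((host, port), HealthHandler)
```

On each backend VM you would run `make_server().serve_forever()`, then call `DRAINING.set()` before maintenance so the probe starts failing.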


Internal Load Balancer Configuration (Private Applications)

For applications that don’t require internet exposure, internal Load Balancers distribute traffic across private resources within a VNet.

- Create a Private Frontend IP:

- Use a private IP to avoid exposing resources to the internet.

- Navigate to Azure Portal → Load Balancer → Frontend IP configuration and assign a private IP within the VNet.

- Set Up Backend Pool:

- Add resources within the same VNet.

- Internal load balancers are commonly used to balance requests among app servers, databases, or microservices.

- Configure Health Probes and Load Balancing Rules:

- Set up health probes for regular status checks.

- Define load balancing rules similar to those for a public load balancer but limited to internal IP access.
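When choosing a static private frontend IP, remember that Azure reserves five addresses in every subnet: the network address, the next three addresses (default gateway and Azure DNS), and the broadcast address. The sketch below uses Python's standard `ipaddress` module to check a candidate address and suggest the first free one. The subnet and in-use addresses are hypothetical inputs you would take from your own VNet.

```python
import ipaddress
from typing import Iterable, Optional


def is_usable_frontend_ip(candidate: str, subnet_cidr: str) -> bool:
    """True if `candidate` lies in the subnet and is not an Azure-reserved address."""
    subnet = ipaddress.IPv4Network(subnet_cidr)
    ip = ipaddress.IPv4Address(candidate)
    if ip not in subnet:
        return False
    # Azure reserves the network address, the next three (gateway and DNS),
    # and the broadcast address in every subnet.
    reserved = {subnet.network_address + i for i in range(4)}
    reserved.add(subnet.broadcast_address)
    return ip not in reserved


def suggest_frontend_ip(subnet_cidr: str, in_use: Iterable[str]) -> Optional[str]:
    """Return the first usable address not already assigned, or None if the subnet is full."""
    taken = {ipaddress.IPv4Address(a) for a in in_use}
    for host in ipaddress.IPv4Network(subnet_cidr).hosts():
        if host not in taken and is_usable_frontend_ip(str(host), subnet_cidr):
            return str(host)
    return None
```

For a `10.1.2.0/24` subnet with `.4` and `.5` already taken, `suggest_frontend_ip` returns `10.1.2.6`.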


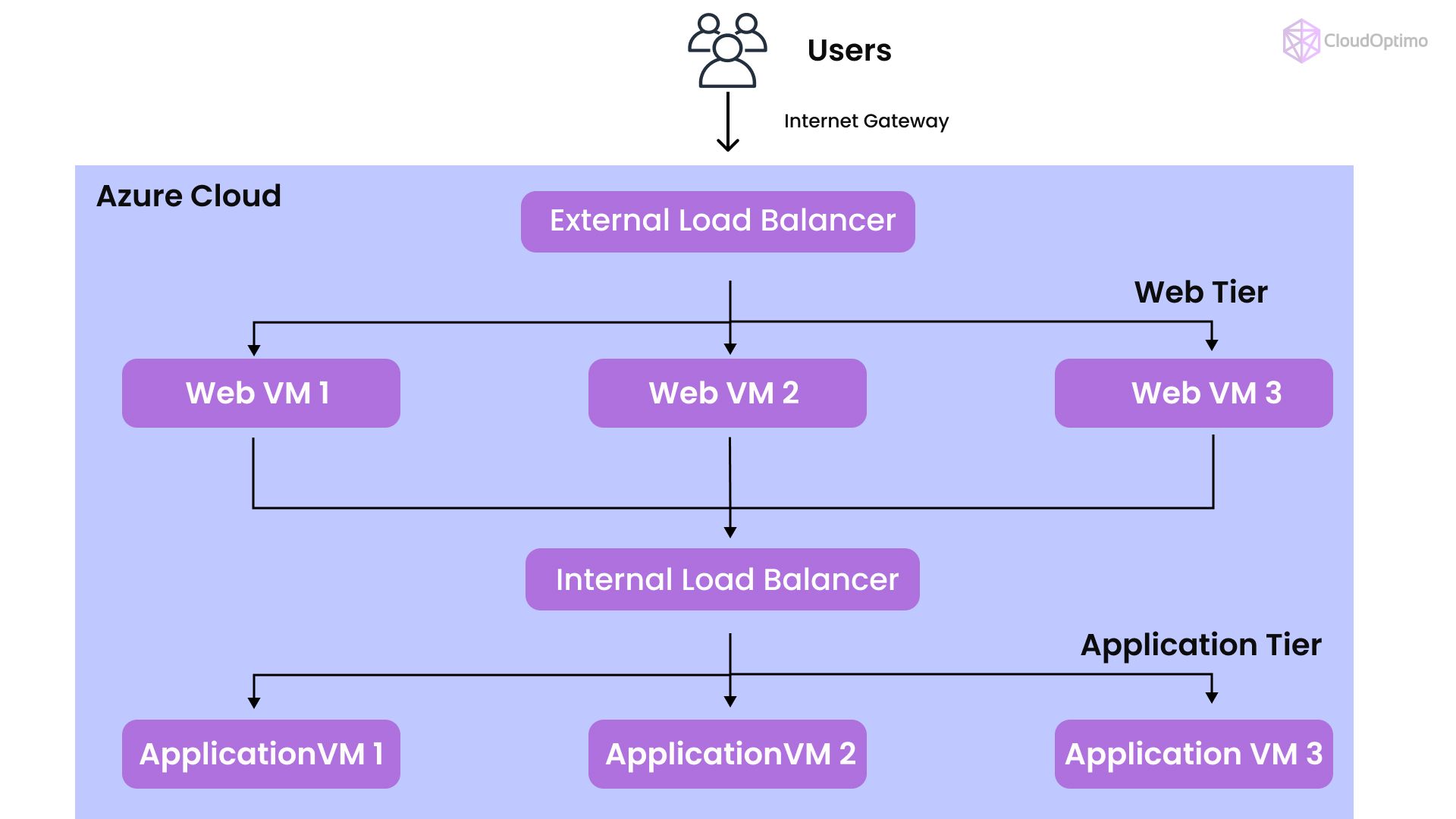

- Multi-Tier Applications with Internal and External Load Balancers

For multi-tier architectures, it’s common to use both internal and external Load Balancers. For instance, a public Load Balancer can distribute incoming internet traffic across the web tier. The web tier VMs then send requests to an internal Load Balancer that spreads traffic across the middle-tier resources.

Example:

- Frontend Tier: Public Load Balancer for web traffic

- Middle Tier: Internal Load Balancer for app services

- Backend Tier: Database services, potentially without a Load Balancer if using a managed Azure database

This setup enhances security by isolating tiers and restricting direct access to sensitive services.

Configuring Health Probes and Monitoring

Health probes are essential for monitoring backend resource health, providing resiliency for the application. Follow these best practices for optimal configuration:

- Probe Protocols: Choose HTTP/HTTPS for applications needing URL-based checks, as this allows verification of app-level responses. Use TCP for simpler health checks at the connection level.

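- Probe Customization: Adjust the probe interval and unhealthy threshold to match how quickly you need to detect failures. Short intervals and low thresholds remove failed instances faster but can mark instances unhealthy during brief hiccups. Longer settings avoid these false positives at the cost of slower detection.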

- Leverage Azure Monitor and Alerts: By integrating with Azure Monitor, you can visualize metrics, set custom alerts, and even automate scaling decisions based on real-time insights into backend health and traffic trends.

Advanced Features and Use Cases

- Availability Zones and Zone Redundancy

For high-availability applications, the Standard Load Balancer supports Availability Zones. A zone-redundant frontend is served from all zones at once, so if one zone fails, traffic continues to flow to healthy backend instances in the remaining zones.

- Hybrid Connections with VPN or ExpressRoute

Azure Load Balancer can also serve hybrid setups. An internal Load Balancer's private frontend IP is reachable from on-premises networks connected to the VNet through VPN or ExpressRoute. This is particularly valuable for companies transitioning workloads to Azure.

- Integration with Azure Virtual Machine Scale Sets

Load Balancer integrates seamlessly with Azure VM Scale Sets, enabling automatic scaling of VMs based on traffic demand. This combination provides an auto-scaling solution that dynamically adjusts backend resources, offering optimal performance and cost savings.

Common Issues and Resolutions

- Connectivity Issues:

- Verify NSG rules (Standard Load Balancer blocks inbound traffic unless an NSG allows it)

- Check health probe configuration

- Validate backend pool membership

- Review network interface configuration

- Performance Problems:

- Monitor backend pool health

- Check for bandwidth throttling

- Verify instance sizes

- Review network topology

- Health Probe Failures:

- Verify probe protocol configuration

- Check backend service availability

- Review probe interval settings

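- Validate network path (NSGs and firewalls on backends must allow probe traffic from 168.63.129.16, the AzureLoadBalancer service tag)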
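One quick way to separate load balancer problems from backend problems is to test TCP reachability of the frontend and of each backend directly. Run the test from a VM in the same VNet that is not itself in the backend pool, because a backend instance cannot reach an internal load balancer frontend that routes back to itself. The sketch below performs a plain TCP handshake, which is what a TCP health probe checks. The function names and any addresses you pass are hypothetical. The test runs from your VM rather than from the probe source address, so NSG rules may treat the two differently.

```python
import socket
import time
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CheckResult:
    host: str
    port: int
    reachable: bool
    detail: str  # connect latency or the socket error


def tcp_check(host: str, port: int, timeout: float = 3.0) -> CheckResult:
    """Attempt a TCP handshake, the same test a TCP health probe performs."""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            elapsed_ms = (time.monotonic() - start) * 1000
            return CheckResult(host, port, True, f"connected in {elapsed_ms:.1f} ms")
    except OSError as exc:
        return CheckResult(host, port, False, f"{type(exc).__name__}: {exc}")


def compare_paths(frontend: Tuple[str, int], backends: List[Tuple[str, int]]) -> None:
    """Print frontend vs. direct-to-backend reachability side by side."""
    for host, port in [frontend, *backends]:
        result = tcp_check(host, port)
        print(f"{host}:{port:<6} {'OK  ' if result.reachable else 'FAIL'} {result.detail}")
```

For example, `compare_paths(("10.0.1.100", 80), [("10.0.1.4", 80), ("10.0.1.5", 80)])` (hypothetical addresses) prints one line per target. If the backends answer directly but the frontend does not, focus on load-balancing rules, probes, and NSGs. If a backend fails on its own, troubleshoot that instance.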

Performance Tuning

Network Optimization

- Enable Accelerated Networking:

- Accelerated Networking uses single-root I/O virtualization (SR-IOV) to let a backend VM's network interface exchange packets directly with the physical network adapter, bypassing the host's virtual switch. This reduces latency, jitter, and CPU utilization, which is especially useful for applications that rely on high-throughput, low-latency traffic. It is enabled per network interface on supported VM sizes and requires no change to the load balancer configuration.

- Optimize TCP Settings:

- Fine-tuning TCP settings on the backend VMs’ operating systems can improve connection stability and efficiency. One parameter is the TCP window size, which controls how much data can be sent before an acknowledgment from the receiver is required. Another is the maximum segment size, which limits the payload carried in each segment and can be lowered to reduce fragmentation. Adjusting these settings helps balance throughput and latency based on the network conditions and backend requirements. Because Azure Load Balancer does not terminate TCP connections, these settings take effect directly between clients and backends.

- Configure Appropriate Timeout Values:

- The load balancer’s idle timeout setting defines the time a connection can remain idle before being closed. Configuring this properly ensures a balance between resource efficiency and connection availability. Shorter timeouts may save on resources, but may inadvertently terminate connections for long-running operations. Conversely, long timeouts could keep unused connections open, leading to resource waste. For long-lived connections that sit idle, sending TCP keep-alives at an interval shorter than the idle timeout keeps the flow active without lengthening the timeout.

- Use Connection Draining:

- Connection draining lets in-progress connections complete while new connections go to other instances. Azure Load Balancer has no dedicated draining setting, so a common approach is to make the instance fail its health probe, for example by returning a non-200 status from its HTTP probe endpoint. With Standard Load Balancer, established TCP connections to a probed-down instance continue while new connections are sent to healthy instances. Ongoing sessions can therefore finish before you remove the VM from the pool, which prevents abrupt disconnections during maintenance or scale-in.
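Several of these settings live on the backend or client operating system rather than on the load balancer. As a sketch, the Python function below enables TCP keep-alives that start well before an assumed 4-minute idle timeout. It can also enlarge the socket buffers, which cap how much data can be in flight. The option names `TCP_KEEPIDLE`, `TCP_KEEPINTVL`, and `TCP_KEEPCNT` are platform-specific (Linux naming), so the code sets them only where Python exposes them. All numeric values are hypothetical starting points.

```python
import socket

IDLE_TIMEOUT_MINUTES = 4  # assumption: the load-balancing rule's idle timeout (default 4)


def tuned_client_socket(keepalive_idle_s: int = 120, keepalive_interval_s: int = 30,
                        keepalive_probes: int = 4, buffer_bytes: int = 0) -> socket.socket:
    """Create a TCP socket whose keep-alives fire before the load balancer's idle timeout."""
    if keepalive_idle_s >= IDLE_TIMEOUT_MINUTES * 60:
        raise ValueError("keep-alive must start before the load balancer idle timeout")
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    # Per-socket keep-alive timing options are platform-specific; set only those present.
    for name, value in (("TCP_KEEPIDLE", keepalive_idle_s),
                        ("TCP_KEEPINTVL", keepalive_interval_s),
                        ("TCP_KEEPCNT", keepalive_probes)):
        if hasattr(socket, name):
            sock.setsockopt(socket.IPPROTO_TCP, getattr(socket, name), value)
    if buffer_bytes:
        # Larger buffers allow a larger TCP window on high-bandwidth, high-latency paths.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, buffer_bytes)
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, buffer_bytes)
    return sock
```

The check at the top makes the coupling explicit. If you raise the idle timeout on the rule, you can relax the keep-alive interval to match, and vice versa.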

Backend Pool Optimization

- Right-size VM Instances:

- Right-sizing VM instances involves selecting the appropriate VM SKU (size and series) based on the actual workload requirements. By analyzing the load and traffic patterns, you can allocate VMs with the optimal CPU, memory, and network capabilities. Right-sizing prevents both underutilization and overutilization, reducing costs while maintaining performance.

- Implement Auto-Scaling:

- Auto-scaling dynamically adjusts the number of backend VMs based on traffic load, improving resource efficiency. By defining thresholds (e.g., CPU usage, memory consumption, or network traffic), you can configure auto-scaling to increase resources during peak demand and reduce them during off-peak times. This approach is cost-effective and ensures that sufficient resources are always available without unnecessary excess.

- Configure Proper Health Probe Thresholds:

- Health probes regularly check the status of backend resources, determining if they’re fit to receive traffic. Optimizing probe intervals and thresholds ensures accurate health status without excessive probing. Configuring higher frequency and stricter thresholds may lead to quicker response times for removing unhealthy instances, but could also introduce additional network overhead.

- Balance Across Availability Zones:

- Distributing backend resources across Availability Zones provides fault tolerance and improves application resilience. If one zone suffers an outage, the load balancer can route traffic to instances in other zones, maintaining service continuity.
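The auto-scaling and zone-balancing ideas above reduce to two small calculations. This sketch is hypothetical. Real VM Scale Sets use Azure Monitor autoscale settings with time windows and cool-down periods, and the CPU thresholds and instance limits shown are example values only.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class ScaleRule:
    """Hypothetical thresholds; real scale sets use Azure Monitor autoscale settings."""
    min_instances: int = 2
    max_instances: int = 10
    scale_out_cpu: float = 70.0  # average CPU % above which an instance is added
    scale_in_cpu: float = 30.0   # average CPU % below which an instance is removed
    step: int = 1


def desired_capacity(current: int, avg_cpu: float, rule: ScaleRule) -> int:
    """One autoscale decision, clamped to the rule's minimum and maximum."""
    if avg_cpu > rule.scale_out_cpu:
        target = current + rule.step
    elif avg_cpu < rule.scale_in_cpu:
        target = current - rule.step
    else:
        target = current
    return max(rule.min_instances, min(rule.max_instances, target))


def zone_layout(instances: int, zones: Sequence[str] = ("1", "2", "3")) -> Dict[str, int]:
    """Spread instances as evenly as possible across Availability Zones."""
    base, extra = divmod(instances, len(zones))
    return {zone: base + (1 if i < extra else 0) for i, zone in enumerate(zones)}
```

The gap between the scale-out (70%) and scale-in (30%) thresholds keeps the pool from flapping. `zone_layout(5)` places 2, 2, and 1 instances in zones 1, 2, and 3, so a single zone outage removes at most 2 of 5 instances.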

Security Hardening

Network Security

- Implement NSG Rules:

- Network Security Groups (NSGs) act as virtual firewalls for Azure resources. By creating NSG rules, you can control inbound and outbound traffic to your load balancer and its backend resources based on source/destination IP addresses, port numbers, and protocols. Properly configured NSGs improve security by allowing only legitimate traffic while blocking potential threats.

- Enable DDoS Protection:

- Azure DDoS Protection provides enhanced security by monitoring and mitigating Distributed Denial of Service (DDoS) attacks in real time. Enabling DDoS protection adds a layer of defense to your Azure resources, preventing traffic spikes that may overwhelm your application infrastructure. Azure’s DDoS protection is especially valuable for internet-facing resources to safeguard against attacks that can impact availability.

- Use Private Link Services:

- Azure Private Link lets consumers in other virtual networks, or even other Azure tenants, reach your service through a private endpoint instead of a public IP address. A Private Link service is placed in front of a Standard internal Load Balancer, so traffic stays on Microsoft’s network and the service is never exposed to the public internet. This approach is ideal for services that require internal-only access or handle sensitive data.

- Configure HTTPS Probes:

- HTTPS health probes run the health check over TLS, so they confirm that the backend’s TLS listener is up and responding, not just its TCP port. They do not encrypt application traffic. Azure Load Balancer forwards TCP flows without terminating them, so encryption in transit for sensitive data must be provided end to end by clients and backends, or by a Layer 7 service such as Application Gateway. HTTPS probes require the Standard SKU.
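NSG rules are evaluated in priority order, lowest number first, and processing stops at the first match. The simplified model below shows why priority matters. The rule names, address ranges, and ports are hypothetical. Real NSGs differ in several ways: they also match destination addresses and source ports, support service tags directly, include more default rules (such as `AllowVnetInBound`), and are stateful.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class NsgRule:
    name: str
    priority: int             # lower numbers are evaluated first
    source_cidr: str          # simplification: real rules also accept service tags
    dest_port: Optional[int]  # None means any port
    protocol: str             # "TCP", "UDP", or "*"
    action: str               # "Allow" or "Deny"


def evaluate(rules: Iterable[NsgRule], src_ip: str, dest_port: int,
             protocol: str) -> Tuple[str, str]:
    """Return (action, rule name) for the first matching rule in priority order."""
    source = ipaddress.ip_address(src_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if source not in ipaddress.ip_network(rule.source_cidr):
            continue
        if rule.dest_port is not None and rule.dest_port != dest_port:
            continue
        if rule.protocol not in ("*", protocol):
            continue
        return rule.action, rule.name  # first match wins; later rules are ignored
    return "Deny", "(no match)"


INBOUND_RULES = [
    NsgRule("AllowHttpsInbound", 100, "0.0.0.0/0", 443, "TCP", "Allow"),
    NsgRule("DenySuspectRange", 90, "198.51.100.0/24", None, "*", "Deny"),
    # Default rule; the real one uses the AzureLoadBalancer service tag, modeled by its IP.
    NsgRule("AllowAzureLoadBalancerInBound", 65001, "168.63.129.16/32", None, "*", "Allow"),
    NsgRule("DenyAllInBound", 65500, "0.0.0.0/0", None, "*", "Deny"),
]
```

`DenySuspectRange` has priority 90, so it blocks that range even on port 443. The default `AllowAzureLoadBalancerInBound` rule keeps health probes from 168.63.129.16 working under an otherwise restrictive policy.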

Access Control

- Implement RBAC:

- Role-Based Access Control (RBAC) in Azure helps restrict permissions based on user roles. By defining RBAC for Azure Load Balancer, you can limit access to only authorized individuals, reducing the risk of unauthorized changes to the configuration. You can assign roles such as Reader, Contributor, or Owner, ensuring that users have only the necessary permissions.

- Use Managed Identities:

- Managed identities allow Azure services to securely interact with one another without storing credentials in code. By enabling managed identities for resources associated with the load balancer, you can grant them access to other Azure services (e.g., Key Vault for certificates) without manually managing credentials. This approach simplifies access control while improving security.

- Enable Diagnostic Logging:

- Diagnostic logging provides insights into load balancer operations, helping you monitor traffic patterns, connection health, and performance. By enabling diagnostic logging, you gain access to logs and metrics that can be used for troubleshooting, auditing, and analyzing traffic behavior. Logging also helps identify unusual patterns that may indicate potential security incidents.

- Configure Alerts:

- Setting up alerts allows you to receive notifications for specific events or conditions, such as high latency, backend resource failures, or unusual traffic spikes. Configuring alerts in Azure Monitor provides real-time visibility into load balancer health and performance, enabling faster responses to issues and maintaining system reliability.

Best Practices

- Choose the Right SKU: Basic Load Balancer is suited for small-scale or testing environments, while the Standard SKU is recommended for production workloads.

- Use Health Probes Effectively: Regularly adjust probe settings based on traffic patterns to ensure accurate health monitoring.

- Implement Multi-Region Failover: Use Azure Traffic Manager with Load Balancer to set up cross-region redundancy for added resilience.

- Leverage NSGs and Azure Firewall: For improved security, integrate Network Security Groups (NSGs) and Azure Firewall with your Load Balancer to control traffic flow effectively.

- Monitor and Optimize Costs: Regularly review and monitor traffic flow and instance utilization to avoid overprovisioning and optimize spending.

Pricing and Cost Considerations

Azure Load Balancer pricing depends on the SKU. The Basic Load Balancer is free (apart from data transfer costs). The Standard Load Balancer is billed for the number of load-balancing and outbound rules configured and for the amount of data processed.

Be mindful of the outbound data costs, as data leaving the Azure data center (e.g., public IP traffic) incurs fees. For private traffic within the VNet, consider using internal Load Balancers to minimize charges.

Comparing Azure Load Balancer with Other Load Balancing Solutions

For more advanced Layer 7 load balancing, Azure offers Application Gateway, which is capable of inspecting HTTP(S) traffic and managing web-based traffic efficiently. Azure Traffic Manager provides DNS-based traffic management for cross-region failover, making it a great addition for global distribution.

Each of these tools complements Azure Load Balancer, enabling a multi-layered approach to traffic management across the network stack.

Azure Load Balancer is a powerful tool for cloud professionals looking to enhance application performance and reliability through effective load balancing. Its capabilities—ranging from simple load distribution to multi-tier architectures with zone redundancy—make it suitable for a wide range of use cases. By following best practices and leveraging its advanced features, you can ensure your applications remain responsive, secure, and cost-efficient.

Whether your goal is to improve availability, scale applications, or simplify traffic management, Azure Load Balancer offers the flexibility and performance required in today’s cloud environments. With the right configuration, it’s a cornerstone for any resilient Azure architecture.