1. What is Databricks?

Databricks is a cloud-based, unified analytics platform that simplifies the management of big data and machine learning workflows. Founded by the creators of Apache Spark, Databricks enables teams to collaborate seamlessly across data engineering, data science, and business intelligence. It integrates the power of Apache Spark with a collaborative environment, so data teams can focus on solving problems rather than managing infrastructure.

Think of Databricks as an all-in-one solution that makes it easier to process large datasets, perform complex analyses, and build machine learning models – all in one place.

Why Databricks is Revolutionizing Big Data and AI

The growth of data in modern businesses has created a need for more efficient data workflows. Databricks addresses this need in several impactful ways:

- Unified Analytics: Databricks combines data engineering, data science, and machine learning in a single platform. This integration eliminates the need for silos, enabling better collaboration between teams.

- Scalability and Flexibility: Databricks dynamically scales compute resources based on workload demand. Whether you’re processing terabytes of data or training a machine learning model, Databricks adapts automatically.

- Faster Time-to-Insight: With built-in optimizations for Apache Spark, Databricks can process data faster than traditional tools, reducing time from data ingestion to actionable insights.

- Real-Time Collaboration: With notebooks, version control, and sharing features, Databricks allows teams to collaborate in real time, making it easier to share results, experiment with models, and troubleshoot.

The Evolution of Databricks: From Apache Spark to a Unified Analytics Platform

Databricks began with a focus on Apache Spark. Spark is an open-source distributed computing framework designed for fast data processing at scale. Databricks made it easier to manage Spark clusters, but over time, it expanded to include more robust features for data scientists and engineers. Here’s how it evolved:

- Apache Spark Optimization: Databricks started by offering a managed Spark environment where users could easily run distributed data processing jobs without dealing with complex configurations.

- Delta Lake: As organizations began using data lakes for storage, they ran into challenges around data consistency and reliability. Databricks introduced Delta Lake, a storage layer that brings ACID transactions to data lakes, solving these problems and enabling more reliable analytics.

- Machine Learning & AI: As the platform grew, Databricks introduced full support for machine learning workflows, including tools like MLflow to manage models and experiment tracking. This expansion allowed teams to go beyond just processing data and dive into the entire machine learning lifecycle.

Key Features and Benefits of Databricks

Here’s a closer look at what makes Databricks such a powerful platform for big data and AI:

| Feature | What It Does | Why It’s Great |
| --- | --- | --- |
| Collaborative Notebooks | Write, test, and share code in an interactive, real-time environment. | Facilitates collaboration between teams, streamlining data exploration and development. |
| Delta Lake | A reliable storage layer that ensures data consistency, scalability, and fast querying. | Delta Lake handles the complex issues of data quality and consistency, ensuring robust and reliable data lakes. |
| Managed Spark Clusters | Automatically scaled clusters for data processing, eliminating manual configuration. | Makes it easy to scale resources as needed, without worrying about infrastructure management. |
| Integrated Machine Learning | Tools for managing the full ML lifecycle, from experimentation to model deployment. | Simplifies building, tracking, and deploying machine learning models, improving productivity. |
| Cloud Integration | Seamlessly integrates with AWS, Azure, and GCP cloud services for storage, compute, and security. | Leverages cloud-native tools to handle large-scale data storage and processing needs, offering flexibility. |

2. Getting Started with Databricks

Creating Your First Databricks Workspace

The first step to using Databricks is setting up your workspace. This is where all your projects, notebooks, and clusters will live.

- Sign Up and Log In: Go to the Databricks website and sign up for an account. You can get started with a free trial.

- Create a Workspace: After logging in, click on Create Workspace. The workspace is your personal environment for managing and running all your notebooks, clusters, and files.

- Select Your Cloud Provider: Databricks is available on AWS, Azure, and GCP. Choose the cloud provider you prefer to use (your organization may have already made this decision).

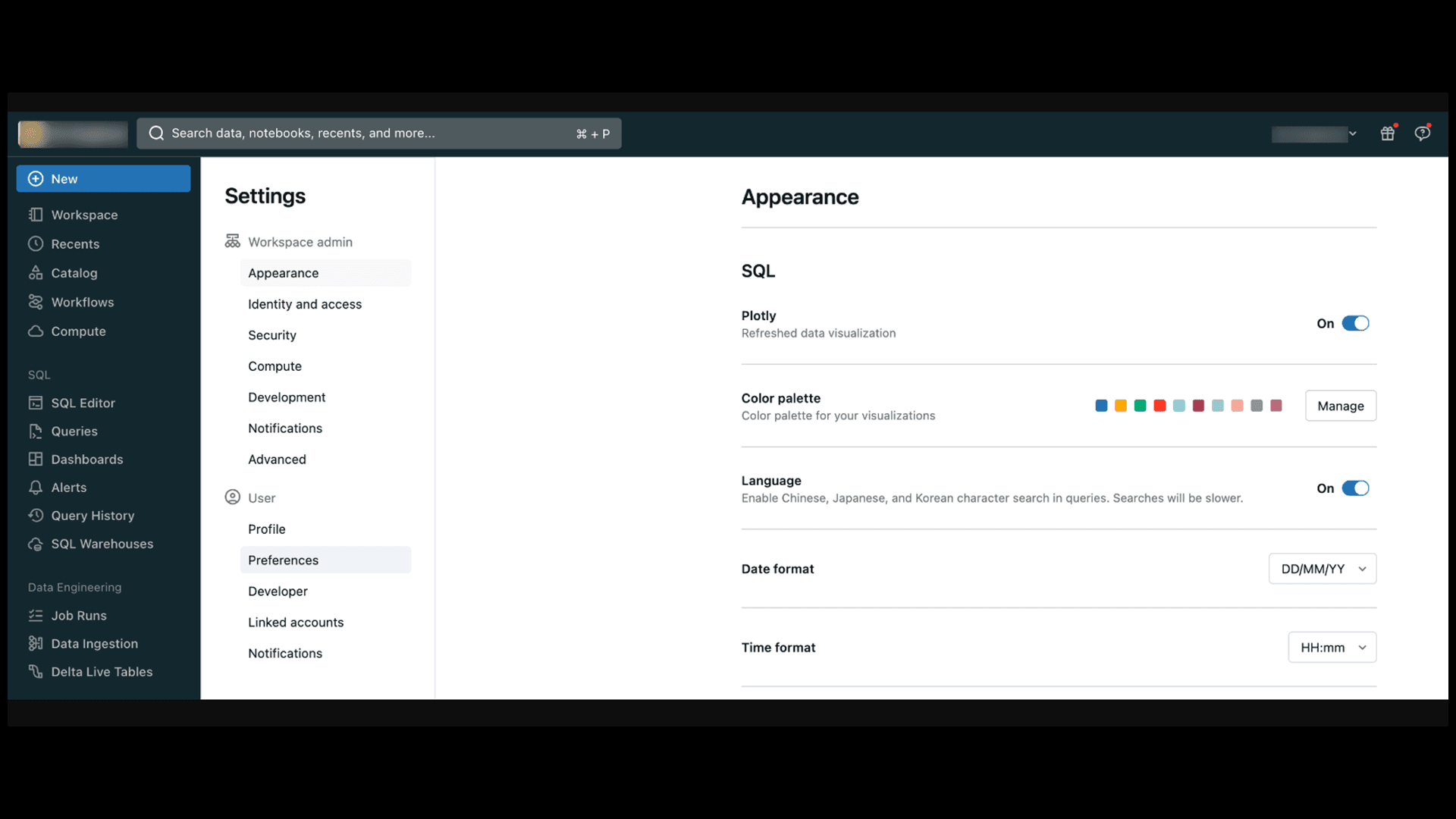

Navigating the Databricks UI: A Beginner's Guide

Source : Databricks

Once you're logged into Databricks, you'll see a clean, intuitive interface. Here's a breakdown of the key components:

| Area | What It Does |
| --- | --- |
| Workspace | This is where you store notebooks, libraries, and datasets. It's your main hub. |
| Clusters | Here, you can create and manage your Spark clusters, which run all your computations. |
| Jobs | Schedule and automate workflows like running notebooks or Spark jobs at specific times. |
| Libraries | Install and manage external libraries like Pandas, NumPy, or TensorFlow. |
| Notebooks | Interactive documents where you can write and execute code, visualize data, and document results. |

Setting Up Your First Cluster

Clusters are the heart of Databricks. They process the data you work with and provide the computing power for running Spark jobs.

To create your first cluster:

- Go to the Clusters Tab in your workspace.

- Click on Create Cluster. Choose a cluster size, Spark version, and other configurations.

- Launch Your Cluster. After a few minutes, your cluster will be up and running, ready to process data.
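The choices made in the Create Cluster form can also be written down as a cluster specification, which is the shape the Clusters REST API accepts. The sketch below only builds that specification as a Python dictionary. The cluster name, runtime version string, and node type are placeholder assumptions, so substitute values that exist in your workspace.

```python
import json


def build_cluster_spec(name, spark_version, node_type_id, min_workers, max_workers,
                       autotermination_minutes=30):
    """Return a cluster specification dictionary with autoscaling enabled."""
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Shut the cluster down after this many idle minutes to limit cost
        "autotermination_minutes": autotermination_minutes,
    }


# Placeholder values for illustration only
spec = build_cluster_spec("my-first-cluster", "13.3.x-scala2.12", "i3.xlarge", 1, 4)
print(json.dumps(spec, indent=2))
```

Keeping the specification in code makes it easy to review and reuse, instead of re-entering the same settings in the UI each time.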

Using Databricks Notebooks: A Hands-On Introduction

Databricks Notebooks allow you to interactively write and run code. They support multiple languages like Python, Scala, SQL, and R, and make it easy to see results and adjust code as you go. Here's an example:

```python
# Import required libraries
import pandas as pd
import matplotlib.pyplot as plt

# Create a simple DataFrame
data = {'Name': ['Alice', 'Bob', 'Charlie', 'David'], 'Age': [25, 30, 35, 40]}
df = pd.DataFrame(data)

# Display the DataFrame
display(df)

# Plot a bar chart of Ages
df.plot(x='Name', y='Age', kind='bar')
plt.show()
```

This code creates a simple DataFrame and then generates a bar chart of the ages. The interactive nature of notebooks allows you to see your results immediately after running the code. This makes it easier to experiment, visualize, and debug your work.

3. Deep Dive into Databricks Architecture

Core Components of Databricks: Clusters, Jobs, Notebooks, and Repos

Databricks has several core components that help organize and manage your data workflows. Let’s take a closer look:

- Clusters: These are the machines that run your computations. You can create clusters to process data, run notebooks, and train machine learning models. Databricks manages all the complexities of cluster setup, scaling, and maintenance.

- Jobs: Jobs allow you to automate and schedule tasks. For instance, you can set up a job to run a notebook every day, which is great for periodic data processing tasks.

- Notebooks: Interactive documents that allow you to run code, visualize results, and document your workflow in one place. They’re essential for sharing your findings with teammates.

- Repos: Repositories help you manage code with version control. You can integrate Databricks with GitHub, GitLab, or other Git providers to track changes.

Databricks Runtime: What It Is and Why It Matters

Databricks Runtime is a custom environment optimized for running Spark workloads. It includes several features that make data processing faster and more efficient:

- Performance Enhancements: Databricks Runtime comes with built-in optimizations for Spark that improve performance, including better resource management and caching strategies.

- Integration with Delta Lake: It natively supports Delta Lake, which means your data lakes will have ACID transactions, schema enforcement, and time travel capabilities.

- Ease of Use: With Databricks Runtime, you don’t have to worry about installing or managing dependencies – it’s all pre-configured for you.

The Role of Apache Spark in Databricks

Apache Spark is the open-source distributed computing framework that powers Databricks. It’s designed to handle large-scale data processing and analytics, and it does so by breaking down tasks and distributing them across multiple machines.

- Distributed Computing: Spark splits large datasets into smaller pieces and processes them in parallel, which speeds up data processing tasks.

- Versatility: Spark can handle batch processing, stream processing, machine learning, and SQL queries, making it a go-to solution for a wide range of big data use cases.
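The split-then-process-in-parallel idea can be illustrated without a cluster. The following is a plain-Python analogy, not Spark code: it splits a small list into partitions, counts words in each partition on a separate thread, and then merges the partial results. The function names are made up for this sketch.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(items, num_partitions):
    """Split a list into roughly equal chunks, one per worker."""
    return [items[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """Map step: count words within a single partition."""
    counts = Counter()
    for line in partition:
        counts.update(line.split())
    return counts


def parallel_word_count(lines, num_partitions=3):
    partitions = split_into_partitions(lines, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    # Reduce step: merge the per-partition results into one total
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


lines = ["spark splits data", "spark runs tasks", "tasks run in parallel"]
result = parallel_word_count(lines)
print(result["spark"], result["tasks"])
```

Spark applies the same pattern across many machines rather than threads, and adds scheduling, fault tolerance, and data shuffling on top.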

Databricks File System (DBFS) and Data Storage Options

Databricks File System (DBFS) is the default storage layer within Databricks. It provides a simple way to store and access files, and is fully integrated with Spark.

- DBFS: It acts as a bridge between cloud storage (e.g., AWS S3, Azure Blob Storage) and the Databricks environment. Files in DBFS are stored in your cloud storage but can be accessed like a local file system within Databricks.

- External Storage Integration: You can also read from and write to external storage solutions like Amazon S3, Azure Data Lake, or Google Cloud Storage, making Databricks highly flexible.
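One practical detail of "accessed like a local file system" is that the same file usually has two spellings: a `dbfs:/` URI used by Spark, and a `/dbfs/` path used by ordinary local file APIs on clusters where the mount is available. The helper below is a small sketch of that mapping. Its function names are hypothetical and not part of any Databricks library.

```python
def dbfs_uri_to_local_path(uri):
    """Convert a 'dbfs:/...' URI to the '/dbfs/...' path seen by local file APIs."""
    prefix = "dbfs:/"
    if not uri.startswith(prefix):
        raise ValueError(f"not a DBFS URI: {uri}")
    return "/dbfs/" + uri[len(prefix):].lstrip("/")


def local_path_to_dbfs_uri(path):
    """Convert a '/dbfs/...' local path back to a 'dbfs:/...' URI."""
    prefix = "/dbfs/"
    if not path.startswith(prefix):
        raise ValueError(f"not a /dbfs/ path: {path}")
    return "dbfs:/" + path[len(prefix):]


print(dbfs_uri_to_local_path("dbfs:/mnt/delta/events"))
print(local_path_to_dbfs_uri("/dbfs/mnt/delta/events"))
```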

How Databricks Integrates with Cloud Services (AWS, Azure, GCP)

Databricks leverages the power of cloud platforms to enhance its capabilities:

- AWS Integration: Databricks works seamlessly with AWS services like S3 for storage, EC2 for compute, and IAM for security, ensuring high availability and scalability.

- Azure Integration: On Azure, Databricks integrates with Azure Blob Storage, Azure Data Lake, and Azure Active Directory to streamline data workflows and enhance security.

- GCP Integration: Google Cloud users can leverage Databricks’ integration with GCP services such as Google Cloud Storage and BigQuery, enabling high-performance analytics on Google’s infrastructure.

4. Advanced Databricks Features

Optimizing Data Engineering Workflows with Delta Lake

Delta Lake is an open-source storage layer that brings reliability and performance to data lakes. It helps organizations manage large-scale datasets more effectively by providing features like:

- ACID Transactions: Guarantees that each write either commits fully or not at all, so concurrent readers and writers never see partial or conflicting data.

- Schema Enforcement: Automatically validates data as it is ingested, preventing schema mismatches.

- Time Travel: Enables querying historical data by keeping a versioned history of your datasets, which is perfect for auditing or rolling back changes.

To write data in Delta format:

```python
df.write.format("delta").save("/mnt/delta/events")
```
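To build intuition for how all-or-nothing writes, schema enforcement, and time travel fit together, here is a toy in-memory table written in plain Python. It is a simplified model of the idea (validated, append-only commits that can be read back by version), not Delta Lake's actual implementation, and the class name is invented for this sketch.

```python
class ToyVersionedTable:
    """A toy, in-memory stand-in for a versioned table (not Delta Lake itself)."""

    def __init__(self, schema):
        self.schema = schema      # e.g. {"id": int, "event": str}
        self._commits = []        # each commit is a list of appended rows

    def append(self, rows):
        """Validate every row first, then commit all of them or none."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"schema mismatch: {sorted(row)}")
            for column, expected_type in self.schema.items():
                if not isinstance(row[column], expected_type):
                    raise ValueError(f"column {column!r} expects {expected_type.__name__}")
        self._commits.append(list(rows))
        return len(self._commits) - 1   # version number created by this commit

    def read(self, version=None):
        """Return the rows visible at a version (latest when version is None)."""
        if version is None:
            version = len(self._commits) - 1
        visible = []
        for commit in self._commits[: version + 1]:
            visible.extend(commit)
        return visible


table = ToyVersionedTable({"id": int, "event": str})
v0 = table.append([{"id": 1, "event": "click"}])
v1 = table.append([{"id": 2, "event": "view"}])
print(len(table.read(version=v0)), len(table.read()))
```

A rejected append leaves every earlier version untouched, which is the property that makes auditing and rollback straightforward.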

Delta Lake vs. Traditional Data Lakes: A Comparative Study

| Feature | Delta Lake | Traditional Data Lake |
| --- | --- | --- |
| ACID Transactions | Yes | No |
| Schema Enforcement | Yes | No |
| Time Travel | Yes (query historical data) | No |
| Scalability | Highly optimized for large-scale processing | Depends on setup and infrastructure |

Real-Time Data Processing with Structured Streaming

Databricks enables real-time data processing through Structured Streaming, allowing you to process data as it arrives rather than waiting for batch jobs to run. This is particularly useful for use cases like fraud detection or real-time analytics.

```python
# Reading streaming data from Delta
streaming_df = spark.readStream.format("delta").load("/mnt/delta/events")

# Output to the console
query = streaming_df.writeStream.outputMode("append").format("console").start()
query.awaitTermination()
```

Databricks Jobs & Scheduling for Automation

Jobs in Databricks let you automate your workflows by running notebooks and tasks on a schedule. This feature is crucial for data pipelines, ETL tasks, or batch processing jobs.

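```python
from databricks.sdk import WorkspaceClient

# Trigger an existing job by its numeric job ID (123 is a placeholder)
WorkspaceClient().jobs.run_now(job_id=123)
```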
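A scheduled job is ultimately described by a job definition: a name, one or more tasks, and a schedule. The sketch below builds such a definition in the shape used by the Jobs API, with a Quartz cron expression for a daily run. The job name, notebook path, and cluster ID are placeholders, and the helper function is hypothetical.

```python
def build_daily_notebook_job(name, notebook_path, cluster_id, hour_utc=6):
    """Return a job definition that runs one notebook every day at a fixed UTC hour."""
    if not 0 <= hour_utc <= 23:
        raise ValueError("hour_utc must be between 0 and 23")
    return {
        "name": name,
        "tasks": [
            {
                "task_key": "run_notebook",
                "notebook_task": {"notebook_path": notebook_path},
                "existing_cluster_id": cluster_id,
            }
        ],
        "schedule": {
            # Quartz cron fields: second minute hour day-of-month month day-of-week
            "quartz_cron_expression": f"0 0 {hour_utc} * * ?",
            "timezone_id": "UTC",
            "pause_status": "UNPAUSED",
        },
    }


# Placeholder values for illustration only
job = build_daily_notebook_job("daily-etl", "/Path/To/Your/Notebook", "<cluster-id>")
print(job["schedule"]["quartz_cron_expression"])
```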

Leveraging Databricks for Machine Learning Pipelines

With Databricks, you can streamline the entire machine learning lifecycle, from data collection and preprocessing to model deployment. The MLflow integration makes it easy to track experiments, tune models, and manage model deployment.

Using MLflow for Managing Machine Learning Models

MLflow is an open-source tool that helps you manage the machine learning lifecycle. Here’s an example of logging a model with MLflow:

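```python
import mlflow
import mlflow.sklearn
from sklearn.linear_model import LogisticRegression

# Train a small placeholder model so there is something to log
model = LogisticRegression().fit([[0], [1], [2], [3]], [0, 0, 1, 1])

# Log a parameter, a metric, and the model in a single MLflow run
with mlflow.start_run():
    mlflow.log_param("param1", 5)
    mlflow.log_metric("accuracy", 0.92)
    mlflow.sklearn.log_model(model, "model")
```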

5. Databricks APIs and SDKs

Overview of Databricks REST API

The Databricks REST API is a powerful tool for programmatically interacting with the Databricks platform. It provides access to many features such as cluster management, job scheduling, and workspace operations, all via HTTP requests.

Key Features of the Databricks REST API:

- Cluster Management: Create, start, stop, and manage clusters directly through the API.

- Job Automation: Trigger jobs, check their status, and retrieve results without having to manually interact with the UI.

- Workspace Operations: Upload, download, and manage files in your workspace programmatically.

- Notebook Execution: Run notebooks as part of automated workflows, allowing you to integrate Databricks into larger automation systems.

Here's an example of how to use the REST API to list all clusters:

```bash
curl -X GET https://<databricks-instance>/api/2.0/clusters/list \
  -H "Authorization: Bearer <access-token>"
```

This simple API call fetches a list of all the clusters in your Databricks environment.
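The same request can be prepared from Python using only the standard library. This sketch builds the request object without sending it, so the URL and header can be inspected first. The instance name and token shown are placeholders.

```python
import urllib.request


def build_list_clusters_request(instance, token):
    """Build (but do not send) a GET request for the clusters/list endpoint."""
    url = f"https://{instance}/api/2.0/clusters/list"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Placeholder instance and token; real values come from your workspace
request = build_list_clusters_request("example.cloud.databricks.com", "my-token")
print(request.get_method(), request.full_url)

# To actually send it: urllib.request.urlopen(request), then parse the JSON body
```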

Using Databricks CLI for Automation

The Databricks CLI (Command Line Interface) allows users to interact with Databricks from their terminal. It’s particularly useful for automating tasks like cluster management, job submissions, and file handling. Here are a few basic CLI commands:

- Authenticate with Databricks:

```bash
databricks configure --token
```

- List Clusters:

```bash
databricks clusters list
```

- Submit a Job:

```bash
databricks jobs run-now --job-id <job-id>
```

With the CLI, you can integrate Databricks into larger automation pipelines, run repetitive tasks, or manage resources without ever logging into the Databricks UI.

Python and Scala SDKs for Custom Integrations

Databricks provides SDKs, including one for Python, to facilitate custom integrations. These SDKs wrap the REST API so developers can call it directly from their scripts.

- Python SDK: The databricks-sdk Python package can be installed via pip and used to manage clusters, jobs, and other operations.

```bash
pip install databricks-sdk
```

Example of using the Python SDK to get a list of clusters:

```python
from databricks.sdk import WorkspaceClient

# Host and token are placeholders; the client can also read them from the environment
w = WorkspaceClient(host="https://<databricks-instance>", token="<access-token>")
for cluster in w.clusters.list():
    print(cluster.cluster_name)
```

- Scala: Scala applications can use the Databricks SDK for Java through JVM interoperability, which provides similar functionality for Spark-based workflows or larger Scala applications running in Databricks.

Integrating Databricks with Third-Party Tools and Systems

Databricks supports a range of integrations with third-party tools, from BI platforms like Tableau and Power BI to machine learning libraries and external storage systems like S3, Azure Blob Storage, and Google Cloud Storage.

- BI Tools: Use Databricks' JDBC/ODBC connectors to integrate with BI tools and run SQL queries on your data.

- Machine Learning Libraries: Leverage libraries like TensorFlow, PyTorch, and XGBoost for model building and training, seamlessly integrating them into Databricks workflows.

- External Systems: Easily connect Databricks to external systems like Kafka for real-time data streaming or Datadog for monitoring.

6. Managing the Data Science Lifecycle in Databricks

Model Development and Experimentation

Databricks is designed to simplify the machine learning (ML) lifecycle, from experimentation to production. The platform provides tools for data preprocessing, model training, hyperparameter tuning, and model evaluation.

- Data Preprocessing: Use Databricks Notebooks to clean and prepare data for training, leveraging the full power of Spark and its distributed computing capabilities.

- Model Training: Train models using a variety of ML libraries, including scikit-learn, TensorFlow, and PyTorch. Databricks provides the compute power to scale your models across multiple nodes.

- Hyperparameter Tuning: Automatically tune model parameters with libraries like Hyperopt or MLlib for Spark-based optimization.
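To make the tuning step concrete, here is a minimal sketch of the simplest search strategy, an exhaustive grid search, written in plain Python. It stands in for a library such as Hyperopt rather than using it. The objective function is a made-up stand-in for "train a model and return its validation loss".

```python
from itertools import product


def validation_loss(learning_rate, depth):
    """Stand-in objective; a real one would train and evaluate a model."""
    return (learning_rate - 0.1) ** 2 + (depth - 4) ** 2


def grid_search(objective, grid):
    """Evaluate every combination in the grid and keep the lowest loss."""
    names = list(grid)
    best_params, best_loss = None, float("inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


grid = {"learning_rate": [0.01, 0.1, 0.5], "depth": [2, 4, 8]}
best_params, best_loss = grid_search(validation_loss, grid)
print(best_params)
```

Libraries such as Hyperopt replace the exhaustive loop with smarter sampling, which matters once each evaluation means training a real model.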

Collaborative Features for Data Scientists

Collaboration is key to modern data science. Databricks provides several features that enable data scientists to work together seamlessly:

- Notebooks for Collaboration: Multiple data scientists can work on the same notebook simultaneously, share results, and leave comments for discussion.

- Experiment Tracking: With MLflow, Databricks helps you track experiments, compare model performance, and store results.

- Shared Workspace: Notebooks, datasets, and jobs can be shared easily within teams, facilitating collaboration across multiple roles (data engineers, data scientists, etc.).

Model Deployment and Monitoring with Databricks

Once a model is trained, it needs to be deployed to production. Databricks makes this easy with built-in tools for model deployment and monitoring.

- Model Deployment: With MLflow, you can deploy models as REST APIs or integrate them into Databricks jobs. This allows you to automate predictions and integrate them into larger business workflows.

- Model Monitoring: Databricks integrates with tools like Datadog and Prometheus to monitor the performance of your models in real time, ensuring they perform as expected in production.
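When a model is served as a REST API, clients send it rows of input as JSON. The sketch below builds a request body in the `dataframe_split` layout that recent MLflow versions accept for scoring. Treat the exact layout as an assumption to check against your MLflow version. The column names and values are invented for illustration.

```python
import json


def build_scoring_payload(columns, rows):
    """Build a JSON request body in the 'dataframe_split' layout."""
    for row in rows:
        if len(row) != len(columns):
            raise ValueError("every row must have one value per column")
    body = {"dataframe_split": {"columns": list(columns), "data": [list(r) for r in rows]}}
    return json.dumps(body)


# Hypothetical feature names and values
payload = build_scoring_payload(["age", "income"], [[25, 40000], [40, 85000]])
print(payload)
```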

7. Collaboration and Productivity in Databricks

Collaborative Data Science: Sharing Notebooks and Results

In the data science world, collaboration is critical to success. Databricks simplifies this with real-time collaboration features that allow data scientists to work together on notebooks, share insights, and collaborate effectively:

- Live Sharing: Share notebooks with teammates and collaborators, allowing others to comment, edit, and view results in real-time.

- Visualization Sharing: Visualizations generated within Databricks Notebooks can be shared, helping teams discuss data insights visually.

Version Control with Git Integration in Databricks

Databricks integrates with Git to manage versions of notebooks, code, and configurations, making it easy to track changes and collaborate on projects:

- GitHub/GitLab Integration: Connect your Databricks workspace to GitHub or GitLab for version control. This allows teams to push and pull code directly from their Git repositories.

- Notebook Versioning: Databricks also automatically tracks notebook revisions, so you can roll back to a previous version of a notebook if needed.

Integrating Databricks with CI/CD Pipelines

Databricks can be integrated with Continuous Integration/Continuous Deployment (CI/CD) pipelines, ensuring smooth and automated deployment of data science and machine learning models:

- Automated Testing: Set up automated tests for your code within your CI pipeline to ensure it works as expected before being deployed.

- Deployment to Production: Use Databricks Jobs or APIs to automate the deployment of models to production once they have passed testing.
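Automated testing is easiest when transformation logic lives in small, pure functions that a CI pipeline can exercise without a cluster. The following is a minimal sketch using Python's built-in `unittest`. The `normalize_event` function and its fields are hypothetical examples, not part of Databricks.

```python
import unittest


def normalize_event(record):
    """Trim and lowercase the event name and cast the amount to float."""
    return {
        "event": record["event"].strip().lower(),
        "amount": float(record["amount"]),
    }


class NormalizeEventTest(unittest.TestCase):
    def test_cleans_event_name_and_amount(self):
        cleaned = normalize_event({"event": "  Purchase ", "amount": "19.90"})
        self.assertEqual(cleaned, {"event": "purchase", "amount": 19.9})

    def test_rejects_non_numeric_amount(self):
        with self.assertRaises(ValueError):
            normalize_event({"event": "refund", "amount": "n/a"})


# Run the tests programmatically so the example also works inside a notebook cell
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeEventTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

A CI job can fail the build whenever `outcome.wasSuccessful()` is false, which blocks the deployment step until the code is fixed.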

Monitoring and Debugging Code in Databricks Notebooks

Debugging is crucial for maintaining quality in any codebase. Databricks provides several tools to monitor and debug your notebooks:

- Interactive Debugging: Set breakpoints, inspect variables, and step through code directly in Databricks Notebooks.

- Logs and Errors: View detailed logs and error messages to understand what went wrong, helping you resolve issues faster.

Building Dashboards for Data Insights

Databricks allows you to build interactive dashboards that display key insights from your data:

- Data Visualizations: Create charts and graphs from your data and include them in dashboards to provide insights to stakeholders.

- Custom Dashboards: Use Databricks' visualization tools to create custom dashboards that update in real-time, providing dynamic insights to users.

8. Data Security and Governance in Databricks

Understanding Databricks Security Model

Databricks follows a robust security model to ensure your data is protected at every stage. This model includes encryption, authentication, access control, and auditing.

- Encryption: All data stored in Databricks is encrypted, both at rest and in transit, using industry-standard protocols.

- Authentication: Databricks supports multi-factor authentication (MFA), ensuring that only authorized users can access the platform.

Role-Based Access Control (RBAC) for Users and Groups

Role-Based Access Control (RBAC) is crucial for securing data and ensuring that only authorized personnel have access to sensitive resources. In Databricks, you can define roles for users and groups, controlling access to specific notebooks, clusters, and data.

- User Roles: Assign specific roles (e.g., admin, data engineer, data scientist) that determine what actions a user can perform.

- Group-Based Access: Organize users into groups and assign them access permissions based on their group roles, streamlining management.
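Group-based access is typically expressed as an access control list that pairs each group with a permission level. The sketch below builds such a list for a cluster, in the shape used by the Permissions API. The group names are hypothetical, and the set of levels shown is the one commonly documented for clusters, so verify it for the resource type you are securing.

```python
CLUSTER_PERMISSION_LEVELS = ("CAN_ATTACH_TO", "CAN_RESTART", "CAN_MANAGE")


def build_cluster_acl(group_permissions):
    """Turn {group_name: permission_level} into an access control list payload."""
    acl = []
    for group_name, level in group_permissions.items():
        if level not in CLUSTER_PERMISSION_LEVELS:
            raise ValueError(f"unknown permission level: {level}")
        acl.append({"group_name": group_name, "permission_level": level})
    return {"access_control_list": acl}


# Hypothetical groups: scientists may attach notebooks, engineers may manage
payload = build_cluster_acl({"data-scientists": "CAN_ATTACH_TO", "data-engineers": "CAN_MANAGE"})
print(payload)
```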

Securing Data in Databricks: Encryption, Authentication, and Auditing

To further enhance security, Databricks employs:

- End-to-End Encryption: Ensures that all data, whether in storage or in transit, is encrypted.

- Authentication Options: Use OAuth, SAML, or Azure Active Directory (AD) to authenticate users securely.

- Audit Logs: Keep detailed records of user activity, which can be used to monitor access patterns and troubleshoot security incidents.

Managing Data Lineage and Compliance with Delta Lake

Delta Lake is also an essential tool for maintaining data lineage and compliance. With its versioning capabilities, you can track the history of every change made to a dataset, helping you meet compliance requirements for industries like healthcare and finance.

- Data Lineage: Track how data has transformed over time, making it easier to audit and comply with regulations.

- Compliance: Delta Lake’s features ensure that your data governance practices align with legal and regulatory standards.

Integration with Identity Providers (Azure AD, AWS IAM)

Databricks integrates with major identity providers like Azure Active Directory (AD) and AWS Identity and Access Management (IAM) to manage user authentication and authorization seamlessly.

- Single Sign-On (SSO): Users can authenticate using their existing corporate credentials, improving user experience and security.

- Fine-Grained Access Control: Leverage these integrations to manage access at a granular level, ensuring the right users have the right level of access to your Databricks environment.

9. Databricks on Cloud Platforms: A Closer Look

Databricks is a versatile platform that integrates seamlessly with all major cloud providers: AWS, Azure, and Google Cloud. Each platform offers unique capabilities and features that can be leveraged to meet specific business needs. Let’s dive into how Databricks works on each cloud provider.

Databricks on AWS: Features, Integration, and Use Cases

Databricks on AWS is fully integrated with Amazon Web Services (AWS), enabling you to take full advantage of AWS's robust infrastructure and data services. This integration offers flexibility and scalability, making it suitable for large-scale data processing, analytics, and machine learning workloads.

Key Features:

- Seamless Integration with AWS Services: Databricks works effortlessly with AWS services like S3 for storage, Redshift for data warehousing, and AWS Glue for ETL (Extract, Transform, Load).

- Elastic Compute: Databricks runs on EC2 instances, which can be scaled automatically depending on your compute requirements. You can also use Spot Instances for cost savings on non-critical workloads.

- Delta Lake: Delta Lake, native to Databricks, is a powerful data lake storage solution for managing large datasets with ACID transactions.

Use Cases:

- Real-Time Data Analytics: By integrating with Apache Kafka, you can build real-time data pipelines to process streaming data efficiently.

- Machine Learning: AWS offers a variety of GPU-powered EC2 instances, ideal for running large-scale ML training jobs using Databricks.

- Data Lakes: Store data in S3 and process it on Databricks, benefiting from the storage reliability and scalability that AWS offers.

Databricks on Azure: How It Leverages Azure Synapse and Azure ML

On Azure, Databricks is tightly integrated with other Microsoft services such as Azure Synapse Analytics and Azure Machine Learning (Azure ML), creating a powerful end-to-end data engineering and machine learning solution.

Key Features:

- Azure Synapse Analytics: Databricks integrates with Azure Synapse, enabling you to process data in a unified workspace, blending data lakes and data warehouses.

- Azure Machine Learning Integration: Databricks allows users to build and train machine learning models, which can then be deployed using Azure ML for monitoring and scaling.

- Azure Active Directory Integration: Azure Databricks benefits from Azure AD, which allows for seamless authentication and access management.

Use Cases:

- End-to-End Data Engineering: Leverage Databricks to clean, transform, and analyze data, then push insights to Azure Synapse for business intelligence.

- ML Model Deployment: After training models on Databricks, use Azure ML for deployment and model management in production environments.

Databricks on Google Cloud: Key Capabilities and Services

Google Cloud provides a robust ecosystem for AI and machine learning, and when paired with Databricks, it offers a comprehensive solution for large-scale data processing and analytics.

Key Features:

- BigQuery Integration: Databricks integrates seamlessly with Google BigQuery, enabling you to perform large-scale data analysis with ease.

- AI and ML Services: Integrating Databricks with Vertex AI makes it easy to train and deploy machine learning models at scale.

- Cloud Storage: Databricks reads from and writes to Google Cloud Storage (GCS), providing low-cost, high-performance storage for massive datasets.

Use Cases:

- AI and ML Workflows: Databricks on Google Cloud is ideal for businesses focused on AI and ML. Leverage Vertex AI to build and deploy models directly from Databricks.

- Real-Time Streaming: Combine Databricks with Google Pub/Sub for real-time analytics and event-driven applications.

10. Choosing the Right Cloud for Databricks

Selecting the right cloud platform for your Databricks deployment depends on several factors such as existing infrastructure, use cases, and pricing preferences. AWS, Azure, and Google Cloud each offer distinct advantages, and understanding the differences can help you make an informed decision.

Databricks on AWS vs. Azure vs. Google Cloud: Key Differences

Here’s a quick comparison to help you decide:

| Feature | AWS | Azure | Google Cloud |
| --- | --- | --- | --- |
| Integration with Databricks | Tight integration with AWS services like S3, EC2 | Native integration with Azure Synapse and Azure ML | Strong integration with Google BigQuery and Vertex AI |
| Compute Resources | EC2 instances (Spot, On-Demand) | Azure VM instances (Standard, Reserved) | Google Compute Engine VMs (Preemptible, Custom) |
| Storage | S3, Glacier, Redshift | Azure Blob Storage, Data Lake | Google Cloud Storage, BigQuery |
| Machine Learning | SageMaker, Databricks ML integration | Azure ML, Databricks ML integration | Vertex AI, Databricks ML integration |
| Pricing Model | Pay-as-you-go, Spot Instances for cost savings | Pay-as-you-go, Reserved Instances for savings | Pay-as-you-go, Preemptible VMs for savings |

Use Cases for Each Cloud Platform

- AWS: Ideal for large-scale data engineering tasks with heavy reliance on storage and compute, or if your organization is already deeply embedded in the AWS ecosystem.

- Azure: Best for enterprises that rely on Microsoft technologies, particularly those using Azure Synapse for data analytics and Azure Machine Learning for model deployment.

- Google Cloud: Excellent for AI-driven organizations looking to leverage Google’s BigQuery for analytics and Vertex AI for machine learning.

Key Factors to Consider When Choosing a Cloud for Databricks

- Existing Cloud Ecosystem: Consider which cloud platform your organization is already using for services like storage, compute, and machine learning. This can help reduce integration complexities.

- Pricing and Performance: Evaluate the total cost of ownership based on your expected workload. AWS might be the most cost-effective for large-scale compute-heavy workloads, while Google Cloud might offer the best performance for AI and ML.

- Security and Compliance: If you need specific security certifications or compliance requirements (e.g., HIPAA, GDPR), consider the platform that best meets these needs.

11. Databricks Ecosystem and Third-Party Integrations

One of the key strengths of Databricks is its ability to integrate seamlessly with various third-party tools and platforms, expanding its ecosystem. These integrations allow you to leverage the power of Databricks alongside other services you might already be using.

Integrating Databricks with BI Tools (Tableau, Power BI, etc.)

Databricks can integrate with popular business intelligence (BI) tools like Tableau and Power BI to provide powerful visual analytics. These integrations enable analysts to query Databricks' data directly and create dynamic dashboards.

- Tableau Integration: By using the JDBC/ODBC driver, Tableau users can connect directly to Databricks and visualize large-scale datasets with ease.

- Power BI Integration: Similarly, Power BI can connect to Databricks via its built-in connector, allowing you to generate reports and share insights in real time.

Real-Time Stream Processing: Databricks + Apache Kafka

For real-time data processing, integrating Apache Kafka with Databricks provides a powerful combination. You can stream data from Kafka, process it in real time using Databricks, and store results in Delta Lake for reliable, scalable data storage.

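```python
# Read streaming data from Kafka
streaming_df = (
    spark.readStream.format("kafka")
    .option("kafka.bootstrap.servers", "<broker-host>:9092")  # placeholder broker address
    .option("subscribe", "topic_name")
    .load()
)

# Process and write to Delta table; streaming writes need a checkpoint location
(
    streaming_df.writeStream.format("delta")
    .outputMode("append")
    .option("checkpointLocation", "/mnt/delta/events/_checkpoints")  # placeholder path
    .start("/mnt/delta/events")
)
```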

This setup ensures that you're processing live data efficiently and storing it in a format that enables further analysis and reporting.

Databricks and Apache Airflow for Workflow Automation

Apache Airflow is a popular tool for automating and orchestrating workflows. When integrated with Databricks, Airflow can trigger Databricks jobs, schedule notebooks, and manage complex ETL pipelines.

```python
from airflow.providers.databricks.operators.databricks import DatabricksSubmitRunOperator

# Submit a one-time Databricks notebook run from Airflow
submit_run = DatabricksSubmitRunOperator(
    task_id='submit_databricks_job',
    databricks_conn_id='databricks_default',
    existing_cluster_id='<cluster-id>',  # placeholder: the run needs a cluster to execute on
    notebook_task={'notebook_path': '/Path/To/Your/Notebook'},
    libraries=[{"jar": "dbfs:/path/to/jar"}],
)
```

This integration enhances automation and ensures smoother management of end-to-end data pipelines.

Integrating with Other Cloud-Native Tools

Databricks also works alongside other cloud-native services such as AWS Glue, Azure Data Factory, and Google Cloud Dataflow, which are used to build, orchestrate, and automate ETL workflows.

12. Best Practices and Optimization Techniques

To ensure you're getting the best performance and value out of Databricks, it's essential to follow best practices for optimization. These tips can help you scale efficiently, improve performance, and minimize costs.

Performance Tuning: How to Optimize Spark Jobs in Databricks

Optimizing Spark jobs ensures that you can handle large datasets more efficiently. Here are some tips for tuning performance:

- Data Partitioning: Properly partition your data to avoid unnecessary shuffling, which can significantly slow down performance.

- Caching: Cache intermediate datasets that are reused across several actions, such as in iterative algorithms, to avoid recomputing them. Release cached data once it is no longer needed so it does not crowd out memory for other work.

- Optimizing Spark Configurations: Adjust Spark configurations like memory allocation, number of executors, and executor cores based on your workload.
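As a rough illustration of the configuration tip above, the sketch below estimates a shuffle partition count from the amount of data being shuffled. The helper name `suggest_shuffle_partitions` and the 128 MB target per partition are assumptions chosen for illustration, not Databricks recommendations; `spark.sql.shuffle.partitions` is the standard Spark setting such a value would feed into.

```python
import math


def suggest_shuffle_partitions(
    input_bytes: int, target_partition_bytes: int = 128 * 1024**2
) -> int:
    """Estimate a partition count so each partition is near the target size.

    Hypothetical helper: the 128 MB default is an assumed rule of thumb.
    """
    if input_bytes <= 0:
        return 1
    return max(1, math.ceil(input_bytes / target_partition_bytes))


# Example: a 50 GB shuffle with the assumed 128 MB target per partition.
partitions = suggest_shuffle_partitions(50 * 1024**3)
spark_conf = {"spark.sql.shuffle.partitions": str(partitions)}
print(spark_conf)
```

In a notebook, the value could then be applied with `spark.conf.set("spark.sql.shuffle.partitions", partitions)`. Treat the result as a starting point to measure against, since the right number depends on the workload and cluster size.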

Scaling Your Databricks Clusters Efficiently

Databricks provides the flexibility to scale your clusters based on demand, but it’s crucial to do so efficiently to avoid overspending:

- Cluster Auto-Scaling: Enable auto-scaling to ensure that your clusters dynamically scale up during high-demand periods and scale down during idle times.

- Spot Instances: Take advantage of Spot Instances for batch jobs or non-critical workloads that can tolerate interruption, since the cloud provider can reclaim spot capacity at short notice.

- Use Reserved Instances: For long-running workloads, consider using reserved instances (on AWS and Azure) to reduce the cost of compute resources.
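The auto-scaling and spot options above can be expressed in a cluster definition. The sketch below builds one as a plain Python dictionary in the shape used by the Databricks Clusters API on AWS (`autoscale`, `aws_attributes`). The function name, cluster name, and the runtime and node type placeholders are assumptions for illustration; fill them in from your own workspace.

```python
import json


def build_cluster_spec(min_workers: int, max_workers: int, use_spot: bool = True) -> dict:
    """Build a cluster definition with autoscaling and optional spot capacity (AWS)."""
    if not 0 < min_workers <= max_workers:
        raise ValueError("expected 0 < min_workers <= max_workers")
    spec = {
        "cluster_name": "example-batch-cluster",  # hypothetical name
        "spark_version": "<runtime-version>",     # placeholder
        "node_type_id": "<node-type>",            # placeholder
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
    }
    if use_spot:
        spec["aws_attributes"] = {
            "first_on_demand": 1,                  # keep the first node (driver) on-demand
            "availability": "SPOT_WITH_FALLBACK",  # fall back to on-demand if spot is unavailable
        }
    return spec


spec = build_cluster_spec(min_workers=2, max_workers=8)
print(json.dumps(spec, indent=2))
```

Keeping the first node on-demand is a common way to protect the driver from spot reclamation while the workers use cheaper capacity.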

Best Practices for Data Engineering in Databricks

- Modular Pipelines: Break up your data pipelines into smaller, reusable modules to increase maintainability and reduce errors.

- Use Delta Lake: Store data in Delta Lake to take advantage of features like ACID transactions, schema enforcement, and time travel.

- Optimize Data Formats: Prefer columnar formats like Parquet for efficient storage and querying, and avoid storing large datasets as CSV files.
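To make the modular pipeline idea concrete, here is a minimal sketch in plain Python. Each step is a small function that takes records and returns records, and a runner applies the steps in order. The step names and sample records are hypothetical, and plain lists of dictionaries stand in for Spark DataFrames so the example runs anywhere.

```python
from functools import reduce
from typing import Callable, Dict, List

Rows = List[Dict[str, object]]
Step = Callable[[Rows], Rows]


def drop_missing_ids(rows: Rows) -> Rows:
    """Remove records that have no id."""
    return [row for row in rows if row.get("id") is not None]


def normalize_country(rows: Rows) -> Rows:
    """Trim and upper-case the country code on every record."""
    return [{**row, "country": str(row["country"]).strip().upper()} for row in rows]


def run_pipeline(rows: Rows, steps: List[Step]) -> Rows:
    """Apply each step in order, feeding the output of one into the next."""
    return reduce(lambda data, step: step(data), steps, rows)


# Hypothetical sample records, for illustration only.
raw = [
    {"id": 1, "country": " us "},
    {"id": None, "country": "fr"},
    {"id": 3, "country": "de"},
]
clean = run_pipeline(raw, [drop_missing_ids, normalize_country])
print(clean)
```

The same pattern carries over to PySpark, where each step would accept and return a DataFrame and could be chained with `DataFrame.transform`. Small steps like these can be unit tested on their own and reused across pipelines.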

Cost Optimization Strategies in Databricks

To optimize costs:

- Cluster Sizing: Choose appropriate cluster sizes based on the workload and adjust based on usage patterns.

- Auto-Termination: Set auto-termination for clusters that aren’t in use to avoid unnecessary costs.

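- Efficient Data Storage: Store data in Delta Lake to avoid keeping duplicate copies of the same dataset, and periodically run VACUUM to remove data files that are no longer referenced so storage costs stay under control.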
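A quick back-of-the-envelope calculation shows why auto-termination matters. The sketch below compares the monthly cost of a cluster left running around the clock with one that shuts down shortly after work stops. The function name and every figure (hourly rate, hours of use, idle time) are hypothetical inputs chosen for illustration, not real Databricks prices.

```python
def monthly_cluster_cost(
    active_hours_per_day: float,
    idle_hours_per_day: float,
    hourly_rate: float,
    days: int = 30,
) -> float:
    """Cost of a cluster billed for both its active and its idle hours."""
    return (active_hours_per_day + idle_hours_per_day) * hourly_rate * days


# Hypothetical inputs: 6 hours of real work per day at an assumed 4.00 per hour.
always_on = monthly_cluster_cost(active_hours_per_day=6, idle_hours_per_day=18, hourly_rate=4.0)

# With auto-termination after 30 idle minutes, only about 0.5 idle hours are billed per day.
auto_terminated = monthly_cluster_cost(active_hours_per_day=6, idle_hours_per_day=0.5, hourly_rate=4.0)

savings = always_on - auto_terminated
print(f"always on: {always_on:.2f}, auto-terminated: {auto_terminated:.2f}, saved: {savings:.2f}")
```

In a cluster definition, the idle timeout corresponds to the `autotermination_minutes` setting. The gap between the two figures grows with the share of the day a cluster would otherwise sit idle.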

13. Real-World Use Cases and Industry Applications

Databricks has been applied across industries to solve real-world problems and streamline data workflows. From data engineering to machine learning and real-time analytics, it helps businesses across sectors innovate and improve efficiency.

Databricks for Data Engineering and ETL Workflows

Databricks simplifies the ETL process by providing a scalable and unified platform to ingest, transform, and load data. With its Apache Spark-based engine, you can process large datasets in real time or in batch, which lets data engineers streamline workflows and reduce time-to-insight.

- Example: Companies in the tech industry often use Databricks to ingest streaming data from IoT devices, apply transformations, and load the data into a data warehouse for further analysis and reporting.

Databricks in Machine Learning and AI Projects

For machine learning (ML) projects, Databricks integrates with popular frameworks like TensorFlow, PyTorch, and scikit-learn, allowing data scientists to train and deploy models at scale. With its built-in MLflow integration, Databricks simplifies tracking experiments, managing models, and deploying them into production.

- Example: In the automotive sector, Databricks is used to train autonomous vehicle models, handling vast amounts of data and providing real-time predictions for vehicle navigation.

Using Databricks for Real-Time Analytics and Data Streaming

With Structured Streaming, Databricks enables organizations to process data in real time, providing insights into streaming datasets as they arrive. This is particularly beneficial for industries like finance, e-commerce, and social media, where immediate analysis of data is critical.

- Example: Retailers use Databricks to process clickstream data in real time, gaining insights into customer behavior and adjusting their marketing strategies accordingly.

Case Studies from Healthcare, Finance, and Retail Sectors

- Healthcare: Databricks is transforming the healthcare industry by enabling efficient analysis of patient data, improving diagnostics, and accelerating drug discovery processes. For example, a major hospital network uses Databricks to process medical imaging data and run AI models to detect diseases earlier.

- Finance: Financial institutions leverage Databricks to perform risk analysis, fraud detection, and algorithmic trading by processing massive volumes of transaction data and market information in real time.

- Retail: Major retailers use Databricks for demand forecasting, inventory management, and personalized marketing. By integrating Databricks with their customer data platforms, they can build recommendation systems and predictive models that enhance customer experience.

14. Databricks vs. Other Platforms: A Detailed Comparison

Choosing the right platform for your data engineering and machine learning needs can be challenging, especially with so many options available. Here’s how Databricks stacks up against some of the most popular platforms.

Databricks vs. Apache Spark vs. EMR: Performance, Cost, and Features

- Apache Spark: Spark is an open-source engine that can run anywhere. Databricks builds on it with a managed service, runtime optimizations, tight Delta Lake integration, and an interactive workspace for collaboration, which makes it more user-friendly and efficient for data engineering tasks.

- AWS EMR: EMR is a solid choice for running Spark workloads on AWS, but it generally leaves more cluster configuration and tuning to you and offers a less integrated workspace than Databricks. Databricks bundles notebook versioning, notebook sharing, and ML workflow tooling into a single environment.

Databricks vs. Snowflake: When to Choose One Over the Other

- Snowflake is optimized for data warehousing and analytics with an easy-to-use interface for SQL queries, while Databricks is more focused on data engineering, ETL, and machine learning. Snowflake is an excellent choice for structured data querying, but if you need complex data pipelines and real-time analytics, Databricks offers greater flexibility.

Databricks vs. Google BigQuery: What Sets Them Apart?

- BigQuery is a serverless, highly scalable data warehouse with powerful SQL capabilities, while Databricks is a more comprehensive platform for big data processing, machine learning, and real-time analytics. BigQuery is excellent for running ad-hoc queries and analyzing large datasets, but Databricks shines when it comes to advanced analytics and ML workloads.

Databricks vs. AWS Glue vs. Azure Data Factory: A Workflow Comparison

- AWS Glue and Azure Data Factory are ETL orchestration services that are often used to move and transform data across data lakes and warehouses. However, Databricks offers more flexibility and advanced processing capabilities, especially for large-scale data pipelines that require complex transformations, machine learning, or real-time analytics.

15. The Future of Databricks and Emerging Trends

Databricks is continuously evolving, and as the fields of big data and AI advance, the platform is also adapting to meet new challenges and opportunities.

Trends Shaping the Future of Big Data and AI

- Data Lakehouses: The hybrid approach of combining data lakes and data warehouses is becoming a dominant architecture in the industry. Databricks, with its Delta Lake implementation, is leading this shift, allowing organizations to manage both structured and unstructured data more efficiently.

- Real-Time Analytics: The growing demand for instant data insights is pushing the adoption of real-time analytics platforms. Databricks' integration with Apache Kafka and Structured Streaming positions it well for real-time use cases across industries.

How Databricks is Evolving with Advances in AI, Quantum Computing, and Beyond

- AI: Databricks is further integrating AI tools and frameworks to enable organizations to build and deploy more sophisticated models at scale. The future will likely see deeper integrations with deep learning frameworks, automated machine learning (AutoML), and enhanced model management.

- Quantum Computing: Although still in its early stages, quantum computing holds potential for transformative data processing. Integrations between data platforms such as Databricks and quantum computing services remain exploratory for now, but they could add powerful capabilities as the technology matures.

The Road Ahead: Upcoming Features and Integrations

As Databricks continues to innovate, expect more features around automated machine learning, AI model governance, cloud-native integrations, and expanded multi-cloud support. The platform will keep evolving to integrate cutting-edge technologies and better support enterprise needs.

How to Stay Updated with Databricks Innovations

To stay up to date, follow the Databricks blog, attend Databricks webinars, and keep an eye on the Databricks community forums for the latest product announcements, feature releases, and tutorials.