1. Introduction to AWS PrivateLink

What is AWS PrivateLink?

Imagine your AWS environment as a complex city with multiple secured buildings (VPCs). Traditionally, if you wanted to visit a service in another building, you might have to go outside onto the public streets (the internet), exposing your journey to potential risks and delays. AWS PrivateLink acts like a private, secure underground tunnel that directly connects these buildings without ever stepping outside, ensuring your data travels safely and privately.

AWS PrivateLink is a networking feature that allows secure, private access to services across VPC boundaries without exposing any traffic to the public internet. It creates seamless connectivity between your VPC and AWS services, third-party SaaS applications, or internal services hosted in other AWS accounts. This is accomplished through private IP addresses and elastic network interfaces (ENIs), which keep your data flowing entirely within the protected AWS global network.

Unlike traditional network-level connections that open broader access, PrivateLink focuses on service-level connectivity. This approach tightens security boundaries, reduces exposure, and simplifies compliance — especially important for organizations operating under strict regulatory requirements.

Why PrivateLink Exists: The Case for Private Connectivity

Traditionally, when you wanted to access services hosted in another VPC or by a SaaS provider, traffic had to traverse the public internet or rely on complex networking setups like VPC peering or VPN tunnels. This approach brought challenges, including increased exposure to security threats, route management overhead, and difficulties in scaling multi-tenant architectures.

AWS introduced PrivateLink to address these limitations. It was designed to make it easier to share services privately, securely, and at scale without requiring consumers to manage networking complexity or expose workloads to public endpoints.

Key Benefits of Using AWS PrivateLink

PrivateLink offers a number of clear advantages. First, it enhances security by keeping all traffic inside the AWS network. Because consumers connect using private IP addresses within their own VPC, there's no need to assign public IPs or configure internet-facing endpoints. This reduces the attack surface and removes reliance on NAT gateways or internet gateways.

It also simplifies network architecture. Instead of configuring full VPC-to-VPC communication, PrivateLink enables precise service-level access. This makes it particularly useful in multi-account environments or when integrating third-party SaaS providers. Furthermore, PrivateLink supports scalable and highly available connections, backed by AWS infrastructure. Private DNS integration also allows consumers to access services using friendly hostnames that resolve to internal IPs.

PrivateLink vs Other AWS Networking Services

PrivateLink is one of several networking options in AWS, alongside VPC peering and Transit Gateway. Understanding how it differs helps in selecting the right tool for the right job.

VPC Peering creates a direct network link between two VPCs, allowing full communication between resources. While simple for one-to-one connections, it doesn’t scale well in large environments. There's no transitive routing, and as the number of VPCs grows, the number of required peering connections increases rapidly. Peering also exposes entire VPC CIDR blocks, which may be undesirable in some security models.

In contrast, PrivateLink only exposes a specific service or endpoint. This makes it easier to manage, especially in hub-and-spoke models or multi-tenant architectures, where you want to grant limited, controlled access to a service without exposing your broader network.
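To make the scaling argument concrete, the following is a small arithmetic sketch. It assumes a full mesh for peering (every VPC peered with every other) and one interface endpoint per consumer VPC per shared service for PrivateLink; the function names are illustrative only.

```python
def full_mesh_peering_connections(vpc_count: int) -> int:
    """Peering connections needed so every VPC can reach every other VPC."""
    if vpc_count < 0:
        raise ValueError("vpc_count must be non-negative")
    # Each unordered pair of VPCs needs its own peering connection.
    return vpc_count * (vpc_count - 1) // 2


def privatelink_endpoints(consumer_vpc_count: int, service_count: int = 1) -> int:
    """Interface endpoints needed when each consumer VPC connects to each service."""
    if consumer_vpc_count < 0 or service_count < 0:
        raise ValueError("counts must be non-negative")
    return consumer_vpc_count * service_count


for total_vpcs in (5, 20, 50):
    # One VPC hosts the shared service; the rest are consumers.
    consumers = total_vpcs - 1
    print(
        f"{total_vpcs} VPCs: "
        f"{full_mesh_peering_connections(total_vpcs)} peering connections vs "
        f"{privatelink_endpoints(consumers)} interface endpoints"
    )
```

The peering count grows quadratically, while the endpoint count grows linearly with the number of consumers, which is the main reason the service-level model is easier to manage at scale.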

Transit Gateway is a hub-based solution for connecting multiple VPCs and on-premises networks. It's more suitable for scenarios where you need full, transitive routing between many networks. However, it's still more network-centric than service-centric. PrivateLink is preferred when your goal is to expose or consume a single service such as an API, database, or SaaS endpoint without opening broad network access or managing routing complexity.

| Feature | VPC Peering | Transit Gateway | AWS PrivateLink |
| --- | --- | --- | --- |
| Scope | Full VPC | Full VPC | Specific service |
| Routing | No transitive routing | Transitive | No routing needed |
| Internet Required | No | No (VPN attachments may use it) | Never |
| Security Granularity | Network-level | Network-level | Service-level |
| Scalability | Poor in large setups | High | High |

When to Use AWS PrivateLink?

PrivateLink is a great fit when you need secure, private connectivity to a specific service rather than full network access. It's ideal when exposing internal services across accounts, integrating with third-party SaaS providers, or accessing AWS services like S3 or KMS without traversing the internet. It's also commonly used in regulated environments where internet traffic is tightly controlled or disallowed entirely. If you're building a microservices architecture across accounts or regions, or creating a central service consumed by multiple business units, PrivateLink provides an efficient and secure method of service sharing.

2. Architecture and Core Concepts

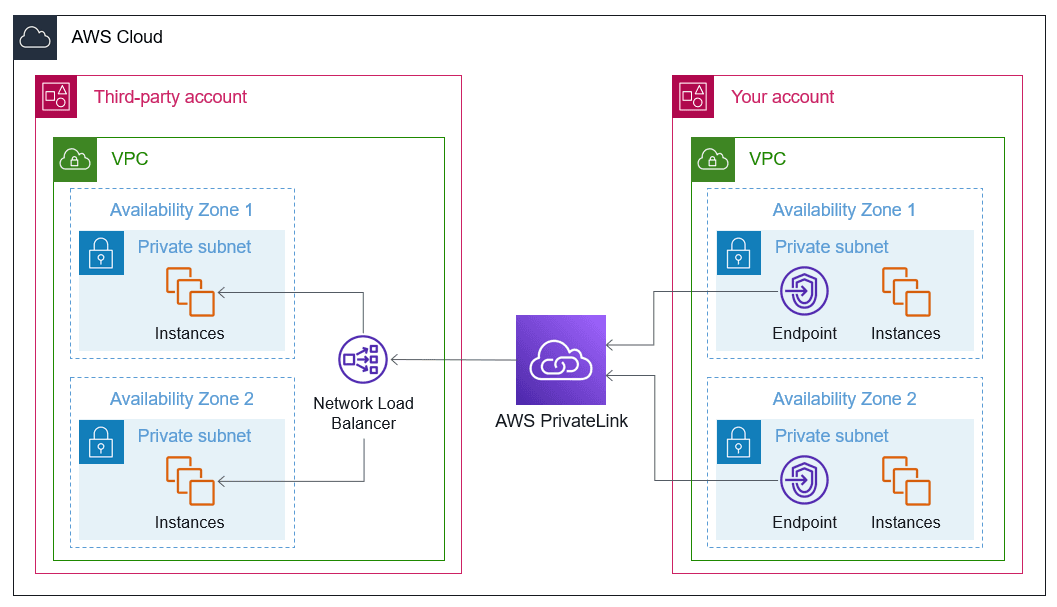

Source: AWS documentation

Core Components

At the heart of AWS PrivateLink are three components: Interface Endpoints, Endpoint Services, and Network Load Balancers.

Interface Endpoints are elastic network interfaces (ENIs) that are created in the consumer’s VPC. These endpoints allow consumers to connect to a service using private IP addresses from within their subnet. From the consumer’s point of view, this connection feels like accessing a local resource, even though it’s reaching across accounts or services.

On the provider side, the service must be exposed through an Endpoint Service, which is backed by a Network Load Balancer. The NLB handles incoming requests from consumers and forwards them to the underlying targets, such as EC2 instances or containerized services. This separation between provider and consumer allows the service owner to retain full control over backend infrastructure while offering access to external accounts or organizations.

High-Level Architecture Overview

The AWS PrivateLink architecture follows a provider-consumer model. The provider hosts the service and sets up a Network Load Balancer in front of it. This NLB is then used to create an Endpoint Service, which the provider makes available to other AWS accounts or organizations.

The consumer creates an Interface Endpoint in their VPC, targeting the provider's Endpoint Service. When traffic is sent from the consumer to the endpoint, it travels over the AWS network, not the internet, and is routed through the NLB to the target service.

This design ensures the service remains private and secure, while still being easily accessible to authorized consumers across different environments.

Traffic Flow and ENI Roles

Once the interface endpoint is created in the consumer VPC, it acts as a private gateway to the service. The endpoint is essentially an ENI with a private IP address in the consumer's subnet. When the consumer sends traffic to this IP address (typically resolved via private DNS), the traffic is routed directly to the provider's NLB over AWS’s internal network infrastructure.

Because of this ENI-based model, the provider doesn't need to know or manage the consumer's VPC structure or routing configuration. The traffic remains isolated, controlled, and efficiently routed with low latency.

Security and Isolation Model

One of PrivateLink’s key strengths is its built-in security and isolation. All communication uses private IPs within the AWS network, meaning there is no exposure to the public internet. This is especially important for workloads with compliance or regulatory requirements.

Access control is managed on both sides. On the provider side, you define which AWS accounts or principals are allowed to connect to the Endpoint Service. On the consumer side, IAM policies determine who can create and manage interface endpoints. This two-layer permission model ensures that only trusted parties can connect.

PrivateLink also works well with other AWS security features. You can encrypt data in transit using TLS, manage authentication using AWS IAM, and monitor access with CloudWatch and VPC Flow Logs. This helps organizations build strong security postures without adding complexity to their networking layer.

3. Use Cases and Deployment Models

Accessing AWS Services Privately (e.g., S3, KMS, CloudWatch)

Many AWS-managed services offer PrivateLink support, allowing customers to access them without leaving the AWS backbone. For instance, services like Amazon S3, AWS KMS, and CloudWatch can be accessed directly through interface endpoints created within your VPC. This eliminates the need for NAT gateways or internet gateways and provides a secure, low-latency path to these critical services.

In high-compliance or air-gapped environments, PrivateLink ensures that sensitive data or logs don’t leave the VPC, satisfying stricter security policies while still leveraging native AWS services.

Integrating with Third-Party SaaS Applications

PrivateLink has become a popular integration method for many SaaS providers operating on AWS. Instead of exposing their APIs or dashboards through public endpoints, these providers can offer PrivateLink-based access to their services. Customers benefit from this approach by keeping all communication within the AWS network, avoiding the internet entirely.

This model is particularly effective in regulated industries such as finance, healthcare, and government, where direct internet connectivity to external vendors may not be allowed or may require extensive risk assessments.

Exposing Internal Services to Other AWS Accounts

PrivateLink is also ideal for organizations that need to expose internal services across different AWS accounts or organizational units. Instead of configuring VPC peering or managing shared networking infrastructure, service owners can publish an Endpoint Service. Consumers in other accounts can then connect to this service using interface endpoints, without gaining access to the rest of the provider's network.

This setup supports multi-tenant platforms, internal API sharing, or centralized service architectures, where teams operate in isolated accounts but need access to core services like billing, auth, or reporting APIs.

Intra-Organization VPC Communication

In larger organizations with many VPCs, often segmented by business unit or application, PrivateLink enables simplified and secure communication without complex route tables or transitive network setups, and it keeps working even when VPC CIDR ranges overlap. You can create a hub-and-spoke architecture where shared services are exposed via PrivateLink and consumed across accounts and regions, all without internet exposure.

Advanced Use Case Patterns

PrivateLink’s flexibility allows for more sophisticated architectures as well. For example, by integrating PrivateLink with AWS Service Catalog, enterprises can automate the provisioning of pre-approved, secure services across their organization. Similarly, in microservice environments, PrivateLink works well with service meshes like AWS App Mesh, enabling secure service-to-service communication across VPCs.

PrivateLink connectivity is regional by default; where cross-region access is not available for a service, you can combine it with inter-region VPC peering or Transit Gateway to achieve this. And for additional security layers, you can place AWS WAF on an Application Load Balancer that sits behind the Network Load Balancer (WAF cannot be attached to an NLB directly), or use AWS Private Certificate Authority (CA) to issue the certificates used for TLS termination.

4. Implementation Strategy

Planning and Prerequisites

Before implementing AWS PrivateLink, careful planning is essential. This includes both network-level design and organizational considerations. At the architectural level, you need to ensure the provider and consumer VPCs are in the same region (unless you plan to use inter-region connectivity techniques). Additionally, the provider’s service must be behind a Network Load Balancer, and consumers must have subnet capacity for the interface endpoints.

Organizationally, it’s important to establish clear ownership for the service. The provider should manage the backend infrastructure and Endpoint Service configuration, while consumers should be responsible for creating and maintaining their own interface endpoints.

VPC and Account Design

In a multi-account environment, it's common to separate services into different AWS accounts for isolation and governance. Because PrivateLink doesn’t rely on routing between the two VPCs, consumer and provider CIDR blocks can overlap without breaking connectivity, unlike with peering or Transit Gateway. Keeping address ranges distinct is still good practice if you expect to add routed connectivity later.
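PrivateLink itself tolerates overlapping address ranges, but it can still be useful to know where overlaps exist, for example if routed connectivity might be added later. A minimal sketch using only the Python standard library; the VPC names and CIDR blocks are made-up examples.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of VPC names whose CIDR blocks overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


# Hypothetical inventory of VPC CIDR blocks.
vpcs = {
    "provider": "10.0.0.0/16",
    "consumer-a": "10.0.128.0/20",   # sits inside the provider range
    "consumer-b": "172.16.0.0/16",
}

for left, right in find_overlaps(vpcs):
    print(f"{left} overlaps {right}")
```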

Designing subnet placement is also critical. Interface endpoints are ENIs, so you’ll need to place them in subnets with appropriate route tables, security groups, and access to DNS resolution.

Service Ownership Considerations

Deciding who owns and operates the PrivateLink service often depends on your organization's structure. In centralized models, platform or infrastructure teams manage the service and expose it to application teams. In other cases, individual service teams may own their endpoints. Either way, roles and responsibilities around monitoring, scaling, and support should be well defined to avoid miscommunication during operations.

Publishing a PrivateLink Service

To expose a service over PrivateLink, the provider must first set up a Network Load Balancer in front of the backend targets. This NLB should span multiple Availability Zones for high availability and should have listeners on the required ports (typically TCP or TLS listeners, for example on port 443).

Once the NLB is in place, an Endpoint Service is created using it. This service acts as the PrivateLink “entry point” for consumers. You can configure this service to either accept any request or require explicit approval for each connection. AWS allows fine-grained access controls by specifying allowed AWS account IDs, AWS Organizations, or principals.
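The publishing steps above can be sketched with boto3. This is an illustration under assumptions, not a definitive implementation: the helper names, the NLB ARN, and the account ID are hypothetical, and `boto3` (a third-party package) is imported lazily so the request builders can be inspected without AWS credentials.

```python
def endpoint_service_request(nlb_arns, acceptance_required=True):
    """Build keyword arguments for create_vpc_endpoint_service_configuration."""
    return {
        "NetworkLoadBalancerArns": list(nlb_arns),
        # True means each consumer connection must be approved by the provider.
        "AcceptanceRequired": acceptance_required,
    }


def allowed_principals_request(service_id, account_ids):
    """Build keyword arguments for modify_vpc_endpoint_service_permissions."""
    return {
        "ServiceId": service_id,
        # Allowing the account root principal lets that account create endpoints.
        "AddAllowedPrincipals": [f"arn:aws:iam::{acct}:root" for acct in account_ids],
    }


def publish(nlb_arns, account_ids):
    """Create the endpoint service and allow-list consumers (calls AWS)."""
    import boto3  # third-party; requires credentials and a configured region

    ec2 = boto3.client("ec2")
    created = ec2.create_vpc_endpoint_service_configuration(
        **endpoint_service_request(nlb_arns)
    )
    config = created["ServiceConfiguration"]
    ec2.modify_vpc_endpoint_service_permissions(
        **allowed_principals_request(config["ServiceId"], account_ids)
    )
    return config["ServiceName"]


# Hypothetical identifiers, shown only to illustrate the request shapes.
print(endpoint_service_request(
    ["arn:aws:elasticloadbalancing:us-east-1:111122223333:loadbalancer/net/example/abc"]
))
print(allowed_principals_request("vpce-svc-0123456789abcdef0", ["444455556666"]))
```

Separating the request builders from the call that touches AWS keeps the access-control decisions easy to review and test.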

Managing access is critical. If you're offering a SaaS product, you’ll likely want to manually approve consumer connections or use Organization-based policies. For internal use across teams or business units, automatic approval may suffice.
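When manual approval is enabled, the approval decision can be scripted. The sketch below assumes connection records shaped like the entries boto3 returns from `describe_vpc_endpoint_connections` (keys `VpcEndpointId`, `VpcEndpointOwner`, `VpcEndpointState`); the sample records and the trusted-account list are invented for illustration.

```python
def connections_to_accept(connections, trusted_accounts):
    """Pick pending endpoint connections that come from trusted accounts."""
    trusted = set(trusted_accounts)
    return [
        conn["VpcEndpointId"]
        for conn in connections
        # Compare case-insensitively, since the exact casing of the state
        # string is an assumption here.
        if conn.get("VpcEndpointState", "").lower() == "pendingacceptance"
        and conn.get("VpcEndpointOwner") in trusted
    ]


# Made-up sample data in the assumed response shape.
sample = [
    {"VpcEndpointId": "vpce-0aaa", "VpcEndpointOwner": "444455556666",
     "VpcEndpointState": "pendingAcceptance"},
    {"VpcEndpointId": "vpce-0bbb", "VpcEndpointOwner": "999988887777",
     "VpcEndpointState": "pendingAcceptance"},
    {"VpcEndpointId": "vpce-0ccc", "VpcEndpointOwner": "444455556666",
     "VpcEndpointState": "available"},
]

to_accept = connections_to_accept(sample, ["444455556666"])
print(to_accept)
# The resulting IDs could then be passed to the EC2 client's
# accept_vpc_endpoint_connections(ServiceId=..., VpcEndpointIds=to_accept).
```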

Consuming a PrivateLink Service

On the consumer side, implementing PrivateLink starts by creating an Interface Endpoint. This is done via the AWS Console, CLI, or SDKs, and involves selecting the service, the VPC, and the subnets where the endpoint should reside.

After the endpoint is created, DNS resolution becomes key. If the provider has enabled Private DNS, the service name will resolve to the endpoint’s private IP address automatically. Otherwise, consumers must manually configure DNS settings or use custom domains. Proper security group rules and network ACLs are also necessary to allow communication between the interface endpoint and the provider’s backend.
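On the consumer side, the endpoint creation described above might look like the following boto3 sketch. The helper names and all identifiers are hypothetical; `PrivateDnsEnabled` defaults to `False` here on the assumption that the provider may not have set up a private DNS name.

```python
def interface_endpoint_request(vpc_id, service_name, subnet_ids,
                               security_group_ids, private_dns_enabled=False):
    """Build keyword arguments for the EC2 create_vpc_endpoint call."""
    if not subnet_ids:
        raise ValueError("at least one subnet is required")
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": service_name,
        # One ENI is created in each listed subnet.
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        "PrivateDnsEnabled": private_dns_enabled,
    }


def create_endpoint(request):
    """Create the interface endpoint (calls AWS) and return its ID."""
    import boto3  # third-party; requires credentials and a configured region

    ec2 = boto3.client("ec2")
    return ec2.create_vpc_endpoint(**request)["VpcEndpoint"]["VpcEndpointId"]


# Hypothetical identifiers for illustration only.
request = interface_endpoint_request(
    vpc_id="vpc-0123456789abcdef0",
    service_name="com.amazonaws.vpce.us-east-1.vpce-svc-0123456789abcdef0",
    subnet_ids=["subnet-0aaa", "subnet-0bbb"],
    security_group_ids=["sg-0ccc"],
)
print(request)
```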

Practical Walkthrough: Deploying a Private SaaS

To better illustrate the implementation, consider a scenario where you’re deploying a SaaS application using PrivateLink.

On the provider side, you would first deploy your application across multiple AZs and front it with a Network Load Balancer. Then, you'd create a VPC Endpoint Service and associate it with the NLB. If needed, configure manual approval for consumer accounts and attach appropriate permissions to control access.

On the consumer side, the customer creates an interface endpoint in their VPC. This connects directly to your service via the NLB. You’ll also need to ensure that the consumer has DNS properly configured, either through AWS-provided Private DNS or a custom setup, so they can reach the service using a consistent name.

After setup, both sides should validate connectivity using tools like curl, health check endpoints, or CloudWatch metrics. Testing should include connectivity across all AZs, DNS resolution, and permissions validation.
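One way to automate the DNS part of that validation is to confirm that the service name resolves only to addresses inside the consumer VPC. A minimal standard-library sketch; the hostname, CIDR block, and addresses are placeholders, and `resolve_ipv4` needs working DNS from inside the VPC to be meaningful.

```python
import ipaddress
import socket


def resolve_ipv4(hostname, port=443):
    """Resolve a hostname to its distinct IPv4 addresses."""
    infos = socket.getaddrinfo(
        hostname, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    return sorted({info[4][0] for info in infos})


def addresses_outside_vpc(addresses, vpc_cidr):
    """Return the addresses that do not fall inside the VPC CIDR block."""
    network = ipaddress.ip_network(vpc_cidr)
    return [addr for addr in addresses if ipaddress.ip_address(addr) not in network]


# Offline illustration with made-up addresses: the second one is public,
# which would suggest the name is not resolving to the interface endpoint.
suspicious = addresses_outside_vpc(["10.0.1.25", "52.94.5.1"], "10.0.0.0/16")
print(suspicious)

# From an instance in the consumer VPC, the live check could be:
#   addresses_outside_vpc(resolve_ipv4("service.example.internal"), "10.0.0.0/16")
```

An empty result means every resolved address belongs to the VPC, which is what you would expect when Private DNS points at the endpoint ENIs.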

5. Operations, Monitoring, and Cost

Availability and Fault Tolerance

PrivateLink is built on AWS infrastructure and supports high availability by design. However, you still need to architect for redundancy. For instance, the provider should deploy the NLB across multiple Availability Zones and ensure that backend targets are also distributed to avoid single points of failure.

Health checks configured on the NLB are crucial. They ensure traffic is only routed to healthy targets and allow for automatic failover in case of instance or zone failure. On the consumer side, placing interface endpoints in multiple subnets and AZs ensures resilience in case of zonal disruption.

Monitoring and Logging

Effective monitoring is necessary to keep PrivateLink connections healthy and performant. VPC Flow Logs can be enabled to track traffic to and from interface endpoints, providing insight into usage patterns, dropped packets, or unusual behavior.
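As an illustration of what flow log analysis might look like, the sketch below parses records in the default (version 2) flow log format and lists rejected traffic for one endpoint ENI. The sample records and the ENI ID are made up.

```python
# Field order of the default VPC Flow Logs record format.
FIELDS = (
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
)


def parse_record(line):
    """Split one default-format flow log line into a field dictionary."""
    parts = line.split()
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    return dict(zip(FIELDS, parts))


def rejected_for_eni(lines, eni_id):
    """Return parsed records for the given ENI whose action is REJECT."""
    records = (parse_record(line) for line in lines if line.strip())
    return [
        rec for rec in records
        if rec["interface_id"] == eni_id and rec["action"] == "REJECT"
    ]


# Made-up sample records for an endpoint ENI listening on port 443.
sample_lines = [
    "2 123456789012 eni-0abc1234 10.0.1.15 10.0.2.40 49152 443 6 10 840 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0abc1234 10.0.3.99 10.0.2.40 49153 443 6 1 40 1700000000 1700000060 REJECT OK",
    "2 123456789012 eni-0def5678 10.0.1.15 10.0.2.41 49154 443 6 1 40 1700000000 1700000060 REJECT OK",
]

for rec in rejected_for_eni(sample_lines, "eni-0abc1234"):
    print(rec["srcaddr"], "->", rec["dstaddr"], "port", rec["dstport"])
```

Rejected records for an endpoint ENI usually point to a security group or network ACL that does not allow the client.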

Additionally, integrating with Amazon CloudWatch allows you to set alarms and dashboards based on metrics like NLB active flow count, processed bytes, and healthy host count, or the connection and byte counts reported for the endpoint itself. For internal services, consider implementing application-level logging and tracing (e.g., AWS X-Ray) to get end-to-end visibility.

Pricing and Cost Optimization

PrivateLink pricing is based on two main components: interface endpoint hourly charges and data processing fees. You pay an hourly rate for each interface endpoint, as well as a per-GB charge for data that flows through the endpoint.

| Cost Component | Description |
| --- | --- |
| Interface Endpoint | Hourly charge per endpoint, per AZ |
| Data Processing | Per-GB data charge between interface and service |

To optimize cost, consider consolidating traffic through fewer endpoints when possible. For example, a shared services model might allow multiple applications to use a single endpoint. You can also reduce data transfer by compressing payloads or eliminating unnecessary API calls.

Avoid placing endpoints in all AZs unless required for HA, and monitor unused or idle endpoints to prevent unnecessary hourly charges.
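The pricing model above reduces to simple arithmetic. In the sketch below the rates are passed in as parameters, and the values used in the example are placeholders rather than quoted AWS prices; check the current pricing page for your region. The 730-hour month is also an assumption.

```python
def monthly_endpoint_cost(endpoints, azs_per_endpoint, gb_processed,
                          hourly_rate, per_gb_rate, hours=730):
    """Estimate monthly cost: hourly charge per endpoint per AZ plus data processing."""
    hourly_charges = endpoints * azs_per_endpoint * hours * hourly_rate
    data_charges = gb_processed * per_gb_rate
    return hourly_charges + data_charges


# Placeholder rates for illustration only.
HOURLY_RATE = 0.01   # assumed USD per endpoint per AZ per hour
PER_GB_RATE = 0.01   # assumed USD per GB processed

three_az = monthly_endpoint_cost(1, 3, 1000, HOURLY_RATE, PER_GB_RATE)
two_az = monthly_endpoint_cost(1, 2, 1000, HOURLY_RATE, PER_GB_RATE)

print(f"3 AZs: ${three_az:.2f} per month")
print(f"2 AZs: ${two_az:.2f} per month")
```

Because the hourly charge scales with the number of AZs, dropping an AZ that is not needed for availability lowers the fixed portion of the bill, while the data processing portion stays the same.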

6. Security, Best Practices, and Limitations

Designing for Scale and Security

As PrivateLink adoption grows in your environment, you’ll want to design with scale and security in mind. Services should be modular and exposed with the least privilege principle. Use interface endpoint tags and resource policies to track ownership and automate governance.

IAM and resource policies play a central role. Consumers should only have permissions to create and manage their own endpoints, and providers should limit which accounts can connect to their services. By carefully scoping these permissions, you reduce the blast radius and prevent unauthorized access.
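A consumer-side IAM policy that limits endpoint creation to approved services could be generated as follows. This is a sketch under assumptions: it relies on the `ec2:VpceServiceName` condition key as the author of this example understands it, and the region, account ID, and service name are placeholders. Validate the result against the IAM documentation before using it.

```python
import json


def endpoint_creation_policy(region, account_id, allowed_service_names):
    """Build an IAM policy allowing endpoint creation only for listed services."""
    base = f"arn:aws:ec2:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Supporting resources the endpoint is attached to.
                "Effect": "Allow",
                "Action": "ec2:CreateVpcEndpoint",
                "Resource": [
                    f"{base}:vpc/*",
                    f"{base}:subnet/*",
                    f"{base}:security-group/*",
                    f"{base}:route-table/*",
                ],
            },
            {
                # The endpoint itself, restricted by service name.
                "Effect": "Allow",
                "Action": "ec2:CreateVpcEndpoint",
                "Resource": [f"{base}:vpc-endpoint/*"],
                "Condition": {
                    "StringEquals": {
                        "ec2:VpceServiceName": list(allowed_service_names)
                    }
                },
            },
        ],
    }


# Placeholder values for illustration only.
policy = endpoint_creation_policy(
    "us-east-1",
    "444455556666",
    ["com.amazonaws.vpce.us-east-1.vpce-svc-0123456789abcdef0"],
)
print(json.dumps(policy, indent=2))
```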

Minimizing Blast Radius and Exposure

To protect against misconfiguration or compromise, limit what each PrivateLink endpoint can access. Avoid exposing full backend networks and consider deploying additional layers of authentication or authorization at the application level.

It’s also a best practice to use separate subnets and security groups for interface endpoints, giving you better control over access and auditability. Incorporating AWS Config rules and automated compliance checks can help enforce these standards.

PrivateLink in Multi-Account Architectures

In organizations that follow a multi-account strategy, PrivateLink becomes a powerful tool to connect services securely without the complexity of peering or Transit Gateways. Centralized services (like logging, monitoring, or auth services) can be hosted in a single account and exposed to others using interface endpoints.

To make this scalable, consider building automation around PrivateLink setup using AWS Service Catalog, Infrastructure as Code (IaC), or AWS Control Tower customizations. This allows new teams or accounts to onboard quickly while following governance standards.

Limitations and Trade-Offs

While PrivateLink offers clear benefits, it's not without trade-offs. There are protocol limitations: for instance, endpoint services have traditionally carried only TCP traffic, which may be a blocker for certain use cases. Workloads that depend on UDP or ICMP, including many VPN protocols, may therefore not be usable over PrivateLink.

Regional availability is another consideration. PrivateLink is a regional service, meaning consumer and provider endpoints must exist in the same region. While you can build workarounds using inter-region VPC peering, it adds complexity and cost.

Architecturally, PrivateLink may not always be the best fit. For full mesh VPC communication or when transitive routing is needed, Transit Gateway or VPC Peering may be more suitable. Also, DNS behavior can be tricky. If you rely on custom domains or multiple PrivateLink connections for the same service, you’ll need to carefully manage DNS overrides to avoid name resolution issues.

Integration Challenges

When working with third-party SaaS providers, integration via PrivateLink can come with additional hurdles. These include aligning on service names, resolving DNS configuration mismatches, and ensuring both sides are using compatible VPC architectures.

Naming conflicts and route overlaps are less of a concern than with peering, but they can still occur if DNS records are not isolated properly. Testing and validating the setup in a staging environment before rolling out to production is strongly recommended.