1. Introduction

1.1 Why AI Hardware Matters Now

Artificial Intelligence (AI) is everywhere — from chatbots and recommendation engines to voice assistants and tools like ChatGPT or Google Gemini. But behind all of this smart technology is something that often gets overlooked: the hardware that makes it all possible.

Traditional CPUs are great for general tasks, but they struggle with the massive, complex calculations needed for deep learning. As AI models have gotten bigger and more powerful, we’ve needed hardware that can keep up. That’s where GPUs and TPUs come in.

- GPUs (Graphics Processing Units), originally built for video games, turned out to be great for training AI models.

- TPUs (Tensor Processing Units), built by Google, are custom-designed to run AI workloads more efficiently, especially at scale.

Now, with AI models being used in real-time applications around the world, there's a big shift happening — toward what Google calls the “age of inference.” This article breaks down how GPUs and TPUs compare, how they’ve evolved, and why the newest TPU, called Ironwood, might be a game-changer.

1.2 The Role of TPUs and GPUs in AI

AI models go through two main stages:

- Training – when the model learns from data.

- Inference – when the model makes predictions or answers based on what it’s learned.
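Here is a minimal sketch in plain Python that makes the distinction concrete. It assumes a toy one-parameter model y = w · x; the model, data, and learning rate are illustrative and not taken from any real system. Training loops over the data many times and updates the weight. Inference is only the forward pass, with the learned weight frozen.

```python
# Toy one-parameter model y = w * x (illustrative only).

def predict(w: float, x: float) -> float:
    """Inference: a forward pass with fixed, already-learned weights."""
    return w * x


def train(data, w: float = 0.0, lr: float = 0.05, epochs: int = 200) -> float:
    """Training: repeated forward passes plus gradient updates to w."""
    for _ in range(epochs):
        for x, y in data:
            error = predict(w, x) - y
            w -= lr * 2 * error * x  # gradient of (w*x - y)**2 with respect to w
    return w


if __name__ == "__main__":
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy samples from y = 2x
    w = train(data)                              # compute-heavy, done once
    print(round(w, 3), round(predict(w, 10.0), 2))  # cheap, repeated per request
```

Training revisits every example many times and must compute gradients. Inference runs once per request. That difference is why the two stages stress hardware in different ways.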

Each stage has different hardware needs:

| Hardware | Built For | What It’s Best At |
| --- | --- | --- |
| GPU | General-purpose parallel compute | Flexible, great for training |
| TPU | AI-specific tasks | Very efficient, great for inference and (since TPU v2) training |

As of 2025, both GPUs and TPUs are essential to modern AI. But their designs, strengths, and best use cases are quite different — and knowing those differences helps you choose the right tool for your AI workload.

2. The Evolution of AI Compute

2.1 How GPUs Became Essential for AI

At first, GPUs were used mostly for graphics — things like rendering video games and 3D effects. But around the mid-2000s, researchers realized GPUs were also really good at handling parallel math operations, which are also used in AI.

This led to the idea of GPGPU (General-Purpose computing on GPUs). In 2006, NVIDIA introduced CUDA, which gave developers a general-purpose way to program GPUs. In 2012, AlexNet was trained on two NVIDIA GPUs using CUDA, and its breakthrough result helped kick off the deep learning revolution.

2.2 Deep Learning Creates Demand for New Chips

As deep learning took off with models like AlexNet, ResNet, and later transformers, the demands on hardware increased. GPUs were powerful, but they weren’t built specifically for machine learning, so energy use and scalability became growing concerns.

That opened the door for specialized chips designed just for AI. This is where Google’s TPUs came in.

2.3 Google’s TPU: A Chip Built for AI

Google introduced its first TPU in 2016 to help run AI tasks more efficiently. TPUs are ASICs (Application-Specific Integrated Circuits), which means they’re built for one job — in this case, tensor operations (the math behind deep learning).

Each new TPU generation has added more speed, memory, and efficiency:

| Year | TPU Version | What It Added |
| --- | --- | --- |
| 2016 | TPU v1 | Inference-only chip used inside Google |
| 2017 | TPU v2 | Added training support and the first TPU pods; launched on Google Cloud |
| 2018 | TPU v3 | Faster, with liquid cooling and larger pods |
| 2020 | TPU v4 | Better memory, more energy-efficient |
| 2023 | TPU v5e / v5p | Cost-effective training and inference at scale |
| 2024 | Trillium (v6) | Big performance jump: 4.7x peak compute per chip vs. v5e |
| 2025 | Ironwood (v7) | Inference-first design for large-scale serving |

2.4 Milestones That Changed the Game

Several events helped bring AI hardware into the spotlight:

- AlphaGo (2016): Google’s DeepMind used TPUs to beat a world champion at Go — showing the world what custom AI hardware could do.

- Cloud TPU launch (2017): Developers outside Google could now use TPUs for training.

- LLMs (2018–2024): Huge models like GPT-3, PaLM, and Gemini drove demand for more and better hardware.

These moments helped prove that hardware matters as much as the models themselves.

2.5 A New Focus: Inference at Scale

In the past, most AI hardware was focused on training big models. But now that these models are running real-time apps used by billions of people, the focus is shifting to inference.

Inference means the model is doing its job — answering questions, detecting spam, recommending videos — in real time. That means hardware needs to be:

- Fast (low latency)

- Scalable (serve millions or billions of users)

- Efficient (especially in huge data centers)
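Low latency and high throughput pull against each other. Batching requests spreads fixed per-pass costs across more requests and raises throughput, but every request in a batch waits for the whole batch. The sketch below assumes a simple linear cost model with made-up constants (`FIXED_MS` and `PER_REQUEST_MS` are hypothetical, not measurements of any chip). It finds the largest batch that fits a latency budget.

```python
# Hypothetical linear cost model for one batched forward pass.
FIXED_MS = 8.0        # assumed per-batch overhead (e.g., streaming weights)
PER_REQUEST_MS = 0.5  # assumed incremental cost per request in the batch


def batch_latency_ms(batch_size: int) -> float:
    """Time for one forward pass over a batch; every request waits this long."""
    return FIXED_MS + batch_size * PER_REQUEST_MS


def throughput_rps(batch_size: int) -> float:
    """Requests completed per second when batches run back to back."""
    return batch_size / (batch_latency_ms(batch_size) / 1000.0)


def largest_batch_within(latency_budget_ms: float) -> int:
    """Largest batch size whose latency fits the budget (0 if none does)."""
    b = 0
    while batch_latency_ms(b + 1) <= latency_budget_ms:
        b += 1
    return b


if __name__ == "__main__":
    for b in (1, 8, 32):
        print(b, batch_latency_ms(b), round(throughput_rps(b)))
    print("max batch for a 20 ms budget:", largest_batch_within(20.0))
```

Faster, more efficient hardware shrinks both constants. A server can then run larger batches, and so serve more requests per second, without exceeding its latency budget.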

Google’s Ironwood TPU, launched in 2025, was built specifically for this. It’s designed for real-time reasoning, handling tasks like search, translation, and AI agents that respond instantly.

3. Understanding the Hardware: TPU and GPU Basics

3.1 What Is a GPU?

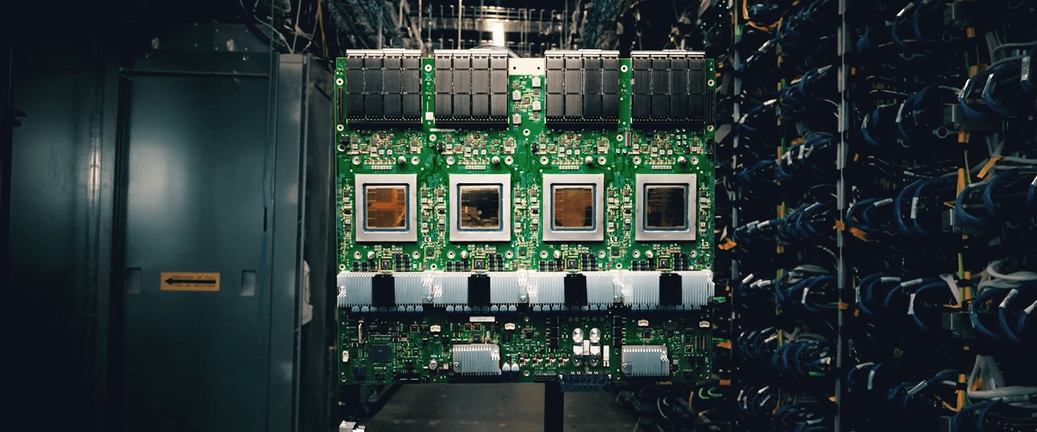

Source: Nvidia

A GPU (Graphics Processing Unit) is a parallel processor originally developed to accelerate gaming and 3D rendering. Its architecture — thousands of cores running in parallel — makes it ideal for workloads like training neural networks, where matrix operations dominate.

NVIDIA GPUs like the A100 and H100 are now AI workhorses, supported by a mature software stack (CUDA, cuDNN) and frameworks like PyTorch and TensorFlow.

3.2 What Is a TPU?

Source: Google

A TPU (Tensor Processing Unit) is a specialized chip designed by Google to accelerate machine learning workloads — particularly the tensor algebra used in deep learning.

Unlike GPUs, TPUs are not general-purpose. They’re designed to do one thing exceptionally well: run ML models with high efficiency. TPU v1 was inference-only, and every generation since TPU v2 supports both training and inference. Ironwood is designed primarily for inference and is optimized to the extreme for serving large models.

3.3 Key Architectural Differences

| Attribute | GPU | TPU |
| --- | --- | --- |
| Purpose | General-purpose compute | ML-specific acceleration |
| Core Architecture | Thousands of programmable CUDA cores, plus Tensor Cores for matrix math | Systolic arrays for matrix ops |
| Flexibility | High (graphics, AI, scientific computing) | Low (tailored for AI workloads) |
| Energy Efficiency | Moderate | High, especially for inference |
| Software Ecosystem | CUDA, PyTorch, TensorFlow, JAX | TensorFlow, JAX, PyTorch (via PyTorch/XLA), all compiled with XLA |

Takeaway: GPUs are the Swiss Army knife; TPUs are the scalpel, focused and efficient, and increasingly competitive in inference-heavy environments.

3.4 Where They're Used Today

| Use Case | GPU | TPU |
| --- | --- | --- |
| Training large LLMs | ✅ Common | ✅ Google-scale |
| Inference for real-time apps | ✅ Widely used | ✅ Optimized (Ironwood) |
| Graphics / rendering | ✅ Origin story | ❌ Not designed for |
| Scientific simulations | ✅ Strong | ❌ Limited |
| Large-scale recommendation engines | ✅ With tuning | ✅ With SparseCore |
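The recommendation row depends on sparse embedding lookups. Each example names a handful of IDs out of a very large table, and the model gathers and pools the matching rows. Below is a minimal sketch of that access pattern in plain Python. The table is tiny and hypothetical; production tables can have millions to billions of rows. The sketch shows why this work is bound by memory access rather than matrix math, which is the kind of work SparseCore is meant to offload.

```python
import random


def make_table(num_rows: int, dim: int, seed: int = 0):
    """Hypothetical embedding table: one dense vector per categorical ID."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_rows)]


def embedding_bag(table, ids, mode: str = "sum"):
    """Gather the rows named by `ids` and pool them into one vector.

    The reads are irregular and data-dependent, with very little arithmetic
    per byte fetched, so memory access is the bottleneck.
    """
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:
        row = table[i]
        for d in range(dim):
            pooled[d] += row[d]
    if mode == "mean" and ids:
        pooled = [v / len(ids) for v in pooled]
    return pooled


if __name__ == "__main__":
    table = make_table(num_rows=1000, dim=4)
    clicked_items = [17, 402, 958]  # hypothetical IDs from one user's history
    print(embedding_bag(table, clicked_items, mode="mean"))
```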

4. Architectural Comparison: TPU vs GPU

Understanding the architectural differences between TPUs and GPUs is key to evaluating their performance, efficiency, and use cases in real-world AI workloads. While both aim to accelerate machine learning tasks, their compute design, memory structure, and power usage vary significantly.

4.1 Compute Design (Systolic Arrays vs CUDA Cores)

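GPUs are designed around thousands of small processing cores (CUDA cores in NVIDIA GPUs), enabling high parallelism. These cores are programmable and flexible, supporting a wide range of computations beyond AI. Recent NVIDIA data-center GPUs such as the A100 and H100 also include Tensor Cores. These are dedicated units for the matrix multiply-accumulate operations at the heart of deep learning.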

TPUs, in contrast, use systolic arrays, a hardware design that passes data rhythmically across a grid of interconnected processing elements. This structure is highly optimized for tensor operations like matrix multiplication — the foundation of deep learning.
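The data flow described above can be simulated in a few lines. This is a minimal, cycle-level sketch of an output-stationary systolic array, written in plain Python for clarity. "Output-stationary" means each processing element keeps one output value and accumulates into it. The dataflow, array size, and function name are illustrative choices. Real TPU matrix units use their own dataflow and much larger fixed-size arrays.

```python
def systolic_matmul(A, B):
    """Cycle-level simulation of an output-stationary systolic array.

    Processing element (i, j) owns C[i][j]. Row i of A streams in from the
    left, delayed by i cycles; column j of B streams in from the top, delayed
    by j cycles. Each cycle every element multiplies the pair it receives,
    adds the product to its accumulator, and passes A right and B down.
    """
    n, k, m = len(A), len(B), len(B[0])
    assert all(len(row) == k for row in A), "inner dimensions must match"
    acc = [[0.0] * m for _ in range(n)]
    a_reg = [[0.0] * m for _ in range(n)]  # value each element passes right
    b_reg = [[0.0] * m for _ in range(n)]  # value each element passes down
    cycles = n + m + k - 2  # cycles until the last operand pair meets
    for t in range(cycles):
        new_a = [[0.0] * m for _ in range(n)]
        new_b = [[0.0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:
                    p = t - i  # skewed feed into the left edge
                    new_a[i][j] = A[i][p] if 0 <= p < k else 0.0
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:
                    p = t - j  # skewed feed into the top edge
                    new_b[i][j] = B[p][j] if 0 <= p < k else 0.0
                else:
                    new_b[i][j] = b_reg[i - 1][j]
                acc[i][j] += new_a[i][j] * new_b[i][j]
        a_reg, b_reg = new_a, new_b  # all elements update in lockstep
    return acc, cycles


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    C, cycles = systolic_matmul(A, B)
    print(C, "in", cycles, "cycles")
```

Each operand is read from memory once, at the array's edge, and is then reused as it moves across the grid. That reuse is where the efficiency on dense matrix multiplication comes from.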

| Feature | GPU (CUDA Cores) | TPU (Systolic Arrays) |
| --- | --- | --- |
| Design Purpose | General-purpose parallelism | Matrix/tensor operation efficiency |
| Programmability | High | Low (more fixed-function) |
| Efficiency (ML workloads) | Moderate | High |
| Peak Utilization | Lower (depends on task) | Higher (for dense matrix ops) |

4.2 Memory Hierarchy and Bandwidth

Efficient memory access is crucial in AI workloads where large tensors need to be quickly moved and processed.

- GPUs use a memory hierarchy (registers, shared memory, and caches on the chip, plus off-chip global memory) to move data to the cores. On data-center parts like the H100, that global memory is High Bandwidth Memory (HBM).

- TPUs also pair their compute with HBM in the same package. Later generations like Ironwood substantially increase HBM capacity and bandwidth, which reduces memory bottlenecks and increases throughput.

| Attribute | GPU (NVIDIA H100 SXM) | TPU (Ironwood) |
| --- | --- | --- |
| Memory per chip | 80 GB | 192 GB |
| Bandwidth per chip | ~3.35 TBps | 7.2 TBps |
| Memory type | HBM3 | HBM (version varies by generation) |
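For serving, bandwidth often matters more than raw FLOPs. When a model generates one token at a time, it typically has to read all of its weights from HBM for each step. Memory bandwidth therefore sets a floor on step time. Here is a back-of-envelope sketch using the bandwidth and capacity figures from the table. It assumes a hypothetical 70-billion-parameter model stored at 1 byte per parameter, and it ignores KV-cache traffic, batching, overlap, and multi-chip sharding.

```python
def min_step_time_ms(param_count: float, bytes_per_param: float,
                     bandwidth_tbps: float) -> float:
    """Lower bound on one decode step if every weight is read once from HBM.

    bandwidth_tbps is in terabytes per second (TBps), as in the table above.
    """
    bytes_read = param_count * bytes_per_param
    return bytes_read / (bandwidth_tbps * 1e12) * 1e3


def fits_in_hbm(param_count: float, bytes_per_param: float, hbm_gb: float) -> bool:
    """Whether the weights alone fit in one chip's HBM (decimal GB)."""
    return param_count * bytes_per_param <= hbm_gb * 1e9


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model, 1 byte/param (e.g., 8-bit)
    for name, tbps, gb in [("H100", 3.35, 80), ("Ironwood", 7.2, 192)]:
        print(name,
              f"{min_step_time_ms(params, 1, tbps):.1f} ms/step floor,",
              "fits at 16-bit:", fits_in_hbm(params, 2, gb))
```

Under these assumptions, Ironwood's higher bandwidth roughly halves the per-step floor. Its larger HBM also lets a 16-bit copy of the same weights fit on a single chip.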

4.3 Interconnects and Communication

Modern AI models often run across hundreds or thousands of chips, making fast inter-chip communication essential.

- GPUs use technologies like NVLink and NVSwitch for multi-GPU setups.

- TPUs use Google’s custom Inter-Chip Interconnect (ICI), enabling high-bandwidth, low-latency communication across massive pods of TPUs.

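| Feature | GPU (NVLink/NVSwitch) | TPU (ICI) |
| --- | --- | --- |
| Bandwidth per chip | Up to 900 GBps (H100, NVLink 4) | 1.2 Tbps bidirectional (Ironwood, as reported) |
| Fast-interconnect scale | 8 GPUs per server; up to 256 GPUs with an NVLink Switch System | Up to 9,216 TPUs per pod |
| Latency optimization | Moderate | Highly optimized for synchronization |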
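The two bandwidth figures in this table use different units: GBps (gigabytes per second) for NVLink and Tbps (terabits per second) for ICI. Vendors also differ on whether a figure is per direction or bidirectional, and per link or per chip. As written, the numbers are not directly comparable. Here is a minimal sketch of the unit conversion. It assumes decimal prefixes and takes the reported Ironwood figure literally as terabits per second; check Google's current spec sheet before relying on that reading.

```python
def gbytes_to_tbits(gbytes_per_s: float) -> float:
    """Gigabytes/s to terabits/s (decimal prefixes, 8 bits per byte)."""
    return gbytes_per_s * 8 / 1000


def tbits_to_gbytes(tbits_per_s: float) -> float:
    """Terabits/s to gigabytes/s (decimal prefixes, 8 bits per byte)."""
    return tbits_per_s * 1000 / 8


def transfer_ms(payload_gb: float, gbytes_per_s: float) -> float:
    """Idealized time to move a payload, ignoring latency and protocol overhead."""
    return payload_gb / gbytes_per_s * 1000


if __name__ == "__main__":
    print("NVLink 900 GBps =", gbytes_to_tbits(900), "Tbps")
    print("ICI 1.2 Tbps =", tbits_to_gbytes(1.2), "GBps (if read literally)")
```

Read literally, the NVLink figure is about 6x the ICI figure. That is the opposite of what the raw numbers suggest at a glance. The gap may reflect per-link versus per-chip or per-direction conventions, which is why units and measurement conventions should be normalized before drawing conclusions.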

4.4 Software Ecosystem

- GPUs have a mature ecosystem, primarily driven by CUDA, with wide support in frameworks like PyTorch, TensorFlow, and JAX.

- TPUs are deeply integrated with Google’s ML stack, including TensorFlow, JAX, and the Pathways runtime.

| Attribute | GPU | TPU |
| --- | --- | --- |
| Ecosystem maturity | Extensive | Growing (Google-focused) |
| Framework support | PyTorch, TensorFlow, JAX, more | TensorFlow, JAX, PyTorch (via PyTorch/XLA); Pathways runtime for large-scale jobs |
| Learning curve | Moderate | Higher (but improving) |

4.5 Power and Thermal Efficiency

As compute demands rise, so do concerns about energy consumption and heat dissipation.

TPUs have consistently aimed for higher performance-per-watt compared to GPUs, particularly in inference. Ironwood, Google’s latest TPU, offers up to 2x the perf/watt of its predecessor, Trillium, and is cooled via advanced liquid cooling solutions.

| Metric | GPU (e.g., NVIDIA H100) | TPU (Ironwood) |
| --- | --- | --- |
| Cooling method | Air or liquid (data center) | Liquid (standard) |
| Perf/watt (relative) | Varies by precision and workload | ~2x Trillium; nearly 30x the first Cloud TPU (2018) |
| Environmental impact | High (large deployments) | Lower (optimized designs) |

5. Performance Breakdown

This section explores how TPUs and GPUs compare across various dimensions of real-world AI performance.

5.1 Training Performance

GPUs are still the most commonly used hardware for training, particularly for researchers and developers using PyTorch. NVIDIA's H100 GPU, for example, is optimized for mixed-precision training and supports large-scale multi-GPU setups.

TPUs, starting from TPU v2, also support training. Google’s Pathways stack enables large-scale training across pods of thousands of TPUs, and Google trained massive models such as PaLM and Gemini on TPUs.
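On either platform, large-scale training usually starts with data parallelism. Each chip holds a full copy of the weights and processes its own slice of the batch. The chips then average their gradients with a collective all-reduce over the interconnect (NVLink or ICI). The sketch below simulates this in plain Python for a toy one-parameter model. The function names are hypothetical; real frameworks such as JAX and PyTorch provide their own collective primitives.

```python
def local_gradient(w: float, shard) -> float:
    """Mean-squared-error gradient for y = w * x on one device's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    """Stand-in for an all-reduce: every device ends with the global mean."""
    avg = sum(values) / len(values)
    return [avg] * len(values)


def data_parallel_step(w: float, batch, num_devices: int, lr: float = 0.01):
    """One synchronous data-parallel step; returns each replica's new weight."""
    if len(batch) % num_devices:
        raise ValueError("batch must split evenly across devices")
    size = len(batch) // num_devices
    shards = [batch[d * size:(d + 1) * size] for d in range(num_devices)]
    grads = [local_gradient(w, s) for s in shards]  # parallel on real hardware
    synced = all_reduce_mean(grads)                  # interconnect traffic
    return [w - lr * g for g in synced]              # replicas stay identical


if __name__ == "__main__":
    batch = [(float(x), 3.0 * x) for x in range(1, 9)]  # toy data from y = 3x
    print(data_parallel_step(0.0, batch, num_devices=4))
```

The shards are equal in size, so the averaged gradient equals the full-batch gradient. Four simulated devices therefore produce the same update as one. The all-reduce is the step whose cost grows with model size and chip count, which is why interconnect bandwidth and latency dominate at pod scale.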

5.2 Inference Performance

Designed specifically for inference at scale, Ironwood TPUs offer low latency, high throughput, and efficient performance across workloads like search ranking, language models, and recommendations.

GPUs also support inference well, especially with TensorRT optimizations. However, they typically consume more power and require more tuning to match TPU-level efficiency at scale.

5.3 Scalability and Parallelism

Both TPUs and GPUs offer robust scalability:

- GPUs scale via NVLink/NVSwitch and are often used in DGX systems or supercomputers.

- TPUs scale through pods, tightly integrated clusters of thousands of chips. Ironwood supports up to 9,216 TPUs per pod, with total peak compute reaching 42.5 ExaFLOPs (FP8).

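| Metric | GPU Cluster (H100) | TPU Pod (Ironwood) |
| --- | --- | --- |
| Max chips per cluster | Up to 256 in one NVLink domain; thousands over InfiniBand or Ethernet | 9,216 |
| Peak compute | ~1 ExaFLOP (FP8, dense) per ~500 GPUs | 42.5 ExaFLOPs (FP8) |
| Network latency | Moderate | Low (synchronous ICI design) |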
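The peak-compute figures come from simple multiplication. The short sketch below assumes per-chip FP8 peaks of 4,614 TFLOPs for Ironwood (Google's reported figure) and 1,979 TFLOPS for an H100 SXM. The H100 figure is NVIDIA's dense FP8 Tensor Core spec; its headline 3,958 figure assumes sparsity. Both are theoretical peaks, and sustained throughput in real jobs is lower.

```python
import math

IRONWOOD_FP8_TFLOPS = 4614  # reported per-chip peak
H100_SXM_FP8_TFLOPS = 1979  # dense FP8 peak (no structured sparsity)


def peak_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate theoretical peak: chips x per-chip peak, in ExaFLOPs."""
    return chips * tflops_per_chip / 1e6


def chips_for(target_exaflops: float, tflops_per_chip: float) -> int:
    """Chips needed to reach a target aggregate peak."""
    return math.ceil(target_exaflops * 1e6 / tflops_per_chip)


if __name__ == "__main__":
    print(f"Ironwood pod: {peak_exaflops(9216, IRONWOOD_FP8_TFLOPS):.1f} EFLOPs")
    print("H100s for 1 EFLOP (FP8, dense):", chips_for(1.0, H100_SXM_FP8_TFLOPS))
```

Peak numbers describe how much arithmetic a system could do per second, not how fast a given model runs. Utilization, precision, and communication overhead determine that.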

5.4 Performance-per-Watt Analysis

Energy efficiency is becoming a competitive differentiator:

- TPUs, by design, consume less power for AI-specific workloads. Ironwood is reportedly nearly 30x more power-efficient than Google’s first Cloud TPU (2018).

- GPUs, while powerful, typically offer lower performance-per-watt unless fine-tuned with lower precision and energy-saving techniques.
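Performance-per-watt translates directly into energy per unit of work. Below is a minimal sketch that computes joules per generated token and kilowatt-hours per million tokens. The power and throughput numbers are hypothetical, not measurements of any specific chip.

```python
JOULES_PER_KWH = 3.6e6


def joules_per_token(avg_power_watts: float, tokens_per_second: float) -> float:
    """Watts are joules per second; divide by tokens per second."""
    return avg_power_watts / tokens_per_second


def kwh_per_million_tokens(avg_power_watts: float, tokens_per_second: float) -> float:
    """Energy for one million tokens at a steady power draw and throughput."""
    return joules_per_token(avg_power_watts, tokens_per_second) * 1e6 / JOULES_PER_KWH


if __name__ == "__main__":
    baseline = kwh_per_million_tokens(700, 1000)  # hypothetical accelerator
    doubled = kwh_per_million_tokens(700, 2000)   # same power, 2x perf/watt
    print(f"{baseline:.3f} kWh vs {doubled:.3f} kWh per million tokens")
```

At the same load, doubling performance-per-watt halves the energy per token. At billions of daily requests, that difference adds up to large savings in power and cooling.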

6. TPU Generations: From v1 to Ironwood

TPUs have evolved dramatically since their debut in 2016. Each generation introduced architectural improvements focused on performance, flexibility, and now, inference.

6.1 Timeline of TPU Evolution

| Generation | Year Introduced | Focus Area |
| --- | --- | --- |
| TPU v1 | 2016 | Inference-only |
| TPU v2 | 2017 | Training + inference, first pods |
| TPU v3 | 2018 | Training at scale, liquid cooling, larger pods |
| TPU v4 | 2020 | Higher efficiency, more memory |
| TPU v5e/v5p | 2023 | Scalable, cost-optimized training |
| Trillium (v6) | 2024 | 4.7x peak compute per chip vs v5e |
| Ironwood (v7) | 2025 | Inference-first design |

6.2 Innovations in Each TPU Generation

Each new TPU brought unique advancements:

- TPU v2: Introduced training support; made available on Google Cloud

- TPU v3: Liquid cooling, improved pod connectivity

- TPU v4: Focus on energy efficiency, better memory bandwidth, and the first SparseCores for embedding-heavy models

- TPU v5e/v5p: v5e optimized for cost-efficient training and inference; v5p for maximum performance and scale

- Trillium: Major jump in perf/watt and mixed-precision computing

- Ironwood: Built from the ground up for inference at ultra-large scale

6.3 Ironwood (TPU v7) Deep Dive

6.3.1 Inference-First Architecture

Ironwood is built to handle complex reasoning workloads, including large-scale LLMs, Mixture-of-Experts (MoE) models, and AI agents that think and respond in real time.
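Mixture-of-Experts models show why inference hardware needs both memory capacity and fast interconnects. A router scores each token against many expert sub-networks, but only the top k experts actually run. The sketch below shows top-k routing in plain Python. It renormalizes the softmax over only the selected experts, which is one common variant; others apply softmax before selection. The toy experts are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k: int = 2):
    """Pick the k highest-scoring experts for one token and renormalize
    their gate weights so they sum to 1."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda e: router_logits[e], reverse=True)
    top = ranked[:k]
    gates = softmax([router_logits[e] for e in top])
    return list(zip(top, gates))


def moe_forward(x, experts, router_logits, k: int = 2):
    """Only the k selected experts run for this token; outputs are blended."""
    return sum(g * experts[e](x) for e, g in route_top_k(router_logits, k))


if __name__ == "__main__":
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]  # toy experts
    logits = [0.1, 2.0, -1.0, 1.5]  # hypothetical router scores for one token
    print(route_top_k(logits, k=2), moe_forward(1.0, experts, logits, k=2))
```

For each token, only k experts do any arithmetic. Yet every expert's weights must stay resident in memory, and tokens routed to experts on other chips must cross the interconnect. That combination puts pressure on HBM capacity and inter-chip bandwidth rather than on raw FLOPs.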

6.3.2 Compute, Memory, and Networking Advances

- Compute: 4,614 TFLOPs per chip (peak, FP8)

- Memory: 192 GB HBM per chip

- Bandwidth: 7.2 TBps memory, 1.2 Tbps interconnect

- Pod Scale: Up to 9,216 chips → 42.5 ExaFLOPs (FP8 peak)

- Cooling: Advanced liquid cooling for sustained performance
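The per-chip figures in this list also give pod-level totals and a rough sense of how many chips a model must be spread across. The back-of-envelope sketch below assumes a hypothetical 1-trillion-parameter model at 1 byte per parameter. It also assumes that 80% of each chip's HBM is available for weights, with the rest held back for KV cache and activations. Both assumptions are illustrative.

```python
import math

CHIPS_PER_POD = 9216
HBM_GB_PER_CHIP = 192


def pod_hbm_pb(chips: int = CHIPS_PER_POD, gb_per_chip: float = HBM_GB_PER_CHIP) -> float:
    """Total HBM across a pod, in petabytes (decimal units)."""
    return chips * gb_per_chip / 1e6


def min_chips_for_weights(param_count: float, bytes_per_param: float,
                          gb_per_chip: float = HBM_GB_PER_CHIP,
                          weight_fraction: float = 0.8) -> int:
    """Fewest chips whose combined HBM can hold the weights.

    weight_fraction is an assumed share of each chip's HBM used for weights.
    """
    usable_bytes = gb_per_chip * 1e9 * weight_fraction
    return math.ceil(param_count * bytes_per_param / usable_bytes)


if __name__ == "__main__":
    print(f"Pod HBM: {pod_hbm_pb():.2f} PB")
    print("Chips for a 1T-parameter model at 8-bit:", min_chips_for_weights(1e12, 1))
```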

6.3.3 Use Cases: Gemini, AlphaFold, Ranking, etc.

Google positions Ironwood for advanced workloads like the following, several of which already run on earlier TPU generations:

- Gemini 2.5 — Google's frontier LLM

- AlphaFold — Protein structure prediction

- Search & YouTube ranking systems

- Generative AI agents using real-time inference

7. AI Hardware Ecosystem and Real-World Adoption

Theoretical performance benchmarks are only part of the story. The real test of AI hardware lies in production environments — where scalability, cost-efficiency, and developer accessibility matter just as much as raw compute. In this section, we explore how TPUs and GPUs are deployed across the industry and what differentiates their adoption paths.

7.1 Google’s TPU Ecosystem

TPUs are at the heart of Google's AI infrastructure, powering everything from day-to-day applications to world-class research. Designed for high-throughput, low-latency inference, TPUs are uniquely positioned for workloads that need to scale across billions of users and requests.

Examples of Google Services Powered by TPUs

| Application | TPU Role |
| --- | --- |
| Search & Ads | Fast, low-latency inference for ranking algorithms |
| Gemini Models | Training and deploying large-scale LLMs |
| AlphaFold | Deep learning for protein structure prediction |
| Google Translate | Real-time neural machine translation |
| Assistant & Bard (now Gemini) | Generating responses using transformer-based models |

Newer generations, like TPU Ironwood, bring improved memory bandwidth, energy efficiency, and compute density — making them ideal for both training and inference at scale.

7.2 The GPU Stronghold Beyond Google

While TPUs dominate within Google, GPUs remain the industry standard for the broader AI community. From academic labs to startups and enterprise R&D teams, GPUs — especially NVIDIA’s A100 and H100 — are the hardware of choice.

Notable GPU-Based Deployments

| Organization | Hardware Used | Primary Use Case |
| --- | --- | --- |
| OpenAI | NVIDIA A100/H100 GPUs | GPT training and inference |
| Meta (LLaMA) | NVIDIA GPUs | Open-source LLM development |
| Microsoft (Turing) | NVIDIA + custom chips | Multi-modal AI, inference, training |
| Stability AI | NVIDIA GPUs | Generative art, diffusion models |
| Universities & Labs | NVIDIA GPUs | Research and prototyping |

GPUs are preferred for their flexibility, mature software stack (CUDA), and cross-cloud availability, making them more accessible and developer-friendly for general-purpose AI tasks.

7.3 Cloud Availability and Developer Access

Accessibility plays a major role in hardware adoption. TPUs are currently exclusive to Google Cloud, making them less accessible to teams on AWS or Azure. GPUs, by contrast, are widely supported across nearly every major cloud provider.

AI Hardware Availability by Cloud Provider

| Cloud Provider | GPU Support | TPU Support |
| --- | --- | --- |
| Google Cloud | NVIDIA A100, H100 | Native TPU (v2 to Ironwood) |
| AWS | NVIDIA A100, H100 | No TPU; offers custom Inferentia and Trainium chips |
| Microsoft Azure | NVIDIA A100, H100 | No TPU; offers custom Maia chip |
| IBM Cloud | NVIDIA GPUs | No TPU |

If you're building within GCP or leveraging models like Gemini or AlphaFold, TPUs offer deep integration. For everyone else, GPUs remain the default due to their ubiquity and toolchain compatibility.

7.4 Who’s Leading the Custom AI Chip Race?

The AI hardware space is no longer a one-horse race. Several cloud providers are now investing in custom silicon — seeking more control over cost, power efficiency, and performance.

Comparison of Leading Custom AI Chips

| Chip | Deployed at Scale? | Live Use Cases | Cloud Access | Maturity |
| --- | --- | --- | --- | --- |
| TPU (Google) | ✅ Massive scale | Search, Gemini (formerly Bard), Translate | ✅ Yes (GCP) | Mature |
| Inferentia (AWS) | ✅ Scaled for inference | Alexa, customer workloads | ✅ Yes (AWS) | Mature |
| Trainium (AWS) | Ramping up | Training workloads on EC2 Trn1 instances | ✅ Yes (AWS) | Mid-stage |
| Maia (Azure) | Early-stage | Microsoft internal use | Limited | New |

While Google leads with mature TPU deployments, AWS is catching up with inference-focused Inferentia and training-optimized Trainium, both already available on Amazon EC2 instances. Microsoft’s Maia chip is still emerging.

8. Pros and Cons: TPU vs GPU

Every piece of hardware has its strengths and limitations — and choosing between a TPU and a GPU means understanding where each shines and where it might fall short.

8.1 The Strengths and Trade-Offs of TPUs

TPUs are highly specialized. They're designed with one purpose in mind: accelerating machine learning workloads, particularly those involving large-scale tensor operations.

Their key advantage is efficiency — not just in performance, but also in power usage. This is especially true for inference tasks. The newer generations, like Ironwood, take this even further by reducing latency and boosting throughput across thousands of chips with liquid cooling and custom networking.

However, TPUs come with limitations. They're tightly integrated into Google’s ecosystem and available only on Google Cloud, which rules out multi-cloud deployments. PyTorch runs on TPUs through PyTorch/XLA, but that path is less mature than PyTorch on GPUs. There’s also a steeper learning curve for teams unfamiliar with TensorFlow or JAX.

8.2 The Versatility of GPUs

GPUs, on the other hand, are the Swiss Army knife of compute hardware. Whether you're doing deep learning, scientific simulations, video rendering, or even blockchain computations, GPUs can handle it all.

They’re backed by a mature ecosystem — especially NVIDIA’s CUDA platform — and are supported by every major AI framework. This flexibility makes them ideal for experimentation, prototyping, and a wide range of production use cases.

That said, this general-purpose nature comes at a cost. GPUs tend to consume more power and may require more careful tuning to reach the same inference efficiency TPUs offer out of the box.

9. Decision Guide: Which One Should You Choose?

Choosing between TPUs, GPUs, and other custom AI accelerators depends on a mix of technical and practical considerations. This section provides a structured framework to help developers, researchers, and decision-makers evaluate the best fit for their AI workloads based on performance, cost-efficiency, scalability, and ecosystem compatibility.

9.1 Model Type and Workload Demands

AI workloads vary widely—from massive language model training to high-throughput inference for recommendation systems. Here's a breakdown of what hardware typically excels at which task:

| Workload Type | Best-Suited Hardware | Why |
| --- | --- | --- |
| Large language model training | NVIDIA H100 / A100, Google TPU v5p | High floating-point throughput and memory bandwidth |
| Real-time inference at scale | Google TPU Ironwood, AWS Inferentia2 | Purpose-built for low-latency, high-throughput inference |
| Sparse embeddings & ranking | Google TPU with SparseCore | Optimized architecture for large-scale sparse tensors |
| Custom kernel experimentation | NVIDIA GPUs | CUDA support and flexible developer tooling |
| Mixed workloads (train + infer) | AWS Trainium + Inferentia combo | End-to-end integration on AWS for hybrid pipelines |

9.2 Budget and Operational Cost

Pricing remains one of the most important factors when deploying at scale. Below are rough estimates for commonly used accelerators, based on public pricing as of 2025. Actual rates vary by region, instance size, and commitment level.

Estimated On-Demand Hourly Pricing

| Hardware | Training Cost/hr | Inference Cost/hr | Availability |
| --- | --- | --- | --- |
| NVIDIA A100 (40GB) | ~$3.90 | ~$2.30 | AWS, Azure, GCP |
| NVIDIA H100 (80GB) | ~$4.50–$6.00 | ~$3.00–$4.00 | AWS, Azure, GCP |
| Google TPU v5p | ~$3.50 | ~$1.80 | Google Cloud |
| AWS Trainium | ~$3.00 | N/A | AWS |
| AWS Inferentia2 | N/A | ~$1.25 | AWS |
| Google TPU Ironwood | Not yet disclosed | Not yet disclosed | Google Cloud (2025 launch) |

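Note: Pricing for Google TPU Ironwood has not been officially released. The values above are approximate and reflect publicly available information for earlier generations and peer accelerators; check each provider's current pricing pages. On-demand hardware is billed per hour whether it is training or serving, so the number that matters is cost per training run or per request. That cost depends on the throughput your model actually achieves.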
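Hourly rates only become comparable once they are tied to throughput. The minimal sketch below uses two hourly rates from the table above together with hypothetical throughput figures; the requests-per-second values and utilization are assumptions, not benchmarks. It shows how an instance with a cheaper hourly rate can still cost more per request.

```python
def cost_per_million_requests(hourly_rate_usd: float,
                              requests_per_second: float,
                              utilization: float = 1.0) -> float:
    """USD per one million requests at a sustained request rate."""
    served_per_hour = requests_per_second * 3600 * utilization
    return hourly_rate_usd / served_per_hour * 1e6


if __name__ == "__main__":
    # Hypothetical throughputs; benchmark your own model to get real ones.
    a100 = cost_per_million_requests(2.30, requests_per_second=500)
    inf2 = cost_per_million_requests(1.25, requests_per_second=200)
    print(f"A100: ${a100:.2f}  Inferentia2: ${inf2:.2f} per million requests")
```

With these made-up throughputs, the lower hourly rate ends up more expensive per request. Different throughputs can flip the ranking, so measure before deciding.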

9.3 Scalability and Availability

Whether you're a startup experimenting with new models or an enterprise scaling inference globally, you’ll want to evaluate how well these platforms scale in production environments.

| Hardware | Max Deployment Scale | Cloud Availability |
| --- | --- | --- |
| NVIDIA H100 | Thousands of GPUs per cluster | AWS, Azure, GCP |
| Google TPU Ironwood | Up to 9,216-chip pod (~42.5 ExaFLOPs, FP8) | Google Cloud only |
| AWS Inferentia2 | Auto-scaling with ECS/SageMaker | AWS |
| AWS Trainium | Multi-node SageMaker training | AWS |
| NVIDIA A100 | Widely scalable | All major clouds, on-prem |

Ironwood introduces new scaling standards, but its availability is currently limited to Google Cloud infrastructure.

9.4 Framework Compatibility and Developer Experience

Compatibility with leading ML frameworks and ease of integration into your stack are key factors that affect developer productivity.

| Framework | NVIDIA GPUs | Google TPUs | AWS Inferentia / Trainium |
| --- | --- | --- | --- |
| TensorFlow | ✅ Excellent | ✅ Excellent | ✅ Supported |
| PyTorch | ✅ Excellent | Moderate (via PyTorch/XLA) | ✅ Supported (via the AWS Neuron SDK) |
| JAX | ✅ Good | ✅ Excellent (native) | Limited |
| Custom CUDA Kernels | ✅ Full Support | ❌ Not supported | ❌ Not supported |
| ONNX Runtime | ✅ Good | ✅ Partial | ✅ Good |

If your team is heavily invested in PyTorch or requires deep customization, NVIDIA GPUs offer the broadest flexibility. On the other hand, Google TPUs shine with TensorFlow and JAX, especially for large-scale distributed training.

Decision Summary

Here's a quick reference table to summarize the best choice depending on your specific requirements:

| Criteria | Best Option |
| --- | --- |
| Highest raw training performance | NVIDIA H100 / TPU v5p |
| Most efficient inference | Google TPU Ironwood (inference-optimized) |
| Budget-friendly inference | AWS Inferentia2 |
| Broadest framework support | NVIDIA GPUs |
| Scalable training infrastructure | Google TPUs / NVIDIA GPUs |
| Best for sparse model workloads | Google TPU + SparseCore |
| Multi-cloud flexibility | NVIDIA GPUs |
| End-to-end managed AI pipeline | AWS Trainium + Inferentia combo |

Key Takeaway

While GPUs remain the most versatile and widely supported option, specialized accelerators like TPU Ironwood, AWS Inferentia, and Trainium offer exceptional cost-efficiency and performance for targeted workloads—especially inference and large-scale ranking tasks.

The right choice ultimately depends on your:

- Model architecture (dense vs sparse),

- Framework stack (TensorFlow, PyTorch, JAX),

- Budget for training vs inference,

- Need for scalability and multi-region deployment.
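For teams that want to encode these criteria in tooling, here is a hypothetical rule-based shortlist in Python. The `Workload` fields and the rules are illustrative simplifications of the tables in this section, not an official selection method. Treat the output as a starting point for benchmarking, not a substitute for it.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    framework: str           # "pytorch", "jax", or "tensorflow"
    cloud: str               # "gcp", "aws", "azure", or "other"
    sparse_embeddings: bool  # large recommendation or ranking tables
    needs_multi_cloud: bool
    needs_custom_cuda: bool


def shortlist(w: Workload) -> list:
    """Return candidate hardware, most promising first (hypothetical rules)."""
    # Hard constraints: custom CUDA kernels or multi-cloud portability.
    if w.needs_custom_cuda or w.needs_multi_cloud:
        return ["NVIDIA GPUs"]
    options = ["NVIDIA GPUs"]  # available on every major cloud
    if w.cloud == "gcp":
        if w.sparse_embeddings:
            options.insert(0, "Google TPU (SparseCore)")
        elif w.framework in ("jax", "tensorflow"):
            options.insert(0, "Google TPU")
        else:
            options.append("Google TPU (via PyTorch/XLA)")
    elif w.cloud == "aws":
        options.append("AWS Trainium / Inferentia")
    return options


if __name__ == "__main__":
    print(shortlist(Workload("jax", "gcp", False, False, False)))
    print(shortlist(Workload("pytorch", "aws", False, False, False)))
```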

Before choosing, it's highly recommended to benchmark on real-world workloads and consult pricing calculators provided by the respective cloud vendors.

10. Looking Ahead: The Future of AI Compute

As AI continues to evolve rapidly, the hardware that powers it is undergoing a parallel transformation. From research labs to enterprise-grade cloud environments, the next frontier in AI compute will be shaped by four major forces: inference-first design, multi-agent reasoning systems, a custom silicon arms race, and the growing pressure to build sustainable infrastructure.

10.1 The Rise of Inference-Centric AI

For years, the focus in AI hardware was squarely on training massive models. But as these models mature and begin to serve real-time user queries, the bottleneck has shifted toward inference—how quickly and efficiently a model can respond. The emergence of inference-first hardware like Google TPU Ironwood and AWS Inferentia2 reflects this shift.

Modern inference workloads demand low-latency response times, optimized precision (e.g., FP8, INT8), and scalable throughput. With billions of daily interactions through chatbots, search, and recommendations, cost-efficient inference is becoming the core priority for both cloud providers and enterprise AI users.
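Lower precision is one of the main levers for cheaper inference. Storing weights as 8-bit integers cuts memory and bandwidth by 2x versus 16-bit formats and by 4x versus FP32. Below is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is one simple scheme among several. Production toolchains typically add per-channel scales, calibration, and hardware-specific formats such as FP8, none of which this sketch covers.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: q = round(v / scale), clipped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate real values."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print(q, [round(r, 4) for r in restored])
```

The rounding error per value is at most half a quantization step (scale / 2). That is usually tolerable for inference, but it should be validated for each model.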

10.2 Multi-Agent Systems and Reasoning

The next phase of AI involves systems of agents, rather than single monolithic models. These multi-agent systems will interact, delegate tasks, reason collaboratively, and even reflect on their own outputs. Hardware will need to adapt to this style of computation—requiring faster interconnects, high memory bandwidth, and real-time data sharing across distributed environments.

Expect accelerators to further specialize for tasks like:

- Multi-modal context switching

- Agent orchestration and scheduling

- Dynamic memory management and cross-agent communication

10.3 The Custom Silicon Race

While NVIDIA continues to lead in general-purpose AI compute, the push for domain-specific hardware is intensifying. Each cloud provider is now building silicon tailored to their unique infrastructure and AI goals:

- Google’s TPUs emphasize large-scale language models and dense compute.

- AWS Inferentia/Trainium focus on scalable, energy-efficient inference and training.

- Microsoft’s Maia chips are being tuned for Azure’s AI services.

- Apple, Tesla, Meta, and other tech giants are also investing heavily in custom chips.

This is no longer just about speed; it’s about tight hardware-software co-design, integration with cloud stacks, and vertical optimization from model training to real-time serving.

10.4 Energy, Sustainability, and AI Infrastructure

One of the most pressing challenges in AI compute is energy consumption. Training a large foundation model today can consume hundreds to thousands of megawatt-hours of electricity. As AI use cases scale globally, infrastructure needs to become greener and more sustainable.

Future hardware will prioritize:

- Improved performance-per-watt ratios

- Advanced liquid cooling systems (like those used in TPU Ironwood)

- Low-precision compute efficiency

- Modular data center designs for energy optimization