Introduction:

In the realm of cloud computing and container orchestration, Kubernetes has emerged as the industry standard for efficiently managing containerized applications across diverse environments. Originally developed by Google and now overseen by the Cloud Native Computing Foundation (CNCF), Kubernetes offers unparalleled scalability and a rich feature set. However, its complexity and resource requirements can be challenging, especially in resource-constrained settings.

In response to these challenges, Rancher Labs (now part of SUSE) developed K3s, a lightweight, certified Kubernetes distribution. Designed to streamline deployments in edge computing, IoT, and local development scenarios, K3s provides a simplified alternative to traditional Kubernetes. By consolidating essential components into a single binary, K3s aims to retain core Kubernetes functionality while reducing the overhead typically associated with deployment and management.

This comparison explores the fundamental differences between Kubernetes (often abbreviated as K8s) and K3s, focusing on their architectural approaches, deployment methodologies, feature sets, and target use cases. By examining these distinctions objectively, organizations can better assess which platform best meets their operational needs and infrastructure requirements.

What is Kubernetes (K8s)?

Kubernetes, commonly referred to as K8s, is an open-source platform designed to automate the deployment, scaling, and management of containerized applications and services. Initially developed by Google and currently overseen by the Cloud Native Computing Foundation (CNCF), Kubernetes has become the established standard for container orchestration across diverse environments, from private data centers to public cloud infrastructures.

Kubernetes Architecture

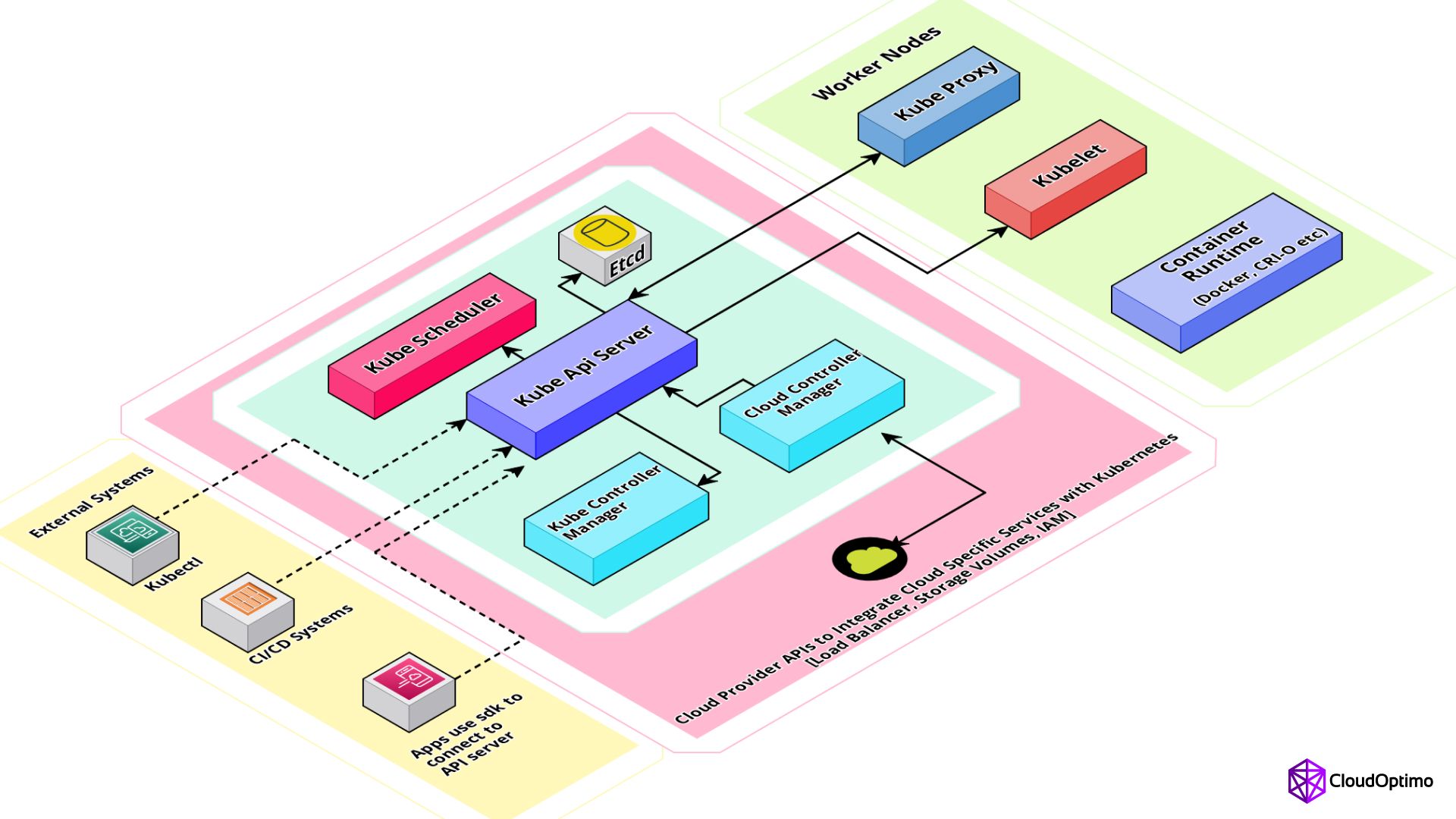

A Kubernetes cluster consists of a control plane and worker nodes. Here's an overview of its primary components:

Control Plane Components:

- Kube API Server: Acts as the front-end for Kubernetes' control plane, providing the Kubernetes API for cluster management and interaction.

- Kube Scheduler: Assigns pods to nodes based on resource availability and constraints, ensuring efficient workload distribution.

- etcd: A distributed, consistent key-value store that holds the cluster's configuration data and state.

- Kube Controller Manager: Manages controllers that regulate the state of the cluster, including Node and Replication controllers.

- Cloud Controller Manager: Integrates the cluster with cloud provider APIs to manage cloud-specific aspects such as load balancers and storage.

Worker Node Components:

- Kubelet: An agent running on each node that communicates with the control plane and manages containers on the node.

- Kube Proxy: Maintains network rules on each node that implement Kubernetes Services, forwarding traffic addressed to a Service to the right pods, whether that traffic originates inside or outside the cluster.

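- Container Runtime: Executes containers, manages their lifecycle, and provides the necessary runtime environment. Kubernetes talks to runtimes through the Container Runtime Interface (CRI); common choices are containerd and CRI-O. Docker Engine is no longer supported directly since Kubernetes 1.24 removed dockershim, but it can still be used through the cri-dockerd adapter.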

- Containers & Pods: Pods are Kubernetes' smallest deployable units, encapsulating one or more containers with shared resources such as storage and network. They simplify application deployment, scaling, and management.

Kubernetes' modular architecture and comprehensive set of components make it well-suited for deploying and managing containerized applications effectively at scale. By abstracting infrastructure complexities, Kubernetes enables efficient resource utilization, application availability, and resilience.
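To make the Pods description concrete, the sketch below prints a Pod manifest with two containers that share an emptyDir volume (and, like all containers in a Pod, the same network namespace). It is written as a shell function to fit the kubectl workflow used later in this article; the pod name, container names, and images (busybox:1.36, nginx:latest) are illustrative choices, not requirements.

```shell
#!/bin/sh
# Print a Pod manifest in which two containers share an emptyDir volume.
# Names and images are illustrative; pipe the output to `kubectl apply -f -` to create it.
shared_volume_pod() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo
spec:
  volumes:
    - name: shared-data      # scratch volume that lives as long as the Pod
      emptyDir: {}
  containers:
    - name: writer           # rewrites an HTML file every 5 seconds
      image: busybox:1.36
      command: ["sh", "-c", "while true; do date > /data/index.html; sleep 5; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
    - name: web              # serves the same file over HTTP on port 80
      image: nginx:latest
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html
EOF
}
```

Running shared_volume_pod | kubectl apply -f - creates the Pod. Because both containers share the Pod's network namespace, the writer container could also reach nginx at localhost:80.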

What is K3s?

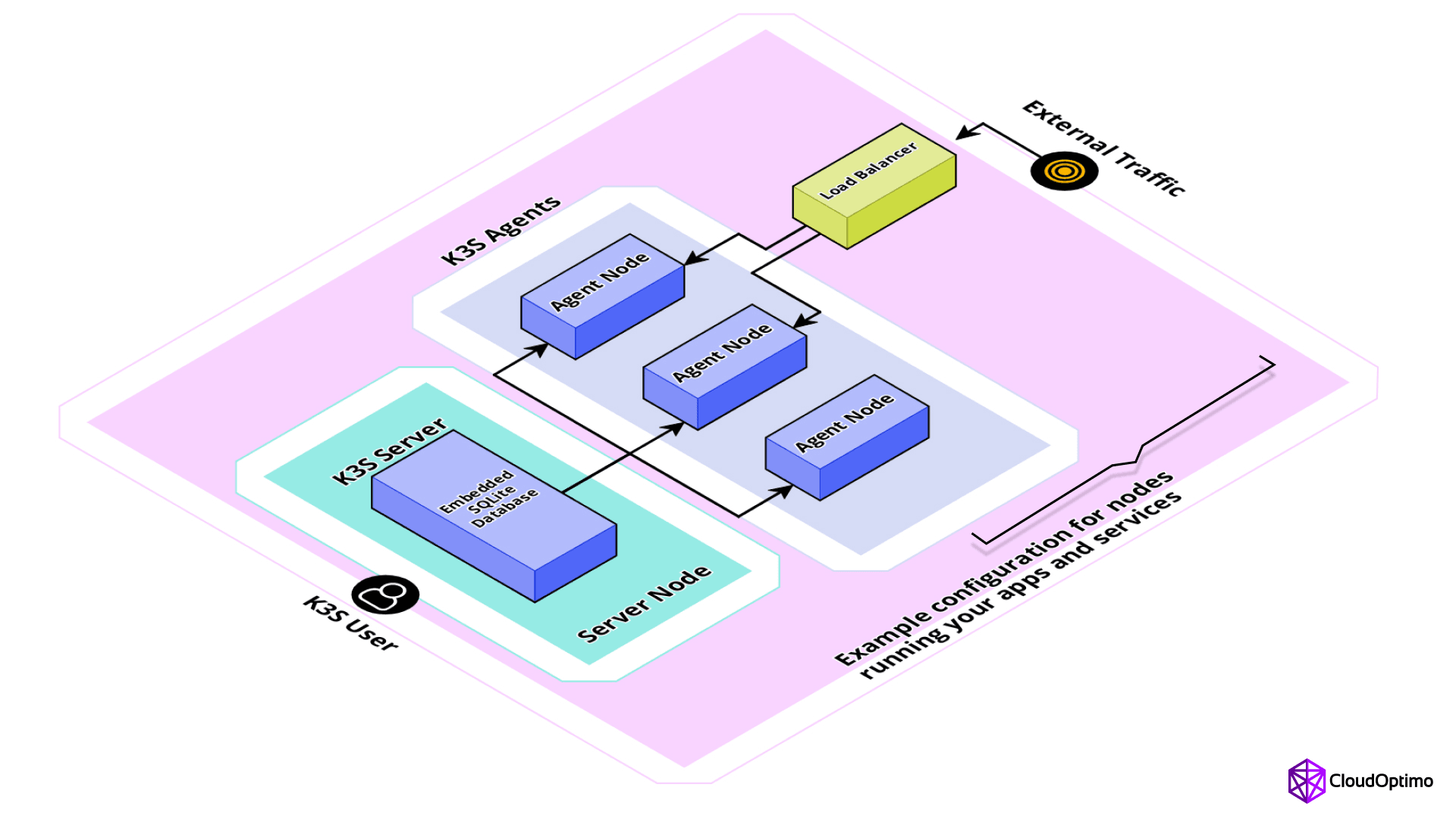

K3s is a lightweight, certified Kubernetes distribution designed for use cases where traditional Kubernetes might be too resource-intensive or complex. Developed by Rancher Labs (now part of SUSE), K3s aims to simplify the deployment and operation of Kubernetes clusters, particularly in resource-constrained environments such as edge computing, IoT devices, and small-scale deployments.

Unlike upstream Kubernetes, where the control-plane and node components run as separate processes (and usually separate binaries), K3s takes a more streamlined approach. It packages the essential Kubernetes components into a single binary, uses a lightweight SQLite-based datastore by default instead of etcd (etcd, MySQL, and PostgreSQL remain available), and minimizes external dependencies. This design makes K3s easier to install, manage, and upgrade than a traditional Kubernetes cluster.

Differences between K3s and K8s:

While K3s is compatible with Kubernetes and supports most Kubernetes APIs and features, there are several key differences that set it apart:

- Resource Consumption: K3s has a significantly smaller footprint compared to a full-fledged Kubernetes cluster. It requires less memory, CPU, and disk space, making it more suitable for resource-constrained environments.

- Deployment Simplicity: K3s can be installed with a single command, eliminating the need for complex setup and configuration processes. It also automatically handles certificate management and other operational tasks, further simplifying deployment and management.

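- Embedded Components: Unlike upstream Kubernetes, where components are separate binaries, K3s embeds the control-plane and node components, the containerd runtime, and the Flannel network plugin in a single binary, and automatically deploys a default set of add-ons (CoreDNS, Traefik, ServiceLB, local-path-provisioner, and metrics-server) at startup. Fewer separately installed pieces simplify updates and maintenance, and the trimmed code base can reduce the overall attack surface.

- Simplified Networking: K3s ships with Flannel (VXLAN backend by default) as its CNI plugin and runs kube-proxy inside the same process as its other components, so pod networking works out of the box without a separate networking setup. Flannel can be replaced with another CNI plugin, such as Calico or Cilium, when more advanced networking features are needed.

- Trimmed Feature Set: K3s is a CNCF-certified, conformant distribution, so standard Kubernetes APIs and features, including horizontal pod autoscaling (metrics-server is bundled), work as expected. To stay lightweight, it removes legacy, alpha, and non-default features, along with in-tree cloud provider and storage plugins, which can be replaced by out-of-tree cloud controller managers and CSI drivers.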
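Because the bundled add-ons ship with K3s itself, you switch them off with server options rather than uninstalling packages. A minimal sketch, assuming you want to replace the bundled Traefik ingress controller and Flannel CNI with your own: it writes a K3s config file whose keys mirror the k3s server command-line flags. K3s reads /etc/rancher/k3s/config.yaml by default; the helper name and the directory parameter are illustrative so the sketch can be tried in a scratch directory.

```shell
#!/bin/sh
# Write a K3s server config file that turns off some bundled components.
# K3s reads /etc/rancher/k3s/config.yaml by default (writing there needs root).
write_k3s_config() {
  dir="${1:-/etc/rancher/k3s}"
  mkdir -p "$dir" || return 1
  cat > "$dir/config.yaml" <<'EOF'
# Keys mirror the `k3s server` flags without the leading dashes.
disable:
  - traefik                   # skip the bundled Traefik ingress controller
flannel-backend: none         # turn off the bundled Flannel CNI
disable-network-policy: true  # also turn off the embedded network policy controller
EOF
}
```

With flannel-backend set to none, nodes stay NotReady until you install another CNI plugin, so only use that option when you plan to bring your own.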

Table summarizing the differences between K3s and K8s:

| Feature/Aspect | K3s | K8s (Traditional Kubernetes) |
|---|---|---|
| Resource Consumption | Significantly smaller footprint | Larger footprint, higher resource requirements |
| Installation Complexity | Simple, single-command installation | More involved setup (e.g. with kubeadm), multiple components to configure |
| Architecture | Single binary, with components running in one process | Modular, with separate components (API server, etcd, scheduler, etc.) |
| Deployment Simplicity | Minimal setup and configuration required | Requires detailed configuration and setup procedures |
| Networking Model | Flannel CNI and kube-proxy bundled, working out of the box | CNI plugin chosen and installed separately; kube-proxy runs as its own component |
| Feature Set | Certified conformant; legacy, alpha, and in-tree cloud/storage plugins removed | Full upstream feature set, including alpha and legacy features |
| Target Environments | Edge computing, IoT, local development | Data centers, cloud environments, large-scale deployments |
| Use Cases | Resource-constrained environments | Large-scale applications, complex architectures |
| Community and Support | Strong community, growing support | Mature ecosystem, extensive support from CNCF and contributors |
| Updates and Maintenance | Simplified updates (one binary to replace) | Regular updates with potentially more complex upgrade procedures |
| Advanced Features | Horizontal pod autoscaling supported (metrics-server bundled); Flannel can be swapped for other CNI plugins | Supports the full spectrum of Kubernetes capabilities, including autoscaling and extensive networking options |

Use Cases

K3s

K3s stands out in scenarios where resource efficiency is paramount. Its compact size and efficient design make it an ideal choice for:

- Edge Computing: At the network edge, compute, memory, and bandwidth are often scarce. Picture deploying containerized applications for tasks like traffic analysis or industrial automation on remote gateways. K3s manages these applications with minimal overhead, leaving more of the device's resources for the workloads themselves.

- IoT Devices: Whether it's gateways aggregating readings from smart sensors or on-site appliances processing environmental data, K3s lets developers deploy and manage containerized applications directly on modest hardware such as ARM single-board computers. K3s still needs a Linux host with at least a few hundred megabytes of RAM, so microcontroller-class sensors and wearables are out of reach; a gateway next to them is the typical target. For instance, K3s could run a containerized video-analysis application on a gateway attached to a smart camera, enabling real-time analysis at the edge.

- Development and Testing: K3s provides a lightweight alternative to full Kubernetes setups for local development and testing environments. Imagine a developer working on a containerized microservice—K3s allows them to test its functionality and deployment locally before scaling it up into a larger system.

- CI/CD Pipelines: Integrate K3s seamlessly into your CI/CD pipelines for efficient builds and testing of containerized applications in resource-constrained environments. This capability is particularly valuable for deployments targeting edge devices or IoT systems, where testing within a similar resource profile is crucial for reliability.

In essence, K3s offers a nimble solution for managing Kubernetes in environments where efficiency and performance are paramount. Whether at the edge, in IoT deployments, or during development and testing phases, K3s delivers robust functionality while optimizing resource usage.

Kubernetes (K8s)

Kubernetes offers a robust toolkit for managing intricate containerized applications, making it indispensable for:

- Large-Scale Deployments: Think of deploying massive systems like popular e-commerce platforms or large social media applications. Kubernetes excels in handling thousands of containers across numerous servers, ensuring scalability and optimal resource management at scale.

- Cloud-Native Applications: Tailored for cloud environments, Kubernetes simplifies the development and deployment of cloud-native applications. Consider a microservices-based architecture for a major streaming service—Kubernetes seamlessly manages deployment, scaling, and communication among these services for efficient cloud operations.

- High Availability and Fault Tolerance: Kubernetes prioritizes application uptime with automated recovery mechanisms, crucial for mission-critical applications such as online banking systems or real-time communication platforms. It ensures continuous service availability even in the face of failures.

- Complex Application Architectures: Managing applications with intricate dependencies and multi-component architectures is Kubernetes' forte. Imagine orchestrating a complex data processing pipeline with multiple containerized stages—Kubernetes provides the scalability and efficiency needed to ensure seamless data flow and processing.

In essence, Kubernetes stands as the cornerstone for managing complex containerized applications, offering unparalleled scalability, reliability, and efficiency across diverse deployment scenarios.

Installation and Setup

One of the key advantages of K3s is its simplicity when it comes to installation and setup. Traditional Kubernetes clusters are typically built with provisioning tools such as kubeadm and require configuring each component, whereas K3s can be installed with a single command.

To install K3s as a single-node cluster, run the following command:

```shell
curl -sfL https://get.k3s.io | sh -
```

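This command downloads K3s, installs it as a service, and starts it as a single-node cluster. To build a multi-node cluster, run it on the first (server) node, then read the join token the server writes to /var/lib/rancher/k3s/server/node-token. On each additional worker (agent) node, run the same installer with the K3S_URL environment variable set to the server's address (https://<server>:6443) and K3S_TOKEN set to that token.

Once installed, you can interact with the K3s cluster using the standard kubectl command-line tool, just as you would with a traditional Kubernetes cluster. K3s writes an admin kubeconfig to /etc/rancher/k3s/k3s.yaml, which the bundled k3s kubectl command (and the kubectl symlink the installer creates) uses automatically on the server node.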
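The join step can be sketched as a small helper that prints the agent-side command instead of running it. K3S_URL and K3S_TOKEN are the environment variables the get.k3s.io script reads, and 6443 is the default K3s API port; the function name and the example address are hypothetical.

```shell
#!/bin/sh
# Print (do not run) the command an agent node uses to join a K3s server.
k3s_agent_join_cmd() {
  server="$1"   # server IP or hostname (placeholder)
  token="$2"    # contents of /var/lib/rancher/k3s/server/node-token on the server
  printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' "$server" "$token"
}

# Example, run on the server node (hypothetical address):
#   k3s_agent_join_cmd 192.168.1.10 "$(sudo cat /var/lib/rancher/k3s/server/node-token)"
# then run the printed line on each agent node.
```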
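To manage the cluster from another machine, copy the kubeconfig and point it at the server's address, since the generated file refers to https://127.0.0.1:6443. A minimal sketch; the function name and example IP are hypothetical, and the default source path assumes a standard K3s install.

```shell
#!/bin/sh
# Make a workstation copy of the K3s admin kubeconfig.
# K3s writes it to /etc/rancher/k3s/k3s.yaml with the server set to https://127.0.0.1:6443.
copy_k3s_kubeconfig() {
  src="${1:-/etc/rancher/k3s/k3s.yaml}"  # source kubeconfig (copied over with scp, for example)
  dst="$2"                              # where to write the edited copy
  server="$3"                           # address other machines use to reach the server
  # Replace the loopback address with the server's reachable address.
  sed "s#https://127.0.0.1:6443#https://${server}:6443#" "$src" > "$dst" || return 1
  # The file holds admin credentials, so keep it readable only by its owner.
  chmod 600 "$dst"
}

# Example (hypothetical address):
#   copy_k3s_kubeconfig ./k3s.yaml "$HOME/.kube/k3s.yaml" 192.168.1.10
#   export KUBECONFIG="$HOME/.kube/k3s.yaml"
#   kubectl get nodes
```

If you reach the server through a hostname or address that is not one of its own, add it with the tls-san server option so the API server certificate matches.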

Deploying Applications on K3s:

Deploying applications on K3s is similar to deploying on a standard Kubernetes cluster. You can use familiar Kubernetes resources like Deployments, Services, and ConfigMaps to define and manage your applications.

Here's an example of deploying a simple nginx web server on K3s:

```yaml
# nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
```

```yaml
# nginx-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
```

You can apply these manifests using kubectl:

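```shell
kubectl apply -f nginx-deployment.yaml -f nginx-service.yaml
```

This creates a Deployment running three replicas of the nginx web server and a Service that load-balances across them. Because the manifest sets no type, the Service defaults to ClusterIP and is reachable only from inside the cluster. To reach it from outside, set type: LoadBalancer (on K3s, the bundled ServiceLB then exposes it on the nodes' IP addresses), use a NodePort Service, or route traffic through an Ingress handled by the bundled Traefik controller.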
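After applying the manifests, it is worth confirming the rollout before testing access. A minimal sketch; the KUBECTL variable is an assumption of this example that lets you substitute k3s kubectl on a server node without a standalone kubectl, and the 120-second timeout is arbitrary.

```shell
#!/bin/sh
# Check the nginx example from this section.
# Set KUBECTL="k3s kubectl" to use the copy bundled with K3s.
KUBECTL="${KUBECTL:-kubectl}"

check_nginx() {
  # Block until all replicas of the Deployment are available, or fail after 120 seconds.
  $KUBECTL rollout status deployment/nginx-deployment --timeout=120s || return 1
  # Show the pods selected by the app=nginx label and the nodes they run on.
  $KUBECTL get pods -l app=nginx -o wide
}

# Reach the ClusterIP Service from your machine without exposing it (runs until Ctrl+C):
#   $KUBECTL port-forward service/nginx-service 8080:80
#   curl http://localhost:8080
# Or expose it on the node IPs through K3s's ServiceLB:
#   $KUBECTL patch service nginx-service -p '{"spec":{"type":"LoadBalancer"}}'
```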

Users may encounter a few common pitfalls during installation:

- Network Issues: Ensure that your environment allows outgoing connections to the necessary URLs for downloading K3s. Firewall settings or proxy configurations may need adjustment.

- Resource Constraints: While K3s is lightweight, ensure your system meets minimum requirements for memory, CPU, and disk space. Insufficient resources can lead to installation failures or degraded performance.

- Compatibility: Verify compatibility with your operating system and kernel version. K3s is compatible with a wide range of Linux distributions, but specific versions or configurations may require additional steps or troubleshooting.

- Permissions: Depending on your system configuration, you may need root or sudo privileges to install K3s successfully. Ensure you have the necessary permissions before proceeding with the installation command.

By being aware of these potential issues and following best practices, such as checking system requirements and ensuring network connectivity, users can mitigate common installation pitfalls and successfully deploy K3s for their environments.
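Several of these checks can be scripted before running the installer. A rough sketch; the helper names are hypothetical, and the 512 MB memory threshold is an illustrative assumption, so check the K3s requirements page for current server and agent minimums.

```shell
#!/bin/sh
# Rough pre-flight checks to run before the K3s install script.

# Succeed if MemTotal in a meminfo-style file is at least MIN_KB kilobytes.
mem_ok() {
  min_kb="$1"
  file="${2:-/proc/meminfo}"
  total_kb=$(awk '/^MemTotal:/ {print $2}' "$file")
  [ -n "$total_kb" ] && [ "$total_kb" -ge "$min_kb" ]
}

# Succeed if we are root or sudo is available (the installer needs root).
can_escalate() {
  [ "$(id -u)" -eq 0 ] || command -v sudo >/dev/null 2>&1
}

# Succeed if the install script can be downloaded (catches firewall/proxy problems).
can_reach_installer() {
  curl -sf --max-time 10 -o /dev/null https://get.k3s.io
}

# Run all checks, print a warning for each failure, and return non-zero if any failed.
preflight() {
  status=0
  mem_ok 524288 || { echo "warning: less than 512 MB of RAM reported"; status=1; }
  can_escalate || { echo "warning: not root and sudo not found"; status=1; }
  can_reach_installer || { echo "warning: cannot download https://get.k3s.io"; status=1; }
  return "$status"
}
```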

Managing and Monitoring K3s Clusters

While K3s aims to simplify the overall management and operations of Kubernetes clusters, it still provides various tools and utilities for monitoring and administering your cluster.

One of the built-in tools is k3s kubectl, a bundled copy of kubectl that is preconfigured to communicate with the local K3s cluster (the installer also creates a kubectl symlink to it if no kubectl is already on the PATH). This utility allows you to perform various administrative tasks, such as inspecting nodes, pods, and services, as well as executing commands within containers.

For monitoring and logging, K3s does not ship a Prometheus and Grafana stack of its own, but it does bundle metrics-server, so kubectl top works out of the box. Popular open-source tools like Prometheus and Grafana can be installed with Helm, or declaratively through K3s's built-in Helm controller, which deploys HelmChart manifests placed in /var/lib/rancher/k3s/server/manifests.

Additionally, K3s supports various third-party tools and utilities for monitoring, logging, and observability, allowing you to choose the solutions that best fit your requirements.
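As an example of the Helm controller route, the sketch below writes a HelmChart manifest into the auto-deploy directory. The choice of the community kube-prometheus-stack chart, the monitoring namespace, and the helper name are assumptions; pin a chart version with spec.version for repeatable installs, and note that this stack is sizeable for small edge nodes.

```shell
#!/bin/sh
# Ask K3s's built-in Helm controller to install Prometheus and Grafana.
# Files in /var/lib/rancher/k3s/server/manifests are applied automatically by the server;
# the directory is a parameter so the sketch can be tried elsewhere.
write_monitoring_chart() {
  dir="${1:-/var/lib/rancher/k3s/server/manifests}"
  mkdir -p "$dir" || return 1
  cat > "$dir/monitoring.yaml" <<'EOF'
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: kube-prometheus-stack
  namespace: kube-system          # HelmChart resources are usually kept in kube-system
spec:
  repo: https://prometheus-community.github.io/helm-charts
  chart: kube-prometheus-stack    # bundles Prometheus, Alertmanager, and Grafana
  targetNamespace: monitoring     # namespace the chart's resources are installed into
  createNamespace: true
EOF
}

# The bundled metrics-server already supports quick resource checks:
#   kubectl top nodes
```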

Comparison with Other Kubernetes Distributions

While K3s is a lightweight and simplified Kubernetes distribution, it's not the only option available. Other popular distributions include:

- Minikube: Minikube is a lightweight Kubernetes implementation primarily designed for local development and testing environments. It runs a local cluster, single-node by default, inside a virtual machine, a container, or directly on the host depending on the driver you choose, making it easy to experiment with Kubernetes without the need for a full-fledged cluster.

- Docker Desktop with Kubernetes: Docker Desktop includes an integrated Kubernetes environment, allowing developers to deploy and manage Kubernetes clusters directly from their development machines. It provides a more streamlined experience compared to setting up a standalone Kubernetes cluster, but is primarily aimed at development and testing scenarios.

- MicroK8s: MicroK8s is a lightweight, single-package Kubernetes distribution developed by Canonical (the company behind Ubuntu). Similar to K3s, MicroK8s aims to simplify Kubernetes deployments and operations, particularly in resource-constrained environments.

While these distributions share some similarities with K3s in terms of simplifying Kubernetes deployments, they each have their own strengths, target use cases, and trade-offs. The choice between them largely depends on your specific requirements, such as the target environment, resource constraints, and desired feature set.

Kubernetes Distribution Comparison Table

| Feature | Minikube | Docker Desktop with Kubernetes | MicroK8s | K3s |
|---|---|---|---|---|
| Installation Ease | Easy (single command) | Easy (GUI/CLI) | Easy (single command) | Easy (single command) |
| Resource Consumption | Moderate | Moderate (higher than Minikube) | Low | Very Low |
| Feature Set | Basic Kubernetes | Basic Kubernetes + Docker | Basic Kubernetes | Core Kubernetes Features |
| Target Use Cases | Local Development & Testing | Development Workflows (IDEs) | Lightweight Deployments (Ubuntu) | Resource-Constrained Environments (Edge, IoT) |
| Supported Platforms | Linux, macOS, Windows | Windows, macOS, Linux | Linux (via Snap); macOS and Windows through a VM-based installer | Linux (multiple architectures) |
| Multi-Node Support | Yes (via --nodes, mainly for testing) | Single-node by default | Yes | Yes |

Best Practices and Tips:

While K3s simplifies the deployment and management of Kubernetes clusters, it's essential to follow best practices to ensure the reliability, security, and performance of your applications and infrastructure.

Security Considerations

Like any production system, it's crucial to prioritize security when working with K3s clusters. Here are some best practices to keep in mind:

- Network Isolation: Ensure that your K3s cluster is deployed within a secure network environment, isolated from untrusted networks or external access. You can leverage virtual private clouds (VPCs), network security groups, or firewalls to restrict access to your cluster.

- Role-Based Access Control (RBAC): K3s supports Kubernetes RBAC, allowing you to define and enforce granular permissions and access controls for users and applications accessing the cluster.

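- Secure Connections: The K3s API server serves only over TLS, and K3s generates the cluster certificates automatically. Keep clients pointed at the HTTPS endpoint, protect kubeconfig files (they contain admin credentials), and use TLS for your own application traffic where possible.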

- Keep Software Updated: Regularly update K3s and any related software components to ensure you have the latest security patches and bug fixes.

- Audit Logging and Monitoring: Enable audit logging and monitoring to track and analyze cluster events, resource usage, and potential security incidents.

- Backup and Disaster Recovery: Implement a robust backup and disaster recovery strategy to protect your cluster data and ensure business continuity in case of failures or incidents.
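The RBAC point can be illustrated with a namespaced, read-only grant. A minimal sketch using kubectl's imperative create role and create rolebinding commands; the namespace dev, user alice, and helper name are hypothetical, and the user must already be able to authenticate to the cluster (for example, with a client certificate).

```shell
#!/bin/sh
# Give one user read-only access to pods in one namespace.
# Set KUBECTL="k3s kubectl" to use the copy bundled with K3s.
KUBECTL="${KUBECTL:-kubectl}"

grant_pod_reader() {
  ns="$1"; user="$2"
  # Role: a namespaced set of permissions (get/list/watch on pods only).
  $KUBECTL create role pod-reader --verb=get,list,watch --resource=pods -n "$ns" || return 1
  # RoleBinding: attach that Role to the user inside the same namespace.
  $KUBECTL create rolebinding "pod-reader-$user" --role=pod-reader --user="$user" -n "$ns"
}

# Check the result by impersonating the user:
#   $KUBECTL auth can-i list pods -n dev --as alice     # should print "yes"
#   $KUBECTL auth can-i delete pods -n dev --as alice   # should print "no"
```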

Backup and Disaster Recovery

While K3s aims to simplify Kubernetes deployments, it's still essential to have a reliable backup and disaster recovery strategy in place. Here are some tips:

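- Back Up the Datastore: The datastore holds the cluster's configuration and state. A single-server K3s install uses SQLite by default, stored under /var/lib/rancher/k3s/server/db, while multi-server clusters typically use embedded etcd, which K3s can snapshot on a schedule or on demand with k3s etcd-snapshot save. In either case, also keep a copy of the server token (/var/lib/rancher/k3s/server/token), which is needed when restoring. Regular backups of this data are crucial for recovering your cluster after failures or data loss.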

- Application Data Backups: In addition to the cluster data, ensure you have a strategy for backing up your application data, such as persistent volumes or external data stores.

- Disaster Recovery Plan: Define and test a comprehensive disaster recovery plan that outlines the steps for restoring your cluster and applications from backups in case of a disaster scenario.

- Leverage Cloud Storage: If you're running K3s in a cloud environment, consider using cloud storage solutions (e.g., Amazon S3, Google Cloud Storage) to store and replicate your backups across multiple regions or zones for added redundancy. For embedded etcd, K3s can upload snapshots directly to S3-compatible storage through its --etcd-s3 server options.
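For the single-server case, the datastore backup can be as simple as archiving two paths. A minimal sketch; the default data directory is the standard K3s location, while the helper name and archive naming are assumptions. Embedded-etcd clusters should use K3s's snapshot commands, shown in the trailing comments, instead.

```shell
#!/bin/sh
# Back up the SQLite datastore and server token of a single-server K3s install.
# Preferably run with K3s stopped (e.g. systemctl stop k3s) so the database files are consistent.
backup_k3s_sqlite() {
  data_dir="${1:-/var/lib/rancher/k3s}"
  dest_dir="$2"
  [ -n "$dest_dir" ] || { echo "usage: backup_k3s_sqlite [DATA_DIR] DEST_DIR" >&2; return 1; }
  mkdir -p "$dest_dir" || return 1
  archive="$dest_dir/k3s-backup-$(date +%Y%m%d-%H%M%S).tar.gz"
  # Archive the datastore directory and the token, both needed for a restore.
  tar -czf "$archive" -C "$data_dir" server/db server/token || return 1
  echo "$archive"
}

# Clusters using embedded etcd take snapshots instead (stored under server/db/snapshots):
#   sudo k3s etcd-snapshot save --name pre-upgrade
# Restoring one on a server (with K3s stopped there) resets the cluster to that snapshot:
#   sudo k3s server --cluster-reset --cluster-reset-restore-path=<path-to-snapshot>
```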

Scaling and High Availability

While K3s is designed for resource-constrained environments, there may be scenarios where you need to scale your cluster or ensure high availability. Here are some tips:

- Horizontal Scaling: K3s supports horizontal scaling of worker nodes, allowing you to add or remove worker nodes based on your application's resource requirements.

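- Load Balancing: Use a load balancer or ingress controller to distribute traffic across multiple worker nodes, ensuring high availability and failover for your applications. K3s bundles Traefik as an ingress controller and ServiceLB for Services of type LoadBalancer, and either can be disabled in favor of your own.

- High Availability Control Plane: A default K3s install runs a single server node, but you can run multiple server nodes for higher availability and fault tolerance, either with embedded etcd (use an odd number of servers, typically three, so etcd keeps quorum when one fails) or with an external datastore such as MySQL, PostgreSQL, or etcd.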

- Monitoring and Alerting: Implement robust monitoring and alerting systems to proactively identify and address issues related to resource utilization, node failures, or application health.
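For the high-availability setup, each server node needs a matching K3s config file. A minimal sketch assuming embedded etcd and three servers: the first uses cluster-init, the others point at it with server. The helper name, addresses, and token are hypothetical; choose your own shared secret.

```shell
#!/bin/sh
# Write a K3s config.yaml (normally /etc/rancher/k3s) for one server in an embedded-etcd HA cluster.
#   role "init": the first server, which creates the etcd cluster
#   role "join": every further server, which joins through the first one
write_ha_config() {
  dir="$1"; role="$2"; first_server="$3"; token="$4"
  mkdir -p "$dir" || return 1
  if [ "$role" = "init" ]; then
    printf 'cluster-init: true\ntoken: %s\n' "$token" > "$dir/config.yaml"
  else
    [ -n "$first_server" ] || { echo "join needs the first server's address" >&2; return 1; }
    printf 'server: https://%s:6443\ntoken: %s\n' "$first_server" "$token" > "$dir/config.yaml"
  fi
  # The token is a cluster secret, so restrict the file to its owner.
  chmod 600 "$dir/config.yaml"
}

# On each server, after writing the config, install K3s in server mode:
#   curl -sfL https://get.k3s.io | sh -s - server
# To scale workers down, drain a node before removing it:
#   kubectl drain <node> --ignore-daemonsets --delete-emptydir-data
#   kubectl delete node <node>
```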

By following these best practices and tips, you can ensure that your K3s clusters are secure, resilient, and capable of meeting your application's scalability and availability requirements.

Conclusion

K3s has emerged as a compelling solution for deploying and managing Kubernetes clusters in resource-constrained environments, such as edge computing and IoT scenarios. Its lightweight nature, simplified installation and management, and compatibility with the Kubernetes ecosystem make it an attractive choice for organizations seeking to leverage the power of containerization without the complexities and overhead of a full-fledged Kubernetes cluster.

While K3s may not be suitable for all use cases, it presents a valuable opportunity for organizations to explore and experiment with Kubernetes in a streamlined and efficient manner. Whether you're deploying applications at the edge, setting up a local development environment, or simply looking to learn and experiment with Kubernetes, K3s offers a compelling alternative to traditional Kubernetes distributions.

As the adoption of edge computing and IoT continues to grow, the demand for lightweight, efficient, and scalable solutions like K3s is likely to increase. By understanding the differences between K3s and Kubernetes, as well as the best practices for deployment, security, and management, you can leverage the power of containerization and orchestration in even the most resource-constrained environments.

FAQs

Is K3s suitable for large-scale production environments?

A: While K3s is production-ready, it's primarily designed for edge computing, IoT, and resource-constrained environments. For large-scale production deployments, traditional Kubernetes (K8s) is typically more suitable due to its full feature set and extensive ecosystem support.

How does K3s handle updates and upgrades compared to K8s?

A: K3s simplifies the update process by packaging all components into a single binary. This often makes updates easier and faster compared to traditional Kubernetes. However, the process may differ for complex, multi-node setups.

How does the performance of K3s compare to K8s in resource-constrained environments?

A: K3s generally performs better in resource-constrained environments due to its lightweight design and reduced overhead. This makes it particularly suitable for edge computing and IoT scenarios where resources are limited.

Is the kubectl experience the same when working with K3s and K8s clusters?

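A: Generally, yes. kubectl itself works the same way, because K3s exposes the standard Kubernetes API. Differences come from K3s's defaults rather than from kubectl: for example, the bundled Traefik ingress controller, ServiceLB, the local-path storage class, and the kubeconfig location (/etc/rancher/k3s/k3s.yaml). Familiarizing yourself with these defaults can improve your cluster management experience.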

How does K3s handle high availability and fault tolerance?

A: K3s supports high-availability configurations with multiple server nodes, using either embedded etcd or an external datastore such as MySQL, PostgreSQL, or etcd. The setup process differs from traditional Kubernetes, so review the K3s documentation for specific guidance on implementing HA in your environment.