1. Introduction

1.1. What Are TPUs?

Tensor Processing Units (TPUs) are custom-built chips by Google, designed specifically to accelerate machine learning tasks. Unlike general-purpose CPUs or GPUs, TPUs are optimized for the types of calculations used in AI models — especially matrix operations common in neural networks.

1.2. The Rise of Custom AI Hardware

As AI models grew in size and complexity, traditional hardware struggled to keep up. This led to a shift toward custom AI accelerators like TPUs. These chips offer better performance, lower power consumption, and cost-efficiency for training and deploying AI at scale.

1.3. Why Ironwood Matters Now

Source: Google Blog

Launched in April 2025, Ironwood is Google’s latest TPU and is designed specifically for inference at scale. While most previous TPU generations focused on training, Ironwood is optimized for the real-time demands of production AI models, namely low latency and high throughput. It is engineered for applications like natural language processing and generative AI, addressing the growing need for specialized hardware that runs complex AI models efficiently. Its focus on energy efficiency also makes it a more sustainable way to power large-scale AI deployments.

2. The Evolution of Google TPUs

Google’s Tensor Processing Units (TPUs) have undergone a remarkable transformation since their debut in 2016. Each generation reflects a leap in hardware and software co-design, shaped by the evolving demands of deep learning workloads — from training to inference at scale.

2.1. Generational Overview: From TPU v1 to Trillium (v6)

Google introduced the TPU v1 as a purpose-built ASIC (Application-Specific Integrated Circuit) optimized for neural network inference. Over time, successive generations added training capabilities, increased FLOPS (floating point operations per second), and introduced support for bfloat16 and sparsity — all while scaling infrastructure with TPU Pods.

TPU Generational Evolution Table

| TPU Generation | Year | Key Capabilities | Use Case Focus | Notable Enhancements |
|---|---|---|---|---|
| TPU v1 | 2016 | Inference only | Simple models | 92 TOPS, 8-bit precision, low-latency ASIC |
| TPU v2 | 2017 | Training + Inference | Large DNNs | 180 TFLOPS and 64 GB HBM per 4-chip board, Pod support |
| TPU v3 | 2018 | Training at scale | Massive training | 420 TFLOPS per 4-chip board, liquid cooling, pods of up to 1,024 chips |
| TPU v4 | 2021 | Training + inference | Foundational models | 275 TFLOPS per chip, optical interconnects, enhanced memory bandwidth |
| TPU v5 (v5e) | 2023 | General-purpose ML | Balanced workloads | Better efficiency, dynamic scaling, support for sparsity |
| TPU v6 (Trillium) | 2024 | Foundation model training | Ultra-large models | Improved networking, memory, and energy efficiency |

2.2. Milestones Leading to Ironwood

Each TPU generation was a stepping stone toward Ironwood. Google iteratively improved three core pillars:

- Compute Power: From fixed-function units to flexible tensor cores, enabling dynamic workloads and sparsity-aware execution.

- Memory Bandwidth: Transition from DDR to HBM with higher throughput to reduce training and inference bottlenecks.

- Interconnects: From PCIe to proprietary optical mesh networks within TPU Pods, significantly boosting data exchange rates across chips.

These evolutionary refinements set the stage for Ironwood — a TPU variant purpose-built for inference, not training, unlike most previous versions.

2.3. What Ironwood Solves That Earlier Versions Didn’t

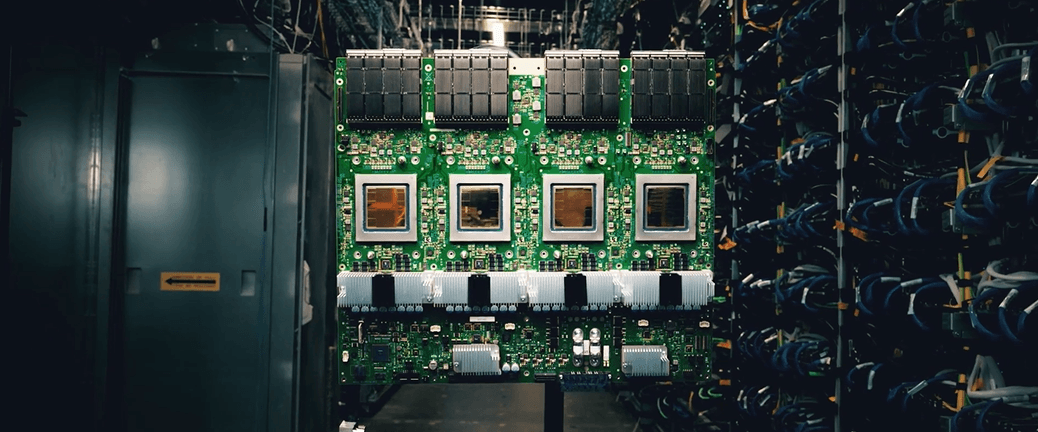

Source: Google Cloud YouTube

Ironwood addresses gaps that emerged as inference workloads began to grow faster than training workloads. Its architecture is optimized for:

- Inference-First Design: Low-latency path optimizations and fast context switching for real-time applications like search, translation, and recommendation.

- Power Efficiency: Architectural and process node improvements (likely sub-5nm) dramatically reduce power per operation.

- Scalability: Ironwood is designed to scale to thousands of chips with consistent QoS, especially under heterogeneous workloads.

- Memory and Bandwidth Optimization: Enhanced memory hierarchies reduce access latency, critical for inference-heavy transformer models.

Ironwood marks a shift in TPU philosophy — from general-purpose ML accelerators to task-specialized silicon, aligning with Google’s broader trend toward domain-specific architectures (DSAs).

3. Technical Architecture of Ironwood

3.1 Compute Capabilities

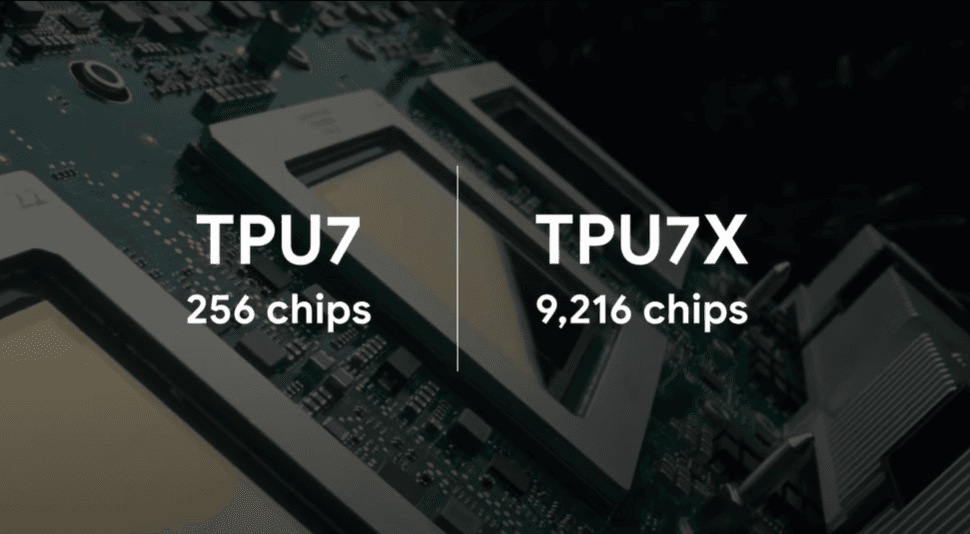

Ironwood offers 4,614 TFLOPs of peak FP8 performance per chip, making it the most powerful TPU Google has designed to date. A full pod scales to 9,216 chips, for a total of 42.5 exaflops. Google describes this as more than 24x the compute power of El Capitan, the world’s largest supercomputer, which it rates at 1.7 exaflops. The two figures are not directly comparable, however: Ironwood’s number is peak FP8 throughput, while El Capitan’s is measured at FP64 precision.

This immense computational capacity is designed to handle the most demanding workloads, including Large Language Models (LLMs) and Mixture of Experts (MoE), which require massive parallel processing power and efficient memory access. Ironwood is built to address the transition from responsive AI models to proactive, inferential AI models that generate insights and interpretations. By supporting these AI models with immense compute, Ironwood significantly accelerates both training and inference, making it ideal for real-time, scale-out inference.
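The pod-level figure follows from the per-chip number by simple multiplication. The short Python sketch below reproduces that arithmetic using only the values quoted in this section; the function and variable names are illustrative and not part of any Google tooling.

```python
# Figures quoted in this section.
PEAK_FP8_TFLOPS_PER_CHIP = 4_614   # peak FP8 TFLOPs per Ironwood chip
CHIPS_PER_POD = 9_216              # largest pod configuration
EL_CAPITAN_EXAFLOPS = 1.7          # figure used in the comparison above

TFLOPS_PER_EXAFLOP = 1_000_000     # 1 exaflop = 10^6 teraflops


def pod_exaflops(tflops_per_chip: float, chips: int) -> float:
    """Peak pod compute in exaflops, assuming perfect scaling across chips."""
    return tflops_per_chip * chips / TFLOPS_PER_EXAFLOP


pod_peak = pod_exaflops(PEAK_FP8_TFLOPS_PER_CHIP, CHIPS_PER_POD)
ratio = pod_peak / EL_CAPITAN_EXAFLOPS

print(f"Pod peak: {pod_peak:.1f} exaflops (FP8)")
print(f"Ratio to the El Capitan figure: {ratio:.1f}x")
```

These are peak numbers under perfect scaling; sustained throughput on a real workload will be lower.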

3.2 Memory: HBM Size, Speed, and Bandwidth

Each Ironwood chip is equipped with 192 GB of High-Bandwidth Memory (HBM), which is 6x the capacity of Trillium (TPU v6), enabling Ironwood to process larger models and datasets without frequent data transfers. Additionally, Ironwood achieves 7.37 TB/s of memory bandwidth per chip, 4.5x the bandwidth of Trillium, making it well-suited to handle memory-intensive workloads common in modern AI, including large-scale natural language processing and vision models.

The substantial increase in HBM capacity and bandwidth ensures Ironwood can efficiently manage the growing demands of foundation models, advanced reasoning tasks, and multi-modal AI, while reducing bottlenecks typically associated with memory throughput.
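A quick back-of-the-envelope check ties these memory figures together. The sketch below uses only numbers quoted in this document (192 GB, 7.37 TB/s, the 6x and 4.5x ratios, 4,614 peak FP8 TFLOPs, and 9,216 chips). The derived Trillium values and the FLOPs-per-byte ratio are implied by those numbers rather than stated by Google, and all names are illustrative.

```python
# Per-chip figures quoted in the text.
HBM_CAPACITY_GB = 192
HBM_BANDWIDTH_TB_S = 7.37
CAPACITY_VS_TRILLIUM = 6       # "6x the capacity"
BANDWIDTH_VS_TRILLIUM = 4.5    # "4.5x the bandwidth"
PEAK_FP8_TFLOPS = 4_614
CHIPS_PER_POD = 9_216

# Values implied for the previous generation.
implied_trillium_hbm_gb = HBM_CAPACITY_GB / CAPACITY_VS_TRILLIUM
implied_trillium_bw_tb_s = HBM_BANDWIDTH_TB_S / BANDWIDTH_VS_TRILLIUM

# Total HBM in a full pod, in decimal petabytes (1 PB = 10^6 GB).
pod_hbm_pb = HBM_CAPACITY_GB * CHIPS_PER_POD / 1_000_000

# Machine balance: TFLOPs divided by TB/s gives FLOPs per byte of HBM traffic.
flops_per_byte = PEAK_FP8_TFLOPS / HBM_BANDWIDTH_TB_S

print(f"Implied Trillium HBM: {implied_trillium_hbm_gb:.0f} GB")
print(f"Implied Trillium bandwidth: {implied_trillium_bw_tb_s:.2f} TB/s")
print(f"HBM per full pod: {pod_hbm_pb:.2f} PB")
print(f"Peak FP8 FLOPs per HBM byte: {flops_per_byte:.0f}")
```

The last ratio is the useful one for intuition: a kernel that performs fewer FP8 operations per byte fetched from HBM than this value is limited by memory bandwidth rather than by compute, which is why bandwidth matters so much for inference.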

3.3 Interconnects and Pod-Scale Networking

A defining feature of Ironwood’s design is its Inter-Chip Interconnect (ICI), which offers 1.2 TBps bidirectional bandwidth between chips. This allows Ironwood to support efficient and low-latency communication at pod scale, with 9,216 chips connected in a coordinated, synchronous manner. Ironwood’s pod-scale architecture enables vertical and horizontal scaling, making it capable of handling a range of AI tasks, from smaller inference models to massive training workloads.

At full scale, Ironwood’s 9,216-chip pods deliver 42.5 exaflops of computational power, enabling deployment of the most complex AI models. The ICI network ensures fast and synchronized data sharing between chips, which is essential for both training large models and enabling real-time inference.
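To get a feel for what the ICI figure means in practice, the sketch below estimates the minimum time to move a payload between two chips. It is a rough lower bound under stated assumptions: the quoted 1.2 figure is read as terabytes per second, link latency and protocol overhead are ignored, and the `efficiency` parameter is a hypothetical knob for the fraction of the quoted bandwidth that one transfer can actually use.

```python
ICI_BANDWIDTH_TB_S = 1.2  # figure quoted in the text, read as terabytes per second


def min_transfer_ms(payload_gb: float,
                    bandwidth_tb_s: float = ICI_BANDWIDTH_TB_S,
                    efficiency: float = 1.0) -> float:
    """Lower bound on chip-to-chip transfer time in milliseconds.

    Ignores latency and protocol overhead. `efficiency` is an assumed
    fraction (0, 1] of the quoted bandwidth available to this transfer.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    effective_gb_per_s = bandwidth_tb_s * 1_000 * efficiency
    return payload_gb / effective_gb_per_s * 1_000


for payload_gb in (0.1, 1.0, 10.0):
    full = min_transfer_ms(payload_gb)
    half = min_transfer_ms(payload_gb, efficiency=0.5)
    print(f"{payload_gb:>5.1f} GB: >= {full:.3f} ms at full rate, "
          f">= {half:.3f} ms at half rate")
```

The half-rate column is included because a bidirectional figure may mean that only part of the bandwidth is available in each direction.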

3.4 Power and Thermal Efficiency

Ironwood achieves a 2x improvement in performance per watt over Trillium (TPU v6), addressing the increasing energy demands of AI workloads. Advanced liquid cooling provides the thermal management needed to sustain peak performance under heavy, continuous AI workloads without throttling. This helps Ironwood operate reliably at scale while lowering energy consumption and operational costs.

Additionally, Ironwood is 30x more power-efficient than the first-generation Cloud TPU released in 2018, providing significantly more compute power for each unit of energy used — an essential factor as the industry moves toward more energy-conscious AI computing.

3.5 Fabrication, Packaging, and Design Insights

Ironwood is crafted using advanced semiconductor fabrication processes, which optimize transistor density for enhanced performance and energy efficiency. Its design integrates compute units, memory, and interconnects in a compact, high-performance layout. This minimizes signal loss and maximizes data throughput between components.

The modular architecture of Ironwood allows for flexible deployment in a variety of data center configurations. Whether deployed as part of a TPU Pod (scaling up to 9,216 chips) or used in smaller configurations, Ironwood provides consistent performance across diverse AI workloads. Its design ensures full integration with Google Cloud’s infrastructure, offering seamless support for Google’s AI services.

4. Scalability and Deployment

Ironwood is built to meet the infrastructure demands of an AI-first world. While earlier TPU generations focused on training scale, Ironwood is optimized for massively distributed inference. Its design enables Google to deploy intelligent services globally with consistency, efficiency, and reliability.

4.1. Ironwood Pod Architecture: 9,216-Chip Configuration

Ironwood TPUs are arranged into high-density clusters known as TPU Pods, with each pod scaling up to 9,216 custom chips. These pods form a unified, high-speed compute fabric optimized for inference.

- Optical Interconnects: Each chip is connected via custom high-bandwidth optical links, enabling rapid, low-latency communication between thousands of nodes.

- Deterministic Execution: The synchronous architecture ensures reliable and repeatable performance, which is vital for real-time services.

- Energy and Space Efficiency: Ironwood Pods are engineered for high performance per watt and optimized space utilization, making them suitable for dense deployment within existing data center footprints.

This architecture enables large-scale inference workloads to run in parallel — with predictable performance across models and regions.

4.2. Integration Into Google’s Hyperscale Infrastructure

Ironwood doesn’t operate in isolation — it is deeply embedded within Google's custom data centers, benefiting from decades of infrastructure refinement.

- Power-Aware Infrastructure: Custom power delivery systems support Ironwood’s unique workload patterns, maintaining high efficiency across variable demand.

- Advanced Cooling: Thermal management is handled via liquid cooling and heat-aware rack configurations, ensuring chips operate at optimal temperatures.

- Network Fabric Alignment: Ironwood Pods are fully integrated with Google’s internal software-defined network (SDN), ensuring that data can be fetched, processed, and returned without performance bottlenecks.

This full-stack integration ensures high availability, low maintenance overhead, and consistent delivery of AI services at planet scale.

4.3. Deployment Model and Operational Strategy

Ironwood’s rollout is centralized yet flexible, allowing Google to place pods strategically based on latency demands, power availability, and regulatory requirements. The platform supports:

- Global Edge Deployment: Strategically located pods reduce latency for end users across continents.

- Dynamic Resource Allocation: Internal orchestration tools allocate Ironwood resources based on service-level objectives and real-time traffic patterns.

- Reliability at Scale: Built-in fault tolerance ensures services remain robust even in the face of hardware failures or traffic surges.

5. AI Use Cases

Ironwood isn't just an advancement in chip design — it’s a transformative enabler of next-gen AI applications. Purpose-built for inference, it supports a wide spectrum of latency-critical, large-scale, and research-driven workloads.

5.1. Optimizing Inference at Scale

Ironwood shines in serving high-throughput, real-time predictions across a wide range of production systems:

- Batch inference for large datasets

- Online inference with strict latency guarantees

- Multi-tenant workloads with dynamic performance requirements

Its architecture balances speed and efficiency, ensuring AI services remain responsive under massive global demand.

5.2. Gemini and Foundation Model Support

Ironwood plays a vital role in deploying and running foundation models like Gemini, which require:

- High memory bandwidth

- Efficient tensor operations

- Reliable multi-modal inference capabilities

While training still leverages larger-scale infrastructure (e.g. TPU v4/v5), Ironwood ensures that these models can be served interactively and at scale, whether through cloud APIs or embedded in consumer services.

5.3. Latency-Critical Products

Many of Google’s core products are deeply latency-sensitive. Ironwood powers inference in applications like:

- Google Search: Instant ranking, summarization, and query understanding

- Translate: Real-time multilingual conversion with contextual accuracy

- Gemini (formerly Bard): Conversational AI that demands fast, fluent, and coherent responses

These products benefit from Ironwood’s predictable sub-second performance, even during peak traffic.

5.4. Research and Scientific Computation

Beyond consumer services, Ironwood is also used in high-impact domains that demand fast inference on complex models:

- Medical Imaging and Diagnostics

- Climate Modeling and Forecasting

- Scientific Simulation and ML Research

Its ability to process large models with efficiency accelerates research timelines, bridging the gap between experimentation and real-world application.

6. Performance Benchmarks

6.1. Raw Metrics: FLOPs, Bandwidth, Energy Efficiency

Ironwood delivers impressive raw compute performance, with each chip reaching a peak of 4,614 TFLOPs in FP8 precision. This makes it one of the most powerful inference-optimized chips available. It is equipped with high-bandwidth memory (HBM) that offers significantly improved data throughput, a critical feature for large language models and generative AI systems that need rapid access to vast volumes of weights and activations.

What stands out is Ironwood’s energy efficiency. With every generation, Google has focused on reducing power consumption per unit of computation. Ironwood takes this further, delivering more performance per watt than previous TPUs, helping Google meet both its performance and sustainability goals.
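To make the bandwidth point in this section concrete, the sketch below applies a common rule of thumb for autoregressive decoding: at batch size 1, every generated token requires reading all model weights from HBM once, so tokens per second cannot exceed memory bandwidth divided by the size of the weights. The per-chip bandwidth (7.37 TB/s) and capacity (192 GB) come from Section 3.2; the 70-billion-parameter model is a hypothetical example, and the result is a ceiling, not a measured benchmark.

```python
HBM_BANDWIDTH_BYTES_PER_S = 7.37e12   # 7.37 TB/s per chip (Section 3.2)
HBM_CAPACITY_BYTES = 192e9            # 192 GB per chip (Section 3.2)


def decode_tokens_per_s_upper_bound(num_params: float,
                                    bytes_per_param: float = 1.0) -> float:
    """Bandwidth-bound ceiling on single-stream decode speed for one chip.

    Assumes batch size 1 and that all weights are read once per token.
    bytes_per_param=1.0 corresponds to FP8 weights.
    """
    weight_bytes = num_params * bytes_per_param
    if weight_bytes > HBM_CAPACITY_BYTES:
        raise ValueError("weights do not fit in a single chip's HBM")
    return HBM_BANDWIDTH_BYTES_PER_S / weight_bytes


HYPOTHETICAL_PARAMS = 70e9  # illustrative model size, not from the source
bound = decode_tokens_per_s_upper_bound(HYPOTHETICAL_PARAMS)
print(f"Upper bound: about {bound:.0f} tokens/s per stream")
```

Batching multiple requests reuses each weight read across streams, which is how serving systems push aggregate throughput well above this single-stream ceiling.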

6.2. Real-World Comparisons: Latency, Throughput

Ironwood has shown strong real-world performance when compared to both TPU v4 and industry-leading GPUs like the NVIDIA H100. It reduces inference latency (the time it takes to get a result from a model), which is essential for user-facing applications like Search and Gemini (formerly Bard). Meanwhile, higher throughput enables Ironwood to handle more requests per second, making it ideal for large-scale services.

This performance is not just theoretical. Google has reported significant improvements in production environments, showing that Ironwood performs consistently under actual user load — not just in lab benchmarks.

6.3. Inference-Specific Benchmarks

Unlike earlier TPU generations that were optimized primarily for training, Ironwood is built for inference at scale. This shift reflects the growing demand for fast, efficient serving of large AI models in real-time applications.

Ironwood delivers up to 2× better inference performance per watt compared to Trillium (TPU v6), the previous generation. That means faster model responses, reduced compute costs, and improved sustainability, all while maintaining output quality. It’s especially effective for large transformer models used in NLP, image generation, and multimodal applications.

6.4. Ironwood in Production vs. Lab Results

One of the key strengths of Ironwood is its consistency. Many chips perform well in controlled environments but fall short in production due to scaling limitations or thermal issues. Ironwood, however, is engineered for sustained, real-world use.

Deployed across Google’s data centers, Ironwood powers mission-critical workloads with predictable performance, even under high concurrency. Whether it’s serving billions of queries or supporting the inference of a model like Gemini, Ironwood delivers stable throughput and minimal latency degradation — a key requirement for reliable AI services.

Performance Comparison — Ironwood vs TPU v4 vs NVIDIA H100

| Feature / Metric | TPU v4 | TPU Ironwood | NVIDIA H100 |
|---|---|---|---|
| Peak TFLOPs (FP8 unless noted) | ~275 TFLOPs (bf16; no FP8 support) | 4,614 TFLOPs | ~1,979 TFLOPs dense (3,958 with sparsity) |
| HBM Memory Size (per chip) | 32 GB | 192 GB | 80 GB |
| Interconnect Bandwidth | ~600 Gbps | 1.2 TB/s (ICI) | 900 GB/s (NVLink) |
| Pod Size (max chips) | 4,096 | 9,216 | Up to 256 (NVLink Switch System) |
| Optimized for Inference? | Partial | Yes | Yes |
| Power Efficiency (per TFLOP) | Moderate | High | High |
| Primary Use Case | Training | Inference & Serving | Training & Inference |

Note: Metrics are based on available public data and industry trends. Actual values may vary based on workload and configuration.

7. Ecosystem and Developer Experience

7.1. Software Stack and Tooling (XLA, JAX, TensorFlow)

Ironwood is tightly integrated with Google’s well-established AI software ecosystem. It supports popular frameworks like TensorFlow and JAX, and leverages the XLA (Accelerated Linear Algebra) compiler to optimize performance on TPU hardware. These tools enable seamless deployment of models with minimal manual tuning, allowing developers to focus on experimentation and innovation.

7.2. Model Portability and Compatibility

A key advantage of Ironwood is its compatibility with models developed across previous TPU generations and even GPU environments. Through abstracted APIs and platform support, developers can migrate or scale models with minimal changes. This portability lowers the barrier to adoption and reduces time-to-deployment for organizations moving workloads into the TPU ecosystem.

7.3. Debugging, Monitoring, and Visualization Tools

To support production-scale deployment, Ironwood offers robust tooling for performance monitoring and debugging. Tools like TensorBoard and Cloud TPU profiler provide visibility into model behavior, resource utilization, and bottlenecks. This transparency is essential for optimizing both performance and cost, especially when operating at scale.

7.4. Challenges in TPU Onboarding for Developers

Despite its strengths, onboarding onto TPUs can present a learning curve. Developers new to Google’s stack may encounter friction related to architecture-specific tuning, static graph compilation, or debugging parallel workloads. However, improved documentation and growing community support are gradually addressing these challenges, making Ironwood increasingly accessible to a broader audience.

8. Market and Competitive Landscape

8.1. Ironwood vs. NVIDIA A100/H100

While NVIDIA’s A100 and H100 GPUs dominate the AI hardware landscape, particularly for training and inference across industries, Ironwood is uniquely optimized for inference workloads at scale. Its specialized architecture gives it an edge in terms of energy efficiency, lower latency, and performance-per-watt, which is crucial for large-scale production environments like Google’s.

Ironwood's tight integration with Google’s internal infrastructure, such as its AI stack and software ecosystem, offers a distinct advantage when it comes to handling massive real-time inference workloads. While NVIDIA’s GPUs excel in versatility, Ironwood is engineered to optimize inference performance in a way that gives it a strategic edge for Google’s own services.

Here’s a comparison of key metrics:

| Feature/Metric | NVIDIA A100 | NVIDIA H100 | TPU Ironwood (Google) |
|---|---|---|---|
| Launch Year | 2020 | 2022 | April 2025 |
| Peak Performance (FP8 unless noted) | 312 TFLOPS (FP16/BF16; no FP8 support) | 3,958 TFLOPS (with sparsity) | 4,614 TFLOPS |
| Memory per Chip | 40 GB HBM2 | 80 GB HBM3 | 192 GB HBM |
| Memory Bandwidth | 1.6 TB/s | 3.35 TB/s (SXM) | 7.37 TB/s |
| Interconnect Bandwidth | 600 GB/s (NVLink) | 900 GB/s (NVLink) | 1.2 TB/s (ICI) |
| Inference Latency | Medium | Low | Very Low |
| Energy Efficiency | Moderate | High | 2× improvement over Trillium |
| Best Use Case | General-purpose | Training & Inference | Inference at Scale |

Ironwood's very low inference latency and very high energy efficiency set it apart, especially for real-time applications like Google Search and Gemini (formerly Bard), where sub-second responses are essential.

8.2. Ironwood vs. Intel Gaudi 3 & AWS Trainium

Intel and AWS have recently introduced Gaudi 3 and Trainium to challenge NVIDIA’s dominance in the AI hardware space. While both accelerators focus on cost-efficient training workloads and cloud-native deployment, Ironwood’s focus on scalable inference gives it an edge in serving large-scale, real-time applications.

Ironwood benefits from Google’s extensive, highly integrated infrastructure and from its mature AI software ecosystem (TensorFlow, JAX, and XLA), which supports faster adoption and smoother deployment at scale. Intel’s and AWS’s offerings, by contrast, are still maturing, especially in cloud-native integration and scalability.

Here’s a comparison of key specifications:

| Attribute | Intel Gaudi 3 | AWS Trainium | TPU Ironwood |
|---|---|---|---|
| Architecture Focus | Training | Training + Inference | Inference-Optimized |
| Launch Year | 2024 | 2023 | 2025 |
| Memory per Chip | 128 GB HBM2e | 32 GB HBM | 192 GB HBM |
| Interconnect | 200 Gbps Ethernet (24 ports) | NeuronLink | ICI (Custom) |
| Native Cloud Support | Moderate (AWS, GCP) | Strong (AWS) | Deep (GCP) |
| Software Ecosystem | PyTorch, TensorFlow, Hugging Face | PyTorch, TensorFlow | TensorFlow, JAX, XLA |
| Target Use Case | Cost-efficient Training | Scalable Inference | Real-time, Scale-out Inference |

Ironwood’s superior memory capacity, custom interconnect, and deep integration with Google Cloud give it a strategic advantage in real-time, large-scale inference, where low latency and high throughput are essential.

8.3. Pricing Model Comparisons

While Google has not publicly disclosed specific pricing for Ironwood, we can use the published pricing for TPU v5p, a recent high-end Cloud TPU, as a rough proxy.

TPU v5p Pricing (as of Q2 2025):

- On-demand: $4.20 per chip-hour

- 3-year commitment: $1.89 per chip-hour

In comparison, other public cloud accelerators have varying costs depending on their use case (training vs. inference):

| Hardware | Provider | Approx. On-Demand Cost | Pricing Notes |
|---|---|---|---|
| NVIDIA A100 | Multiple (AWS, GCP) | $2.50–$3.10/hr | Based on standard VM instances |
| NVIDIA H100 | Multiple | $3.80–$4.50/hr | Higher cost due to newer generation |
| Intel Gaudi 3 | AWS (Habana) | ~$1.80–$2.50/hr | Focused on cost-efficient training |
| AWS Trainium | AWS | ~$1.40–$2.20/hr | Lower cost for inference use cases |
| TPU v5p | Google Cloud | $4.20/hr (on-demand) | Estimated proxy for Ironwood pricing |

Note: Pricing for the accelerators mentioned may vary based on region, availability, and specific usage patterns (e.g., training vs. inference). For the most up-to-date and accurate pricing, please refer to the respective pricing pages for each GPU or TPU listed:

- Google Cloud TPU v5p

- AWS EC2 Instances with NVIDIA A100 GPUs

- AWS EC2 Instances with NVIDIA H100 GPUs

- AWS EC2 Trainium Instances

- AWS EC2 Instances with Intel Gaudi 3

Ironwood's pricing is likely higher than that of competitors focused on cost-efficient training, such as Trainium or Gaudi 3, but this reflects its specialization in inference, offering superior performance-per-watt, lower latency, and higher throughput for real-time applications.
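For budgeting purposes, the two TPU v5p proxy rates quoted above can be turned into a simple cost estimate. The sketch below is illustrative only: it uses the $4.20 and $1.89 per chip-hour figures from this section, the 256-chip, 24-hour job is a hypothetical example, and actual Ironwood pricing may differ.

```python
# TPU v5p proxy rates quoted in this section (USD per chip-hour).
ON_DEMAND_USD_PER_CHIP_HOUR = 4.20
COMMIT_3Y_USD_PER_CHIP_HOUR = 1.89


def job_cost_usd(chips: int, hours: float, rate_usd_per_chip_hour: float) -> float:
    """Total cost of running `chips` accelerators for `hours` at a flat rate."""
    return chips * hours * rate_usd_per_chip_hour


# Fractional saving of the 3-year commitment relative to on-demand.
commit_discount = 1 - COMMIT_3Y_USD_PER_CHIP_HOUR / ON_DEMAND_USD_PER_CHIP_HOUR

# Hypothetical workload: 256 chips for one day.
CHIPS, HOURS = 256, 24
on_demand_cost = job_cost_usd(CHIPS, HOURS, ON_DEMAND_USD_PER_CHIP_HOUR)
committed_cost = job_cost_usd(CHIPS, HOURS, COMMIT_3Y_USD_PER_CHIP_HOUR)

print(f"Commitment discount: {commit_discount:.0%}")
print(f"On-demand: ${on_demand_cost:,.2f}")
print(f"3-year commitment: ${committed_cost:,.2f}")
```

The committed rate only pays off if the chips stay busy for most of the term, so utilization should be estimated before choosing between the two.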

8.4. Ironwood’s Strategic Role in Google’s AI Stack

Ironwood plays a critical role in Google’s AI infrastructure strategy. It powers inference for core products like Gemini (formerly Bard), Search, and YouTube recommendations, all of which require ultra-low latency and real-time responsiveness to provide seamless user experiences.

Beyond internal use, Ironwood is also integrated into Google Cloud, enabling enterprise customers to leverage the same advanced infrastructure that Google uses internally. This integration offers:

- Consistency in performance across environments.

- Scalability to handle growing AI workloads.

- Reliability in production environments.

By focusing on inference at scale, Ironwood helps Google maintain its competitive edge as AI applications continue to scale globally, reducing latency, energy consumption, and costs while improving overall throughput.

This positions Ironwood as an essential part of Google’s AI-first vision — powering real-time AI services for billions of users worldwide, while enabling enterprises to deploy cutting-edge AI models with high reliability and efficiency.