Importance of Cost Optimization in Cloud Containers

The efficiency of cloud container workloads depends not just on performance, but on precise cost control at every layer of compute. Containers offer flexibility, speed, and portability, but without proper cost control, these benefits can be counterbalanced by inefficiencies in resource usage, especially at scale.

Cloud providers like AWS offer multiple compute options for container workloads, each with distinct pricing models and operational characteristics. While Amazon EC2 has long been the default for container deployment, it requires active infrastructure management and careful cost planning. In contrast, AWS Fargate introduces a serverless model that removes host management and charges for the vCPU and memory your tasks request, for as long as they run.

This blog explores the financial and operational trade-offs between EC2 and Fargate, focusing specifically on how and when Fargate presents a measurable cost-saving opportunity. With that in mind, we begin by examining the core compute options available for container workloads on AWS and what they mean for your cost strategy.

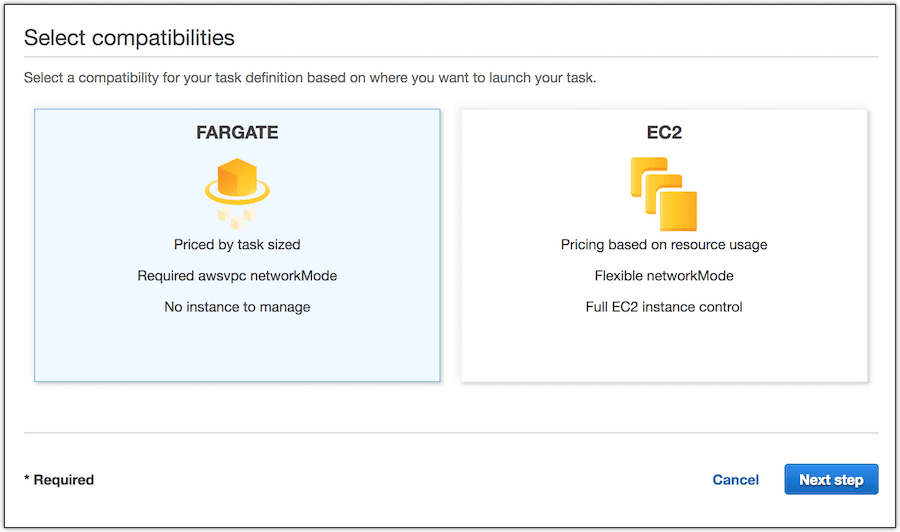

AWS Compute Options for Container Workloads: EC2 and Fargate

Source - AWS

Understanding the cost implications of container workloads starts with the underlying compute choices. In AWS, this typically comes down to two options: EC2 and Fargate. Both support container orchestration through ECS or EKS, but they differ in how resources are provisioned, managed, and billed. These differences have a direct impact on how you control spending and scale workloads.

Before comparing cost models, it's important to clearly understand what each service offers and where its operational trade-offs begin to influence financial outcomes.

Overview of EC2

Amazon EC2 has long been the go-to choice for deploying containers in AWS. It provides configurable instances, giving you full control over the environment your containers run in. This includes the ability to define the operating system, customize networking, tune performance, and access the instance-level view of your infrastructure.

This level of flexibility supports a wide range of technical requirements, from strict compliance needs to specialized performance tuning. For teams that need granular control over their infrastructure, EC2 delivers a familiar, powerful toolset.

However, with that control comes responsibility. Managing EC2-based container workloads requires you to:

- Provision and scale instances manually or through auto-scaling groups

- Handle OS-level patching and security updates

- Monitor for underutilized resources to avoid cost waste

EC2 tends to be a strong fit when workloads are stable and predictable, especially when paired with cost-saving instruments like Reserved Instances or Savings Plans. But it places a significant portion of infrastructure management on your team, and that overhead carries both time and cost implications.

Overview of Fargate

AWS Fargate takes a different approach by removing the need to manage servers entirely. It’s a serverless compute engine for containers, where you define task-level requirements (like CPU and memory), and AWS handles the provisioning, scaling, and maintenance behind the scenes.

With no need to size instances or maintain the OS, Fargate simplifies operations significantly. You pay for the vCPU and memory your tasks request, and only while they’re running. This granularity can be highly beneficial in environments where workloads are short-lived, variable, or difficult to predict.

Fargate is especially effective for teams focused on speed and agility, or those looking to reduce time spent on infrastructure. It allows development teams to deploy faster, operate leaner, and scale without overprovisioning hosts.

While Fargate may have a higher per-unit compute cost compared to EC2, it often reduces total cost when you factor in management overhead and resource efficiency, especially for intermittent or unpredictable workloads.

This distinction between EC2 and Fargate forms the basis for deeper cost considerations.

Cost Analysis: Limitations of Hourly Pricing Models

Hourly pricing is often the first metric used to evaluate EC2 and Fargate. However, focusing on price per unit of compute alone leads to incomplete and frequently misleading cost assumptions. Pricing models offer a baseline, but understanding the total cost impact requires evaluating how infrastructure is used, managed, and optimized over time.

This section moves beyond headline rates and examines the practical cost dynamics of running containers on EC2 versus Fargate.

Comparing Pricing Models of EC2 and Fargate

Let’s consider a typical comparison:

- A t3.medium EC2 instance (2 vCPU, 4 GB memory) costs around $0.0416/hour

- A similar Fargate task (2 vCPU, 4 GB memory) costs about $0.0928/hour

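This seems straightforward: EC2 looks more cost-effective. But that only holds if you can fully utilize the EC2 instance around the clock. T3 instances are also burstable, so sustained CPU use above their baseline draws down CPU credits and, in the default unlimited mode, can add charges.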

Now consider this:

- If your workload runs just 4 hours per day, EC2 charges a full-day cost unless you automate instance shutdowns (which adds complexity).

- Fargate, in contrast, charges only for those 4 hours, with no idle billing or setup overhead.

Multiply this across environments (dev, staging, production) and the difference quickly compounds. Even with EC2 Savings Plans, the benefits depend on accurate usage forecasting and long-term stability, conditions that many teams can't guarantee.
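The arithmetic behind this comparison can be sketched in a few lines of Python. The hourly rates are the illustrative figures quoted above, not live prices (region and pricing date are assumptions), and the model ignores storage, networking, and discounts.

```python
# Illustrative hourly rates from the comparison above; verify against
# current AWS pricing for your region before relying on them.
EC2_HOURLY = 0.0416      # t3.medium On-Demand (2 vCPU, 4 GB)
FARGATE_HOURLY = 0.0928  # Fargate task sized at 2 vCPU, 4 GB


def ec2_daily_cost(hourly_rate: float, hours_provisioned: float = 24.0) -> float:
    """An instance is billed while it is running, busy or idle."""
    return hourly_rate * hours_provisioned


def fargate_daily_cost(hourly_rate: float, hours_running: float) -> float:
    """A Fargate task is billed only while the task itself is running."""
    return hourly_rate * hours_running


def breakeven_hours(ec2_hourly: float, fargate_hourly: float,
                    ec2_hours: float = 24.0) -> float:
    """Daily task runtime at which Fargate costs as much as the instance."""
    return ec2_daily_cost(ec2_hourly, ec2_hours) / fargate_hourly


if __name__ == "__main__":
    print(f"EC2, always on:   ${ec2_daily_cost(EC2_HOURLY):.2f}/day")
    print(f"Fargate, 4 h/day: ${fargate_daily_cost(FARGATE_HOURLY, 4):.2f}/day")
    hours = breakeven_hours(EC2_HOURLY, FARGATE_HOURLY)
    print(f"Break-even: {hours:.1f} h/day ({hours / 24:.0%} of the day)")
```

With these rates, the always-on instance costs about $1.00 a day against roughly $0.37 for four hours of Fargate, and the break-even sits near 10.8 hours of runtime per day. Below that, Fargate is cheaper on compute alone, before any management overhead is counted.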

Cost Impact of Infrastructure Management

Even when EC2 is “cheaper” per unit, managing the underlying infrastructure creates an operational cost center of its own.

Teams typically invest time in:

- Instance lifecycle management (launching, resizing, terminating)

- Patching and AMI updates for security and compliance

- Scaling policies and automation setup

- Monitoring and alert tuning for under-/over-utilization

For example, a mid-sized team may spend 10–15 engineer-hours per week just maintaining EC2-based container infrastructure. At a fully loaded engineering cost of $80–$120/hour, this equates to $3,200–$7,200 per month (assuming a four-week month), exclusive of compute costs.
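A sketch of that labor arithmetic, using the hours and rates from the example. The four-week default is the assumption that reproduces the quoted range; a calendar-average month is slightly longer.

```python
def monthly_ops_cost(hours_per_week: float, hourly_rate: float,
                     weeks_per_month: float = 4.0) -> float:
    """Engineering labor spent maintaining EC2 container hosts, per month."""
    return hours_per_week * hourly_rate * weeks_per_month


if __name__ == "__main__":
    low = monthly_ops_cost(10, 80)
    high = monthly_ops_cost(15, 120)
    print(f"4-week month:  ${low:,.0f} to ${high:,.0f}")
    # A calendar-average month has 52 / 12 (about 4.33) weeks.
    low_avg = monthly_ops_cost(10, 80, 52 / 12)
    high_avg = monthly_ops_cost(15, 120, 52 / 12)
    print(f"Average month: ${low_avg:,.0f} to ${high_avg:,.0f}")
```

Using 52 / 12 weeks per month raises the range to roughly $3,470–$7,800, so the quoted figures are, if anything, conservative.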

Fargate removes most of this layer. By shifting host-level responsibility to AWS, teams can focus on building and deploying applications without managing the underlying instances. This reduction in operational effort results in faster deployment cycles and less risk of misconfiguration or underutilization.

Why Pricing Alone Is Insufficient for Cost Decisions

Relying solely on hourly pricing can lead to misleading conclusions. Two environments with identical per-hour rates may produce different outcomes in real-world usage.

Several indirect cost factors often go unaccounted for:

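| Cost Factor | EC2 | Fargate |
| --- | --- | --- |
| Hourly rate per unit | Lower, especially with Reserved Instances or Savings Plans | Higher (discounts available through Compute Savings Plans and Fargate Spot) |
| Idle cost | Incurred for any provisioned but unused instance capacity | No idle hosts; tasks are billed only while running, for the resources they request |
| Operational overhead | High | Minimal |
| Scaling complexity | Requires instance and task scaling setup and tuning | Task-level scaling only; no instance capacity to manage |
| Cost alignment with workload | Difficult in dynamic workloads | Closely aligned, provided tasks are right-sized |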

A meaningful cost comparison must extend beyond list prices. It must consider workload behavior, deployment patterns, team structure, and management complexity. Fargate often delivers cost benefits not because it is cheaper per hour, but because it removes hidden inefficiencies that accumulate silently in traditional EC2-based container environments.

The real question is not, “Which service is cheaper per hour?”

It’s, “Which model maps more efficiently to how your workloads behave?”

If you’re relying on EC2 without tightly controlled provisioning and precise automation, you’re likely paying more than needed every single day.

Hidden Costs That Affect Total Spend

Even well-structured cloud environments experience financial leakage not from pricing errors, but from how infrastructure is used, managed, and scaled.

Beyond headline rates and billing dashboards, certain cost drivers remain overlooked. These are not just minor inefficiencies; they are often the difference between scalable cost control and silent overspend.

This section surfaces the three most common hidden cost contributors in EC2-based container environments and explains how Fargate directly mitigates them.

Costs from Overprovisioning and Idle Resources

With EC2, the responsibility of provisioning for peak demand often results in excess capacity being deployed. For example:

- A production service designed to handle peak traffic may run on 8 vCPU and 16 GB memory, even if actual utilization hovers at 30–40% most of the day

- If that instance runs 24/7, roughly 60–70% of allocated compute is routinely idle, yet still billed in full

Even with auto-scaling, EC2 users must define instance types, scaling thresholds, and cooldown periods, which rarely match real-time workload behavior precisely.
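The idle share in this example can be estimated directly. This is a sketch assuming a flat average utilization and the common 730-hours-per-month convention; the $0.34 hourly rate is a hypothetical stand-in for an 8 vCPU / 16 GB instance, not a quoted price.

```python
def idle_fraction(avg_utilization: float) -> float:
    """Share of provisioned capacity that is paid for but unused."""
    if not 0.0 <= avg_utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return 1.0 - avg_utilization


def monthly_idle_spend(hourly_rate: float, avg_utilization: float,
                       hours: float = 730.0) -> float:
    """Portion of an always-on instance's monthly bill that pays for idle capacity."""
    return hourly_rate * hours * idle_fraction(avg_utilization)


if __name__ == "__main__":
    RATE = 0.34  # hypothetical hourly rate for an 8 vCPU / 16 GB instance
    for util in (0.30, 0.40):
        print(f"{util:.0%} utilized: {idle_fraction(util):.0%} idle, "
              f"${monthly_idle_spend(RATE, util):,.2f}/month on idle capacity")
```

Averages hide peaks, so treat this as an upper bound on what right-sizing alone can recover: some headroom is needed to absorb the traffic the instance was sized for.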

Fargate removes most of this overhead. Since it provisions at the task level, you pay for the vCPU and memory each task requests, for the duration it runs. You still choose a task size, but there is no need to size instances for peak in advance or manage scaling logic around instance lifecycles.

This shift from provisioning for potential to paying based on execution removes one of the most persistent and underestimated causes of inflated compute cost.

Operational Effort and Management Overhead

Responsibility for EC2-based workloads carries recurring costs in both time and expertise. These include:

- AMI maintenance, patch cycles, and OS-level hardening

- Scaling infrastructure up during surges and down during quieter periods

- Monitoring, logging, and responding to instance-level issues

- Managing EC2 lifecycle events, failures, and spot interruptions

These tasks require engineering effort that must be planned, prioritized, and maintained. In container environments with multiple services or teams, this can consume dozens of hours per month, impacting both delivery velocity and staffing costs.

Fargate removes the need to manage the compute layer entirely. Teams shift their focus from host and instance management to container and application optimization. This not only improves operational efficiency but also limits the need for specialized infrastructure skillsets on every team.

Total Cost of Ownership Considerations

Total Cost of Ownership (TCO) extends far beyond the AWS invoice. To assess container infrastructure cost accurately, consider:

- Direct spend – what you pay for compute, memory, storage, and bandwidth

- Operational cost – time and resources required to run and maintain the environment

- Resilience cost – risks from manual errors, downtime, or inconsistent scaling

- Tooling overhead – cost of third-party management tools needed to bridge EC2 limitations

When viewed through this lens, Fargate introduces a structurally different cost profile:

- No idle host capacity to pay for

- No instance management, no OS patching burden

- Scaling policies defined at the task level only, with no instance capacity to automate

- Spend that tracks actual task runtime

For teams operating in fast-moving or highly variable environments, this model significantly reduces total cost of ownership, not only by optimizing compute spend but by minimizing the time, tooling, and risk required to support it.

In the next section, we’ll examine the specific types of workloads and team profiles where Fargate not only reduces TCO but becomes the most cost-effective strategy for container compute.

When Fargate Is More Cost-Effective: Use Cases and Examples

Fargate becomes cost-effective not by lowering unit prices but by eliminating the operational and architectural inefficiencies that creep into EC2-based environments. While its per-second billing model is often cited as a benefit, the real savings emerge when container workloads are shaped by time sensitivity, unpredictability, or organizational agility.

Below are scenarios where switching to Fargate isn’t just feasible; it’s the smarter financial choice.

- Short-Duration and Intermittent Workloads

Workloads that don’t run continuously or run in response to discrete events are poorly matched to EC2’s static capacity model. Consider a data-processing container triggered hourly or a CI job that runs for 10 minutes at a time. Even with automation, the underlying EC2 instances must remain active or ready to scale, which often results in hours of idle billing for just minutes of actual compute.

Fargate eliminates that idle layer. Resources are provisioned for exactly the duration needed, with no pre-warming or instance management. This results in immediate savings, particularly for tasks that run for less than 45% of the day or vary significantly in timing.
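A rough model of the hourly-job case, assuming Linux tasks (which Fargate bills per second with a one-minute minimum) and reusing the illustrative 2 vCPU / 4 GB rates from the pricing comparison earlier. The job schedule is hypothetical.

```python
EC2_HOURLY = 0.0416      # illustrative t3.medium On-Demand rate
FARGATE_HOURLY = 0.0928  # illustrative 2 vCPU / 4 GB Fargate rate
MIN_BILLED_SECONDS = 60  # one-minute minimum per Linux task


def billed_seconds(runtime_seconds: float) -> float:
    """Fargate rounds very short Linux tasks up to the one-minute minimum."""
    return max(runtime_seconds, MIN_BILLED_SECONDS)


def fargate_monthly_cost(hourly_rate: float, runtime_seconds: float,
                         runs_per_day: int, days: int = 30) -> float:
    """Cost of a recurring job billed only for its (rounded) runtime."""
    billed_hours = billed_seconds(runtime_seconds) * runs_per_day * days / 3600
    return hourly_rate * billed_hours


def ec2_monthly_cost(hourly_rate: float, days: int = 30) -> float:
    """An instance kept available around the clock to run the same jobs."""
    return hourly_rate * 24 * days


if __name__ == "__main__":
    # Hypothetical job triggered hourly that runs for 10 minutes.
    fargate = fargate_monthly_cost(FARGATE_HOURLY, 600, runs_per_day=24)
    ec2 = ec2_monthly_cost(EC2_HOURLY)
    print(f"Fargate: ${fargate:.2f}/month, EC2: ${ec2:.2f}/month")
```

The one-minute minimum only matters for very short tasks: a 20-second task is billed as 60 seconds, so batching many tiny jobs into fewer, longer tasks can lower the bill.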

For example, a SaaS company running hourly data transformation jobs saw a 62% reduction in monthly compute spend by shifting those pipelines from EC2-backed ECS to Fargate. The change eliminated idle costs and reduced orchestration complexity.

- Agile Teams and Startups with Rapid Deployment Needs

Speed is expensive when infrastructure becomes a dependency. In EC2-backed environments, even small teams are forced to manage AMIs, patch schedules, security groups, and scaling policies, costs that do not show up on the pricing sheet but delay product delivery.

Fargate offers a compute layer that adjusts in real time to build and deploy cycles. For engineering teams focused on delivering features rather than maintaining platforms, it unlocks significant operational efficiency. The advantage isn’t only technical; it’s strategic.

A startup with a 5-person team reported saving 40+ hours per month after migrating development and staging environments to Fargate. The team no longer waited for instance boot times, and their deployment cycles improved by over 30%.

- Variable and Unpredictable Workloads

Few container workloads operate at constant scale. Sudden API surges, marketing-driven traffic spikes, or seasonality introduce variability that EC2 handles by overprovisioning or underperforming. This results in either unnecessary spending or reactive scaling failures.

Fargate changes this equation by tying cost directly to container activity, not instance capacity. Combined with ECS Service Auto Scaling, task counts follow actual demand, which removes the need to forecast instance capacity with precision.

A logistics platform that runs event-driven processing across several locations reduced its cost variability by 45% after consolidating burst-heavy workloads on Fargate. Its engineering team also spent less time on scaling logic and cost anomaly investigations.

Cost Analysis: Real-World Savings Examples

Organizations that moved sporadic, dynamic, or non-production workloads to Fargate report:

- 30–70% reduction in total container compute spend, even after accounting for Fargate’s higher unit price

- Sub-30-minute container environment setup, compared to multi-day EC2 provisioning cycles

- No need for Reserved Instance commitments, which simplifies finance forecasting and removes lock-in

Example

A fintech company moved its CI/CD test runners to Fargate. Their EC2-based Jenkins agents ran 24/7, despite workloads lasting ~12 minutes each. After switching to Fargate on-demand runners, they reduced monthly cost from $7,400 to $2,300, a 69% drop, by paying only for the runtime and eliminating instance overhead entirely.

Common Mistakes in Container Cost Optimization

Cost-saving attempts often fail because they focus on price per hour instead of cost per outcome. Avoid these mistakes:

- Benchmarking EC2 vs. Fargate using flat pricing tables, without aligning to your workload behavior

- Assuming Fargate is more expensive simply because its pricing looks higher, while ignoring the 50–80% idle capacity EC2 often carries

- Applying Reserved Instances or Savings Plans to workloads that don’t justify a long-term commitment

The more dynamic or fragmented your workload portfolio becomes, the more likely these assumptions are to work against you. A clear evaluation of task duration, frequency, idle ratios, and operational overhead typically reveals that the most expensive compute is not the one with the highest rate; it’s the one you didn’t need in the first place.

When EC2 Remains the Better Cost Option

While Fargate simplifies container operations, its strengths do not apply uniformly across all workload types. There are critical scenarios, often at scale or under specific technical constraints, where EC2 delivers lower cost, tighter control, and operational flexibility that Fargate cannot replicate.

For organizations that can plan their compute usage, manage infrastructure efficiently, or operate under strict control requirements, EC2 remains the more economical and operationally sound choice.

- Stable, High-Volume Workloads

The pay-per-task model offered by Fargate provides fewer benefits when containers support services with constant demand, like background workers, real-time data platforms, or APIs. These workloads benefit from infrastructure that can remain provisioned at a lower, predictable baseline cost.

EC2 enables this with a steady hourly rate that becomes significantly cheaper when paired with Reserved Instances or Savings Plans.

A high-throughput streaming platform running 24/7 at ~75% CPU utilization across 100+ containers migrated to EC2 and saved over 48% in monthly compute costs, primarily by shifting from per-second billing to long-term capacity pricing.

The trade-off here is justified: long-lived services gain little from Fargate’s pay-per-task model and are better optimized through capacity-based planning.

- Effective Use of Savings Plans and Reserved Instances

Fargate is eligible for Compute Savings Plans, but EC2 allows deeper cost commitments, especially for predictable workloads over a 1- or 3-year horizon. With EC2, teams can choose among Standard Reserved Instances, Convertible RIs, or EC2 Instance Savings Plans to optimize spend further.

For example:

- 1-year Reserved Instances offer up to a 40% discount

- 3-year Reserved Instances can reach discounts of 60–72%

- Spot Instances allow further cost reduction for non-critical or fault-tolerant workloads

A SaaS provider running 16,000+ container hours daily moved their backend processing layer to EC2 and structured it using a mix of 3-year RIs and spot pools, resulting in $420,000 in annual savings compared to their previous Fargate setup.
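Commitment discounts move the break-even point against Fargate. The sketch below reuses the illustrative t3.medium and 2 vCPU / 4 GB Fargate rates from the pricing comparison and applies the discount percentages listed above as flat reductions; real RI and Savings Plan rates vary by instance family, term, and payment option.

```python
EC2_ON_DEMAND_HOURLY = 0.0416  # illustrative t3.medium rate
FARGATE_HOURLY = 0.0928        # illustrative 2 vCPU / 4 GB Fargate rate


def effective_hourly(on_demand_rate: float, discount: float) -> float:
    """Hourly rate after a commitment discount (0.40 means 40% off)."""
    return on_demand_rate * (1.0 - discount)


def fargate_breakeven_hours(ec2_hourly: float, fargate_hourly: float) -> float:
    """Daily Fargate runtime that costs the same as a 24/7 instance."""
    return ec2_hourly * 24 / fargate_hourly


if __name__ == "__main__":
    for discount in (0.0, 0.40, 0.60, 0.72):
        rate = effective_hourly(EC2_ON_DEMAND_HOURLY, discount)
        hours = fargate_breakeven_hours(rate, FARGATE_HOURLY)
        print(f"{discount:.0%} off EC2: Fargate is cheaper below {hours:.1f} h/day")
```

Under these assumptions the break-even falls from about 10.8 hours a day on demand to roughly 6.5 hours at a 40% discount and about 4.3 hours at 60%. The sketch leaves Fargate undiscounted; Compute Savings Plans also apply to Fargate and would push the break-even back up.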

- Requirements for Detailed OS and Network Control

Certain containerized applications require deep customization beyond what Fargate allows. These include:

- Installing low-level agents, device drivers, or kernel modules

- Running privileged containers or DaemonSets

- Enforcing static IPs or custom VPC topologies

- Using shared volumes or host-level caching mechanisms

Fargate’s abstraction model restricts access to the underlying host and OS, making it unsuitable for workloads that depend on infrastructure-level behavior.

Teams running service meshes, intrusion detection agents, or tightly-coupled logging systems typically prefer EC2, where such agents and configurations can be deployed without platform limitations.

Performance and Compliance Factors

For workloads where performance efficiency and regulatory compliance are operational requirements, not just preferences, EC2 offers the level of control and configuration that Fargate does not.

When latency thresholds, workload placement, or data residency must be explicitly governed, EC2 enables you to tailor the runtime environment to the application, infrastructure, and audit criteria involved.

With EC2, teams can:

- Pin CPU cores or configure NUMA-aware applications to ensure compute-intensive services operate without unpredictable performance variations

- Deploy on Dedicated Hosts or Bare Metal instances for tenant isolation, hardware-level control, or meeting licensing constraints

- Implement host-level compliance controls to meet standards such as HIPAA, PCI-DSS, SOC 2, and ISO 27001

- Align compute placement with data sovereignty laws or sector-specific regulatory boundaries

These capabilities become essential for industries like healthcare, finance, and defense, where environments must be explicitly configured and verifiable by auditors.

One healthcare analytics provider migrating from a managed Kubernetes service to EC2 improved SLA compliance and achieved a 30% reduction in latency by leveraging dedicated tenancy and optimizing the OS kernel for processing-intensive workflows, while also passing HIPAA audits with full host-level control.

In these contexts, the cost advantage is not just in the price per core; it’s in the risk avoided, the performance delivered, and the compliance achieved. Fargate simplifies infrastructure, but EC2 lets teams make that infrastructure meet the standard.

In the next section, we’ll explore how to track and govern container costs effectively across both Fargate and EC2, ensuring that compute decisions remain financially sustainable at scale.

Tools to Monitor and Control Container Costs

Visibility is a prerequisite for cost control, especially in dynamic container environments where resource allocation shifts constantly. Whether you're running workloads on EC2 or Fargate, effective monitoring enables proactive decision-making and prevents uncontrolled cost spikes.

- AWS Native Tools for Cost Visibility

AWS provides foundational tools that, when configured properly, can offer detailed insights into container-level spend patterns:

- AWS Cost Explorer – Enables trend analysis and breakdowns by service, usage type, or cost allocation tag. Ideal for visualizing how container workloads contribute to overall spend across EC2 and Fargate.

- AWS Budgets – Allows you to define thresholds for forecasted and actual spend, triggering alerts before cost overruns occur.

- Amazon CloudWatch – Covers CPU, memory, and networking metrics for containers. Critical for understanding real-time resource usage and identifying underutilized workloads or scaling inefficiencies.

- AWS Cost and Usage Reports (CUR) – Provides line-item billing data for teams that require granular integration with analytics platforms or dashboards.

By combining Cost Explorer with ECS/Fargate-specific tagging, teams have cut idle spend by over 20% within a quarter, simply by identifying underused services.
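A minimal sketch of that tag-based breakdown, assuming the boto3 Cost Explorer client and a hypothetical `team` cost allocation tag that has been activated and propagated to ECS tasks or EC2 instances. The service names in the filter are as Cost Explorer typically reports them and should be verified in your own account.

```python
from collections import defaultdict
from typing import Any


def build_cost_request(start: str, end: str, tag_key: str) -> dict[str, Any]:
    """Keyword arguments for Cost Explorer's GetCostAndUsage call.

    Dates are ISO strings (YYYY-MM-DD); the end date is exclusive.
    """
    return {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
        "Filter": {
            "Dimensions": {
                "Key": "SERVICE",
                # Assumed service names; confirm them in Cost Explorer.
                "Values": [
                    "Amazon Elastic Container Service",
                    "Amazon Elastic Compute Cloud - Compute",
                ],
            }
        },
    }


def cost_by_tag_value(response: dict[str, Any]) -> dict[str, float]:
    """Sum UnblendedCost per tag value across all returned time periods."""
    totals: dict[str, float] = defaultdict(float)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            # Tag group keys come back as "tagKey$tagValue"; untagged is "tagKey$".
            _, _, value = group["Keys"][0].partition("$")
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[value or "(untagged)"] += amount
    return dict(totals)
```

In practice the request would be sent with `boto3.client("ce").get_cost_and_usage(**build_cost_request("2024-01-01", "2024-02-01", "team"))`. A large "(untagged)" bucket is itself a finding: spend that cannot be attributed cannot be optimized. The sketch does not follow `NextPageToken` for paginated results.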

- Third-Party Solutions and Integrations

AWS-native tools provide foundational visibility, but third-party platforms deliver advanced forecasting, automation, and cost attribution capabilities, especially at scale:

- Kubecost, CloudHealth, and Harness offer real-time cost allocation by namespace, service, or environment, something critical in multi-team deployments.

- These platforms also support chargeback models and predictive budgeting, useful for organizations with multiple engineering or business units sharing infrastructure.

- Many integrate directly with Kubernetes, ECS, and CI/CD pipelines, enabling automatic scaling and resource right-sizing based on live utilization and cost thresholds.

Organizations using integrated platforms have reported 30–40% improvement in container cost-efficiency through automated resource rebalancing and anomaly detection.

Key Metrics for Cost Monitoring

Effective cost optimization starts with tracking the right indicators. Focus on metrics that expose inefficiencies and inform decisions:

- CPU and memory utilization per task or container – Highlights overprovisioning or uneven resource requests

- Average task duration – Useful for Fargate, where billing is time-dependent

- Idle time and underutilized capacity – Indicates wasted compute on EC2

- Scaling events and frequency – Shows if auto-scaling is configured effectively or is reacting inefficiently

Teams that surface these KPIs through dashboards, thresholds, and alerts can manage compute expenses without compromising application performance, and gain a competitive advantage in doing so.
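The utilization KPI can be reduced to a few numbers per task. This is a sketch that assumes you have already exported CPU or memory samples (for example from CloudWatch) as percentages of the task's reservation; the choice of mean and p95 is an illustrative convention, not an AWS-defined metric.

```python
import statistics
from dataclasses import dataclass


@dataclass
class TaskUtilization:
    mean_pct: float
    p95_pct: float
    headroom_pct: float  # share of the reservation unused even at p95


def summarize_utilization(samples_pct: list[float]) -> TaskUtilization:
    """Summarize utilization samples given as percent of the task's reservation."""
    if len(samples_pct) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(samples_pct)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(samples_pct, n=20)[-1]
    return TaskUtilization(mean, p95, max(0.0, 100.0 - p95))
```

A task whose p95 stays far below its reservation is a right-sizing candidate; using p95 rather than the mean keeps short peaks from being sized away.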

The 5-Minute Decision Framework

Making a compute decision between EC2 and Fargate doesn’t need to be complex. In most cases, a well-structured set of questions can lead to a clear and defensible choice. This decision framework is designed to provide clarity within minutes, not weeks of analysis, based on actual workload behavior, team structure, and long-term cost implications.

Here’s how to make that decision quickly and with confidence.

Understand Your Workload Runtime

If your containers run more than 12 hours a day, every day, EC2 with reserved pricing likely delivers a lower total cost. But if your workloads are short-lived, bursty, or event-triggered, Fargate’s per-second billing gives you an immediate edge: you pay only while containers run (with a one-minute minimum per Linux task).

Then, look at your team's focus.

If you already have a team managing infrastructure, EC2's overhead may be a non-issue. But if your engineering priorities are speed, not server patching, Fargate’s no-management model lets your team stay focused on shipping, not scaling.

Ask about control.

Need access to the host OS, custom kernel modules, or compliance-specific configurations? EC2 gives you full control. If not, Fargate removes complexity without limiting capability.

Forecasting matters.

Confident in your compute usage for the next 12–36 months? EC2’s reserved options lock in serious savings. Uncertain or scaling fast? Fargate gives you flexibility without financial commitment.

Finally, total cost isn’t just pricing.

One EC2 hour costs less than one Fargate hour, but that comparison holds only if you ignore the hidden labor, downtime, and scaling overhead. Real cost means real usage plus real time. And engineer time? That’s rarely free.
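The questions above can be encoded as a quick checklist. This is one possible encoding: the ordering (host control decides outright) and the two-out-of-three vote on the remaining questions are hypothetical simplifications, and the final question about total cost still needs real numbers.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    hours_per_day: float         # typical daily runtime
    needs_host_access: bool      # kernel modules, privileged containers, host agents
    has_infra_team: bool         # team already operates EC2 capacity
    usage_forecast_years: float  # how far ahead usage can be forecast confidently


def recommend(w: Workload) -> tuple[str, list[str]]:
    """Apply the framework's questions in order; returns (choice, reasons)."""
    if w.needs_host_access:
        return "EC2", ["requires host OS or kernel-level control"]
    reasons: list[str] = []
    ec2_points = 0
    if w.hours_per_day > 12:
        ec2_points += 1
        reasons.append("runs more than 12 hours a day")
    else:
        reasons.append("short-lived or intermittent runtime")
    if w.has_infra_team:
        ec2_points += 1
        reasons.append("existing team can absorb EC2 management")
    else:
        reasons.append("no dedicated infrastructure team")
    if w.usage_forecast_years >= 1:
        ec2_points += 1
        reasons.append("usage forecastable for 1+ years (reservations possible)")
    else:
        reasons.append("uncertain usage; avoids long-term commitments")
    return ("EC2" if ec2_points >= 2 else "Fargate"), reasons
```

For example, `recommend(Workload(4, False, False, 0.5))` returns `"Fargate"` with its reasons, which makes the rationale easy to record alongside the decision.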

3 Migration Mistakes That Cost Teams Thousands

Misjudging Fargate migration can quickly erode potential savings. These are the most common errors and how to avoid them.

- Migrating Everything at Once

Shifting all workloads to Fargate without workload segmentation often backfires. Long-running services on Fargate may cost 30–40% more than EC2 with Reserved Instances.

Start with short-lived or event-driven workloads such as CI/CD pipelines, batch jobs, or staging environments. Keep persistent services on EC2, where predictable usage benefits from Savings Plans.

- Lifting EC2 Configurations As-Is

Transferring EC2 CPU/memory allocations directly to Fargate can lead to overprovisioning. Fargate bills for the CPU and memory each task requests, not what it actually uses, so an oversized task definition is paid for in full.

Monitor existing workloads for 1–2 weeks. Many use 30–50% less CPU/memory than allocated. Resize before migrating.
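Resizing means mapping observed peaks onto a valid Fargate task size. The sketch below picks the smallest CPU/memory combination that covers the peaks plus a safety margin. The size table reflects AWS's documented Linux combinations at the time of writing and should be checked against the current Fargate documentation; the 20% headroom default is an assumption.

```python
# Valid Linux task sizes: CPU units -> allowed memory values in MiB.
# Based on AWS's documented combinations at the time of writing; verify
# against the current Fargate task-size table before relying on it.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
    8192: list(range(16384, 61440 + 1, 4096)),
    16384: list(range(32768, 122880 + 1, 8192)),
}


def right_size(peak_vcpu: float, peak_mem_gib: float,
               headroom: float = 0.2) -> tuple[int, int]:
    """Smallest valid (cpu_units, memory_mib) covering observed peaks plus headroom."""
    need_cpu = peak_vcpu * 1024 * (1 + headroom)
    need_mem = peak_mem_gib * 1024 * (1 + headroom)
    for cpu in sorted(FARGATE_SIZES):
        if cpu < need_cpu:
            continue
        # A memory-heavy task may force a larger CPU size to unlock more memory.
        for mem in FARGATE_SIZES[cpu]:
            if mem >= need_mem:
                return cpu, mem
    raise ValueError("requirements exceed the largest Fargate task size")
```

For instance, a service peaking at 0.6 vCPU and 1.5 GiB maps to `(1024, 2048)`, while one peaking at 0.2 vCPU but 6 GiB is pushed to `(1024, 8192)` because smaller CPU sizes cannot carry that much memory.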

- Overlooking Data Transfer Costs

Fargate doesn’t eliminate network charges. Inter-task communication and cross-AZ transfers can add 15–25% to total cost.

Analyze traffic flows. Where possible, colocate services in the same AZ and use VPC endpoints to avoid NAT gateway charges.
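A back-of-the-envelope estimate helps decide which flows are worth re-routing. The rates below are assumed us-east-1 list prices at the time of writing (inter-AZ transfer charged on each side, NAT gateway data processing excluding its hourly fee); check current pricing for your region.

```python
# Assumed us-east-1 list prices at the time of writing; check current pricing.
CROSS_AZ_PER_GB_EACH_WAY = 0.01  # charged on both the sending and receiving side
NAT_PROCESSING_PER_GB = 0.045    # NAT gateway data processing (hourly fee excluded)


def monthly_network_cost(cross_az_gb: float, nat_gb: float) -> float:
    """Estimated monthly charge for cross-AZ traffic and NAT-processed traffic."""
    cross_az = cross_az_gb * CROSS_AZ_PER_GB_EACH_WAY * 2
    nat = nat_gb * NAT_PROCESSING_PER_GB
    return cross_az + nat


if __name__ == "__main__":
    before = monthly_network_cost(cross_az_gb=1000, nat_gb=500)
    # Hypothetical change: S3 traffic moved from the NAT gateway to a gateway endpoint.
    after = monthly_network_cost(cross_az_gb=1000, nat_gb=0)
    print(f"Before: ${before:.2f}/month, after: ${after:.2f}/month")
```

Gateway VPC endpoints for S3 and DynamoDB carry no usage charge, so traffic moved onto them drops out of the NAT term entirely in this model; interface endpoints, by contrast, have their own hourly and per-GB fees.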

A 30-Day Plan to Optimize Container Compute Costs

Cost optimization does not require major overhauls; it begins with structured visibility, controlled experimentation, and cross-team alignment. A focused 30-day plan can deliver measurable improvements by reducing inefficiencies and creating habits that sustain long-term savings. This framework is designed to guide teams from analysis to execution, avoiding reactive decisions and instead embedding cost awareness into everyday operations.

Review Current Container Usage and Costs

In the first week, shift attention from infrastructure assumptions to actual usage patterns. Leverage AWS-native tools or third-party platforms to surface how containers consume compute across EC2 and Fargate. Pay attention to anomalies: underutilized nodes, long-running idle services, or short-lived tasks consuming disproportionate costs. Identify high-cost services that deviate from their expected usage baselines. This audit isn't about cataloging infrastructure; it's about finding where spend is disconnected from value.

The outcome of this step should be a clear baseline: which workloads are operating efficiently, which are not, and where change is most likely to deliver returns quickly.
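A first-pass triage of the audit data might look like the sketch below. The `ServiceUsage` fields and both thresholds are hypothetical; the 10-hour cut-off loosely echoes the on-demand break-even discussed earlier and should be tuned to your own rates.

```python
from dataclasses import dataclass


@dataclass
class ServiceUsage:
    name: str
    monthly_cost: float
    avg_cpu_pct: float          # average utilization of provisioned CPU
    hours_active_per_day: float


def classify(s: ServiceUsage, low_util: float = 30.0,
             intermittent_hours: float = 10.0) -> str:
    """Hypothetical first-pass triage for the week-one baseline."""
    if s.hours_active_per_day < intermittent_hours:
        return "candidate for Fargate pilot"
    if s.avg_cpu_pct < low_util:
        return "overprovisioned: right-size"
    return "efficient: keep as baseline"


def baseline(services: list[ServiceUsage]) -> list[tuple[str, str, float]]:
    """Classify services, most expensive first, so quick wins surface early."""
    ranked = sorted(services, key=lambda s: s.monthly_cost, reverse=True)
    return [(s.name, classify(s), s.monthly_cost) for s in ranked]
```

Sorting by cost before classifying keeps attention on the services where a change is most likely to deliver returns quickly.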

Implement Fargate on Specific Workloads

With visibility in place, the second phase moves into targeted testing. Select a limited set of containerized workloads where Fargate’s billing model and scaling flexibility could yield immediate impact: short-duration services, batch jobs, or unpredictable event-driven tasks. Avoid assumptions; instead, deploy in parallel with EC2-based equivalents for 10 to 14 days, gathering side-by-side performance, cost, and scaling metrics.

A successful implementation is not defined by a lower headline cost, but by reduced idle compute, faster time to deploy, and a lighter operational burden. Many teams report 20%–35% lower total workload cost during these pilots, driven not just by compute savings but by time regained from infrastructure abstraction.
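Comparing pilot results on total cost rather than compute alone can be sketched as follows. The `PilotResult` fields are hypothetical, and the $100/hour engineering rate is an assumption chosen from within the $80–$120 range used earlier.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    compute_cost: float  # billed compute for the pilot window
    ops_hours: float     # engineer time spent on the environment
    idle_pct: float      # average share of paid capacity left unused


def total_cost(r: PilotResult, engineer_rate: float) -> float:
    """Compute spend plus the engineering time needed to run it."""
    return r.compute_cost + r.ops_hours * engineer_rate


def compare(ec2: PilotResult, fargate: PilotResult,
            engineer_rate: float = 100.0) -> dict[str, float]:
    """Relative change from EC2 to Fargate (negative means Fargate is lower)."""
    ec2_total = total_cost(ec2, engineer_rate)
    fg_total = total_cost(fargate, engineer_rate)
    return {
        "total_change_pct": (fg_total - ec2_total) / ec2_total * 100,
        "compute_change_pct": (fargate.compute_cost - ec2.compute_cost)
        / ec2.compute_cost * 100,
        "idle_change_points": fargate.idle_pct - ec2.idle_pct,
    }
```

With hypothetical pilot figures of $1,000 compute and 10 ops hours on EC2 versus $1,100 compute and 2 ops hours on Fargate, compute rises 10% while total cost falls 35%, which is exactly the pattern a compute-only comparison would miss.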

Engage Teams in Cost Management Practices

The third week focuses on operational alignment. Cost optimization cannot remain isolated within DevOps or finance; it must become embedded in how teams build, deploy, and maintain containerized applications. Conduct working sessions to share pilot outcomes, highlight inefficiencies discovered in the review, and define practical behaviors that lead to more efficient compute use.

This may include setting defaults for resource requests, defining review cadences for scaling policies, or incorporating cost checkpoints into the release planning process. The goal is to build internal ownership of compute spend: not just visibility into it, but accountability for it.

Set Up Continuous Monitoring and Adjustments

The final phase moves from initiative to routine. Establish a monitoring layer that ensures ongoing visibility into compute usage and cost anomalies. This includes usage thresholds, automated alerts, and dashboards shared across engineering and product teams. Review scaling configurations regularly, especially for workloads remaining on EC2, to prevent overprovisioning that can creep back over time.

Where possible, integrate cost data into CI/CD workflows and infrastructure-as-code pipelines to catch inefficient resource definitions early. Monthly reviews should verify whether workloads are still correctly matched to their environments and whether previously optimized services continue to perform efficiently.
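A CI step can reject oversized task definitions before they are deployed. This is a minimal sketch assuming ECS task definitions stored as JSON files in the repository; the size limits are a hypothetical team policy, not an AWS rule.

```python
import json

# Hypothetical team policy: the largest task size allowed without review.
MAX_CPU_UNITS = 2048
MAX_MEMORY_MIB = 8192


def check_task_definition(task_def_json: str) -> list[str]:
    """Return policy violations for an ECS task definition (JSON text)."""
    task_def = json.loads(task_def_json)
    problems = []
    # Task-level cpu and memory are given as strings in task definitions, e.g. "1024".
    cpu = int(task_def.get("cpu", 0))
    memory = int(task_def.get("memory", 0))
    if "FARGATE" in task_def.get("requiresCompatibilities", []):
        if not cpu or not memory:
            problems.append("Fargate tasks must set task-level cpu and memory")
    if cpu > MAX_CPU_UNITS:
        problems.append(f"cpu {cpu} exceeds policy limit {MAX_CPU_UNITS}")
    if memory > MAX_MEMORY_MIB:
        problems.append(f"memory {memory} MiB exceeds policy limit {MAX_MEMORY_MIB}")
    return problems


if __name__ == "__main__":
    import sys

    failed = False
    # Usage in CI: python check_task_defs.py taskdef1.json taskdef2.json
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as f:
            for violation in check_task_definition(f.read()):
                print(f"{path}: {violation}")
                failed = True
    if failed:
        sys.exit(1)
```

Failing the pipeline with a clear message keeps the review cheap: an engineer either right-sizes the task or justifies the exception in the pull request.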

By following this plan, teams don’t just optimize container compute costs; they build the foundation for a more agile, cost-aware infrastructure model.