Serverless computing is a cloud-native model where developers can build and deploy applications without worrying about infrastructure management. Cloud providers like AWS, Microsoft Azure, and Google Cloud handle provisioning, scaling, and server maintenance. This allows developers to focus entirely on writing business logic and deploying code as modular functions, without the need to manage virtual machines or underlying network configurations.

The “serverless” model encompasses two major aspects:

- Functions-as-a-Service (FaaS): Small, stateless units of code executed in response to events. Examples: AWS Lambda, Azure Functions.

- Backend-as-a-Service (BaaS): Fully managed services for backend components like databases (e.g., Firebase, DynamoDB), storage, and APIs.

Although the term suggests "no servers," servers still exist; the responsibility for managing them simply shifts to the cloud provider. Developers typically pay only for the requests their code handles and the compute time it consumes, which makes serverless an attractive, cost-efficient option for certain workloads.

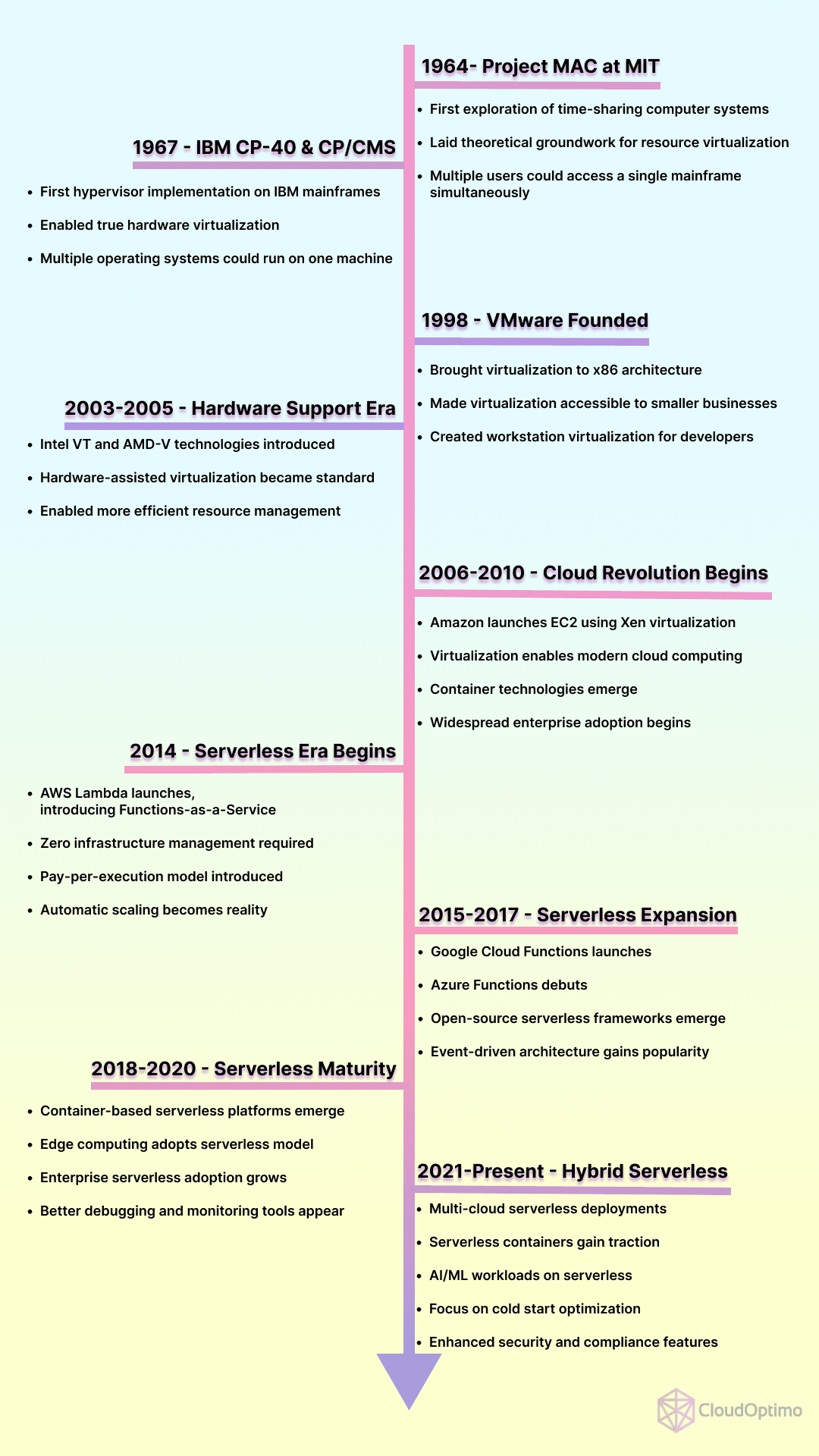

Evolution of Computing Models: From Monolith to Serverless

The shift to serverless computing reflects a natural progression across multiple computing paradigms—each addressing emerging challenges in scalability, flexibility, and automation. Below is a breakdown of this evolution:

1. Monolithic Architecture (Pre-2000s)

- Early applications typically ran on dedicated physical servers, with software tightly coupled to the hardware it was deployed on.

- Monolithic applications combined the user interface, business logic, and data access layers in a single deployable unit. Any change required rebuilding and redeploying the entire system, making scaling and iteration cumbersome.

2. Virtual Machines (Early 2000s)

- VMware popularized virtualization, allowing multiple virtual machines (VMs) to run on a single physical server. This minimized hardware dependency, shifting the architectural building block from servers to VMs.

- Cloud providers like AWS and Azure transformed IT by removing the need for up-front capital expenditure on infrastructure (server racks, networking gear, data centers). They offered flexible VM sizing and on-demand global infrastructure with pay-as-you-go pricing, fundamentally changing how organizations approached infrastructure deployment.

3. Containerization Technology (2013)

- Containers improved on VMs by enabling lightweight, portable units that encapsulate applications and their dependencies. Tools like Docker brought containerization to the forefront, supported by orchestration platforms like Kubernetes.

- Earlier, Platform-as-a-Service (PaaS) offerings such as Heroku and Cloud Foundry (2011) had popularized the 12-factor app methodology, accelerating the DevOps movement and the adoption of continuous delivery.

4. Serverless Computing: The Next Step

- Serverless architecture is the evolution of containerized microservices, focusing on event-driven workflows. Stateless functions are triggered by events, with automatic scaling and no infrastructure management required.

- Services such as AWS Lambda and Google Cloud Functions run on infrastructure that the provider manages, allowing developers to focus solely on code.

| Model | Characteristics | Example |
| --- | --- | --- |
| Monolithic | Single large application; tightly coupled | Traditional web applications |
| Virtual Machines | Hardware-level virtualization; improved scalability | AWS EC2, Azure VMs |
| Containers | OS-level virtualization; lightweight, portable units; better resource utilization | Docker, Kubernetes |
| Microservices | Independent services communicating over APIs | Netflix microservice architecture |
| Serverless | Stateless, event-driven functions; no infrastructure management | AWS Lambda, Google Cloud Functions |

The transition from monolithic systems to serverless computing demonstrates a continuous push toward agility, scalability, and automation. Containerization laid the foundation by enabling microservices architectures, which in turn evolved into serverless models where cloud providers manage infrastructure seamlessly.

Today, serverless computing is well suited to cloud-native applications that benefit from automatic scaling, event-based triggers, and rapid development cycles, letting businesses innovate without the burden of managing infrastructure.

Key Use Cases of Serverless Computing

Here are a few real-world scenarios where serverless models have proved valuable:

- Web App Backend: Hosting lightweight backend logic to handle HTTP requests.

- Data Processing Pipelines: Performing ETL (Extract, Transform, Load) tasks on demand.

- IoT Applications: Collecting and processing data streams from connected devices.

- Event-Driven Notification Systems: Sending alerts in real time using serverless triggers.

- Chatbots and APIs: Responding to customer queries with serverless APIs.

How Serverless Works – A Detailed Look

Serverless Architecture: Components and Workflow

Serverless architecture relies on a series of managed services and events. Below is a breakdown of its major components:

| Component | Description | Example |
| --- | --- | --- |
| Event Sources | Triggers that invoke serverless functions | HTTP requests, File uploads, Database updates |
| Functions | Stateless code blocks that handle specific tasks | AWS Lambda, Azure Functions |
| Execution Containers | Temporary containers where functions run | Managed by the cloud provider |
| APIs | Interface through which functions are accessed | API Gateway, Cloud Endpoints |
| Backend Services | Managed services for database, messaging, or storage | DynamoDB, S3, Firebase, Pub/Sub |

In a typical serverless workflow, an event (such as a user submitting a form) triggers a function. The cloud provider allocates an execution environment, runs the function, and scales the number of environments automatically based on demand.

Workflow of a Serverless Function

Let’s dive into the lifecycle of a serverless function:

Step 1: Trigger

An event, such as an HTTP request, file upload, or database change, triggers the function.

Step 2: Cold Start or Warm Start

- Cold Start: If no active container exists, the provider spins up a new container, causing a slight delay.

- Warm Start: If a container is already running, the function executes immediately with minimal delay.

Example: On AWS Lambda, a Java function typically takes longer to cold-start than a Node.js function because the JVM needs more time to initialize.

Step 3: Execution and Scaling

The cloud provider manages:

- Execution: Runs the code within the container.

- Scaling: Automatically scales instances based on demand (e.g., processing hundreds of parallel requests).

Step 4: Termination and Cleanup

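After execution, the provider may keep the execution environment warm for a while so that later invocations can reuse it (a warm start). If it stays idle, the environment is eventually reclaimed to free up resources, and you are not billed for that idle time. This “ephemeral” nature enables cost efficiency but also introduces latency risk whenever a new environment must be created (a cold start).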
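The cold/warm distinction shows up directly in how a function is written. Below is a minimal Python sketch, assuming a Lambda-style runtime where module-level code runs once per execution environment (during the cold start) and `handler` runs on every invocation. The `_load_config` helper and the invocation counter are hypothetical, used only to make the lifecycle visible.

```python
# Module-level code runs once per execution environment, i.e. during a cold start.
# Expensive setup (SDK clients, configuration, models) belongs here so warm starts reuse it.


def _load_config():
    """Hypothetical stand-in for slow initialization work."""
    return {"greeting": "hello"}


_CONFIG = _load_config()
_invocations = 0  # persists across warm invocations; lost when the environment is reclaimed


def handler(event, context):
    """Runs on every invocation and reuses whatever the cold start initialized."""
    global _invocations
    _invocations += 1
    return {
        "cold_start": _invocations == 1,  # first invocation in this environment
        "invocation_in_env": _invocations,
        "message": f"{_CONFIG['greeting']}, {event.get('name', 'world')}",
    }
```

Calling `handler` twice in the same process simulates one cold start followed by a warm start. In production, the provider (not your code) decides when a new environment, and therefore a new cold start, is created, so in-memory state like the counter should only ever be treated as a cache.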

Backend-as-a-Service (BaaS) and Its Role in Serverless

In addition to FaaS, Backend-as-a-Service (BaaS) solutions play a significant role by abstracting backend components like databases, authentication systems, and messaging queues.

- Firebase: Provides real-time databases and authentication services.

- AWS DynamoDB: A fully managed NoSQL database, ideal for serverless architectures.

- Twilio: Offers messaging and communication services through APIs.

BaaS allows developers to quickly integrate powerful backend capabilities without worrying about server configuration or maintenance.

Key Metrics in Serverless Architecture

Monitoring and optimizing a serverless application require attention to the following metrics:

| Metric | Description | Why It Matters |
| --- | --- | --- |
| Cold Start Latency | Time taken to initialize a function container | Affects response time in critical applications |
| Invocation Count | Number of times a function is invoked | Helps track usage patterns |
| Duration | Total execution time for a function | Impacts billing in pay-per-use models |
| Memory Consumption | Amount of memory used during execution | Helps optimize resource allocation |
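To make these metrics concrete, here is a small Python sketch that aggregates per-invocation records into the four metrics above. The `Invocation` record shape is hypothetical; in practice the raw numbers would come from your provider's logs or metrics service (for example, the `REPORT` line Lambda writes to CloudWatch Logs after each invocation, which includes the duration, the maximum memory used and, on cold starts, the init duration).

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Invocation:
    duration_ms: float    # handler execution time (drives the bill)
    max_memory_mb: int    # peak memory used during the invocation
    init_ms: float = 0.0  # initialization time; > 0 only for cold starts


def summarize(invocations):
    """Aggregate raw invocation records into the metrics listed in the table."""
    if not invocations:
        raise ValueError("no invocations to summarize")
    cold = [i.init_ms for i in invocations if i.init_ms > 0]
    return {
        "invocation_count": len(invocations),
        "avg_duration_ms": mean(i.duration_ms for i in invocations),
        "peak_memory_mb": max(i.max_memory_mb for i in invocations),
        "cold_start_rate": len(cold) / len(invocations),
        "avg_cold_start_ms": mean(cold) if cold else 0.0,
    }
```

A rising `cold_start_rate` alongside flat traffic is one signal that provisioned concurrency or a warming strategy may be worth evaluating.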

Benefits, Challenges, and Use Cases of Serverless Computing

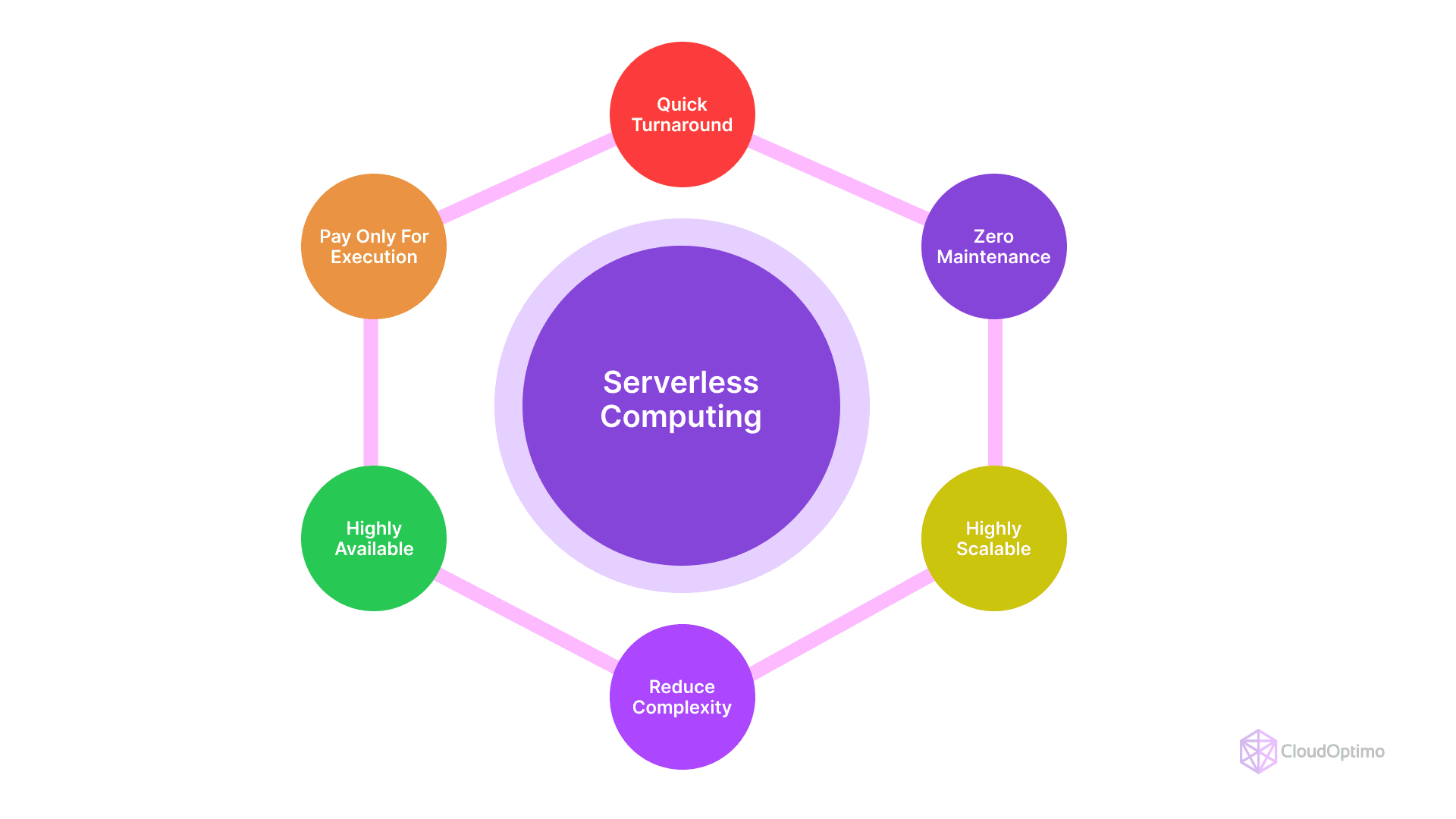

Benefits of Serverless Computing

1. Reduced Operational Overhead

With serverless, the cloud provider takes responsibility for server management, OS patches, scaling, and availability.

- Impact: Developers no longer need to manage load balancers or server uptime, which reduces the operational burden on DevOps teams.

- Example: AWS Lambda functions are auto-managed, ensuring proper scaling without any manual intervention from the user’s side.

- Savings Insight: A SaaS startup may be able to run with a smaller DevOps team, potentially reducing operational costs by 30%-50%, although actual savings depend heavily on the workload.

2. Cost Efficiency: Pay-Per-Use Billing Model

Unlike traditional cloud infrastructure, where you pay for allocated resources even when idle, serverless platforms bill based on function execution time and resource usage.

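- Billing Details:

  - AWS Lambda: Charges are based on the number of requests plus execution time (metered in milliseconds) multiplied by the memory allocated (configurable up to 10 GB).

  - Azure Functions: Similar pricing on the Consumption plan, which includes a monthly free grant of 1 million executions (AWS Lambda's free tier likewise includes 1 million requests per month).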

- Impact Example: A web app with intermittent traffic will benefit from serverless because costs scale down when demand is low.
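The pay-per-use model reduces to simple arithmetic: compute is billed in GB-seconds (allocated memory in GB multiplied by execution time in seconds), plus a per-request fee. The Python sketch below makes that explicit. The default prices are illustrative placeholders, not authoritative list prices, so check your provider's pricing page before relying on the numbers.

```python
import math


def monthly_cost(requests, avg_duration_ms, memory_mb,
                 price_per_gb_second=0.0000166667,  # illustrative placeholder
                 price_per_million_requests=0.20,   # illustrative placeholder
                 free_requests=0, free_gb_seconds=0.0):
    """Estimate one function's monthly bill under a GB-second pricing model."""
    billed_ms = math.ceil(avg_duration_ms)  # duration rounded up to the next 1 ms
    gb_seconds = requests * (billed_ms / 1000) * (memory_mb / 1024)
    compute = max(gb_seconds - free_gb_seconds, 0) * price_per_gb_second
    request_fee = max(requests - free_requests, 0) / 1_000_000 * price_per_million_requests
    return round(compute + request_fee, 2)


# 2 million requests, 100 ms each, 512 MB, with simplified example prices.
print(monthly_cost(2_000_000, 100, 512, price_per_gb_second=0.00001, price_per_million_requests=0.2))
```

Because cost scales with memory multiplied by duration, doubling memory only lowers the bill if it cuts execution time by more than half; that is the trade-off tools like AWS Lambda Power Tuning measure empirically.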

3. Auto-Scaling

Serverless automatically scales to handle fluctuating workloads with little or no capacity configuration, within the provider's concurrency limits.

- Horizontal Scaling: Instead of adding more memory or CPU to a server (vertical scaling), serverless launches new instances of functions for parallel requests.

- Example: A video processing function in AWS Lambda automatically scales out to process multiple uploaded videos in parallel when traffic peaks, up to the account's concurrency limit.

4. Event-Driven Workflows

Serverless models are designed to respond to event triggers such as HTTP requests, file uploads, or database changes. This is essential for creating reactive systems.

- Example: A user uploads an image to an S3 bucket, triggering a Lambda function to generate thumbnails in real time.

- Impact: Eliminates idle processes by running code only when needed.
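Here is a minimal Python sketch of the thumbnail trigger, assuming the standard S3 event notification shape that Lambda receives (`Records[].s3.bucket.name` and `Records[].s3.object.key`). To stay runnable without AWS credentials, it only works out which thumbnails to produce; the download, resize, and upload steps are left as comments, and the `thumbnails/` prefix is a hypothetical naming convention.

```python
import posixpath
from urllib.parse import unquote_plus

THUMB_PREFIX = "thumbnails/"  # hypothetical output location


def thumbnail_jobs(event):
    """Turn an S3 event notification into (bucket, source_key, thumbnail_key) tuples."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith(THUMB_PREFIX):
            continue  # skip our own output to avoid a recursive trigger loop
        jobs.append((bucket, key, THUMB_PREFIX + posixpath.basename(key)))
    return jobs


def handler(event, context):
    jobs = thumbnail_jobs(event)
    for bucket, key, thumb_key in jobs:
        # A real function would download `key` from `bucket`, resize it
        # (e.g. with Pillow), and upload the result to `thumb_key`.
        pass
    return {"processed": len(jobs)}
```

Skipping keys under the output prefix (or writing thumbnails to a separate bucket) matters: a function that writes back to the bucket that triggers it can otherwise invoke itself in a loop.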

5. Faster Time to Market with Agile Development

With serverless, developers can build modular applications using small functions (microservices), significantly speeding up development and deployment cycles.

- Real-World Example: Startups often use Firebase (a BaaS) to set up backends quickly, which lets them focus more on front-end features.

- Impact: Faster iterations reduce the time required to bring products to market.

6. Built-in Fault Tolerance and High Availability

Cloud providers automatically manage regional replication and recovery. Serverless functions are often replicated across multiple availability zones for redundancy.

- Example: AWS Lambda runs functions across multiple Availability Zones within a Region, so a function stays available even if a single zone fails.

- Impact: Ensures high availability without additional configuration or infrastructure costs.

Challenges and Limitations of Serverless Computing

While serverless offers significant benefits, certain limitations need to be addressed. Here are key challenges and mitigation strategies:

1. Cold Start Latency

Cold starts happen when the cloud provider must initialize a new execution environment for a function. This creates a slight delay, which can affect latency-sensitive applications.

- Real-World Impact: Applications like chatbots or real-time APIs may experience degraded performance if functions are invoked infrequently.

- Example: A Lambda function written in Java takes longer to cold-start than a Node.js function, due to differences in runtime initialization.

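| Language/Runtime | Typical Cold Start Time (ms, approximate) | Best Use Case |
| --- | --- | --- |
| Node.js | 50-200 | Real-time APIs, Webhooks |
| Python | 100-300 | Data Science, ML Applications |
| Java | 500-1000 | Enterprise Applications with Higher Latency Tolerance |
| Go | 100-250 | Lightweight Backend Services |

Actual cold start times vary considerably with package size, memory allocation, and initialization code.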

Solution: Use provisioned concurrency in AWS Lambda or keep functions warm through periodic invocations.
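If you keep functions warm with scheduled pings, the handler should recognize those pings and return immediately so they cost as little as possible. A minimal Python sketch, assuming the scheduler (for example, an EventBridge schedule) sends a payload containing a hypothetical `{"warmer": true}` marker; the marker is a convention you choose, not a platform feature, and `handle_request` stands in for real business logic.

```python
def is_warmup(event):
    """True for the scheduler's keep-warm ping (hypothetical {"warmer": true} payload)."""
    return isinstance(event, dict) and event.get("warmer") is True


def handle_request(event):
    # Hypothetical business logic.
    return {"statusCode": 200, "body": f"order {event['order_id']} received"}


def handler(event, context):
    if is_warmup(event):
        # Return before doing any real work: the ping only exists to keep
        # this execution environment initialized.
        return {"warmed": True}
    return handle_request(event)
```

A single ping keeps roughly one execution environment warm; concurrent traffic beyond that can still hit cold starts, which is why provisioned concurrency is the more predictable option for latency-critical paths.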

2. Vendor Lock-in Risks

Serverless applications are often tightly coupled with specific cloud providers, which creates challenges in migrating to another platform.

- Example: AWS Lambda functions often rely on CloudWatch for monitoring and API Gateway for HTTP requests, making it harder to switch providers.

- Mitigation: Use open-source tools like Knative or portable frameworks like Serverless Framework to reduce dependency.
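Beyond tooling, a code-level habit that limits lock-in is to keep business logic free of provider types and confine provider-specific event formats to thin adapters. A minimal Python sketch of that idea: `quote_shipping` is hypothetical core logic, and the two adapters translate an API Gateway proxy event and a generic parsed JSON body (a stand-in for another platform) into the same call.

```python
import json


def quote_shipping(weight_kg: float, express: bool) -> dict:
    """Provider-agnostic core logic (hypothetical pricing rules)."""
    base = 5.0 + 1.5 * weight_kg
    return {"price": round(base * (2 if express else 1), 2)}


def aws_lambda_handler(event, context):
    """Adapter for an API Gateway (proxy integration) event."""
    body = json.loads(event.get("body") or "{}")
    result = quote_shipping(float(body["weight_kg"]), bool(body.get("express", False)))
    return {"statusCode": 200, "body": json.dumps(result)}


def generic_http_handler(request_json: dict) -> dict:
    """Adapter for any platform that hands over an already-parsed JSON body."""
    return quote_shipping(float(request_json["weight_kg"]),
                          bool(request_json.get("express", False)))
```

Moving to another provider then means writing a new adapter rather than rewriting `quote_shipping`.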

3. Limited Execution Time

Most serverless platforms impose a maximum execution time limit for functions.

- Example: AWS Lambda has a 15-minute limit. Long-running processes (e.g., video encoding) may exceed this threshold.

- Solution: Break long processes into smaller steps and trigger them via chained functions or queues (e.g., SQS).
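Within a single invocation, a function can also watch its own deadline and hand unfinished work back to a queue before the timeout hits. A minimal Python sketch, assuming the Lambda Python context object's `get_remaining_time_in_millis()` method; `process_item`, the safety margin, and re-enqueueing the leftovers (for example, to SQS) are illustrative assumptions, and `FakeContext` exists only to run the sketch locally.

```python
SAFETY_MARGIN_MS = 10_000  # stop well before the hard timeout (assumed value)


def process_item(item):
    """Hypothetical unit of work, e.g. transcoding one video segment."""
    return item * 2


def process_batch(items, context, safety_margin_ms=SAFETY_MARGIN_MS):
    """Process items until the deadline approaches; return (results, leftovers)."""
    results = []
    for index, item in enumerate(items):
        if context.get_remaining_time_in_millis() < safety_margin_ms:
            # Not enough time left: return the rest so the caller can
            # re-enqueue it (e.g. send it to SQS) for another invocation.
            return results, items[index:]
        results.append(process_item(item))
    return results, []


class FakeContext:
    """Local stand-in for the Lambda context; each time check consumes `step_ms`."""

    def __init__(self, remaining_ms, step_ms):
        self.remaining_ms = remaining_ms
        self.step_ms = step_ms

    def get_remaining_time_in_millis(self):
        remaining = self.remaining_ms
        self.remaining_ms -= self.step_ms
        return remaining
```

Checking the remaining time before each item, rather than once at the start, keeps the function safe even when individual items take unpredictable amounts of time.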

4. Complex Debugging and Monitoring

Debugging distributed serverless applications is challenging since logs and execution traces are spread across multiple services.

- Solution: Use centralized logging systems like CloudWatch, Azure Monitor, or Datadog for effective monitoring.

5. Security and Compliance

Serverless functions interact with various managed services, increasing the attack surface.

- Example: A compromised Lambda function could provide unauthorized access to backend services like DynamoDB.

- Mitigation: Use fine-grained IAM roles, implement encryption, and ensure least privilege access to backend resources.

Expanded Use Cases of Serverless Computing

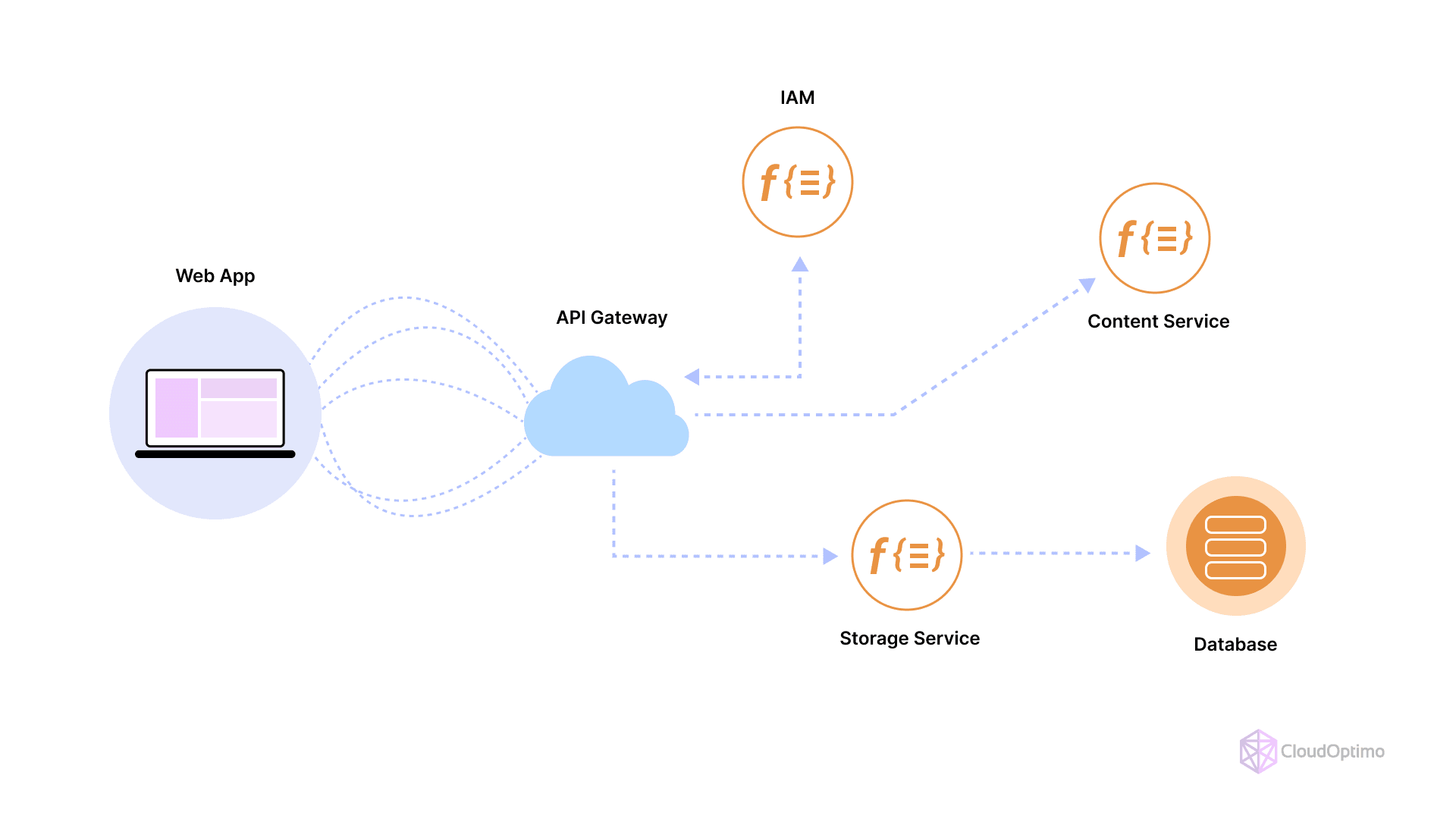

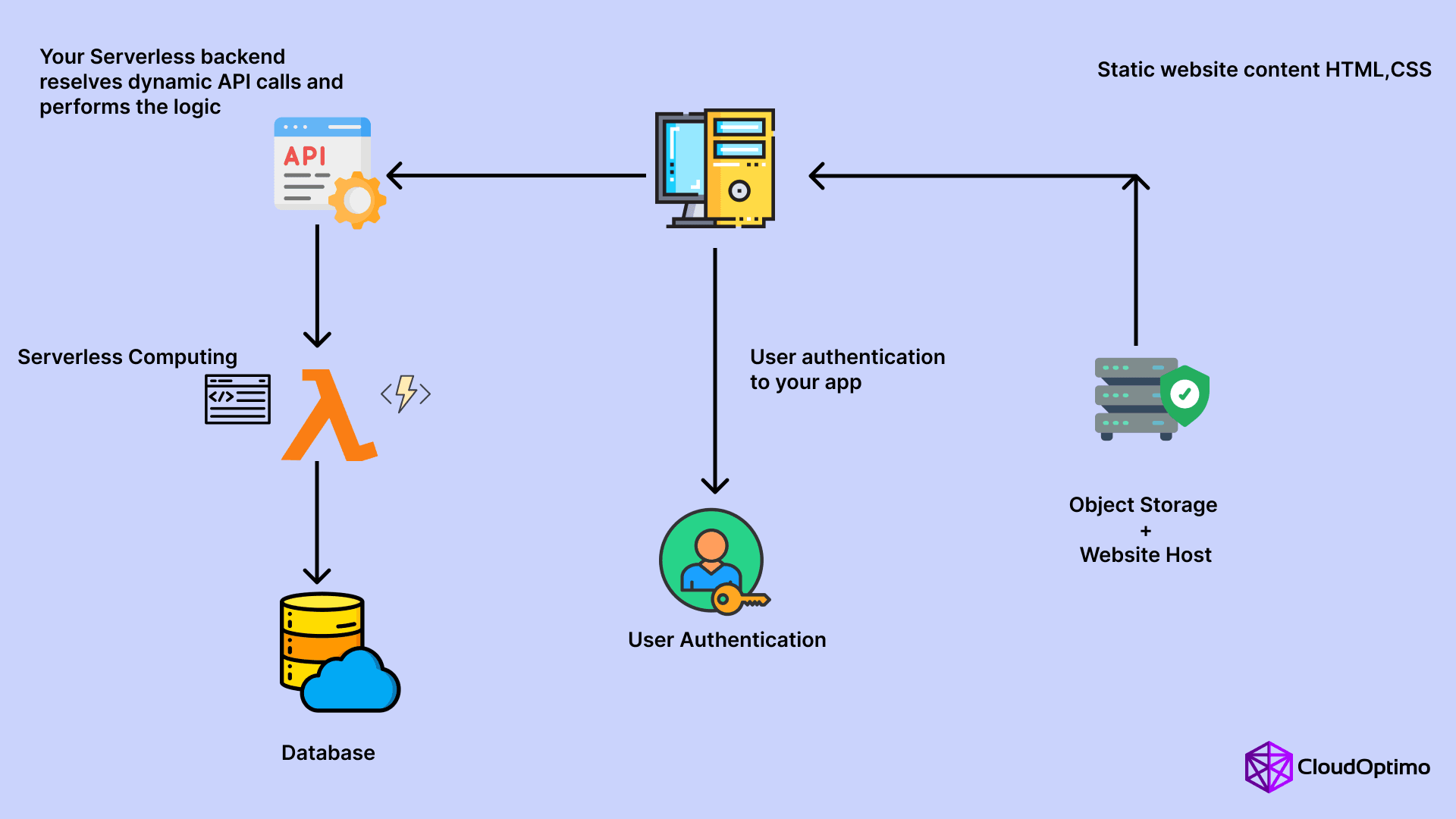

1. Web Application with a Serverless Backend

In a serverless architecture, the backend logic for web applications is often handled by functions triggered by API requests.

- Example: An e-commerce site’s backend runs on AWS Lambda, with data stored in DynamoDB and static content served from S3.

- Impact: Less infrastructure to manage, and the backend scales automatically during flash sales.

2. Serverless Data Pipelines for ETL

Serverless is ideal for on-demand data processing, especially for ETL tasks.

- Example:

  1. A file is uploaded to Amazon S3.

  2. A Lambda function extracts and transforms the data.

  3. The transformed data is loaded into DynamoDB.

| Component | Purpose | Service Example |
| --- | --- | --- |
| Event Trigger | Detect file upload | Amazon S3 |
| Data Transformation | Process data | AWS Lambda |
| Storage | Store transformed data | DynamoDB |
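The extract, transform, and load steps above might look like this in a Python Lambda function. The pure `transform_rows` function runs on its own; the handler assumes boto3 (the AWS SDK for Python), a CSV file with hypothetical `sku`, `name`, and `price` columns, and a hypothetical DynamoDB table named `products`. The SDK is imported inside the handler only so the sketch can be tested without it; in production you would usually create the clients at module level so warm starts reuse them.

```python
import csv
import io
from decimal import Decimal
from urllib.parse import unquote_plus


def transform_rows(csv_text):
    """Transform: parse CSV, normalize fields, and drop rows without a SKU."""
    items = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        sku = (row.get("sku") or "").strip()
        if not sku:
            continue
        items.append({
            "sku": sku.upper(),
            "name": row["name"].strip(),
            # DynamoDB rejects Python floats, so numbers are stored as Decimal.
            "price": Decimal(row["price"].strip()),
        })
    return items


def handler(event, context):
    import boto3  # lazy import so transform_rows can be tested without the SDK

    s3 = boto3.client("s3")
    table = boto3.resource("dynamodb").Table("products")  # hypothetical table name
    loaded = 0
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        # Extract: read the uploaded object.
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
        # Load: batch_writer buffers put requests and sends them in batches.
        with table.batch_writer() as batch:
            for item in transform_rows(body):
                batch.put_item(Item=item)
                loaded += 1
    return {"loaded": loaded}
```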

3. Real-Time IoT Data Processing

Serverless platforms can process data streams from IoT devices with minimal latency.

- Example: AWS IoT Core routes sensor data into an Amazon Kinesis data stream, and a Lambda function consumes the stream, processes the readings, and stores the results in a database such as DynamoDB.

- Impact: Handles unpredictable traffic patterns without requiring pre-allocated infrastructure.
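A minimal Python sketch of the stream-processing function, assuming the readings arrive through an Amazon Kinesis data stream in the event format Lambda receives (each record's payload is base64-encoded under `kinesis.data`). The JSON sensor payload with `device_id` and `temperature` fields is hypothetical, and persisting the summary is left as a comment.

```python
import base64
import json
from collections import defaultdict


def summarize_readings(event):
    """Decode a batch of Kinesis records and compute per-device average temperatures."""
    totals = defaultdict(lambda: [0.0, 0])  # device_id -> [sum, count]
    for record in event["Records"]:
        reading = json.loads(base64.b64decode(record["kinesis"]["data"]))
        entry = totals[reading["device_id"]]
        entry[0] += reading["temperature"]
        entry[1] += 1
    return {device: round(total / count, 2) for device, (total, count) in totals.items()}


def handler(event, context):
    summary = summarize_readings(event)
    # A real function would now persist `summary`, e.g. to DynamoDB.
    return summary
```

Because Lambda delivers stream records in batches, aggregating per batch like this reduces the number of downstream writes compared with storing every reading individually.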

4. Event-Driven Notification Systems

- Example: A Lambda function triggers an SMS alert using Twilio when an EC2 instance fails or when a threshold is breached in a monitoring system.

- Benefit: Instant notification systems improve response times in critical situations.

5. Chatbots and Serverless APIs

Serverless functions enable fast, scalable chatbots that respond to users in real time.

- Example: A chatbot built using Azure Functions and Cognitive Services responds to customer queries with AI-powered responses.

- Benefit: Costs remain low since functions only run when invoked by users.

Best Practices, Security, and Guidelines for Serverless Adoption

Adopting serverless computing introduces unique challenges and requires mindful strategies for performance, security, and scalability. Below are essential best practices, security recommendations, and adoption guidelines that help teams navigate these complexities.

Best Practices for Serverless Computing

Implementing the following best practices ensures smooth operations and cost-efficient serverless environments.

1. Optimize Function Size and Execution Time

- Why it Matters: Larger functions and longer execution times increase costs and risk timeouts.

- Solution:

  - Right-size memory allocation using tools like AWS Lambda Power Tuning.

  - Split long-running tasks into smaller, event-driven functions.

Example:

Rather than accepting a large data file in the request and processing it all in one function, have clients upload the file directly to S3 first, and let the resulting S3 event trigger a separate processing function.

2. Modular Function Design: Keep It Simple

- Problem: Monolithic serverless functions are hard to debug and scale.

- Solution: Break functions into smaller, single-purpose functions following the Single Responsibility Principle (SRP).

Example:

For a shopping cart service:

- Function A: Handles item addition to the cart.

- Function B: Processes discount codes.

- Function C: Calculates final price.
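A minimal Python sketch of that split, with each handler doing exactly one job. The cart representation, the discount codes, and passing the cart in the event (rather than loading it from a data store) are simplifying assumptions for illustration.

```python
from decimal import Decimal

DISCOUNTS = {"SAVE10": Decimal("0.10")}  # hypothetical discount codes


def add_item_handler(event, context):
    """Function A: add an item to the cart."""
    cart = event["cart"]
    cart["items"].append({
        "sku": event["sku"],
        "price": Decimal(event["price"]),
        "qty": int(event["qty"]),
    })
    return cart


def apply_discount_handler(event, context):
    """Function B: validate a discount code and record it on the cart."""
    cart = event["cart"]
    code = event["code"].upper()
    if code not in DISCOUNTS:
        raise ValueError(f"unknown discount code: {code}")
    cart["discount"] = DISCOUNTS[code]
    return cart


def final_price_handler(event, context):
    """Function C: compute the total after any discount."""
    cart = event["cart"]
    subtotal = sum(item["price"] * item["qty"] for item in cart["items"])
    total = subtotal * (1 - cart.get("discount", Decimal("0")))
    return {"total": total.quantize(Decimal("0.01"))}
```

Each handler can then be deployed, scaled, tested, and monitored independently, and a bug in discount handling cannot break item addition.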

3. Manage Cold Starts

- Issue: Serverless functions can experience cold starts: delays when a function is invoked for the first time after a period of inactivity, or when the platform scales out to new instances.

- Solution:

  - Use provisioned concurrency (AWS Lambda) for critical functions.

  - Leverage function warmers, which keep instances active by pinging them regularly.

| Provider | Cold Start Mitigation Feature | Use Case |
| --- | --- | --- |
| AWS Lambda | Provisioned Concurrency | Latency-sensitive apps (e.g., chatbots) |
| Google Cloud Functions | Minimum Instances | APIs needing consistent response time |
| Azure Functions | Always-ready instances (Premium plan) or Always On (Dedicated plan) | Long-running services |

4. Use Environment Variables and Secrets Management

Hard-coding credentials directly into code is a recipe for disaster.

Best Practice: Store configuration settings and secrets as environment variables or with secret management tools such as:

- AWS Secrets Manager

- Azure Key Vault

- Google Cloud Secret Manager

Example: A function reads its database password from an environment variable (ideally one populated from a secrets manager) instead of a hard-coded value, reducing the risk of exposing it in source code.
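A minimal Python sketch that combines both approaches: the environment variable holds only the secret's name (the hypothetical `DB_SECRET_ID`), and the credentials themselves are fetched from AWS Secrets Manager with boto3's `get_secret_value` and cached for the life of the execution environment. The secret is assumed to be stored as JSON, and the client is injectable so the sketch can run locally with a stub.

```python
import json
import os

_cache = {}  # survives warm invocations, so the secret is fetched once per environment


def get_db_credentials(client=None):
    """Return DB credentials, fetching them from Secrets Manager at most once per environment."""
    if "db" not in _cache:
        # Hypothetical variable name; it holds the secret's name, never the password.
        secret_id = os.environ["DB_SECRET_ID"]
        if client is None:
            import boto3  # only needed when no client is injected
            client = boto3.client("secretsmanager")
        response = client.get_secret_value(SecretId=secret_id)
        _cache["db"] = json.loads(response["SecretString"])  # assumes a JSON secret
    return _cache["db"]
```

Caching avoids a Secrets Manager call on every invocation; the trade-off is that a rotated secret is only picked up when a new execution environment starts, unless you add an expiry to the cache.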

5. Implement Monitoring and Centralized Logging

- Use tools like AWS CloudWatch, Azure Monitor, and Google Cloud Logging to centralize logs.

- Implement distributed tracing with correlation IDs for seamless debugging across multiple functions.

| Metric to Monitor | Why It’s Important | Tool |
| --- | --- | --- |
| Function Invocation Latency | Detect slow performance | AWS CloudWatch |
| Error Rates | Identify frequent failures | Datadog / Azure Monitor |
| Concurrency Levels | Ensure capacity limits aren't breached | AWS Lambda Insights |
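Correlation IDs are easiest to use when every log line is structured. A minimal Python sketch using only the standard library: the function emits JSON log lines carrying a correlation ID taken from the incoming event (the `correlation_id` field name is an assumption) or generated if absent, and passes the same ID on in the event it sends downstream.

```python
import json
import logging
import sys
import uuid

logger = logging.getLogger("orders")
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the runtime configures the root logger
_stream = logging.StreamHandler(sys.stdout)
_stream.setFormatter(logging.Formatter("%(message)s"))
logger.addHandler(_stream)


def log(correlation_id, message, **fields):
    """Emit one JSON log line so a log service can filter by correlation_id."""
    logger.info(json.dumps({"correlation_id": correlation_id, "message": message, **fields}))


def handler(event, context):
    # Reuse the caller's ID so one request can be traced across functions.
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    log(correlation_id, "order received", order_id=event.get("order_id"))
    downstream_event = {"correlation_id": correlation_id, "order_id": event.get("order_id")}
    log(correlation_id, "forwarding to payment")
    return downstream_event
```

Searching the centralized logs for a single `correlation_id` then reconstructs the path of one request across every function it touched.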

Security Practices in Serverless Computing

Security in serverless environments requires a multi-layered approach. Here's a breakdown of key security recommendations.

1. Apply the Principle of Least Privilege

Each function should only have minimal access permissions.

Example: A Lambda function that reads from an S3 bucket should not have access to other buckets unless necessary.

| Service | Security Tool | Purpose |
| --- | --- | --- |
| AWS | Identity and Access Management (IAM) | Restrict unnecessary permissions |
| Azure | Azure Role-Based Access Control (RBAC) | Fine-grained role assignments |
| Google Cloud | Cloud IAM | Centralized permission management |
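For the S3 example above, a least-privilege AWS IAM policy grants read access to one bucket and nothing else. The Python sketch below builds that policy document so it can be generated per function; it follows the standard IAM JSON policy format, while the bucket name and prefix are hypothetical.

```python
import json


def read_only_bucket_policy(bucket, prefix=""):
    """IAM policy allowing s3:GetObject on one bucket (optionally one prefix) only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # Scoped to objects in this bucket/prefix: no other buckets, no writes.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


print(json.dumps(read_only_bucket_policy("invoices-bucket", "incoming/"), indent=2))
```

If the function also needs to list objects, `s3:ListBucket` must be granted separately on the bucket ARN itself (`arn:aws:s3:::invoices-bucket`), not on the object path.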

2. Secure Data Transmission and Storage

- Use TLS encryption for all communication between functions and external services.

- Encrypt sensitive data at rest, with keys managed in AWS KMS or Azure Key Vault.

- Block public access on storage buckets (for example, with S3 Block Public Access) to prevent data leaks.

3. Automate Threat Detection and Security Audits

- Use AWS Config, Microsoft Defender for Cloud (formerly Azure Security Center), or Google Cloud Security Command Center to continuously audit serverless environments.

- Enable real-time alerts for unauthorized activities using monitoring tools like CloudTrail or Azure Monitor.

Guidelines for Adopting Serverless in Real-World Scenarios

1. Evaluate if Serverless is the Right Fit

Not every workload is suitable for serverless.

| Suitable Workloads | Less Suitable Workloads |
| --- | --- |
| Event-driven apps (e.g., IoT data) | Stateful applications |
| Lightweight APIs | Long-running processes (e.g., video encoding) |
| Scheduled tasks (e.g., backups) | High-throughput, low-latency systems |

2. Plan for Vendor Lock-in Mitigation

- Issue: Deeply integrated serverless solutions can create vendor lock-in.

- Solution: Use open-source frameworks like Knative that support multi-cloud deployments, improving portability.

3. Automate Deployment with CI/CD Pipelines

Automating serverless deployments improves reliability.

- Example: Use AWS CodePipeline or GitHub Actions to automate testing, versioning, and deployment of Lambda functions.

4. Monitor Cloud Spending and Optimize Usage

- Serverless pricing models charge based on number of requests and execution time, so monitoring costs is essential.

- Use tools like AWS Cost Explorer or Azure Cost Management to track function usage and spending trends.

Real-World Example: E-commerce Platform with Serverless Architecture

Let’s see how serverless computing powers a modern e-commerce platform.

Architecture Overview

- API Gateway: Manages user requests and routes them to relevant Lambda functions.

- Lambda Functions: Handle login, product search, checkout, and payment logic.

- DynamoDB: Stores user profiles and product inventory.

- SNS: Sends order confirmation notifications.

| Component | Service | Purpose |
| --- | --- | --- |
| Frontend UI | S3 + CloudFront | Static hosting of web interface |
| User Authentication | AWS Cognito | Secure login and session management |
| Backend Logic | AWS Lambda | Business logic for checkout and payment |
| Database | DynamoDB | Product and user data storage |
| Notifications | SNS | Sends email and SMS alerts |
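To illustrate the request path from API Gateway to Lambda in this architecture, here is a minimal Python sketch of a checkout handler using the Lambda proxy integration format: the event carries the raw request `body` as a string, and the response needs `statusCode`, `headers`, and a string `body`. The `PRICES` catalog is hypothetical, and the DynamoDB, payment, and SNS steps are stubbed out as a comment.

```python
import json
import uuid

PRICES = {"SKU-1": 1999, "SKU-2": 4999}  # hypothetical catalog, prices in cents


def _response(status, payload):
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def checkout_handler(event, context):
    try:
        order = json.loads(event.get("body") or "{}")
        lines = order["items"]
    except (json.JSONDecodeError, KeyError):
        return _response(400, {"error": "expected JSON body with 'items'"})

    unknown = [line["sku"] for line in lines if line["sku"] not in PRICES]
    if unknown:
        return _response(400, {"error": "unknown SKUs", "skus": unknown})

    total_cents = sum(PRICES[line["sku"]] * int(line["qty"]) for line in lines)
    order_id = str(uuid.uuid4())
    # A real implementation would reserve stock in DynamoDB, charge the payment
    # provider, and publish an order-confirmation message to SNS here.
    return _response(201, {"order_id": order_id, "total_cents": total_cents})
```

Keeping prices in integer cents avoids floating-point rounding errors in totals.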

In this section, we covered best practices such as modular design and cold start management, essential security recommendations, and guidelines for adopting serverless effectively. With proper planning, monitoring, and security in place, organizations can unlock the full potential of serverless computing for scalable, cost-efficient operations.

Future Trends Of Serverless Computing

Serverless computing continues to evolve, offering powerful new tools and paradigms for both startups and enterprises. In this final section, we explore emerging trends, innovative use cases, and the future trajectory of serverless computing, helping you stay ahead of the curve.

Emerging Trends in Serverless Computing

1. Serverless Containers and Kubernetes Integration

- Trend: Serverless solutions are increasingly integrating with container orchestration platforms like Kubernetes.

- How It Works: Platforms like Knative and AWS Fargate allow containerized workloads to run in serverless mode, combining the flexibility of containers with the cost-efficiency of serverless.

| Technology | Description | Use Case |
| --- | --- | --- |
| AWS Fargate | Run containers without managing servers | CI/CD pipelines, microservices |
| Knative | Serverless add-on for Kubernetes | Cloud-agnostic container deployments |
| Google Cloud Run | Fully managed serverless containers | Event-driven, stateless apps |

Example: A CI/CD pipeline built with Jenkins on AWS Fargate automatically scales during builds, reducing infrastructure management efforts.

2. Edge Computing and Serverless at the Edge

- Trend: With the growth of IoT and 5G networks, edge computing is emerging as a key area for serverless. Serverless functions now run closer to users to reduce latency and enhance performance.

- Technology Examples:

  - AWS Lambda@Edge: Execute functions at AWS CloudFront locations for low-latency responses.

  - Cloudflare Workers: Run lightweight functions close to users on Cloudflare’s edge network.

| Use Case | Technology | Benefit |
| --- | --- | --- |
| Real-time video processing | Lambda@Edge | Reduced buffering and latency |
| IoT sensor data aggregation | Azure IoT Edge | Local processing, quicker decisions |
| Personalized content delivery | Cloudflare Workers | Faster response to end-users |

3. Event-Driven Architectures and Integration with SaaS Tools

- Trend: Companies are increasingly building event-driven architectures, where events trigger functions in real-time across different systems.

- Examples:

  - AWS EventBridge: Connects SaaS applications like Zendesk or Shopify with AWS Lambda.

  - Google Cloud Eventarc: Triggers functions based on events from Google Cloud services.

| Scenario | Event Trigger | Action |
| --- | --- | --- |
| New user sign-up in an app | EventBridge event from Cognito | Send welcome email via SNS |
| Payment completion in Shopify | EventBridge event | Update inventory in DynamoDB |
| Image upload to a bucket | S3 event trigger | Run image resizing Lambda function |
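Because EventBridge delivers every event in a common envelope (`source`, `detail-type`, and an event-specific `detail` object), one function can route several of the scenarios in the table. A minimal Python sketch; the `source` and `detail-type` values and the detail fields are hypothetical (real values depend on the service or SaaS partner emitting the event), and the actions return descriptions instead of calling SNS or DynamoDB.

```python
def send_welcome_email(detail):
    return f"welcome email queued for {detail['email']}"


def update_inventory(detail):
    return f"inventory decremented for {len(detail['line_items'])} line item(s)"


# Routing table keyed on (source, detail-type); the values are hypothetical.
ROUTES = {
    ("myapp.users", "UserSignedUp"): send_welcome_email,
    ("shop.orders", "OrderPaid"): update_inventory,
}


def handler(event, context):
    route = ROUTES.get((event.get("source"), event.get("detail-type")))
    if route is None:
        # Unknown events are ignored here; in production, log them or send them to a DLQ.
        return {"handled": False}
    return {"handled": True, "result": route(event["detail"])}
```

In practice you would also filter with EventBridge rules so the function only receives the event types it handles; the in-code routing table then documents which combinations are expected.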

4. Serverless for AI and Machine Learning

- Trend: Serverless is becoming an essential part of machine learning (ML) workflows by automating tasks such as data preprocessing, model inference, and training triggers.

- How It Works:

  - Use AWS Lambda to preprocess data and store it in S3.

  - Trigger SageMaker jobs via event-based Lambda functions to train and deploy models.

| ML Task | Serverless Component | Benefit |
| --- | --- | --- |
| Data preprocessing | AWS Lambda | Scalable, event-driven workflows |
| Model deployment | Google Cloud Functions | Automatically scales with requests |
| Automated retraining | Azure Functions | Triggers retraining jobs on demand |

Challenges in the Future of Serverless Computing

While serverless computing offers significant benefits, future challenges include:

- Vendor Lock-in: As functions get tightly coupled with cloud-specific services, migrating workloads across providers becomes more difficult.

- Complexity Management: As serverless applications scale, managing dependencies, monitoring logs, and debugging failures can become more challenging.

- Latency and Cold Starts: While cold start mitigation strategies exist, they may not fully eliminate delays for latency-sensitive applications.

Final Thoughts: Is Serverless Right for You?

Serverless computing presents a compelling opportunity for businesses looking to:

- Reduce Infrastructure Management: Offload server management to cloud providers and focus on business logic.

- Enable Cost Efficiency: Pay only for what you use, without idle infrastructure costs.

- Scale Seamlessly: Functions scale automatically with demand, from zero to thousands of requests.

However, it’s essential to carefully evaluate the use cases before jumping into serverless:

- Short-running, stateless, event-driven workloads benefit the most from serverless.

- Long-running or stateful applications may be better suited for containers or virtual machines.

Summary Table: When to Choose Serverless

| Criterion | Recommended Choice | Reason |
| --- | --- | --- |
| Event-driven architecture | Serverless | Seamless, event-triggered actions |
| Predictable high throughput | Containers / VMs | More cost-effective at scale |
| Unpredictable traffic | Serverless | Automatic scaling based on load |
| Long-running computations | Containers / VMs | Avoid timeouts and cold starts |

Common Mistakes And FAQs

Common Mistakes in Serverless Implementations

- Misapplying Serverless for Long-running Tasks

  - Mistake: Utilizing serverless functions for tasks that require prolonged execution can result in timeouts.

  - Solution: For lengthy operations, explore alternatives like containers or AWS Step Functions that can handle stateful workflows effectively.

- Insufficient Monitoring and Logging

  - Mistake: Underestimating the importance of monitoring can lead to undetected performance issues.

  - Solution: Integrate robust monitoring solutions such as AWS CloudWatch or Azure Monitor to track function performance and manage error logs effectively.

- Ignoring Cold Start Latency

  - Mistake: Not accounting for cold start issues can adversely affect user experience.

  - Solution: Implement strategies to minimize cold starts, such as using provisioned concurrency or selecting a serverless platform optimized for reduced latency.

- Overlooking Costs from External Service Calls

  - Mistake: Failing to understand the costs associated with third-party API calls can lead to unexpected expenses.

  - Solution: Optimize external service usage and implement caching strategies to reduce unnecessary requests.

Frequently Asked Questions (FAQs)

Q1. What is the difference between Serverless and Containers?

- Answer: Serverless architecture abstracts the underlying infrastructure, allowing developers to focus solely on code execution without managing servers. In contrast, containers package applications along with their dependencies but still require orchestration and management.

Q2. Are there any cost implications of using serverless computing?

- Answer: While serverless computing can reduce infrastructure costs, it’s essential to evaluate whether it’s cost-effective for your specific workload. For applications with predictable traffic patterns, traditional server-based solutions might be more economical.

Q3. Can I run stateful applications on a serverless architecture?

- Answer: Serverless functions are designed to be stateless: data kept in memory may survive between warm invocations, but it cannot be relied upon. To manage state, you can leverage external services like DynamoDB or S3, or use orchestration tools like AWS Step Functions for managing workflows.

Q4. What are the consequences if a function exceeds its timeout limit?

- Answer: Functions that exceed the set timeout will be forcibly terminated. It’s vital to optimize functions for performance or break down long-running processes into smaller, manageable parts.

Q5. How can I reduce the impact of cold starts on my application?

- Answer: Cold starts can introduce latency, especially for infrequently invoked functions. To mitigate this, consider using provisioned concurrency or strategies like scheduling regular invocations to keep functions warm.