Software development has never been more dynamic, or more demanding. Users expect uninterrupted service, businesses require faster innovation, and the technology ecosystem is more diverse than ever. Traditional methods of application deployment are not equipped to handle these challenges with the required agility. Traditional monolithic applications bundle all components (user interface, business logic, and database) into a single, unified codebase. While this approach works for smaller projects, it becomes increasingly difficult to scale, maintain, and deploy as systems grow in complexity.

In contrast, modern microservices architectures break down applications into smaller, independent services that communicate via APIs. Each service can be developed, deployed, and scaled independently, promoting flexibility.

However, managing multiple services introduces a new challenge: how to deploy and maintain these components efficiently.

Containerization plays a crucial role here, providing isolated environments for each microservice and enabling the development and deployment of complex applications with ease.

What is Containerization?

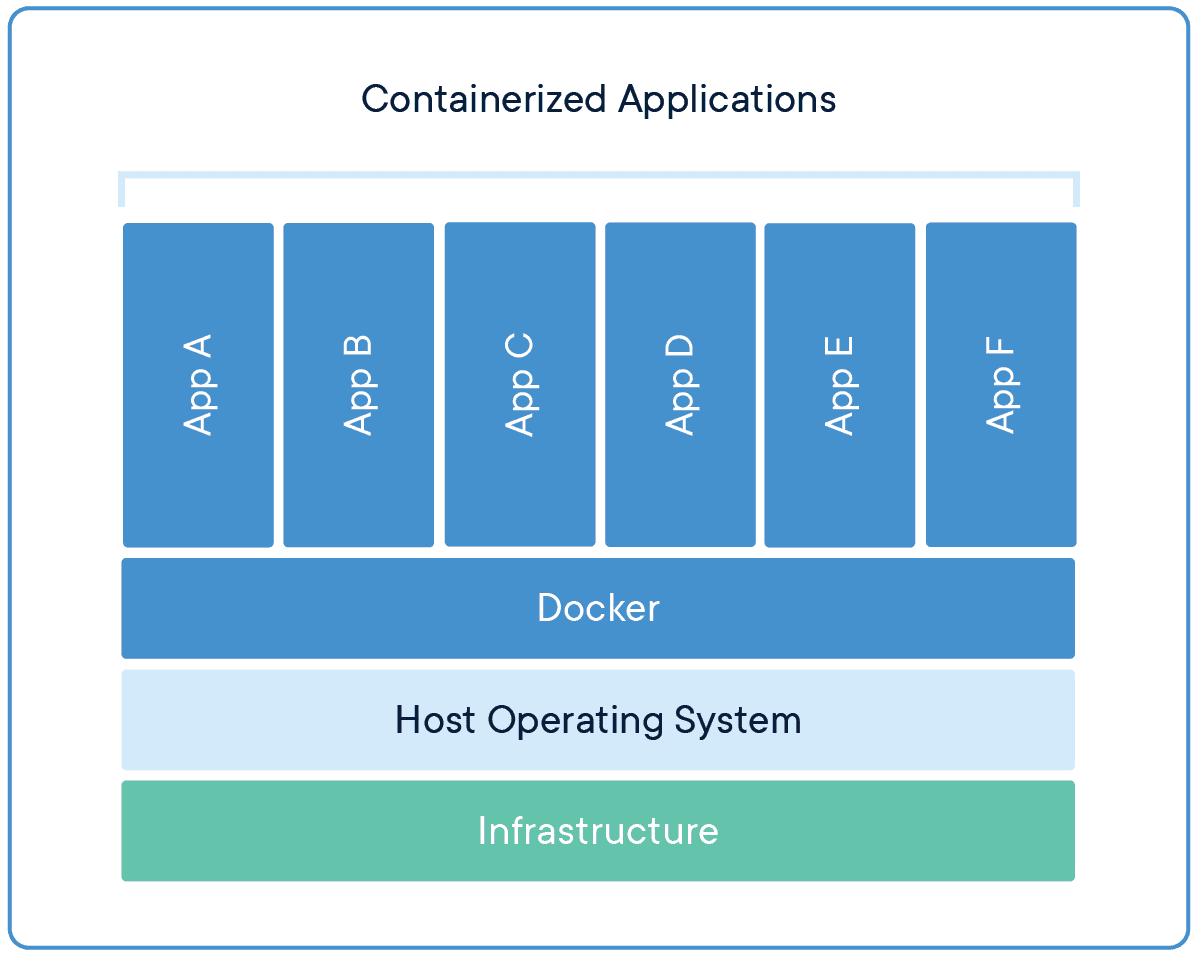

Source - Docker

Containerization is a technology that allows developers to package an application and its dependencies into a single, portable unit called a container. Containers are lightweight and portable, which gives them key advantages over traditional virtualization.

Unlike traditional virtual machines, which require an entire operating system, containers share the host system's kernel, making them lightweight and fast. The portability of containers means developers can move their applications across different environments (e.g., from development to production) without worrying about compatibility issues. This eliminates the infamous “works on my machine” problem.

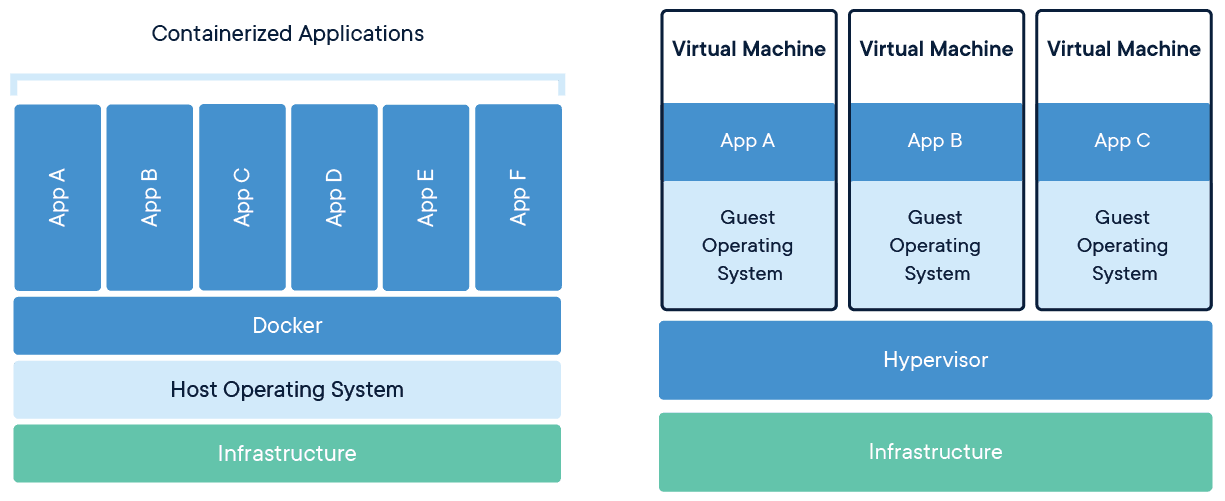

How Containers Differ from Virtual Machines?

Source - Docker

Traditional virtual machines (VMs) run on top of a hypervisor, and each VM contains a full guest operating system (OS) with its own kernel in addition to the application and its resources. This leads to considerable overhead: each VM is independent and typically takes up more space, has higher resource demands, and starts more slowly.

Containers, on the other hand, run directly on the host OS’s kernel, sharing the underlying OS while maintaining isolation between applications. This makes containers lightweight — they don’t need their own OS, and they can start up almost instantly. Containers package only the application and its dependencies, making them much more efficient in terms of resource usage.

This design choice results in several advantages:

- Portability: Containers can be moved across environments (like from a developer’s laptop to a test server or to a cloud environment) without worrying about incompatibility issues.

- Speed: Containers are fast to deploy because they don’t require a full OS boot-up. This allows developers to spin up new instances almost instantly.

- Efficiency: Since containers share the host OS, they consume fewer resources compared to VMs, leading to better utilization of system resources.

In summary, containerization gives developers the ability to create self-contained, isolated environments for applications that can be easily deployed, scaled, and managed across different platforms.

Historical Evolution of Containerization

The journey began decades ago, with early ideas that laid the groundwork for the powerful tools we have today.

The Early Days: Chroot and Unix

In the late 1970s, Unix introduced a feature known as chroot (Change Root). It allowed a process to alter its view of the file system by isolating itself in a new root directory. This was a primitive form of process isolation, setting the stage for modern containerization.

Key Details:

- Chroot (Change Root): Allowed a process to change its root directory, isolating it from the system.

- Foundation for Isolation: Chroot was a fundamental first step toward containerization, providing basic process isolation.

- Limitations: The isolation was not complete—chroot didn’t offer full resource isolation, paving the way for better solutions later on.

The Rise of Linux Containers (LXC)

As technology evolved, so did the need for more sophisticated isolation. Enter Linux Containers (LXC), first released in 2008. LXC leveraged features of the Linux kernel to create lightweight, isolated environments for processes.

Key Details:

- Linux Containers (LXC): Used kernel features like cgroups (control groups) and namespaces to manage isolation of processes, networks, and file systems.

- Lightweight & Scalable: Allowed multiple containers to run on a single machine, each with independent resources.

- Challenges: Despite their improvements, LXC still required manual configuration and was mostly used by system administrators, not developers.

- Evolution: LXC served as the foundation for modern container technologies, setting the stage for Docker and Kubernetes.

Docker: Revolutionizing Containerization

In 2013, Docker revolutionized containerization by simplifying the process. It made it possible for developers to package applications and all their dependencies into a container image, ensuring consistency across different environments—from development to production.

Key Details:

- Simplified Containerization: Docker introduced an easy-to-use interface, turning containerization into a mainstream solution.

- Portable Container Images: Enabled apps and dependencies to be packaged into self-contained units, making them portable and consistent across different environments.

- Docker Hub: A platform for sharing container images, speeding up development cycles and fostering collaboration among developers.

- Impact: Docker’s ease of use helped drive the widespread adoption of containers across industries, bringing containerization into the spotlight.

The Rise of Kubernetes

As Docker containers gained popularity, the need to manage them at scale arose. This led to the development of Kubernetes, an open-source container orchestration platform created by Google. Kubernetes addressed the challenge of deploying, scaling, and managing thousands of containers across multiple hosts.

Key Details:

- Managing Containers at Scale: Kubernetes made it easy to orchestrate large-scale containerized applications, automating deployment, scaling, and management.

- Key Features: Kubernetes offers automated rollouts and rollbacks, load balancing, service discovery, and self-healing.

- Cloud-Native Approach: It enabled the efficient management of microservices-based applications, pushing the rise of cloud-native development.

- Standardized Container Management: Kubernetes became the industry standard for container orchestration, ensuring consistent and reliable container management across cloud platforms.

Key Containerization Tools and Their Use Cases

| Tool | Purpose | Best Use Case |
| --- | --- | --- |
| Docker | Containerization and image creation | Building and running containers |
| Kubernetes | Container orchestration and management | Managing large-scale containerized applications |
| Docker Compose | Defining and running multi-container Docker applications | Local development environments with multiple containers |
| Helm | Kubernetes package manager | Simplifying deployment of complex Kubernetes apps |
| Prometheus | Monitoring and alerting | Collecting and storing metrics from containerized apps |

Why Does Containerization Matter for Modern Application Development?

Containers enable developers to build, test, and deploy applications consistently across environments. Their lightweight nature reduces overhead, accelerates development cycles, and simplifies scaling, making them a cornerstone of modern microservices and DevOps practices.

Containerization also supports the growing shift towards microservices, enabling independent scaling and management of application components. As businesses look to accelerate deployment and improve operational efficiency, containers make it easier to automate workflows, scale rapidly, and maintain flexibility—key factors in meeting the demands of modern development.

As a result, containerization not only simplifies the development process but also enhances security and resource optimization, making it indispensable for building scalable, reliable applications in today’s dynamic landscape.

Key Components

The key components of a container include:

- Container Image: This is a snapshot of the application, including all its dependencies, libraries, binaries, and configuration files. It ensures that the container can run consistently across any environment.

- Container Runtime: The container runtime, such as Docker or containerd, is responsible for pulling container images, starting and stopping containers, and ensuring that they run properly in their isolated environments.

- Namespaces: These give each container its own isolated view of system resources. For instance, the PID namespace isolates process IDs, and the Network namespace isolates network interfaces.

- Control Groups (cgroups): Cgroups limit and account for the system resources (CPU, memory, etc.) each container may use, so that one container cannot over-consume resources and impact other containers or the host system.

- Volumes: Volumes are used to store data persistently outside of containers. Containers themselves are ephemeral (temporary), but volumes allow data to survive container restarts or deletions.
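To make the cgroups component a little more concrete: on Linux, the cgroup membership of a process is listed in `/proc/<pid>/cgroup`, one line per hierarchy in the form `hierarchy-ID:controller-list:cgroup-path`. The following is a minimal Python sketch that parses such lines. The names `CgroupEntry`, `parse_cgroup_line`, and `read_own_cgroups` are illustrative choices, not part of any container tool, and the file only exists on Linux hosts.

```python
from typing import List, NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: List[str]
    path: str


def parse_cgroup_line(line: str) -> CgroupEntry:
    """Parse one 'hierarchy-ID:controller-list:cgroup-path' line."""
    # Split on the first two colons only, because the path may contain colons.
    hierarchy_id, controllers, path = line.strip().split(":", 2)
    return CgroupEntry(
        hierarchy_id=int(hierarchy_id),
        # cgroup v2 lines have an empty controller list ("0::/path").
        controllers=controllers.split(",") if controllers else [],
        path=path,
    )


def read_own_cgroups(proc_file: str = "/proc/self/cgroup") -> List[CgroupEntry]:
    """Return the cgroup entries of the current process (Linux only)."""
    with open(proc_file) as handle:
        return [parse_cgroup_line(line) for line in handle if line.strip()]
```

Calling `read_own_cgroups()` inside a container and on the host would typically show different cgroup paths, which is one way to see that the container's processes are placed in their own resource group.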

How Container Images Support Application Deployment

One of the main advantages of containers is the concept of a container image. A container image is a snapshot of an application and its dependencies. It enables developers to package an application in a way that can be consistently deployed in any environment. The building blocks of a container image are:

- Base Image: A minimal operating system or framework upon which the application is built (e.g., Alpine Linux, Ubuntu).

- Application Code: The actual application code and any dependencies it requires, such as libraries, configuration files, and binaries.

- Containerfile/Dockerfile: A set of instructions that define how the container image should be built. It specifies the base image, the application to run, and any other configurations.

Container images ensure consistency, as the exact same image can be deployed in any environment, reducing the risk of "works on my machine" issues.
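As a rough illustration of how the three building blocks fit together, the Python sketch below assembles the text of a small Dockerfile from a base image, an application source directory, and a start command. The function name `render_dockerfile`, the `/app` working directory, and the example image tag are assumptions made for illustration; a real project would usually write its Dockerfile by hand.

```python
import json
from typing import List


def render_dockerfile(base_image: str, source_dir: str, command: List[str]) -> str:
    """Return Dockerfile text covering base image, application code, and start command."""
    lines = [
        f"FROM {base_image}",          # base image
        "WORKDIR /app",                # hypothetical working directory
        f"COPY {source_dir} /app",     # application code and its files
        f"CMD {json.dumps(command)}",  # exec-form start command (a JSON array)
    ]
    return "\n".join(lines) + "\n"


# Illustrative values only; the image tag and file names are assumptions.
print(render_dockerfile("python:3.12-alpine", ".", ["python", "app.py"]))
```

Building the resulting file with `docker build` would produce an image that can then be run unchanged in any environment with a container runtime.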

The Role of Containers in Modern Application Development

Containers play a crucial role in modern software development, particularly in agile environments. They allow developers to:

- Work in isolated, reproducible environments that mirror production.

- Focus on building code without worrying about infrastructure configuration.

- Scale applications easily and manage them in a cloud environment.

With containers, development, testing, and production environments are aligned, which leads to faster deployment and fewer bugs when moving from development to production.

Architecture of Containerized Systems

The architecture of containerized systems involves several core components that work together to provide a robust and scalable infrastructure for applications.

Let's break down the key architectural components of containers and how they function.

Host Operating System and Kernel

At the core of every containerized environment lies the host operating system (OS). Containers do not require their own OS; instead, they run on the host OS’s kernel, which provides process scheduling, memory management, and other low-level system functionalities.

The key distinction here is that containers share the same OS kernel, which makes them lightweight compared to virtual machines (VMs), which need their own OS. This shared kernel structure is what allows containers to start up quickly and use fewer resources.

Container Runtime: The Engine Behind Containerization

The container runtime is the software responsible for running containers on the host OS. Docker is the most popular container runtime, but others like containerd and CRI-O are also widely used. The runtime interacts with the OS kernel to isolate and manage containers.

The container runtime is responsible for:

- Image management: Pulling container images from a registry and managing them on the local host.

- Execution: Starting, stopping, and managing containers.

- Networking: Configuring networking interfaces for containers to communicate with each other and external systems.

Container Images and Containers

A container image is a snapshot of a filesystem that contains everything needed to run a specific application or service, including the code, libraries, binaries, and configurations. These images are portable and can be shared across different environments, ensuring consistency.

Once a container image is pulled from a registry, the container runtime uses it to start an isolated instance known as a container. The container itself is a running process that is isolated from the rest of the system, meaning it has its own filesystem, networking, and environment variables.

Container Orchestration: Managing Containers at Scale

When working with multiple containers, container orchestration tools like Kubernetes and Docker Swarm come into play. These tools automate the deployment, scaling, and management of containerized applications, ensuring high availability and fault tolerance.

Container orchestration handles the following:

- Scheduling: Assigning containers to nodes based on resource needs and constraints.

- Scaling: Automatically adjusting the number of container instances based on demand.

- Load Balancing: Distributing traffic evenly across containers to ensure optimal performance.

- Service Discovery: Allowing containers to locate and communicate with each other.

- Health Monitoring: Continuously checking container health and restarting or replacing failed ones.

- Rolling Updates: Deploying updates with minimal downtime by incrementally replacing old containers.

- Rollback: Reverting to a previous version if an update fails.

- Resource Allocation: Managing CPU, memory, and storage usage to prevent overloading.

- Networking: Handling container communication and network policies.

- Storage Management: Providing persistent storage for stateful applications.

- Security: Enforcing access controls, secrets management, and network policies.

- Configuration Management: Managing and injecting configuration data into containers.

- Logging and Monitoring: Collecting logs and metrics for troubleshooting and performance analysis.

- Self-Healing: Automatically replacing or rescheduling failed containers to maintain desired state.
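The scheduling responsibility in the list above can be sketched in a few lines of Python. This is a simplified first-fit placement under assumed inputs (CPU in cores, memory in MiB), not the algorithm any particular orchestrator uses; the `Node` class and `schedule` function are hypothetical names introduced for this example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_free: float  # CPU cores still unallocated
    mem_free: int    # MiB of memory still unallocated
    containers: List[str] = field(default_factory=list)


def schedule(nodes: List[Node], name: str, cpu: float, mem: int) -> Optional[str]:
    """Place a container on the first node with enough free CPU and memory."""
    for node in nodes:
        if node.cpu_free >= cpu and node.mem_free >= mem:
            # Reserve the requested resources on the chosen node.
            node.cpu_free -= cpu
            node.mem_free -= mem
            node.containers.append(name)
            return node.name
    return None  # no node can satisfy the request


cluster = [Node("node-a", cpu_free=1.0, mem_free=1024),
           Node("node-b", cpu_free=4.0, mem_free=8192)]
print(schedule(cluster, "web", cpu=0.5, mem=512))
print(schedule(cluster, "db", cpu=2.0, mem=4096))
```

Production schedulers weigh many more signals (affinity rules, taints, spreading across zones), but the core idea of matching resource requests against free capacity is the same.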

Networking and Storage

Networking and persistent storage are critical for the proper functioning of containerized applications.

- Networking: Containers need a way to communicate with each other and with external services. Docker uses the concept of bridge networks, where each container is connected to a virtual bridge network that allows communication between containers on the same host.

- Overlay Networks: For containers running across multiple hosts, overlay networks ensure seamless communication by abstracting the underlying physical network.

- Storage: Containers are ephemeral by nature, meaning data written inside a container is lost once the container is removed. To address this, persistent storage solutions like Docker Volumes, Kubernetes Persistent Volumes (PVs), and cloud storage services are used to store data that needs to survive container restarts or failures.


Performance and Benchmarks

Understanding the performance characteristics of containers is essential for optimizing resource usage and ensuring reliable operation.

In this section, we will cover key performance factors, best practices for optimizing container performance, and benchmarks comparing containerization approaches.

Key Performance Factors for Containers

Container performance depends on several factors, including resource allocation, the container runtime, and the underlying host system's resources. Here are the main performance aspects you should consider when using containers:

- CPU Usage: Containers share the CPU resources of the host system. Excessive CPU usage in one container can affect the performance of other containers running on the same system.

- Memory Usage: Containers are lightweight but still consume memory. When a container uses more memory than it has been allocated, it may be pushed into swap or terminated by the kernel's out-of-memory (OOM) killer, either of which significantly affects performance.

- Disk I/O: Containers that generate or rely on a large volume of data may experience disk I/O bottlenecks, particularly when running on shared storage.

- Networking Latency: Containers that need to communicate over the network can be affected by latency and throughput limitations, especially in a distributed setup.

Container Benchmarks: Comparing Approaches

To provide a practical sense of how containers perform under various workloads, the table below gives rough comparisons. The figures are approximate and vary with hardware, image size, and workload:

| Category | Docker Containers | Virtual Machines (VMs) | Kubernetes |
| --- | --- | --- | --- |
| Startup Time | ~1–2 seconds | ~1–5 minutes | Adds ~5–10 seconds per pod for orchestration |
| Resource Overhead | ~50–150 MB per container | ~1–5 GB per VM | Overhead depends on the cluster size (~10% for orchestration tasks) |
| Density | Hundreds of containers per host | ~10–20 VMs per host | Supports large-scale container deployments (thousands) |
| Use Case | Best for single-container apps or local setups | Suitable for legacy apps or isolated workloads | Ideal for managing microservices at scale |
| Scalability | Manual scaling | Scaling tied to VM provisioning | Auto-scaling, horizontal/vertical pod scaling |
| Fault Tolerance | Limited (manual recovery or restart required) | VM-level failover with downtime | Automated failover with self-healing pods |
| Multi-Container Apps | Simple setups using Docker Compose | Requires individual VMs per service | Orchestrates multi-container workloads seamlessly |
| Networking Performance | Minimal latency (single-host setups) | Additional hops between VMs increase latency | Optimized for multi-cluster and cross-node networking |
| Storage Efficiency | Uses shared volumes, lower persistence costs | High due to VM disk duplication | Integrates with cloud-native storage like EBS, Persistent Disks |
| Cost per Instance | Lower due to shared OS | Higher due to full OS requirements | Varies; Kubernetes management incurs operational costs |

Best Practices for Performance Optimization

To ensure optimal performance of your containers, follow these strategies:

Optimizing Container Images

Reducing the size of container images plays a significant role in improving startup times and resource usage. Smaller images are quicker to pull and deploy, which is especially important in high-demand environments.

- Use Multi-Stage Builds: This ensures that only the necessary components are included in the final image, reducing its size.

- Minimize Base Image Size: Start with a minimal base image like alpine to avoid unnecessary overhead.

Resource Allocation Strategies

Allocating resources appropriately is crucial to ensuring that containers are optimized for both performance and stability.

- Set Resource Limits: Limit the amount of CPU and memory a container can consume to avoid resource contention with other containers running on the same host.

```bash
docker run -d --memory="1g" --cpus="2.0" my-app
```

- Use Resource Requests and Limits in Kubernetes: For Kubernetes, set both requests and limits for each container. Requests tell the scheduler how much CPU and memory to reserve when placing the pod, while limits cap what the container may consume at runtime.
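As a hedged illustration of requests and limits, the Python sketch below builds a Pod manifest as a plain dictionary and prints it as JSON. The `resources.requests` and `resources.limits` fields are part of the Kubernetes container spec; the function name `pod_manifest`, the pod and image name `my-app`, and the specific request values are assumptions chosen to mirror the `docker run` limits shown earlier.

```python
import json


def pod_manifest(name: str, image: str) -> dict:
    """Build a Pod manifest whose single container has requests and limits."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {
                        # Reserved at scheduling time (illustrative values).
                        "requests": {"cpu": "500m", "memory": "256Mi"},
                        # Hard caps at runtime, matching --cpus=2.0 and 1 GiB.
                        "limits": {"cpu": "2", "memory": "1Gi"},
                    },
                }
            ]
        },
    }


print(json.dumps(pod_manifest("my-app", "my-app"), indent=2))
```

Saved to a file, the printed JSON can be applied with `kubectl apply -f`, since kubectl accepts JSON manifests as well as YAML.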

Networking Optimization

To prevent bottlenecks, optimize container networking by:

- Using Custom Networks: Create isolated networks for containers that need to communicate with each other while minimizing external traffic interference.

```bash
docker network create --driver bridge my_network
docker run -d --network my_network my-container
```

- Optimize DNS Resolution: Using efficient DNS systems like CoreDNS in Kubernetes environments ensures faster and more reliable name resolution for containers.

Container Orchestration

Managing and scaling containerized applications in real-world environments often requires advanced orchestration. Container orchestration automates the deployment, scaling, networking, and management of containers, ensuring they run efficiently and reliably across multiple environments.
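One way to picture what an orchestrator automates is a reconciliation pass: compare the desired number of replicas with what is actually running, then correct the difference. The Python sketch below is a deliberately simplified model of that idea, not the control loop of Kubernetes or any other tool; `reconcile` and the `replica-N` naming scheme are hypothetical.

```python
import itertools
from typing import Dict

_ids = itertools.count(1)  # source of names for newly started replicas


def reconcile(desired_replicas: int, containers: Dict[str, bool]) -> Dict[str, bool]:
    """Return the container set after one reconciliation pass.

    `containers` maps a container name to True (healthy) or False (failed).
    """
    # Self-healing: failed containers are dropped.
    current = {name: ok for name, ok in containers.items() if ok}
    # Scale up: start replacements until the desired count is reached.
    while len(current) < desired_replicas:
        current[f"replica-{next(_ids)}"] = True
    # Scale down: stop surplus containers (most recently added first).
    while len(current) > desired_replicas:
        current.popitem()
    return current


state = reconcile(3, {"web-a": True, "web-b": False})
print(sorted(state))
```

Running such a pass repeatedly is what keeps the system converging on the declared state even as individual containers fail.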

Key Orchestration Tools

- Kubernetes:
  - Developed by Google, Kubernetes has become the leading platform for orchestrating containerized applications.
  - Automates deployment, scaling, and maintenance of containerized workloads.
  - Offers features like service discovery, load balancing, self-healing, and automated rollouts/rollbacks.

- Docker Swarm:
  - A native clustering tool for Docker.
  - Simplifies container orchestration by integrating seamlessly with Docker CLI and Docker Compose.

- Apache Mesos:
  - A versatile platform that supports container orchestration alongside other distributed systems.

| Tool | Description | Pros | Cons |
| --- | --- | --- | --- |
| Kubernetes | Open-source system for automating deployment and scaling of containerized apps | Industry standard, large community, highly scalable | Steep learning curve, complex setup |
| Docker Swarm | Native clustering and orchestration for Docker containers | Easy to use, integrates well with Docker | Less powerful than Kubernetes, limited features |
| Apache Mesos | Distributed systems kernel that abstracts resources across a cluster | Great for large-scale clusters, supports diverse workloads | Complexity, smaller ecosystem for container orchestration |
| Amazon ECS | Fully managed container orchestration service by AWS | Tight integration with AWS services, easy scaling | AWS-centric, limited portability |
| OpenShift | Kubernetes-based container platform from Red Hat | Built-in CI/CD, enterprise support, security features | Heavier resource footprint, less flexible than plain Kubernetes |

Persistent Storage and Volumes for Containers

Persistent storage is essential for containerized workloads that need to retain data beyond the container’s lifecycle. Containers are typically treated as stateless, and data written inside a container is lost when the container is removed. Persistent storage ensures data durability and availability across container restarts and migrations.

Persistent Volumes in Kubernetes

Persistent Volumes (PVs) provide an abstraction over physical storage, enabling containers to store and retrieve data consistently. Kubernetes manages PVs through Persistent Volume Claims (PVCs), allowing applications to request storage dynamically.

Key Features:

- Durability: Ensures that data survives container termination.

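- Dynamic Provisioning and Expansion: Storage classes can provision volumes on demand, and some allow a claim's capacity to be expanded as application needs grow.

- Backup and Restore: Volume snapshots, where the storage driver supports them, help protect and recover data after failures.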
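To show how a claim and a pod relate, the Python sketch below builds a Persistent Volume Claim and a Pod that mounts it, both as plain dictionaries. The manifest fields (`accessModes`, `resources.requests.storage`, `persistentVolumeClaim.claimName`, `volumeMounts`) follow the Kubernetes API; the names `data-claim`, `my-app`, the `/data` mount path, and the `1Gi` size are assumptions for illustration.

```python
import json


def pvc_manifest(claim_name: str, size: str) -> dict:
    """Build a Persistent Volume Claim requesting `size` of storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": claim_name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # mounted read-write by one node
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_using_claim(pod_name: str, image: str, claim_name: str) -> dict:
    """Build a Pod that mounts the claim at /data (hypothetical path)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": pod_name,
                "image": image,
                "volumeMounts": [{"name": "data", "mountPath": "/data"}],
            }],
            "volumes": [{
                "name": "data",
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


print(json.dumps(pvc_manifest("data-claim", "1Gi"), indent=2))
print(json.dumps(pod_using_claim("my-app", "my-app", "data-claim"), indent=2))
```

Because the pod refers to the claim only by name, the pod can be deleted and recreated while the data behind the claim remains in place.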

Common Persistent Storage Solutions

- Host Path Volumes: Store data on the host system; suitable for non-production workloads.

- Cloud Storage: Integrates with cloud services like AWS EBS, Azure Disks, and Google Persistent Disks.

- Distributed Storage Systems: Use tools like Ceph or GlusterFS for high availability and scalability.

Distributed File Systems

Distributed file systems enable multiple containers across nodes in a cluster to access shared storage simultaneously. This is particularly valuable in microservices architectures where real-time data sharing is critical.

What Are Distributed File Systems?

A distributed file system breaks data into smaller pieces and stores them across multiple nodes. Containers access this data seamlessly, as though it were stored locally, while the system handles replication, fault tolerance, and consistency.
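The chunk-and-replicate idea can be sketched briefly in Python. The placement rule here (round-robin over the node list) is an assumption chosen for simplicity and is not how Ceph, GlusterFS, or any specific system places data; `place_chunks` and `readable_after_failure` are hypothetical helper names.

```python
from typing import Dict, List


def place_chunks(data: bytes, chunk_size: int, nodes: List[str],
                 replicas: int) -> Dict[int, List[str]]:
    """Map each chunk index to the nodes that hold a copy of that chunk."""
    if replicas > len(nodes):
        raise ValueError("cannot place more replicas than there are nodes")
    chunk_count = (len(data) + chunk_size - 1) // chunk_size  # ceiling division
    return {
        index: [nodes[(index + r) % len(nodes)] for r in range(replicas)]
        for index in range(chunk_count)
    }


def readable_after_failure(placement: Dict[int, List[str]], failed: str) -> bool:
    """True if every chunk still has at least one copy off the failed node."""
    return all(any(node != failed for node in holders)
               for holders in placement.values())


layout = place_chunks(b"x" * 10, chunk_size=4, nodes=["n1", "n2", "n3"], replicas=2)
print(layout)
print(readable_after_failure(layout, "n1"))
```

With two replicas per chunk, losing any single node still leaves every chunk readable, which is the fault-tolerance property the paragraph above describes.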

Benefits of Distributed File Systems

- Scalability: Grows with the number of containers and workloads.

- High Availability: Replicates data across nodes to minimize downtime.

- Simplified Data Management: Abstracts underlying complexity for easier handling.

Popular Distributed File Systems

- GlusterFS: Open-source and highly scalable.

- Ceph: Provides object, block, and file storage in a unified system.

- Amazon EFS: A fully managed cloud-based solution for shared storage.

Use Cases

- Shared logs and configurations.

- Data analytics where multiple containers process the same dataset.

- Content delivery systems requiring consistent access to large files.

Container Networking

One of the key aspects of containerization that enhances its flexibility is networking. Containers need to communicate with each other and the outside world, and this can be achieved through different networking models.

Practical Examples of Container Networking Configurations

Bridge Network: This is the default networking mode for Docker containers. Containers on the default bridge network can communicate with each other by IP address; resolving other containers by name requires a user-defined bridge network. However, they are isolated from the host system and other networks unless specifically configured.

```bash
docker run -d --name app1 --network bridge my-app
docker run -d --name app2 --network bridge my-app
```

Host Network: In this mode, containers share the host’s network namespace, meaning they can communicate with other containers and services as if they were running on the host system.

```bash
docker run -d --name app1 --network host my-app
```

Overlay Network: Used in Docker Swarm or Kubernetes, overlay networks allow containers on different hosts to communicate securely over a distributed network.

```bash
# Overlay networks require swarm mode (initialize it with `docker swarm init`)
docker network create -d overlay my-overlay-network
```

These configurations allow containers to communicate with each other and the outside world in flexible, scalable ways. However, managing multi-container communication across distributed systems can introduce challenges like network security, latency, and service discovery, which need careful attention in production systems.

Building Containers into the DevOps Workflow

Once a container image has been built, it remains unchanged. Each deployment of a specific image version delivers consistent behavior, ensuring predictable performance every time it’s used.

However, when updates like security patches or new features are needed, the image is rebuilt to create a new version. The previous version is then explicitly replaced in every environment. Despite internal changes in the updated packages, containers are designed to maintain consistent interactions with the external environment.

In a DevOps pipeline, this repeatability ensures that containers tested in CI/CD environments perform identically in production. This leads to reliable testing and minimizes the chances of bugs or errors affecting end users.
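The link between immutability and repeatability can be illustrated with content hashing: if a version is identified by a digest of its contents, identical contents always yield the same identifier and any change yields a new one. The Python sketch below is a simplified model of that idea and is not the digest calculation that Docker or any registry actually performs; `image_digest` and the file names are hypothetical.

```python
import hashlib
from typing import Dict


def image_digest(files: Dict[str, bytes]) -> str:
    """Return a digest that depends only on file paths and their contents."""
    hasher = hashlib.sha256()
    for path in sorted(files):  # sorted so insertion order does not matter
        hasher.update(path.encode("utf-8"))
        hasher.update(b"\0")
        hasher.update(files[path])
        hasher.update(b"\0")
    return "sha256:" + hasher.hexdigest()


v1 = image_digest({"app.py": b"print('v1')", "requirements.txt": b"flask"})
same = image_digest({"requirements.txt": b"flask", "app.py": b"print('v1')"})
patched = image_digest({"app.py": b"print('v1')", "requirements.txt": b"flask>=3"})
print(v1 == same, v1 == patched)
```

A pipeline that tests and deploys by such an identifier, rather than by a mutable tag, can be confident that production runs exactly what was tested.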

How Containers Play a Role in the DevOps Workflow

- Code: Containers standardize the development environment, ensuring consistency across all stages. By predefining package versions required by an application, they eliminate discrepancies between developers’ systems. For instance, a Python web application team can utilize a container image configured with specific versions of Python and dependencies, reducing conflicts and ensuring seamless collaboration.

- Build: Containers allow applications to be built independently of their target environments. Using tools like Docker or Podman, developers can package application code and dependencies into immutable images. These images are built once and stored for later deployment, ensuring a reliable and reproducible build process.

- Test: Automated testing benefits greatly from containerization, as the testing environment mirrors the production setup. This comprehensive testing approach ensures higher-quality software delivery by minimizing discrepancies between test and production stages.

- Release and Deploy: Containers simplify deployment by enabling changes through new container builds. Their ephemeral nature promotes modular architecture, such as microservices. For example, a retail application can have separate containers for inventory management and payment processing, allowing updates to one without disrupting the other.

- Operate: Containers isolate changes within their environment, reducing risks in live applications. For instance, two microservices in separate containers can rely on different versions of a library without causing conflicts, ensuring smoother operations in complex systems.

Key Benefits of Containers in DevOps

- Consistency: Containers standardize environments across development, testing, and production stages.

- Repeatability: Immutable containers deliver predictable behavior during each deployment.

- Reliability: By isolating environments, containers reduce interdependencies and enhance the resilience of microservices-based architectures.

Deployment Workflow and Strategies

Deployment Workflow

The deployment of containerized applications involves several key steps that ensure smooth and efficient rollouts. This workflow outlines the stages in deploying a containerized application from code commit to production.

- Code Commit: The process begins with developers committing changes to the codebase in version control systems like Git.

- Container Build: After the code is committed, a Docker image is built using the Dockerfile, packaging the application and its dependencies into a container.

- Unit Tests: Unit tests are run to verify the functionality of individual components of the application.

- Integration Tests: The application undergoes integration tests to ensure that the components interact correctly when deployed together.

- Container Registry Push: Once tests pass, the Docker image is pushed to a container registry like Docker Hub or a private registry for storage and access.

- Kubernetes Deployment: The container image is deployed to a container orchestration platform, such as Kubernetes, which manages scaling, deployment, and updates.

- Load Balancer Configuration: A load balancer is configured to distribute traffic efficiently across multiple instances of the application.

- Monitoring Setup: Monitoring tools (like Prometheus, Grafana, etc.) are set up to track the performance and health of the application post-deployment.
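The workflow above is essentially an ordered list of stages in which a failure stops everything that follows. A minimal Python sketch of that control flow is shown here; the stage names come from the list, while `run_pipeline` and the stand-in stage functions are hypothetical placeholders for real build, test, and deploy commands.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]  # (stage name, function returning success)


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            print(f"stage failed: {name}")
            break
        completed.append(name)
    return completed


# Stand-in stages; a real pipeline would invoke docker, test runners, kubectl, etc.
pipeline = [
    ("container build", lambda: True),
    ("unit tests", lambda: True),
    ("integration tests", lambda: False),  # simulated failure
    ("registry push", lambda: True),
    ("kubernetes deployment", lambda: True),
]
print(run_pipeline(pipeline))
```

Because the registry push comes after the tests, an image that fails testing is never published, which is the property that makes the ordering matter.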

Deployment Strategies Comparison

Different deployment strategies help manage the rollout process and minimize downtime or disruptions. Here’s a comparison of common deployment strategies used for containerized applications:

| Strategy | Description | Best For | Example Use Case |
| --- | --- | --- | --- |
| Blue-Green | Zero-downtime deployment by switching between two environments | Production-critical apps | Major app updates with minimal downtime |
| Canary | Gradual rollout, testing new versions with a small user group before full deployment | Feature testing, phased rollouts | Testing new features in production environments |
| Rolling Update | Incremental updates to the live application, one instance at a time | General application updates | Deploying non-breaking changes with minimal disruption |
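To illustrate the canary row, the Python sketch below decides whether a given user is served by the new version. Hashing the user ID keeps each user on the same version across requests; this is one common way to split traffic, assumed here for illustration, and in practice the split is often done by a load balancer or service mesh instead. The function name `routes_to_canary` is hypothetical.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Return True if this user falls inside the canary share of traffic."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent


users = [f"user-{n}" for n in range(1000)]
share = sum(routes_to_canary(u, 10) for u in users) / len(users)
print(f"canary share: {share:.1%}")
```

Raising `canary_percent` step by step only ever adds users to the canary group, so nobody flips back and forth between versions during the rollout.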

Implementation Example: Rolling Update Deployment

Below is an example of a Kubernetes Deployment that uses the built-in RollingUpdate strategy. Kubernetes Deployments do not offer a blue-green strategy type; a blue-green rollout is typically built from two Deployments, with a Service switched from one to the other.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blue-green-deployment
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:v2
          ports:
            - containerPort: 8080
```

- maxSurge: The maximum number of pods that can be created above the desired replica count during the update. Here, a 25% surge lets new pods start before old ones are removed.

- maxUnavailable: The maximum number of pods that may be unavailable at any point during the update. Here, up to 25% of the desired replicas can be offline.
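To make the percentages concrete: Kubernetes converts them to whole pod counts, rounding `maxSurge` up and `maxUnavailable` down. The helpers below are only an arithmetic sketch of that rule for the 3-replica manifest above; the function names are made up for illustration.

```bash
# maxSurge percentages round up to a whole pod.
max_surge_pods() {
  local replicas="$1" percent="$2"
  echo $(( (replicas * percent + 99) / 100 ))
}

# maxUnavailable percentages round down to a whole pod.
max_unavailable_pods() {
  local replicas="$1" percent="$2"
  echo $(( replicas * percent / 100 ))
}

echo "extra pods allowed: $(max_surge_pods 3 25)"           # 3 * 25% = 0.75, rounds up to 1
echo "pods that may be down: $(max_unavailable_pods 3 25)"  # 0.75 rounds down to 0
```

With 3 replicas, the rollout may therefore run up to 4 pods at once and never drops below 3 available.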

Deployment Configuration Best Practices

To ensure the success of your container deployments, follow these best practices:

- Use Declarative Configurations: Always define your deployment configurations in version-controlled files (e.g., YAML for Kubernetes). This allows for reproducibility and traceability.

- Implement Version Control for Deployments: Track changes to deployment configurations just as you would your application code. This helps in rollbacks and debugging.

- Automate Rollback Mechanisms: Automate rollback procedures in case the deployment fails. Kubernetes keeps a revision history for each Deployment, so a failed rollout can be reverted with kubectl rollout undo.

- Monitor Deployment Health: Use tools like Prometheus, Grafana, and ELK stack to monitor the health of your deployments and identify potential issues in real-time.

- Validate Configurations Before Production: Always validate configurations in staging environments before applying them to production to avoid surprises during live deployment.
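The automated rollback practice above might look like this in a deploy script. It is a sketch under assumptions: the 120-second timeout is arbitrary, and the Deployment name and manifest path are supplied by the caller.

```bash
# Apply a manifest, wait for the rollout, and undo it if it does not complete.
# Usage: deploy_or_rollback <deployment-name> <manifest-file>
deploy_or_rollback() {
  local name="$1" manifest="$2"

  kubectl apply -f "$manifest" || return 1

  if kubectl rollout status "deployment/$name" --timeout=120s; then
    echo "rollout of $name succeeded"
  else
    echo "rollout of $name failed, rolling back" >&2
    kubectl rollout undo "deployment/$name"
    return 1
  fi
}

# Example (not run automatically):
# deploy_or_rollback my-app deployment.yaml
```

The function still returns a non-zero status after a rollback, so the surrounding pipeline can mark the run as failed.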

Real-World Applications of Containerization

Containers are applied across industries to address real-world problems:

- Microservices: By breaking down complex applications into smaller, independent services, containers enable developers to deploy and scale each service independently, reducing time-to-market and improving fault tolerance.

- DevOps and CI/CD: Containers play a pivotal role in CI/CD (Continuous Integration/Continuous Delivery) pipelines by ensuring that the development, testing, and production environments are consistent. This leads to faster deployments and fewer bugs.

Example Docker Compose file that a CI/CD pipeline can use to build and run the web service:

```yaml
version: '3'
services:
  web:
    image: my-web-app:latest
    ports:
      - "80:80"
    build:
      context: .
      dockerfile: Dockerfile
```

- Cloud-Native Applications: Containers have become the cornerstone of cloud-native architectures, making it easier to manage and scale applications across cloud providers like AWS, Azure, and Google Cloud.

- Hybrid Cloud: With containers, organizations can build hybrid cloud environments, running applications across private and public clouds seamlessly.

Multi-Container Applications: Orchestrating Complex Systems with Ease

In real-world applications, it's common to have multi-container systems. For example, a web application might have separate containers for the frontend, backend, database, and caching layer. Managing multiple containers across different environments requires orchestration.

Kubernetes and Docker Compose are popular tools for orchestrating multi-container applications. These tools allow developers to define, deploy, and manage complex applications with ease, ensuring that containers work together smoothly.

Industry Use Cases

Across various industries, containers are driving innovation:

- E-commerce: Containers enable rapid scaling during high-demand periods like Black Friday.

- Banking and Finance: Containers provide the agility required for fast deployments and regulatory compliance.

- Healthcare: Containers help healthcare providers build secure, scalable applications for patient data management.

- Gaming: Containers allow for the rapid deployment of game servers and player instances.

From startups to enterprise-level businesses, containers are helping organizations achieve greater efficiency and scalability.

Security and Reliability

Containerized environments, if not properly configured, can present vulnerabilities that attackers may exploit. Ensuring robust container security involves careful configuration, ongoing monitoring, and adherence to best practices.

Container Security Risks:

- Vulnerabilities in Container Images: One of the most common security risks arises from using outdated or vulnerable container images. Containers are only as secure as the base image they are built upon. If the image contains security flaws or known exploits, these issues can affect the application. It's crucial to:

- Use Trusted Sources: Always pull images from trusted sources like Docker Hub’s official repositories, or private image registries with verified images.

- Scan for Vulnerabilities: Regularly scan images for vulnerabilities using tools like Clair, Trivy, or Anchore to ensure that the images you’re using are free from known security issues.

Here's a sample scan using Trivy:

```bash
trivy image my-app:latest
```

- Update Images Frequently: Regularly update base images to benefit from the latest security patches.
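In a pipeline, a scan is most useful when it can fail the build. The sketch below wraps Trivy's `--severity` and `--exit-code` options in a small gate; the function name `scan_gate` and the choice of HIGH and CRITICAL as the threshold are assumptions to adapt to your own policy.

```bash
# Fail when the image has HIGH or CRITICAL findings (or the scan itself errors).
# Usage: scan_gate <image>
scan_gate() {
  local image="$1"
  if trivy image --severity HIGH,CRITICAL --exit-code 1 "$image"; then
    echo "scan passed: $image"
  else
    echo "scan failed or found HIGH/CRITICAL vulnerabilities: $image" >&2
    return 1
  fi
}

# Example (not run automatically):
# scan_gate my-app:latest || exit 1
```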

- Running Containers as Root: Containers that run with root privileges can give attackers direct access to the underlying host system, enabling privilege escalation attacks. To mitigate this risk:

Always configure containers to run with non-root users. This limits the damage that can be done if a container is compromised.

Dockerfile Example:

```dockerfile
FROM node:14
USER node
```

By switching to a non-root user in your Dockerfile, you can restrict the container’s access and protect your system from unauthorized modifications.
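One way to check that an image really declares a non-root user is to read its configured user with `docker image inspect`. This is a sketch: the helper names are hypothetical, and it only inspects the image's default user, so it will not catch a container started with an explicit `--user root` override.

```bash
# Print the user an image is configured to run as (empty output means root).
image_user() {
  docker image inspect --format '{{.Config.User}}' "$1"
}

# Succeed only if the image declares a user other than root.
# A numeric "0" or "0:<gid>" also means root.
is_non_root_image() {
  local user
  user="$(image_user "$1")" || return 1
  case "$user" in
    ""|root|root:*|0|0:*) return 1 ;;
    *) return 0 ;;
  esac
}

# Example (not run automatically):
# is_non_root_image my-image || echo "my-image runs as root" >&2
```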

- Unrestricted Network Access: Containers often need to communicate with each other or the host system, but unrestricted network access can lead to vulnerabilities. To ensure secure networking:

- Use Docker Networks to isolate containers and control which ones can communicate with each other.

- Implement firewalls and network segmentation to limit the exposure of containers to unnecessary networks.
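As a sketch of the network isolation described above, the function below creates a user-defined network and attaches two containers to it. The network name `backend-net`, the container names, and the image names are all placeholders.

```bash
# Create a user-defined network and attach two containers to it.
# Containers on other Docker networks cannot reach these by default.
start_isolated_pair() {
  docker network create backend-net || return 1
  docker run -d --name db --network backend-net my-db-image || return 1
  docker run -d --name api --network backend-net my-api-image
}

# Example (not run automatically):
# start_isolated_pair
```

On a user-defined network the containers can reach each other by name (here `db` and `api`). Adding `--internal` to `docker network create` additionally blocks external access for that network.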

- Misconfigured Volumes: Volumes are used to store persistent data, but if sensitive host directories (e.g., /etc, /root) are improperly mapped to containers, attackers can gain unauthorized access to sensitive information.

- Be mindful of the directories you mount into containers. Use specific, isolated volumes to prevent exposure of critical host data.

- Avoid mounting sensitive files from the host unless absolutely necessary and ensure that the correct file permissions are applied.

Protecting Containers in Production:

Once your containers are in production, securing them during runtime becomes crucial. Containers are often exposed to production environments where malicious actors could attempt to exploit vulnerabilities. To protect your containers in production:

- Implement Runtime Security Policies: Enforce security policies that prevent containers from performing risky actions. For example, prevent containers from running in privileged mode, drop Linux capabilities they do not need, and isolate them from critical system resources.

- Access Control and Least Privilege: Use access control mechanisms like Role-Based Access Control (RBAC) in orchestrators like Kubernetes to enforce the principle of least privilege. This ensures that only the necessary users and services can access containers, reducing the attack surface.

- Runtime Monitoring and Intrusion Detection: Utilize monitoring tools to keep track of container behavior in real-time. Tools such as Falco or Sysdig can help detect unusual activities like unexpected file access or network connections, allowing you to identify potential breaches quickly.
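With plain Docker, the runtime restrictions above can be approximated with a handful of `docker run` flags. This is a minimal sketch: the UID:GID `1000:1000` is an arbitrary unprivileged user, and many applications will need a writable volume or a specific capability added back.

```bash
# Run an image with tightened runtime privileges:
#   --read-only                       root filesystem is mounted read-only
#   --cap-drop=ALL                    drop every Linux capability
#   --security-opt=no-new-privileges  block privilege gain via setuid binaries
#   --user=1000:1000                  run as an unprivileged UID:GID (assumed value)
run_hardened() {
  docker run -d \
    --read-only \
    --cap-drop=ALL \
    --security-opt=no-new-privileges \
    --user=1000:1000 \
    "$1"
}

# Example (not run automatically):
# run_hardened my-image
```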

Ensuring Compliance:

For industries subject to regulatory requirements (e.g., HIPAA, GDPR, PCI-DSS), ensuring that containers comply with these standards is essential. Containers can handle sensitive data, so protecting this information and maintaining compliance is critical.

- Data Encryption: Always encrypt sensitive data both at rest and in transit. Use encryption libraries and ensure your containers are configured to handle encrypted volumes and secure communication.

- Audit Logs and Monitoring: Keep detailed logs of container activities and access attempts. These logs can be invaluable for forensic investigations and demonstrate compliance during audits.

- Continuous Security Scanning: Regularly scan both the container images and the runtime environment for vulnerabilities. This proactive approach ensures that containers remain secure and compliant with regulatory requirements.

Best Practices for Developers

To truly master containerization, developers should focus on the following best practices:

- Creating Efficient and Lightweight Container Images: Optimize images by minimizing dependencies and using minimal base images.

- Optimizing Container Performance for Speed and Resource Efficiency: Use the docker stats command and cgroup limits to monitor and cap container resource usage.

- Scaling Containers: Use orchestration tools like Kubernetes to handle scaling effectively and automatically based on traffic.

- Managing Dependencies and Versioning: Ensure that containerized applications have clear versioning, and avoid unnecessary dependencies in your images.

- Monitoring and Troubleshooting: Set up monitoring solutions (e.g., Prometheus, Grafana) to track container performance and logs.
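For the scaling practice above, it helps to see how the Kubernetes Horizontal Pod Autoscaler sizes a workload: desired replicas = ceil(current replicas × current metric / target metric). The helper below is a simplified arithmetic sketch of that formula; it ignores the autoscaler's tolerance band and min/max bounds, and the function name is made up.

```bash
# desired = ceil(current_replicas * current_utilization / target_utilization)
desired_replicas() {
  local replicas="$1" current="$2" target="$3"
  echo $(( (replicas * current + target - 1) / target ))
}

echo "scale to: $(desired_replicas 3 90 60)"  # 3 * 90 / 60 = 4.5, rounds up to 5
```

In a cluster, a command along the lines of `kubectl autoscale deployment my-app --cpu-percent=60 --min=3 --max=10` would create an autoscaler with that 60% target; the Deployment name and bounds here are placeholders.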

Common Troubleshooting Scenarios and Solutions

While containers offer speed and scalability, there will be challenges when running containerized applications. Efficiently troubleshooting issues and optimizing performance are key to ensuring containers run smoothly and efficiently in production environments.

Below, we break down common troubleshooting scenarios, the tools and commands to resolve them, and strategies for optimizing your containers to perform at their best.

Container Won’t Start

If a container fails to start, this could be due to several reasons such as missing dependencies, incorrect configurations, or application crashes. Here's how to approach the issue:

Steps to resolve:

- Check the logs: Logs are often the first place to check when troubleshooting. They will often provide detailed information about why a container failed to start.

```bash
docker logs <container_name_or_id>
```

Look for error messages or missing dependencies.

- Check for application-specific issues such as unhandled exceptions or configuration errors.

- Inspect the container status:

```bash
docker ps -a
```

This command shows all containers, including stopped ones. If a container exited unexpectedly, look for the exit code. A non-zero exit code indicates an error.
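Some exit codes point to a likely cause. The sketch below maps the common ones and reads a stopped container's code with `docker inspect`; the function names are hypothetical, and the explanations are typical causes rather than guarantees.

```bash
# Translate common container exit codes into a likely cause.
explain_exit_code() {
  case "$1" in
    0)   echo "exited normally" ;;
    125) echo "docker run itself failed (for example, a bad flag)" ;;
    126) echo "command found but not executable" ;;
    127) echo "command not found in the image" ;;
    137) echo "killed by SIGKILL (128 + 9), often the out-of-memory killer" ;;
    143) echo "terminated by SIGTERM (128 + 15)" ;;
    *)   echo "application-specific error" ;;
  esac
}

# Look up the exit code of a stopped container and explain it.
why_exited() {
  local code
  code="$(docker inspect --format '{{.State.ExitCode}}' "$1")" || return 1
  echo "exit code $code: $(explain_exit_code "$code")"
}

# Example (not run automatically):
# why_exited my-container
```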

- Verify the Dockerfile or image configuration:

- Ensure that the Dockerfile or image used for the container build includes all the necessary dependencies.

- Consider rebuilding the image if you suspect the issue is with the image's state.

- Verify container resource availability: Sometimes, containers fail to start if there are insufficient system resources (memory, CPU, disk space). Check the system’s resource usage and container limits.

High CPU Usage

High CPU consumption can be a sign of inefficient code, resource mismanagement, or a misconfigured container. If your container consumes too much CPU, follow these steps:

Steps to resolve:

- Monitor container resource usage:

```bash
docker stats <container_name_or_id>
```

- This command provides real-time resource usage metrics, including CPU, memory, and network I/O.

- Look for containers with unusually high CPU usage and investigate whether they are stuck in an infinite loop or running a resource-intensive process.

- Investigate application behavior: In many cases, high CPU usage is caused by inefficient code. Review your application’s logic or profile it to identify bottlenecks.

- Resource limits and constraints: Consider setting CPU and memory limits for your containers to prevent one container from overusing system resources.

```bash
docker run -d --name my-container --memory="500m" --cpus="0.5" my-image
```

- This command restricts the container to use a maximum of 500MB of memory and 50% of a CPU core.

- Optimize the application code: Look for memory leaks, unoptimized database queries, or inefficient algorithms that might be consuming unnecessary CPU resources.

Networking Issues

Networking issues are common in containerized environments, especially when containers cannot communicate with each other or the outside world. Containers rely on proper network configurations for inter-container communication and external access.

Steps to resolve:

- Verify container network settings: Ensure that containers are attached to the correct network. Use the following command to inspect container networks:

```bash
docker network inspect <network_name>
```

- This will display the network's subnet and driver settings, along with each connected container and its IP address.

- If your container isn't listed under the correct network, consider reconnecting it or recreating the container with the proper network configuration.

- Check for port conflicts: Ensure no two containers are trying to publish the same port on the host system. You can see the published ports in the PORTS column of:

```bash
docker ps
```

- If there’s a conflict, stop one of the containers or change its port mapping.

- Ensure proper firewall settings: Sometimes firewall rules block container traffic. Check firewall configurations to ensure the necessary ports are open for communication.

```bash
sudo ufw status
```

- Inspect DNS resolution inside containers: Containers rely on DNS to resolve domain names. If DNS isn't working, check the DNS configuration inside the container:

```bash
docker exec -it <container_name_or_id> cat /etc/resolv.conf
```

- Ensure the correct DNS servers are listed, especially in network modes like bridge or host.

The Future of Containerization

As containerization continues to evolve, new technologies and advancements are emerging to enhance its capabilities. Some key trends to watch include:

- Beyond Docker: Container runtimes such as containerd and CRI-O are gaining momentum as lighter-weight alternatives built specifically to run containers under orchestrators like Kubernetes.

- AI and Machine Learning in Container Management: AI will play an increasingly important role in automating and optimizing container orchestration, resource allocation, and monitoring.

- Edge Computing: Containers will power edge computing solutions, where computation happens closer to the data source, enabling low-latency applications.

- Container Standards and Compatibility: Efforts are underway to create cross-platform compatibility and standardize container runtimes, enabling more universal adoption.

The future of containerization is filled with exciting possibilities, and developers must stay abreast of emerging trends to leverage the full potential of containers in their applications.