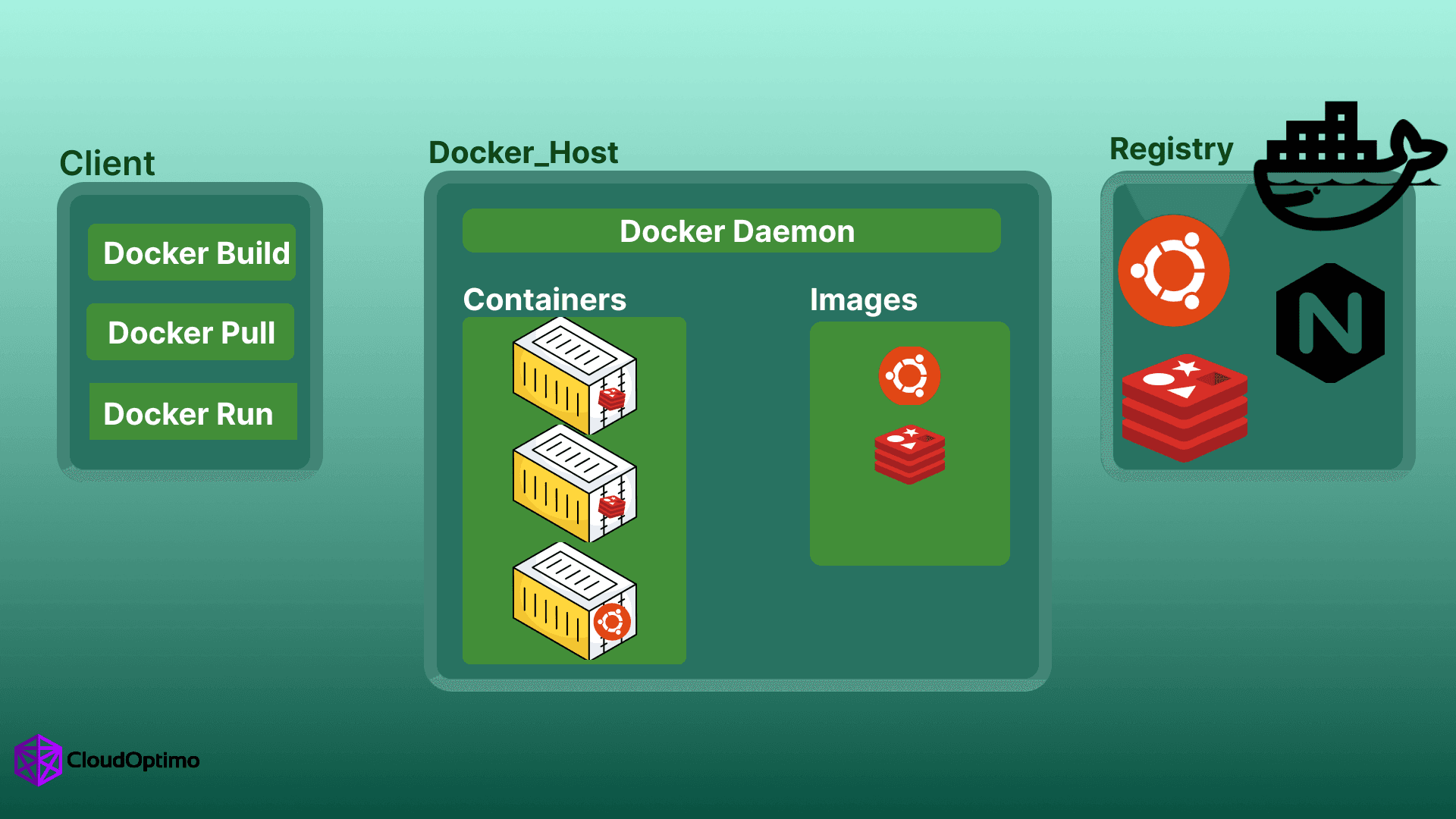

Docker is a platform that enables developers to easily package applications into containers—lightweight, portable environments that include everything needed to run an application. Kubernetes, on the other hand, is a container orchestration platform that automates the deployment, scaling, and management of those containers.

In this blog, we’ll dive into the key differences between Docker and Kubernetes, how they complement each other, and why developers and companies rely on them to streamline application development and deployment.

Overview of Containerization, Docker, and Kubernetes

To understand Docker and Kubernetes, it’s helpful to first look at the concept of containerization.

Containerization allows developers to package an application with all its dependencies (libraries, binaries, and other configurations) into a single unit—a container. Containers are fast, portable, and isolated from each other, making them ideal for consistent deployments across different environments.

Docker revolutionized this process by simplifying the creation, deployment, and management of containers. It makes containerization accessible, enabling developers to focus on writing code rather than worrying about dependencies.

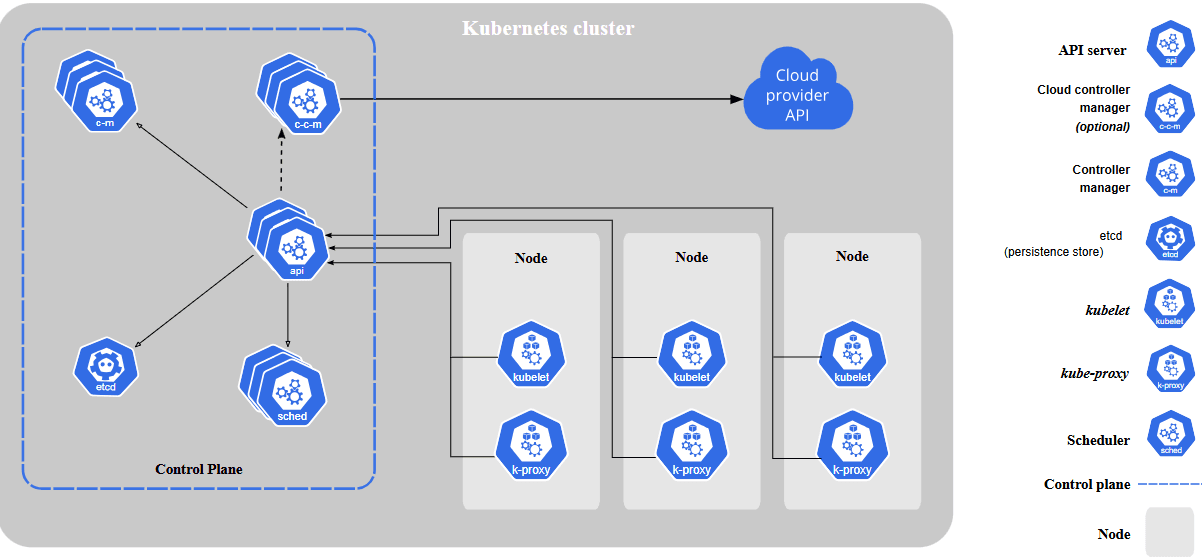

However, as applications grow in complexity, managing multiple containers across multiple systems becomes difficult. This is where Kubernetes steps in. It’s a robust platform that orchestrates and manages containers at scale, ensuring that containers are deployed efficiently, scaled according to demand, and kept available at all times.

The Evolution of Docker and Kubernetes

Docker and Kubernetes have dramatically transformed how modern applications are built and deployed. Let’s take a look at their histories and how they’ve influenced the software development landscape.

History and Growth of Docker and Kubernetes

Docker: Docker was first introduced in 2013 by Solomon Hykes, initially as a way to simplify the packaging of applications with their dependencies. Docker’s ability to create lightweight, consistent, and portable containers revolutionized software deployment, making it one of the most widely used containerization platforms today.

Kubernetes: Kubernetes was released as an open-source project by Google in 2014, drawing on the company's experience running containerized workloads at scale with internal systems such as Borg. It was donated to the Cloud Native Computing Foundation (CNCF) in 2015 and has since become the de facto standard for container orchestration in the industry.

Source: Kubernetes

How Docker and Kubernetes Have Transformed the Development Landscape

- Agile Development: Docker enabled developers to quickly package applications and their dependencies into containers, allowing for faster development cycles. Kubernetes, in turn, automated the deployment and scaling of these containers, making it easier to manage complex systems at scale.

- Microservices: Both Docker and Kubernetes have been key to the rise of microservices architectures. Docker allows each microservice to be packaged in its own container, while Kubernetes ensures that these containers can be efficiently managed, scaled, and orchestrated.

The Role of Kubernetes in the Cloud-Native Era

Kubernetes has played a pivotal role in the rise of cloud-native development, where applications are designed to run on distributed systems rather than monolithic servers. Kubernetes’ ability to automate the deployment, scaling, and management of containerized applications makes it an essential tool in the cloud-native ecosystem.

Kubernetes allows organizations to embrace cloud infrastructure and services more fully, ensuring high availability, automatic scaling, and self-healing capabilities, which are crucial for modern cloud-based applications.

Key Differences Between Docker and Kubernetes

Although Docker and Kubernetes are frequently used together, they serve different purposes within the container ecosystem. Below is a comparison of their core functions:

| Feature | Docker | Kubernetes |
| --- | --- | --- |
| Primary Role | Containerization: Creating and running containers. | Orchestration: Managing containers across clusters. |
| Scope | Single host, local environments. | Multi-host, distributed systems. |
| Scaling | Manual scaling of containers. | Automatic scaling based on demand. |
| High Availability | Limited support for failover and redundancy. | Built-in failover, replication, and self-healing. |
| Networking | Basic networking between containers. | Advanced service discovery, load balancing, and network policies. |
| Use Case | Ideal for development and single-container apps. | Best suited for large-scale, multi-container apps with high availability and scaling needs. |

Why Developers and Companies Use Them Together

While Docker and Kubernetes serve different purposes, their combination offers a powerful solution for modern application development and deployment.

- Efficient Development: Docker simplifies the process of building and packaging applications into containers. Developers can ensure consistency across various environments (development, testing, production) without worrying about configuration differences.

- Seamless Deployment: Once containers are packaged with Docker, Kubernetes takes over to manage deployment. It ensures that containers are deployed across a cluster of servers, auto-scaled when necessary, and kept healthy through its self-healing capabilities.

- Scalability and Flexibility: Kubernetes allows applications to scale automatically, ensuring that as demand fluctuates, containers can scale up or down with minimal manual intervention.

- Portability: Docker containers can run anywhere—from a developer’s laptop to the cloud. Kubernetes ensures that this portability is maintained, even when managing large-scale, complex applications.

Security Considerations in Docker and Kubernetes

As containers become more central to application development and deployment, security plays a critical role. Both Docker and Kubernetes offer various built-in features to address security challenges, but understanding and implementing security best practices is essential.

Docker Security Features

Docker includes several security mechanisms to help secure containers at the container level:

| Feature | Description |
| --- | --- |
| Image Scanning | Automatically scans container images for known vulnerabilities to ensure security before deployment. |
| Namespaces | Isolates container environments to prevent them from interfering with each other or the host system. |
| User Permissions | Allows restricting container access by using non-root users and limiting permissions. |

By using these features, Docker enhances container security and reduces attack surfaces. However, Docker alone may not address all security concerns in complex production environments.

Kubernetes Security Measures

Kubernetes also provides various tools to enhance security in a container orchestration environment:

| Feature | Description |
| --- | --- |
| RBAC (Role-Based Access Control) | Manages user access by defining granular permissions for users and service accounts. |
| Secrets Management | Ensures sensitive information (e.g., passwords, tokens) is stored and accessed securely. |
| Network Policies | Defines rules to control communication between pods and services, enhancing network isolation. |

These tools, when configured properly, allow for fine-grained access control and safe handling of sensitive data in Kubernetes.
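As a rough illustration of the RBAC row above, the following sketch grants read-only access to pods in one namespace. The namespace `staging`, the role name `pod-reader`, and the service account `ci-deployer` are hypothetical names chosen for this example, not values from any particular cluster.

```yaml
# Role: read-only access to pods in the (hypothetical) "staging" namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: staging
rules:
  - apiGroups: [""]          # "" refers to the core API group
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
# RoleBinding: attach the role to a (hypothetical) service account
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: staging
subjects:
  - kind: ServiceAccount
    name: ci-deployer
    namespace: staging
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Because a Role is namespaced, the service account gains no access outside `staging`; cluster-wide permissions would need a ClusterRole and ClusterRoleBinding instead.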

Best Practices for Securing Containers in Production

- Regularly update images: Ensure your container images are always up to date to patch known vulnerabilities.

- Use security tools: Employ tools like Clair for vulnerability scanning and Docker Content Trust for signed images.

- Limit container privileges: Run containers as non-root users and restrict their capabilities to reduce potential attack vectors.

- Use network segmentation: In Kubernetes, configure Network Policies to restrict communication between containers, reducing exposure.
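The "limit container privileges" practice can be sketched in a pod spec roughly as follows. The pod name, image reference, and user ID are placeholders assumed for illustration, and the read-only root filesystem only works if the application does not need to write to its own image layers.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hardened-app                 # hypothetical name
spec:
  securityContext:
    runAsNonRoot: true               # refuse to start if the image would run as root
    runAsUser: 10001                 # arbitrary non-root UID, assumed for this sketch
  containers:
    - name: app
      image: registry.example.com/myapp:1.0.0   # placeholder image
      securityContext:
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
        capabilities:
          drop: ["ALL"]              # drop all Linux capabilities, add back only what is needed
```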

Networking in Docker vs Kubernetes

Container networking allows communication between containers and with external services. Docker and Kubernetes offer different networking approaches, with Kubernetes providing more complex and advanced networking features for managing large clusters.

Basic Networking in Docker

Docker provides several networking modes for containers, each suited to different needs:

| Network Type | Description |
| --- | --- |
| Bridge | The default mode; containers communicate via a virtual bridge network within the same host. |
| Host | Containers share the host’s network interface, bypassing Docker’s networking layer. |
| Overlay | Enables communication between containers across different hosts, useful for multi-host setups. |

These options are sufficient for smaller applications or single-host environments.
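For a single-host setup, a Compose file along these lines is one way to put two containers on a shared bridge network. The service names, the `backend` network name, and the API image are assumptions made for this sketch.

```yaml
# docker-compose.yml (sketch): two services on a user-defined bridge network
services:
  web:
    image: nginx:stable
    ports:
      - "8080:80"          # host port 8080 -> container port 80
    networks:
      - backend
  api:
    image: registry.example.com/myapp-api:1.0.0   # placeholder image
    networks:
      - backend
networks:
  backend:
    driver: bridge
```

On a user-defined network like this, containers can reach each other by service name (for example, `web` can call `http://api`), which avoids hard-coding container IP addresses.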

Kubernetes Networking

Kubernetes offers a more advanced and flexible networking model with the following key components:

| Feature | Description |
| --- | --- |
| Pod Networking | Every pod gets a unique IP address. Containers in the same pod share it and communicate over localhost, while pods reach each other directly by IP. |
| Service Discovery | Services within Kubernetes are automatically assigned DNS names for easier communication across pods. |
| Ingress Controllers | Manages external HTTP/HTTPS access to services, providing load balancing and SSL termination. |

Kubernetes handles complex networking needs across clusters, making it suitable for large-scale production environments.
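The Service and Ingress rows can be illustrated with a minimal pair of manifests. This sketch assumes pods labeled `app: webapp` that listen on port 8080, a hypothetical hostname `webapp.example.com`, and an ingress controller already installed in the cluster (an Ingress resource has no effect without one).

```yaml
# Service: stable virtual IP and DNS name in front of the matching pods
apiVersion: v1
kind: Service
metadata:
  name: webapp
spec:
  selector:
    app: webapp            # assumed pod label
  ports:
    - port: 80             # port exposed by the Service
      targetPort: 8080     # port the container listens on (assumed)
---
# Ingress: routes external HTTP traffic for one host to the Service
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webapp
spec:
  rules:
    - host: webapp.example.com   # hypothetical hostname
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: webapp
                port:
                  number: 80
```

Inside the cluster, other pods in the same namespace can then reach the application simply as `http://webapp`.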

Key Differences in Container Networking Models

| Feature | Docker | Kubernetes |
| --- | --- | --- |
| Networking Model | Single-host communication | Multi-host communication with advanced features like service discovery and ingress controllers. |
| Service Discovery | Manual configuration or third-party tools | Automatic DNS-based discovery and load balancing. |
| Network Isolation | Basic isolation between containers | Network policies allow fine-grained control over which pods can communicate. |
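To make the network isolation row concrete, here is a sketch of a NetworkPolicy that only lets pods labeled `role: frontend` reach pods labeled `app: webapp` on TCP port 8080. The labels, policy name, and port are assumptions for illustration, and enforcement depends on the cluster's network plugin supporting NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-webapp   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: webapp                  # pods this policy protects (assumed label)
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend       # only these pods may connect (assumed label)
      ports:
        - protocol: TCP
          port: 8080
```

Once a pod is selected by an ingress policy, any inbound traffic not explicitly allowed is dropped.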

CI/CD Integration with Docker and Kubernetes

Both Docker and Kubernetes integrate seamlessly into CI/CD workflows, allowing teams to automate the deployment process. Docker focuses on containerizing applications, while Kubernetes manages their deployment, scaling, and monitoring.

How Docker Integrates with CI/CD Pipelines

Docker’s integration with CI/CD tools like Jenkins and GitLab CI simplifies the process of building, testing, and deploying containers.

```dockerfile
# Sample Dockerfile for CI/CD Pipeline
FROM node:14

# Set the working directory
WORKDIR /app

# Copy application files
COPY . .

# Install dependencies
RUN npm install

# Run tests
RUN npm test

# Start application
CMD ["npm", "start"]
```

In the CI pipeline:

- Docker Image Building: A Dockerfile is used to build the application image.

- Automated Testing: Docker containers provide an isolated environment to run tests, ensuring consistency across various environments.

- Deployment: Once validated, the Docker image is pushed to a container registry (e.g., Docker Hub) and deployed to production.
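The three steps above might look roughly like this in a GitLab CI pipeline. This is a sketch that assumes a runner allowed to use Docker-in-Docker and the project's built-in GitLab container registry; the `CI_*` variables are GitLab's predefined ones, while the job name and image tags are arbitrary choices.

```yaml
# .gitlab-ci.yml (sketch): build the image, which also runs the tests, then push it
stages:
  - build

variables:
  DOCKER_TLS_CERTDIR: "/certs"     # enables TLS between the job and the dind service

build-and-push:
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  script:
    # Log in to the project's registry using GitLab's predefined credentials
    - echo "$CI_REGISTRY_PASSWORD" | docker login "$CI_REGISTRY" -u "$CI_REGISTRY_USER" --password-stdin
    # The Dockerfile runs `npm test`, so a failing test fails the build
    - docker build -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
```

Tagging with the commit SHA rather than `latest` keeps every pipeline run traceable to a specific image.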

Kubernetes and CI/CD: Helm Charts, Argo CD, and Flux

Kubernetes facilitates continuous delivery with tools that help automate the deployment of containerized applications:

- Helm Charts: Helm is a package manager for Kubernetes that enables the installation and management of Kubernetes applications. A Helm chart simplifies Kubernetes app deployment by grouping necessary resources together.

```bash
# Sample Helm Chart deployment
helm install myapp ./myapp-chart
```

- Argo CD: A declarative, GitOps-based continuous delivery tool for Kubernetes that synchronizes applications with a Git repository.

- Flux: Another GitOps tool that ensures your Kubernetes clusters are always in sync with your Git repository.

These tools automate and streamline deployments, ensuring a smooth CI/CD process for Kubernetes-managed applications.
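As an illustration of the GitOps approach, an Argo CD `Application` resource along these lines tells Argo CD which Git path to keep a namespace in sync with. The repository URL, path, and namespaces are placeholders, and the sketch assumes Argo CD is installed in the `argocd` namespace.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: myapp
  namespace: argocd                 # assumed Argo CD installation namespace
spec:
  project: default
  source:
    repoURL: https://git.example.com/team/myapp-deploy.git   # placeholder repository
    targetRevision: main
    path: k8s                       # directory holding the manifests (assumed)
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD itself runs in
    namespace: myapp
  syncPolicy:
    automated:
      prune: true                   # delete resources that were removed from Git
      selfHeal: true                # revert manual changes made in the cluster
```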

Best Practices for Automating Deployments Using Docker and Kubernetes

- Use version-controlled configurations: Store Kubernetes YAML files and Helm charts in a Git repository for consistent deployments.

- Implement automated rollbacks: Use tools such as Helm (`helm rollback`) or Argo CD, or the built-in `kubectl rollout undo` command, to roll back quickly if a deployment fails.

- Optimize resource requests and limits: Set CPU and memory limits in Kubernetes pods to ensure efficient resource allocation and avoid performance issues.

Docker Limitations and Challenges

While Docker is a powerful tool, it has several limitations that need attention, especially when scaling up to more complex systems.

Dynamic IP Addressing and Ephemeral Data Storage

- Dynamic IP Addressing: In Docker, containers are assigned dynamic IP addresses that can change whenever a container is restarted or recreated. This can complicate networking for applications requiring fixed IPs or stateful communication.

- Ephemeral Data Storage: Containers are designed to be ephemeral, meaning any data written inside the container's own filesystem is lost when the container is removed and recreated. To persist data, Docker uses volumes.

```bash
# Docker command to create a volume
docker volume create mydata
```

While volumes allow for persistent storage, the configuration process can be cumbersome in larger environments.
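One way to keep volume configuration manageable is to declare it in a Compose file. The sketch below mounts the `mydata` volume created by the command above into a database container; the Postgres image, password, and mount path are assumptions chosen for illustration.

```yaml
# docker-compose.yml (sketch): attach an existing named volume to a service
services:
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example          # placeholder; use a secret in real setups
    volumes:
      - mydata:/var/lib/postgresql/data   # data survives container removal
volumes:
  mydata:
    external: true    # reuse the volume created with `docker volume create mydata`
```

With `external: true`, Compose expects the volume to exist already and will not delete it when the stack is torn down.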

Scaling and Networking Issues in Single-Host Deployments

Docker excels at running containers on a single host, but managing multi-container applications across multiple hosts (for instance, in microservices) can become complex. Docker Swarm offers some orchestration capabilities, but it falls short in handling the complexity of large-scale environments.

For instance, scaling services manually or addressing network failures in multi-container apps can become an administrative burden, highlighting Docker's limitations for production-ready orchestration.

How Kubernetes Addresses Docker’s Limitations

Kubernetes addresses many of Docker’s challenges, providing a more robust solution for large-scale production systems.

- Service Discovery and Static IPs: Kubernetes gives each Service a stable DNS name and virtual IP address, so clients are unaffected when the IP addresses of the underlying pods change.

- Persistent Storage: Kubernetes supports persistent storage with Persistent Volumes (PVs) and Persistent Volume Claims (PVCs), allowing data to persist even when containers are restarted.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

- Scaling: Kubernetes has powerful features for automatic scaling (Horizontal Pod Autoscaling) and can handle traffic spikes and failures seamlessly through replication and self-healing capabilities.
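Horizontal Pod Autoscaling is configured declaratively. The following sketch assumes a Deployment named `webapp` with CPU requests set on its containers and a metrics source such as metrics-server running in the cluster; the replica bounds and the 70% target are arbitrary example values.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: webapp
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: webapp               # assumed Deployment name
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # scale out when average CPU exceeds 70% of requests
```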

By combining Docker’s containerization with Kubernetes’ orchestration and management features, developers can build and scale applications with much more efficiency and reliability.

Docker vs Kubernetes in Edge Computing

Edge computing brings computational power closer to data sources such as IoT devices, sensors, and mobile devices, which helps reduce latency and improve real-time processing. Docker and Kubernetes are key players in edge computing, but they serve different roles depending on the scale and complexity of deployments.

How Docker Works in Edge Devices and IoT

In edge computing, Docker is often used to containerize lightweight applications that need to run directly on edge devices or IoT devices. Docker containers are efficient because they provide a consistent runtime environment regardless of the underlying hardware or operating system. This makes it easy to deploy and update software across numerous distributed edge devices without worrying about compatibility issues.

Docker also supports multi-architecture containers, which allows containers to run on various hardware types such as ARM-based edge devices or x86 systems. As a result, developers can build edge applications that are portable and scalable.

Example: Edge devices like Raspberry Pi running Docker containers can efficiently process local data and then push necessary data to a central server or cloud for further analysis.

Kubernetes’ Role in Orchestrating Edge Deployments

While Docker is excellent for packaging and deploying applications at the edge, Kubernetes can manage and orchestrate these containers across a distributed network of edge devices. Kubernetes ensures that edge workloads are efficiently distributed, scaled, and monitored, providing automated updates and fault tolerance.

Kubernetes can also handle the complexities of managing a large number of edge devices. For instance, Kubernetes can dynamically adjust workloads based on device availability, network conditions, or power consumption, ensuring efficient resource use at the edge.

However, the traditional Kubernetes setup is often too heavy for smaller edge devices. K3s, a lightweight version of Kubernetes, is specifically designed for edge and IoT environments, providing the full orchestration benefits of Kubernetes with reduced resource requirements.

Use Cases for Docker and Kubernetes in Edge Computing Environments

Here are some common edge computing use cases for Docker and Kubernetes:

- IoT Device Management: Docker allows developers to containerize IoT applications and manage them across devices.

- Real-time Data Processing: Edge devices running Docker containers can process data locally, minimizing the need for latency-sensitive cloud communication.

- Distributed AI Models: Kubernetes can be used to manage machine learning or AI models running at the edge, ensuring that these models are consistently updated and running at scale.

Cost Considerations: Docker vs Kubernetes

Understanding the financial implications of choosing Docker or Kubernetes is crucial for organizations of all sizes. Let's dive into a detailed cost analysis that goes beyond the surface-level comparisons.

Infrastructure Basics

The fundamental difference in infrastructure costs stems from architectural requirements. Docker operates efficiently on a single node, making it cost-effective for smaller deployments. A basic Docker setup might only need one virtual machine or server, keeping initial infrastructure costs minimal.

| Infrastructure Component | Docker | Kubernetes |
| --- | --- | --- |
| Minimum Hardware Required | Single server/VM | Multiple servers (control plane + workers) |
| Base Monthly Cloud Costs | $20-100 | $200-500 |
| Setup Time Investment | 2-4 hours | 2-5 days |

Operational Expenses

Day-to-day operations reveal significant cost variations between Docker and Kubernetes. Docker's simplicity translates to lower operational costs for small to medium deployments, while Kubernetes offers cost advantages at scale through automation and efficient resource utilization.

Personnel and Expertise

The human factor plays a crucial role in total ownership costs:

- Docker environments typically require 1-2 developers with container expertise

- Kubernetes clusters often need 2-4 dedicated DevOps engineers

- Training costs vary significantly: $500-1000 per person for Docker vs $2000-5000 for Kubernetes

| Aspect | Docker | Kubernetes |
| --- | --- | --- |
| Learning Curve | 1-2 weeks | 2-3 months |
| Team Size Required | 1-2 developers | 3-5 DevOps engineers |
| Training Costs | $500-1000/person | $2000-5000/person |
| Certification Costs | Optional ($200-400) | Required ($300-500 per cert) |

Cloud Provider Considerations

When using cloud services, costs can accumulate in different ways:

Docker Cloud Costs:

- Basic VM or instance charges

- Container registry fees ($0-7 per user/month)

- Simple load balancer costs

- Storage for container images

Kubernetes Cloud Costs:

- Control plane fees ($70-200 per month)

- Worker node costs (varies by size and quantity)

- Load balancer and networking charges

- Persistent volume costs

- Container registry fees

Hidden Cost Factors

Several often-overlooked costs can impact your total investment:

- Monitoring and Logging
  - Docker: Basic monitoring tools often suffice
  - Kubernetes: Requires comprehensive monitoring solutions

- Backup and Recovery
  - Docker: Straightforward backup procedures
  - Kubernetes: Complex cluster-wide backup systems

- Security Tools
  - Docker: Container scanning and basic security
  - Kubernetes: Multi-layer security solutions

Cost Optimization Strategies

To maximize value from either platform:

For Docker:

- Optimize container resource allocation

- Use docker-compose for efficient multi-container apps

- Implement basic monitoring and alerting

- Leverage cloud provider reserved instances

For Kubernetes:

- Implement autoscaling policies

- Use spot instances for non-critical workloads

- Set resource quotas and limits

- Optimize cluster size and node pools
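For the "set resource quotas and limits" item, a ResourceQuota caps what a single namespace can consume, which helps keep cluster spend predictable. The namespace `team-a` and all of the figures below are made-up example values.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-quota
  namespace: team-a          # hypothetical namespace
spec:
  hard:
    requests.cpu: "4"        # total CPU all pods in the namespace may request
    requests.memory: 8Gi
    limits.cpu: "8"          # total CPU limit across all pods
    limits.memory: 16Gi
    pods: "20"               # maximum number of pods
```

When a quota covers CPU or memory, pods in that namespace must declare the corresponding requests and limits or they will be rejected.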

Real-world Cost Scenarios

Small Application (5 services):

- Docker: $150-300/month total infrastructure cost

- Kubernetes: $400-800/month total infrastructure cost

Large Application (50+ services):

- Docker: Complex management might increase costs

- Kubernetes: Economies of scale make it more cost-effective

Making the Right Choice

The cost-effective choice depends on your specific needs:

Choose Docker when:

- Running small to medium applications

- Operating with a limited DevOps team

- Needing simple deployment and management

- Working under significant budget constraints

Choose Kubernetes when:

- Managing large-scale applications

- Requiring advanced automation

- Operating multiple environments

- Planning for long-term scalability

Remember that costs evolve with your application's growth. Starting with Docker and migrating to Kubernetes when needed can be a pragmatic approach to managing costs while maintaining flexibility for future scaling.

| Scale Factor | Docker Decision Point | Kubernetes Decision Point |
| --- | --- | --- |
| Services | 1-20 | 20+ |
| Daily Transactions | Up to 10,000 | 10,000+ |
| Team Size | 1-5 developers | 5+ developers |
| Budget | Limited | Flexible |

This comprehensive analysis shows that while Docker offers cost advantages for smaller deployments, Kubernetes can provide better long-term value for larger, more complex applications. The key is matching your choice to your current needs while considering future growth requirements.

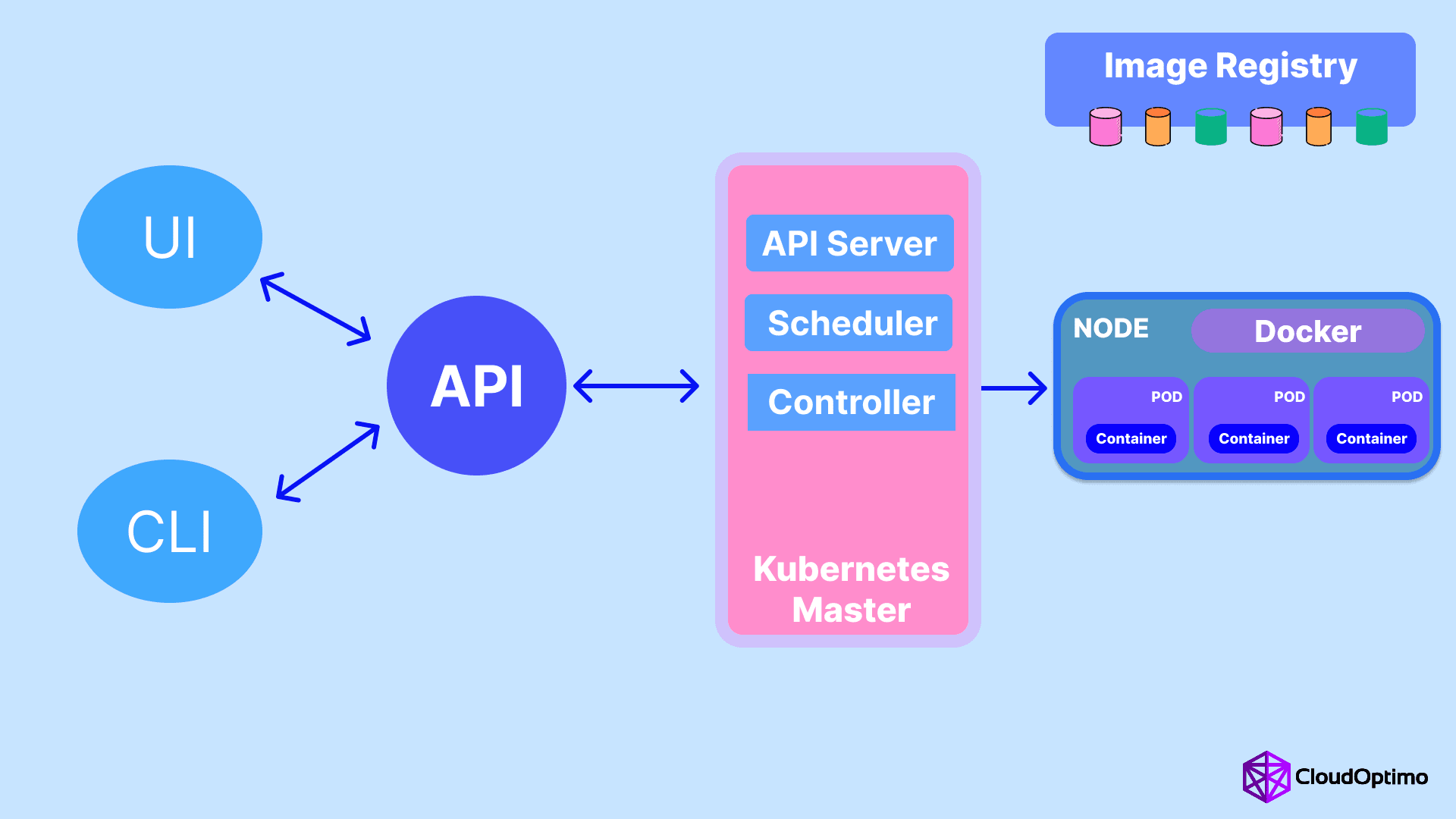

Using Docker and Kubernetes Together

The combination of Docker and Kubernetes powers many modern microservices-based applications. While Docker focuses on packaging applications into containers, Kubernetes takes care of managing those containers in a scalable, automated, and efficient way.


How Docker Packages and Runs Containers, and How Kubernetes Orchestrates Them

Docker: Docker containers are built to package an application along with its dependencies (libraries, binaries, configurations) into a single unit. This ensures that the application will run consistently across various environments, whether it's on a developer's machine or in a production environment.

Example: A simple Dockerfile for a web application could look like this:

```dockerfile
FROM node:14
WORKDIR /app
COPY . .
RUN npm install
CMD ["npm", "start"]
```

Kubernetes: Kubernetes, on the other hand, handles the orchestration of those containers across a cluster of nodes. It ensures that containers are deployed efficiently, can be automatically scaled, and remain resilient. Kubernetes works by deploying containers in pods (groups of containers) and uses a control plane to manage the entire cluster.

Kubernetes Deployment Example:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp
spec:
  replicas: 3                  # run three identical pods
  selector:
    matchLabels:
      app: webapp
  template:
    metadata:
      labels:
        app: webapp
    spec:
      containers:
        - name: webapp-container
          image: node:14       # replace with the image built from your Dockerfile
          ports:
            - containerPort: 8080
```

Benefits of Combining Docker and Kubernetes for Scalability and Resilience

When Docker and Kubernetes work together, they bring out the best in both technologies:

- Scalability: Docker containers can be spun up quickly and scale horizontally with Kubernetes, which automatically manages the scaling process based on real-time demand.

- Resilience: Kubernetes ensures high availability by monitoring containers, restarting failed containers, and redistributing them across the cluster to maintain service uptime.
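Kubernetes decides when to restart a container or stop sending it traffic based on health probes. The fragment below sketches how probes could be added to a container spec; the `/healthz` and `/ready` paths are hypothetical endpoints the application would need to implement, and the timings are example values.

```yaml
# Fragment of a pod template's container list (sketch)
containers:
  - name: webapp-container
    image: node:14
    ports:
      - containerPort: 8080
    livenessProbe:             # failing this causes the container to be restarted
      httpGet:
        path: /healthz         # hypothetical health endpoint
        port: 8080
      initialDelaySeconds: 10
      periodSeconds: 10
    readinessProbe:            # failing this removes the pod from Service endpoints
      httpGet:
        path: /ready           # hypothetical readiness endpoint
        port: 8080
      periodSeconds: 5
```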

Best Practices for Integrating Docker Containers into Kubernetes Clusters

- Use Helm Charts: Helm is a package manager for Kubernetes that simplifies the process of deploying complex applications. It packages all the necessary Kubernetes resources (like deployments, services, and ingress rules) into a single, versioned unit.

Helm Chart Example:

```bash
helm install myapp ./myapp-chart
```

- Define Resource Limits: Set CPU and memory requests and limits in your Kubernetes pods to prevent resource contention and ensure optimal performance.

```yaml
resources:
  requests:
    memory: "64Mi"
    cpu: "250m"
  limits:
    memory: "128Mi"
    cpu: "500m"
```

- Implement Monitoring: Use tools like Prometheus and Grafana to monitor the health and performance of your containers running in Kubernetes. This helps in troubleshooting and scaling decisions.
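As one possible starting point for monitoring, Prometheus can discover pods through the Kubernetes API. This sketch of a `prometheus.yml` fragment assumes Prometheus runs inside the cluster with permission to list pods, and it relies on the common (but not built-in) convention of annotating pods with `prometheus.io/scrape: "true"`.

```yaml
# prometheus.yml fragment (sketch): scrape only pods that opt in via annotation
scrape_configs:
  - job_name: kubernetes-pods
    kubernetes_sd_configs:
      - role: pod              # discover every pod through the Kubernetes API
    relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep           # drop targets without the opt-in annotation
        regex: "true"
```

Grafana can then use this Prometheus instance as a data source for dashboards.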

Alternatives to Docker and Kubernetes

While Docker and Kubernetes dominate the containerization landscape, there are several alternatives that might better suit specific use cases.

Podman, Docker Swarm, and HashiCorp Nomad as Alternatives

- Podman: Podman is a containerization tool that is compatible with Docker but operates without a central daemon, making it more secure. It can run containers in rootless mode, providing more isolation and security.

- Docker Swarm: Docker Swarm is Docker’s native orchestration tool. While Kubernetes provides a more robust solution for managing complex multi-container environments, Docker Swarm is simpler to set up and can handle basic container orchestration tasks.

- HashiCorp Nomad: Nomad is a flexible orchestration tool designed for both containerized and non-containerized applications. It is lightweight and can run on a variety of environments, offering simpler orchestration compared to Kubernetes.
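To give a sense of how lightweight Docker Swarm configuration can be, here is a sketch of a stack file that runs three replicas of a web server. The service name and image are example choices, and the file assumes a Swarm has already been initialized with `docker swarm init`.

```yaml
# stack.yml (sketch): deploy with `docker stack deploy -c stack.yml demo`
services:
  web:
    image: nginx:stable
    ports:
      - "80:80"
    deploy:
      replicas: 3                 # Swarm keeps three tasks of this service running
      restart_policy:
        condition: on-failure
```

The format is the same Compose syntax used for local development, which is a large part of Swarm's appeal for small teams.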

Pros and Cons of Docker and Kubernetes Alternatives

| Alternative | Pros | Cons |
| --- | --- | --- |
| Podman | Secure, daemonless, Docker-compatible | Lacks some features of Docker (e.g., multi-container orchestration) |
| Docker Swarm | Simple, native to Docker, easier to configure and use | Lacks advanced features and scalability of Kubernetes |
| HashiCorp Nomad | Lightweight, flexible, handles both containers and VMs | Limited ecosystem and community support compared to Kubernetes |

How These Alternatives Compare in Specific Use Cases

- Podman is great for individual developers or smaller applications that require enhanced security and a daemonless approach.

- Docker Swarm works well for small to medium-sized applications where complex orchestration and scaling features aren’t needed.

- HashiCorp Nomad is ideal for environments that need to manage both containers and non-container workloads, offering more flexibility than Kubernetes in mixed environments.

Future Trends in Containerization and Orchestration

As the world of cloud-native development continues to evolve, so too do the technologies that power it. Docker and Kubernetes have laid a solid foundation for modern application deployment and management, but the future of containerization and orchestration is looking even more exciting with new innovations on the horizon.

Upcoming Trends: Serverless Containers, Containerized AI, and More

- Serverless Containers: The future of containerization is moving toward serverless architectures. Serverless containers allow developers to focus on writing code without worrying about infrastructure management. With this trend, Kubernetes will continue to evolve, enabling seamless auto-scaling, resource management, and even dynamic provisioning of serverless containers.

Example: Platforms like AWS Fargate enable serverless containers by automatically managing the underlying infrastructure, and Kubernetes can integrate with such platforms to scale applications on demand.

- Containerized AI: Artificial Intelligence (AI) and Machine Learning (ML) workloads are increasingly being containerized to make deployment and scaling more efficient. Docker’s lightweight containers allow AI models to be easily packaged, while Kubernetes orchestrates their scaling and management across multiple nodes for distributed computing. Kubernetes can also optimize resources for intensive ML tasks through specialized hardware like GPUs and TPUs.

- AI-Powered Container Management: Emerging AI technologies are being integrated into orchestration platforms, enabling better decision-making for resource allocation and predictive scaling. AI can optimize container placement, reduce operational costs, and improve overall system performance in real-time.
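For the GPU case mentioned above, specialized hardware is requested like any other resource. This sketch assumes a cluster whose nodes have NVIDIA GPUs and the NVIDIA device plugin installed, which is what exposes the `nvidia.com/gpu` resource; the pod name and image are placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gpu-inference              # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: model
      image: registry.example.com/ml/model-server:1.0.0   # placeholder image
      resources:
        limits:
          nvidia.com/gpu: 1        # schedule onto a node with one free GPU
```

The scheduler places the pod only on a node with an unallocated GPU, so the workload never lands on hardware that cannot run it.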

The Role of Kubernetes in Multi-Cloud and Hybrid Cloud Environments

Kubernetes is increasingly seen as the go-to solution for managing containers across multi-cloud and hybrid cloud environments. The flexibility and scalability offered by Kubernetes allow organizations to run applications across multiple cloud providers, as well as on-premise infrastructure.

- Multi-Cloud Kubernetes: With Kubernetes, organizations can avoid vendor lock-in by running workloads across several cloud platforms simultaneously (e.g., AWS, Google Cloud, Azure). Kubernetes clusters across these platforms can be seamlessly managed and scaled.

- Hybrid Cloud Kubernetes: For businesses that have both on-premise infrastructure and cloud services, Kubernetes provides a unified framework to manage workloads between the two. Kubernetes abstracts the underlying infrastructure, enabling applications to move between cloud and on-premise environments with minimal disruption.

How Emerging Technologies Could Impact Docker and Kubernetes in the Future

- Edge Computing and IoT: The integration of Docker and Kubernetes with edge computing and IoT devices is expected to grow. Kubernetes is already being adapted to lightweight versions (like K3s) to handle IoT workloads, where containers are deployed closer to data sources for real-time processing. With increased automation and intelligence at the edge, containers will play a vital role in distributed data processing.

- Blockchain: As blockchain technology evolves, Docker and Kubernetes may also become useful for orchestrating decentralized applications (dApps) and managing the containers running across distributed networks. Kubernetes is built to coordinate workloads across many nodes, which fits the distributed architecture of blockchain networks and makes this a promising area for future development.

Conclusion

Docker and Kubernetes are two of the most powerful technologies in the modern software development lifecycle. By understanding their distinct roles and how they complement each other, developers and IT teams can harness their full potential for containerization and orchestration.

When to Use Docker and Kubernetes, and How They Complement Each Other

- Use Docker for smaller applications, local development environments, or testing scenarios.

- Use Kubernetes when scaling applications across multiple hosts or managing complex, large-scale production systems.

- Together, Docker provides the containerization, while Kubernetes ensures containers are efficiently deployed, scaled, and maintained.

Final Thoughts on Choosing the Right Tool for Your Containerization Needs

Ultimately, the decision to use Docker or Kubernetes—or both together—depends on the complexity and scale of your application. Docker is ideal for lightweight, isolated containers, while Kubernetes provides the orchestration needed for larger, distributed systems. By combining both tools, developers can create highly scalable, portable, and resilient applications.