In recent years, GPU instances have revolutionized how we approach complex computational tasks. Initially developed for graphics rendering, GPUs have evolved into powerful parallel processors capable of accelerating a variety of workloads, including machine learning, scientific simulations, and artificial intelligence (AI) model training. To understand why GPU instances are so essential today, let’s take a quick look at the history and transformative journey of GPUs.

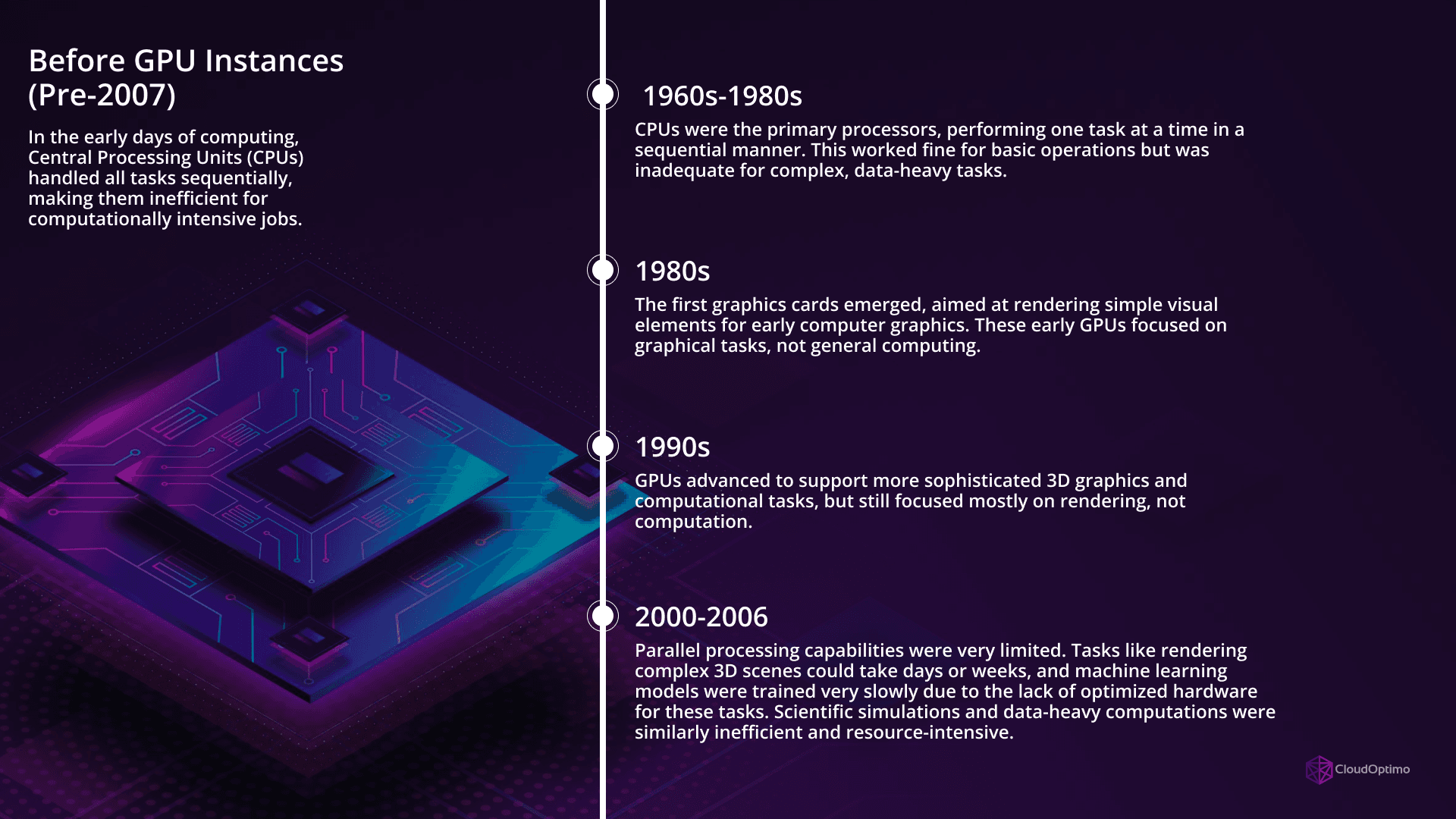

Before GPU Instances (Pre-2007)

Early days of computing, Central Processing Units (CPUs) handled all tasks sequentially, making them inefficient for computationally intensive jobs.

Here’s a breakdown of the timeline before GPUs became essential for parallel processing:

- 1960s-1980s: CPUs were the primary processors, performing one task at a time in a sequential manner. This worked fine for basic operations but was inadequate for complex, data-heavy tasks.

- 1980s: The first graphics cards emerged, aimed at rendering simple visual elements for early computer graphics. These early GPUs focused on graphical tasks, not general computing.

- 1990s: GPUs advanced to support more sophisticated 3D graphics and computational tasks, but still focused mostly on rendering, not computation.

- 2000-2006: Parallel processing capabilities were very limited. Tasks like rendering complex 3D scenes could take days or weeks, and machine learning models were trained very slowly due to the lack of optimized hardware for these tasks. Scientific simulations and data-heavy computations were similarly inefficient and resource-intensive.

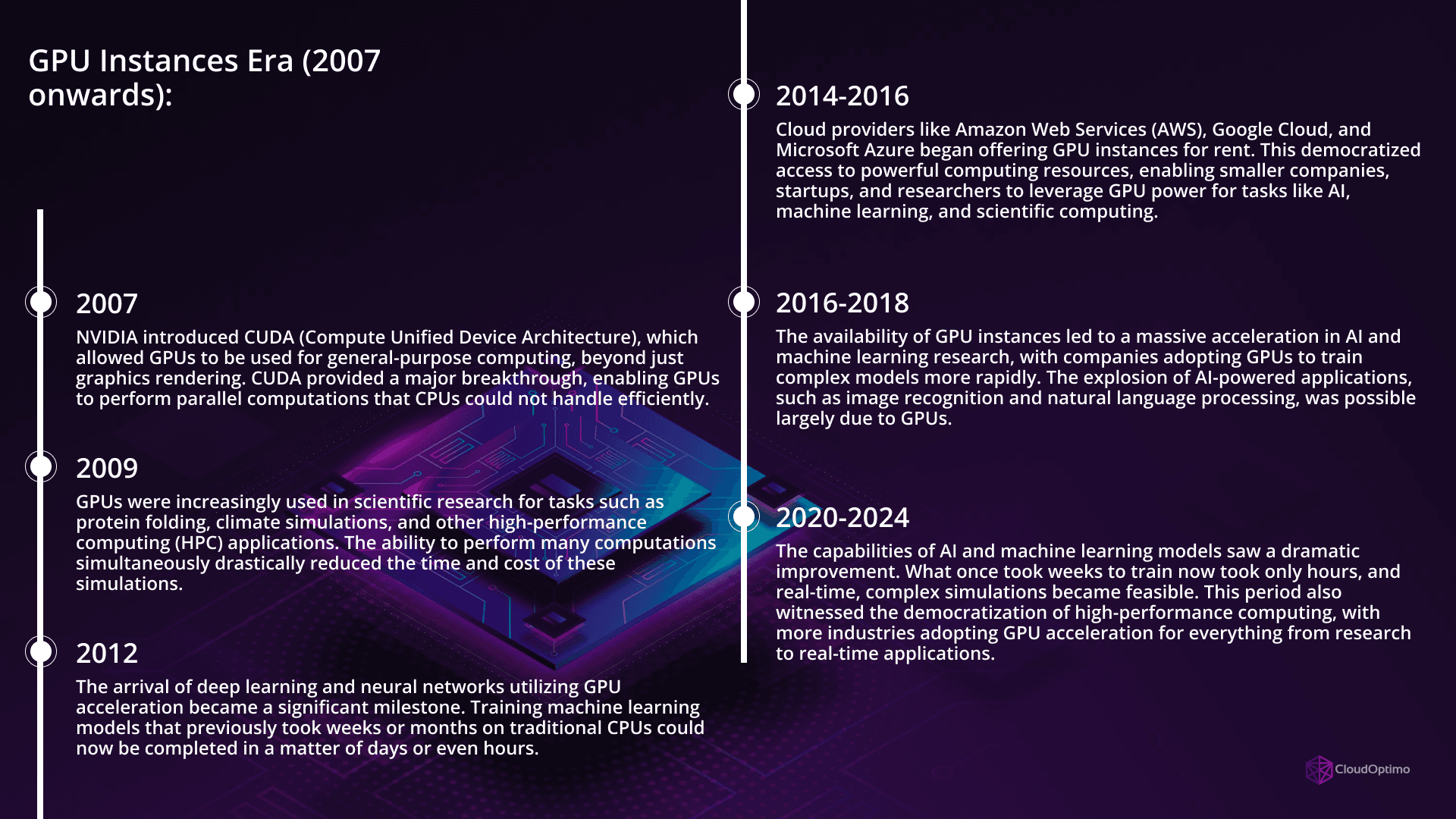

GPU Instances Era (2007 onwards):

- 2007: NVIDIA introduced CUDA (Compute Unified Device Architecture), which allowed GPUs to be used for general-purpose computing, beyond just graphics rendering. CUDA provided a major breakthrough, enabling GPUs to perform parallel computations that CPUs could not handle efficiently.

- 2009: GPUs were increasingly used in scientific research for tasks such as protein folding, climate simulations, and other high-performance computing (HPC) applications. The ability to perform many computations simultaneously drastically reduced the time and cost of these simulations.

- 2012: The arrival of deep learning and neural networks utilizing GPU acceleration became a significant milestone. Training machine learning models that previously took weeks or months on traditional CPUs could now be completed in a matter of days or even hours.

- 2014-2016: Cloud providers like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure began offering GPU instances for rent. This democratized access to powerful computing resources, enabling smaller companies, startups, and researchers to leverage GPU power for tasks like AI, machine learning, and scientific computing.

- 2016-2018: The availability of GPU instances led to a massive acceleration in AI and machine learning research, with companies adopting GPUs to train complex models more rapidly. The explosion of AI-powered applications, such as image recognition and natural language processing, was possible largely due to GPUs.

- 2020-2024: The capabilities of AI and machine learning models saw a dramatic improvement. What once took weeks to train now took only hours, and real-time, complex simulations became feasible. This period also witnessed the democratization of high-performance computing, with more industries adopting GPU acceleration for everything from research to real-time applications.

Key Transformative Periods

The evolution of GPU instances can be broken down into these transformative periods:

- Pre-2007: CPUs were primarily responsible for computation, with limited parallel processing capabilities.

- 2007-2014: The emergence of GPU computing, driven by NVIDIA’s CUDA, began to open up new possibilities for scientific and computational research.

- 2014-2020: The mainstream adoption of GPU instances, both in the cloud and on-premises, accelerated developments in AI, machine learning, and scientific computing.

- 2020-Present: The widespread adoption of GPU computing has revolutionized industries, dramatically reducing the time for training AI models and enabling real-time, large-scale simulations.

As GPUs continued to evolve, cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud started offering GPU-powered instances to users. These instances gave businesses of all sizes access to high-performance computing on-demand, eliminating the need to invest in expensive hardware. Now, startups, researchers, and even small businesses could tap into the power of GPUs for AI, data analysis, and simulations

Basic Concepts of GPU Computing

So, why are GPUs so special? Let’s take a closer look at some key elements that make them ideal for computational workloads:

- Tensor Cores: Specialized hardware units in modern GPUs (e.g., NVIDIA A100) designed to accelerate matrix computations critical for AI/ML tasks.

- NVSwitch and InfiniBand: High-bandwidth interconnect technologies enabling efficient communication between multiple GPUs in a single node or across clusters.

- Memory Hierarchy: GPUs have high-bandwidth memory (HBM) and caches to ensure faster data access during computations

But these features alone aren’t enough—efficient communication between GPUs and other components is crucial. This is where interconnect technologies come in.

Understanding Interconnect Technologies

Building on these foundational GPU concepts, let’s dive deeper into three critical interconnect technologies that enhance the power of GPUs:

- NVSwitch:

- NVIDIA's high-speed GPU interconnect

- Enables 600 GB/s GPU-to-GPU communication

- Critical for multi-GPU workloads

- Reduces data transfer bottlenecks

- InfiniBand:

- High-performance networking technology

- Offers low latency (sub-microsecond)

- Supports Remote Direct Memory Access (RDMA)

- Used in Azure's NCv4 series

- Tensor Core Technology:

- Specialized processing units for AI workloads

- Up to 5x performance boost for AI operations

- Supports mixed-precision training

- Available in V100 and A100 GPUs

Exploring AWS, Azure, and GCP GPU Instances

Now that we’ve covered the key technologies, let’s look at how AWS, Azure, and GCP have integrated GPU instances to meet computational needs. Each cloud provider offers specialized GPU instances, optimized for different workloads:

AWS GPU Instance Types

AWS leads the GPU computing market with diverse offerings categorized into P4, P3, and G5 instances, each optimized for specific workloads:

P4 Instances (Latest Generation)

AWS P4 instances are designed for the most demanding AI and HPC workloads. Built on NVIDIA A100 Tensor Core GPUs, these instances integrate NVSwitch technology, providing seamless GPU-to-GPU bandwidth of up to 600 GB/s. This configuration ensures unmatched performance for distributed deep learning training, inference, and HPC applications at a massive scale.Key Features:

- Up to 8 NVIDIA A100 GPUs per instance.

- Elastic Fabric Adapter (EFA) for low-latency, multi-node GPU training.

- Network throughput of up to 400 Gbps, ideal for large-scale AI models like GPT-3.

- Optimized for workloads requiring both high throughput and low latency, such as drug discovery and autonomous vehicle simulations.

P3 Instances

AWS P3 instances offer a cost-effective balance of performance and price. Equipped with NVIDIA V100 Tensor Core GPUs, they are ideal for deep learning training, financial modeling, and seismic simulations. These instances use NVLink technology to enable 300 GB/s GPU-to-GPU bandwidth, delivering the computational power needed for resource-intensive workloads.Key Features:

- Up to 8 NVIDIA V100 GPUs per instance.

- Suitable for models requiring FP16 and FP32 precision.

- Optimized for mid-sized machine learning workloads, offering scalability and efficiency.

G5 Instances

Targeted at graphics-intensive workloads, AWS G5 instances are powered by NVIDIA A10G GPUs, specifically designed for rendering, video editing, and gaming applications. These instances cater to tasks like real-time 3D rendering, game streaming, and content creation.Key Features:

- Up to 8 NVIDIA A10G GPUs per instance.

- Enhanced for tasks requiring visual processing, such as media encoding or architectural rendering.

- A cost-effective solution for workloads prioritizing GPU acceleration in graphics-heavy environments.

To understand the technological edge offered by these instances, here's a comparison between the NVIDIA A100 and V100 GPUs powering P4 and P3 instances, respectively

| Feature | A100 | V100 | Performance Improvement |

| Tensor Cores | 3rd Gen Tensor Cores | 1st Gen Tensor Cores | 2.5x for ML workloads |

| Bandwidth | 600 GB/s with NVLink | 300 GB/s with NVLink | 2x higher |

| Performance | Optimized for FP16 & INT8 | Focus on FP32 and FP16 | Better INT8 support |

Memory Hierarchy

| Feature | A100 | V100 |

| L2 Cache | 40 MB | 6 MB |

| HBM2e Memory | 80 GB | 32 GB |

| Memory Bandwidth | 2,039 GB/s | 900 GB/s |

Azure GPU Solutions

Microsoft Azure’s GPU offerings are strategically categorized to support a variety of workloads, from AI/ML applications to graphics rendering, leveraging both NVIDIA and AMD GPUs. Azure's infrastructure emphasizes high-speed networking and flexibility.

NCv4 Series

Built on the NVIDIA A100 Tensor Core GPUs, Azure VMs are tailored for AI workloads requiring immense computational power. With up to 8 GPUs per VM and 200 Gbps InfiniBand networking, these VMs deliver the performance needed for large-scale AI training and HPC simulations.

Key Features:

- NVIDIA A100 GPUs, offer state-of-the-art mixed-precision capabilities.

- Multi-instance GPU support, enabling distributed computing environments.

- Optimized for industries such as healthcare (genomics) and automotive (autonomous driving).

NDv2 Series

Azure’s NDv2 series is built on NVIDIA V100 Tensor Core GPUs, making it an excellent choice for distributed AI training. These VMs cater to deep learning models and other AI workloads requiring FP16 precision and large batch sizes.

Key Features:

- Up to 8 NVIDIA V100 GPUs per instance.

- 200 Gbps InfiniBand for high-speed networking between nodes.

- Scalable for hybrid and multi-cloud machine learning workflows.

NVv4 Series

Azure NVv4 VMs employ AMD Radeon Instinct MI25 GPUs to provide cost-effective solutions for smaller workloads, such as graphics rendering and virtual desktops. These instances stand out for their GPU partitioning capabilities, which allow users to allocate only the resources they need.

Key Features:

- Cost-efficient GPU partitioning for smaller AI and graphics workloads.

- Ideal for virtualized environments requiring moderate GPU performance.

- Flexible pricing structure for businesses prioritizing cost management.

GCP GPU Options

Google Cloud Platform (GCP) differentiates itself by offering modular GPU attachments that can be customized to suit various instance types. This flexibility makes GCP a preferred choice for organizations looking for tailored GPU configurations.

A2 Instances:

GCP’s A2 instances, powered by NVIDIA A100 GPUs, provide the highest GPU density among major cloud providers, with up to 16 GPUs per node. These instances are ideal for massive-scale parallel workloads, including climate modeling and large-scale AI training.Key Features:

- Up to 16 NVIDIA A100 GPUs per node.

- 600 GB/s GPU-to-GPU bandwidth, maximizing interconnect speeds.

- Designed for workloads like reinforcement learning and recommendation systems.

T4 GPU Attachments (N1 Instances):

GCP’s T4 GPU attachments offer a cost-effective solution for inference tasks. These GPUs, designed for flexibility and performance, are ideal for workloads like video transcoding, inference at scale, and 3D rendering.Key Features:

- Supports scalable inference pipelines with optimized INT8 precision.

- Cost-efficient for graphics and AI workloads requiring moderate GPU acceleration.

- Flexible integration with N1-standard and preemptible VM instances.

Real-World Performance Examples

Understanding how these instances perform under actual workloads will help clarify the real-world implications of your choice.

For example, training ResNet-50 on 1M images provides a practical comparison of performance and costs:

Image Recognition Training: ResNet-50 on 1M Images:

| Cloud Provider | Instance Type | Training Time | Cost | Performance Highlights |

| AWS | P4d.24xlarge | 14.2 hours | $465 | Outstanding performance with NVIDIA A100 GPUs. High bandwidth ensures faster training and inference for large tasks. |

| Azure | NCv4 | 15.1 hours | $447 | Reliable performance with slightly longer training time. Lower cost makes it a good choice for budget-conscious users. |

| GCP | A2-highgpu-8g | 14.8 hours | $463 | Comparable to AWS in performance, with slight cost and time variations. Good for flexible regional deployment needs. |

Performance Analysis

Training Time

- AWS takes the lead with the fastest training time of 14.2 hours, followed closely by GCP with 14.8 hours.

- Azure takes a bit longer, with 15.1 hours, but this comes with a more affordable price.

Cost

- Azure provides the most cost-effective solution at $447, making it an attractive option for those prioritizing budget.

- GCP and AWS are competitive in price, but AWS offers the best performance for the cost, coming in at $465.

Factors Driving Performance Differences

The GPU model, memory bandwidth, and interconnect technology are key to understanding the performance differences between these providers. Let’s take a closer look:

Memory Bandwidth:

- AWS (P4d.24xlarge), Azure (NCv4), and GCP (A2-highgpu-8g) all feature 600 GB/s memory bandwidth, thanks to the use of NVSwitch.

Networking:

- AWS has a 400 Gbps network, ideal for large-scale, distributed AI tasks.

- Azure supports 200 Gbps, sufficient for AI/ML workloads and enterprise integration.

- GCP has a 100 Gbps network, great for large-scale parallel workloads but slightly less robust than AWS.

In addition to performance, let's examine the specifications across various cloud providers for a better understanding of the resources available:

| Cloud Provider | Instance Type | GPU Model | Max GPUs/Node | GPU Bandwidth | Networking | Target Workload |

| AWS | P4 | NVIDIA A100 | 8 | 600 GB/s (NVSwitch) | 400 Gbps | AI training, HPC |

| P3 | NVIDIA V100 | 8 | 300 GB/s (NVLink) | 100 Gbps | Financial modeling, ML training | |

| G5 | NVIDIA A10G | 8 | 320 GB/s (PCIe) | 100 Gbps | Graphics-intensive workloads | |

| Azure | NCv4 | NVIDIA A100 | 8 | 600 GB/s (NVSwitch) | 200 Gbps | AI/ML workloads |

| NDv2 | NVIDIA V100 | 8 | 300 GB/s (NVLink) | 200 Gbps | Distributed AI training | |

| GCP | A2 | NVIDIA A100 | 16 | 600 GB/s (NVSwitch) | 100 Gbps | Massive-scale parallel workloads |

| T4 (N1) | NVIDIA T4 | 4 | 320 GB/s (PCIe) | 50 Gbps | Cost-efficient inference, graphics |

Cost Analysis

Pricing Comparison: 4 NVIDIA V100 GPUs

Having reviewed performance, let's now turn our attention to the cost comparison for GPU instances. Here's a breakdown based on 4 NVIDIA V100 GPUs:

| Cloud Provider | Instance Type | Pricing Model | Price (per hour) |

AWS

| p3.8xlarge (us-east-1) | On-Demand | $12.24 |

| p3.8xlarge (us-east-1) | Spot | $3.67 | |

Azure

| Standard_NC24s_v3 (East US) | Pay as you go | $12.24 |

| Standard_NC24s_v3 (East US) | Spot | $1.22 | |

Google Cloud

| n2-standard-64 + 4 NVIDIA V100 GPUs | Pay as you go | $13.03 |

| n2-standard-64 + 4 NVIDIA V100 GPUs | Preemptible | $3.71 |

This table should give you a clear understanding of the pricing structure for GPU instances across the three major cloud platforms. Spot pricing can offer significant savings depending on your flexibility and tolerance for interruptions.

Key Observations:

- Spot Pricing offers significant savings across all providers. Azure's spot pricing is the lowest at $1.22, providing the most cost-effective option for flexible workloads.

Cost Optimization Strategies

Each provider offers unique cost-saving mechanisms:

| Cloud Provider | Cost-Saving Option | Description |

AWS

| Spot Instances | Up to 90% savings on compute resources. |

| Savings Plans | Commit to longer-term usage to secure discounts. | |

| Capacity Reservations | Reserve resources for critical workloads. | |

Azure

| Low-priority VMs | Similar to Spot Instances, providing cost savings for non-critical workloads. |

| Reserved VM Instances | Save costs with long-term commitment. | |

| Hybrid Benefit | Leverage existing on-premises licenses for additional savings. | |

GCP

| Preemptible VM Instances | Similar to Spot Instances, with substantial cost savings but potential interruptions. |

| Committed Use Discounts | Save on long-term usage commitments. | |

| Sustained Use Discounts | Automatically applied for consistent use over time. |

Choosing the Right Cloud Provider for GPU Instances

Your choice of AWS, Azure, or GCP for GPU instances depends on your specific requirements:

- AWS:

- Best suited for large-scale AI projects.

- Offers top-tier performance with NVIDIA A100 GPUs.

- Provides unmatched scalability and advanced interconnect technologies like NVSwitch for multi-GPU setups.

- Azure:

- Ideal for enterprise use cases and hybrid deployments.

- Seamlessly integrates with Microsoft tools and hybrid solutions.

- Offers cost-saving options like Reserved VM Instances and low-priority VMs.

- GCP:

- Known for flexibility and high GPU density.

- Excels in advanced networking for distributed workloads.

- Strong choice for custom configurations and region-specific deployments.

Choose a provider that aligns with your workload requirements, cost considerations, and scalability goals to maximize performance and efficiency for GPU-powered workloads.