As your data in Amazon S3 grows, so do the complexity and cost of managing it. S3 Lifecycle Policies offer an effective solution by automating data management and optimizing storage expenses. These policies enable seamless transitions between storage classes and scheduled deletions based on predefined rules, helping you stay in control of your cloud environment.

Let’s explore how S3 Lifecycle Policies can enhance your storage strategy, keeping your data organized.

What Are S3 Lifecycle Policies and Why Do They Matter?

S3 Lifecycle Policies are rule-based automations designed to manage the lifecycle of objects stored in S3 buckets. These policies determine how and when data moves between storage classes or when objects expire, helping organizations reduce storage costs while maintaining compliance with data retention policies.

By automating these tasks, lifecycle policies eliminate manual overhead and help enforce data governance at scale. As businesses accumulate vast datasets, these policies become critical to:

- Optimize storage costs by moving data to the appropriate S3 storage class.

- Comply with regulations via automated retention or deletion policies.

- Minimize manual intervention, streamlining workflows with predictable object transitions.

With these benefits in mind, let's explore the key components and features of S3 Lifecycle Policies.

Key Components of S3 Lifecycle Policies

To effectively utilize S3 Lifecycle Policies, it's essential to understand their building blocks. Let's break down the main components:

Rules

A rule specifies the action S3 will take on objects within a bucket based on your defined criteria. Rules can apply to the entire bucket or to a specific subset of objects.

Pro tip: Avoid creating complex, overlapping rules to prevent unpredictable behavior.

Filters

Filters allow for targeted application of rules. You can define rules for specific objects using:

- Prefix-based filtering: Targets objects whose key names begin with a given string (e.g., logs/).

- Tag-based filtering: Applies rules to objects that carry a specific tag key and value (e.g., a Project tag).

- Size-based filtering: Targets objects within a given size range.

Using a combination of these filters helps create precise policies that avoid unnecessary transitions.
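As a rough illustration of how these filters combine, the sketch below builds the `Filter` element of a lifecycle rule as a plain Python dictionary. The helper name `build_filter` and the example prefix, tag, and size values are assumptions made for this example; the field names (`Prefix`, `Tag`, `And`, `ObjectSizeGreaterThan`, `ObjectSizeLessThan`) follow the S3 lifecycle configuration format, where two or more criteria are wrapped in an `And` element.

```python
from typing import Optional


def build_filter(prefix: Optional[str] = None,
                 tags: Optional[dict] = None,
                 min_size_bytes: Optional[int] = None,
                 max_size_bytes: Optional[int] = None) -> dict:
    """Build the Filter element of a lifecycle rule (hypothetical helper)."""
    conditions = {}
    if prefix is not None:
        conditions["Prefix"] = prefix
    if min_size_bytes is not None:
        conditions["ObjectSizeGreaterThan"] = min_size_bytes
    if max_size_bytes is not None:
        conditions["ObjectSizeLessThan"] = max_size_bytes
    tag_list = [{"Key": k, "Value": v} for k, v in (tags or {}).items()]

    total = len(conditions) + len(tag_list)
    if total == 0:
        # An empty prefix matches every object in the bucket.
        return {"Prefix": ""}
    if total == 1:
        # A single criterion sits directly under Filter.
        if tag_list:
            return {"Tag": tag_list[0]}
        return conditions
    # Two or more criteria must be wrapped in an And element.
    if tag_list:
        conditions["Tags"] = tag_list
    return {"And": conditions}


# Example: logs for one project, ignoring objects of 128 KiB or less.
combined = build_filter(prefix="logs/",
                        tags={"Project": "alpha"},
                        min_size_bytes=128 * 1024)
print(combined)
```

Keeping the filter logic in one helper makes it easier to spot rules whose scopes overlap before they reach a bucket.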

Actions

Actions define what happens to objects that meet the conditions of a rule. The primary actions include:

- Transition: Move objects between storage classes.

- Expiration: Permanently delete objects from the bucket.

- Abort Multi-Part Uploads: Clean up incomplete uploads to save space.

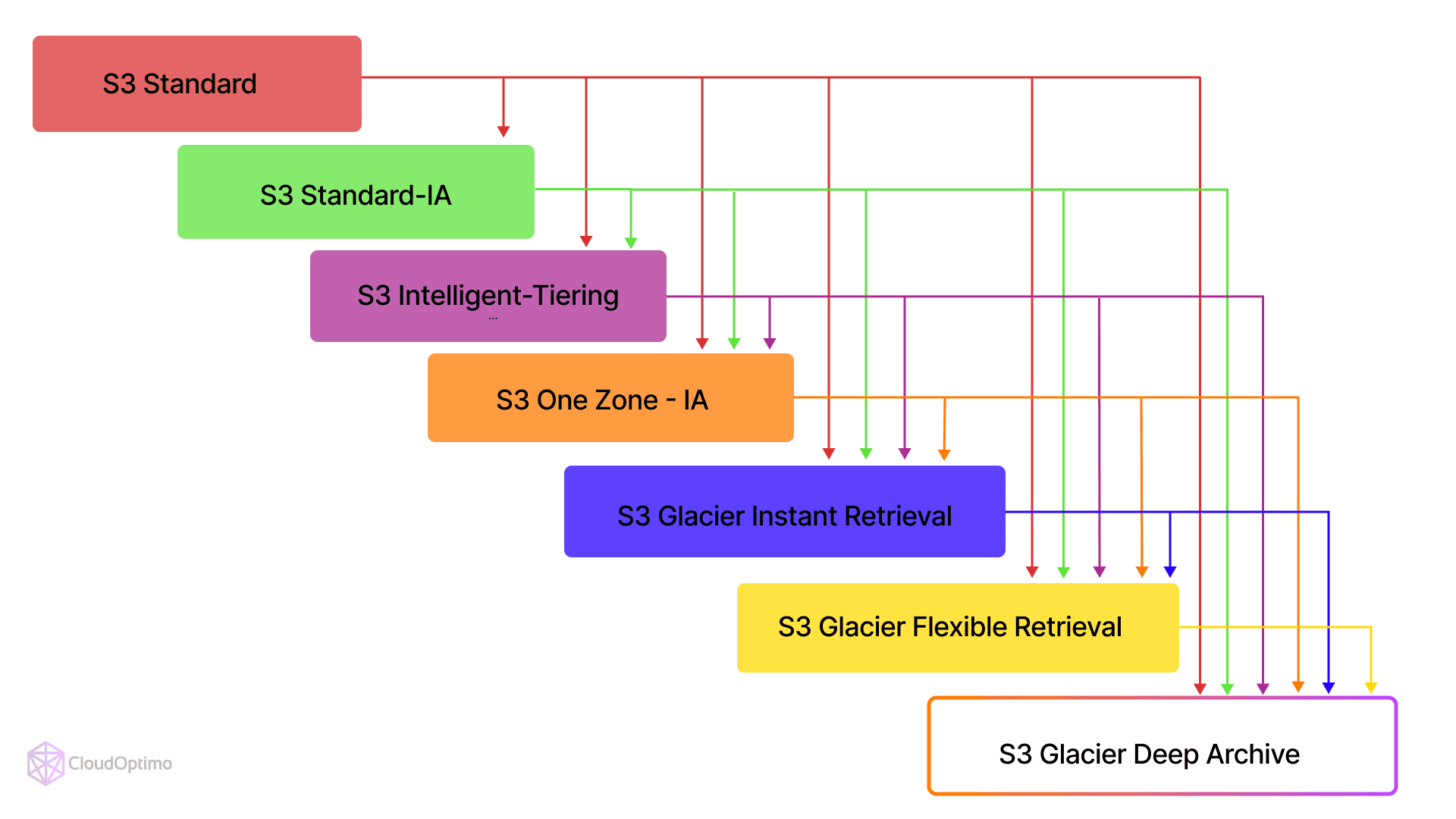

Transitions

Transitions specify when and how objects should move between different S3 storage classes. This feature is crucial for optimizing storage costs while maintaining appropriate access to data based on its lifecycle stage.

The following diagram illustrates the possible transitions between different S3 storage classes, showcasing the flexibility of S3 Lifecycle Policies in managing data across various tiers:

S3 offers multiple storage classes for cost and performance optimization:

| Storage Class | Use Case | Access Latency | Notes | Pricing (as of October 2024) |
| --- | --- | --- | --- | --- |
| S3 Standard | Frequently accessed data | Millisecond retrieval | High durability, multi-AZ storage | $0.023 per GB/month |
| S3 Intelligent-Tiering | Dynamic access patterns | Millisecond retrieval | Automatically optimizes cost | $0.023 - $0.00099 per GB/month |
| S3 Standard-IA (Infrequent Access) | Less frequently accessed data | Millisecond retrieval | Lower cost, with retrieval fees | $0.0125 per GB/month |
| S3 Glacier Instant Retrieval | Archive data with occasional access | Millisecond retrieval | Best for quick archival retrievals | $0.004 per GB/month |
| S3 Glacier Flexible Retrieval | Long-term archival storage | Minutes to hours | Lower retrieval cost than Instant | $0.0036 per GB/month + retrieval fee |
| S3 Glacier Deep Archive | Deep cold storage with rare access | Up to 12 hours | Cheapest storage class for archiving | $0.00099 per GB/month + retrieval fee |

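Note: Transition policies must account for the minimum storage duration of each class to avoid unnecessary charges. For example, Standard-IA has a 30-day minimum, so deleting or transitioning an object out of Standard-IA before it has spent 30 days there is billed as if it had stayed the full 30 days. Lifecycle rules also cannot move objects from S3 Standard to Standard-IA until they are at least 30 days old.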
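To make the minimum-duration effect concrete, here is a small arithmetic sketch. The minimum durations are the documented ones for these classes; the function names, the 30-day month, and the price of $0.01 per GB/month are illustrative assumptions, not quoted AWS rates.

```python
# Minimum storage durations in days for classes that have one.
MIN_STORAGE_DAYS = {
    "STANDARD_IA": 30,
    "GLACIER_IR": 90,      # Glacier Instant Retrieval
    "GLACIER": 90,         # Glacier Flexible Retrieval
    "DEEP_ARCHIVE": 180,
}


def billable_days(storage_class: str, days_stored: int) -> int:
    """Days billed: the actual days stored, or the class minimum if longer."""
    return max(days_stored, MIN_STORAGE_DAYS.get(storage_class, 0))


def storage_charge(storage_class: str, size_gb: float, days_stored: int,
                   price_per_gb_month: float) -> float:
    """Approximate storage charge, treating a month as 30 days (simplification)."""
    days = billable_days(storage_class, days_stored)
    return size_gb * price_per_gb_month * days / 30


# 100 GB removed from Standard-IA after only 10 days, at an illustrative price.
charge = storage_charge("STANDARD_IA", size_gb=100, days_stored=10,
                        price_per_gb_month=0.01)
print(f"billed for {billable_days('STANDARD_IA', 10)} days: ${charge:.2f}")
```

In this example the object is billed for 30 days even though it was stored for 10, which is why short-lived data is usually better left in S3 Standard.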

Expiration

Expiration policies automate the deletion of objects to reduce excess storage. These rules are useful for log management or temporary data removal.

Best practices:

- Use expiration rules to delete old object versions in buckets with versioning enabled.

- Automate log cleanup to free storage for large-scale workloads.

Now that we've covered the basics, let's dive deeper into how these components work together to create effective S3 Lifecycle Policies.

Crafting Effective S3 Lifecycle Policies

Creating an effective S3 Lifecycle Policy requires careful consideration of your data's characteristics, access patterns, and business requirements. Let's walk through the process of designing and implementing a lifecycle policy, highlighting best practices along the way.

Step 1: Analyze Your Data

Before creating any policies, identify the types of data you store, how often each type is accessed, and which compliance requirements apply:

- What types of data do you store in S3?

- How frequently is each type of data accessed?

- What are your data retention requirements?

- Are there any compliance regulations you need to adhere to?

A clear understanding of your data usage allows you to align lifecycle policies with business goals.

Mini-scenario: Imagine you're the IT manager for an e-commerce company. You store product images, transaction logs, and customer data in S3. By analyzing your data, you realize that product images are accessed frequently for the first month after upload, then less often. Transaction logs are needed for 60 days for auditing, then must be archived for 7 years. Customer data needs to be readily available but must be deleted after 5 years of inactivity due to privacy regulations. This analysis will guide your lifecycle policy creation.

Step 2: Define Transition Rules

Based on your data analysis, create transition rules to move objects between storage classes. For example:

- Move objects from S3 Standard to S3 Standard-IA after 30 days.

- Move objects from S3 Standard-IA to S3 Glacier Flexible Retrieval after 90 days.

- Move objects from S3 Glacier Flexible Retrieval to S3 Glacier Deep Archive after 180 days.

- Move logs to S3 Standard-IA after 30 days.

- Archive completed projects to Glacier Flexible Retrieval after 90 days.

- Tag sensitive data so it can be handled by separate rules, avoiding premature transitions that incur retrieval fees.

Remember to consider the minimum storage duration for each class to avoid unnecessary charges.

    {
      "Rules": [
        {
          "ID": "Move to Standard-IA after 30 days",
          "Status": "Enabled",
          "Filter": { "Prefix": "logs/" },
          "Transitions": [
            { "Days": 30, "StorageClass": "STANDARD_IA" }
          ]
        }
      ]
    }

This JSON snippet shows a simple lifecycle policy that moves objects with the prefix "logs/" to the Standard-IA storage class after 30 days.

Mini-scenario: Imagine you're managing log files for a web application. You could set a lifecycle policy to keep logs in S3 Standard for 30 days for quick access during troubleshooting, then move them to Standard-IA for cost-effective storage of older logs.
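The three-stage chain from the list above (Standard-IA at 30 days, Glacier Flexible Retrieval at 90, Deep Archive at 180) could be sketched as a single rule, together with a local sanity check. The rule ID, the `COLDNESS` ordering, and `validate_transitions` are assumptions for this example: the check covers only a few obvious mistakes and is not a substitute for the validation S3 performs when the configuration is applied.

```python
# Simplified warm-to-cold ordering of the classes used in this article.
COLDNESS = ["STANDARD_IA", "GLACIER_IR", "GLACIER", "DEEP_ARCHIVE"]

tiered_rule = {
    "ID": "tiered-archive",
    "Status": "Enabled",
    "Filter": {"Prefix": "logs/"},
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},        # Glacier Flexible Retrieval
        {"Days": 180, "StorageClass": "DEEP_ARCHIVE"},
    ],
}


def validate_transitions(transitions):
    """Return a list of problems; an empty list means the chain looks sane."""
    problems = []
    previous_days, previous_rank = -1, -1
    for t in transitions:
        storage_class, days = t["StorageClass"], t["Days"]
        if storage_class not in COLDNESS:
            problems.append(f"unknown storage class {storage_class}")
            continue
        rank = COLDNESS.index(storage_class)
        if days <= previous_days:
            problems.append(f"{storage_class}: Days must increase along the chain")
        if rank <= previous_rank:
            problems.append(f"{storage_class}: transitions must move to a colder class")
        if storage_class == "STANDARD_IA" and days < 30:
            problems.append("STANDARD_IA: objects must be at least 30 days old")
        previous_days, previous_rank = days, rank
    return problems


print(validate_transitions(tiered_rule["Transitions"]))
```

Running a check like this in code review catches reversed or too-early transitions before the configuration is ever uploaded.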

Step 3: Set Up Expiration Rules

Determine which objects can be safely deleted and when. This might include:

- Deleting log files after 90 days

- Removing temporary files after 7 days

- Expiring old versions of objects in versioned buckets after 365 days

Use versioning-specific rules to remove non-current versions while retaining the latest object version.

Mini-scenario: For your e-commerce platform, you set up an expiration rule to delete abandoned shopping cart data after 30 days to comply with data minimization principles. You also create a rule to remove non-current versions of product images after 90 days, keeping only the latest version to save storage costs while maintaining a history of recent changes.
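The expiration examples listed in this step might be expressed as follows. This is a sketch: the prefixes `logs/` and `tmp/`, the rule IDs, and the `expiration_rule` helper are hypothetical, while `Expiration` and `NoncurrentVersionExpiration` are the lifecycle configuration elements for current and non-current versions respectively.

```python
def expiration_rule(rule_id: str, prefix: str, days: int) -> dict:
    """Build a rule that expires current objects under a prefix (hypothetical helper)."""
    if days < 1:
        raise ValueError("Expiration days must be a positive integer")
    return {
        "ID": rule_id,
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},
        "Expiration": {"Days": days},
    }


expiration_rules = [
    expiration_rule("expire-logs", "logs/", 90),
    expiration_rule("expire-temp-files", "tmp/", 7),
    {
        # Applies only to versions that are no longer current.
        "ID": "expire-old-versions",
        "Status": "Enabled",
        "Filter": {"Prefix": ""},
        "NoncurrentVersionExpiration": {"NoncurrentDays": 365},
    },
]

lifecycle_configuration = {"Rules": expiration_rules}
for rule in lifecycle_configuration["Rules"]:
    print(rule["ID"])
```

In a versioned bucket, an `Expiration` action on the current version adds a delete marker rather than removing data, so the non-current rule is what actually frees storage.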

Step 4: Implement Tagging Strategies

Apply tags to objects based on project, department, or compliance category. Lifecycle rules can then filter on these tags, giving you fine-grained control over transitions and expirations. For example:

- Tag objects with their department or project

- Use tags to indicate sensitivity levels or compliance requirements

- Apply different lifecycle rules based on object tags

Mini-scenario: You implement a tagging strategy for your e-commerce data. Customer data is tagged as "PII" (Personally Identifiable Information). You create a lifecycle rule that moves objects tagged "PII" to Glacier Deep Archive 3 years after creation and deletes them after 5 years, supporting compliance with privacy regulations. Lifecycle rules match tags by exact key and value and measure age from object creation, so they cannot evaluate a "LastAccessDate" tag on their own; tracking true inactivity requires a separate process that reviews access and acts on the objects.
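A tag-filtered rule for this kind of scenario might look like the sketch below. The tag key `Classification`, its value `PII`, and the rule ID are assumptions made for illustration. The `Days` values count from object creation, and the small `rule_matches` helper mirrors the exact, case-sensitive key and value comparison that tag filters use.

```python
DAYS_PER_YEAR = 365

pii_rule = {
    "ID": "pii-archive-then-expire",
    "Status": "Enabled",
    # Hypothetical tag; the filter matches on exact key and value.
    "Filter": {"Tag": {"Key": "Classification", "Value": "PII"}},
    "Transitions": [
        {"Days": 3 * DAYS_PER_YEAR, "StorageClass": "DEEP_ARCHIVE"},
    ],
    "Expiration": {"Days": 5 * DAYS_PER_YEAR},
}


def rule_matches(rule: dict, object_tags: dict) -> bool:
    """Check locally whether an object's tags satisfy a single-tag filter."""
    tag = rule["Filter"]["Tag"]
    return object_tags.get(tag["Key"]) == tag["Value"]


print(rule_matches(pii_rule, {"Classification": "PII", "Department": "sales"}))
print(rule_matches(pii_rule, {"Classification": "public"}))
```

Because matching is exact, a consistent tag vocabulary matters more than the number of tags; a misspelled value simply falls outside the rule.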

Step 5: Test in a Staging Environment

Before applying lifecycle policies to production data, run them against a test dataset to validate transitions and expirations, and review access patterns to adjust transition timelines:

- Test your policies on a small subset of data or in a staging environment

- Use S3 Storage Class Analysis to validate your transition rules

- Monitor S3 metrics and AWS Cost Explorer to ensure your policies are having the desired effect

Mini-scenario: Before implementing your new lifecycle policies across all your e-commerce data, you create a staging bucket with a sample of your data. You apply your policies to this bucket and monitor it for a month. You notice that some product images for seasonal items (like holiday decorations) are being accessed more frequently than expected even after 30 days. Based on this, you adjust your policy to keep these tagged items in S3 Standard for 60 days instead of 30.
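Before even reaching a staging bucket, a rule can be dry-run offline. The sketch below is a deliberately simplified simulator, not a model of S3's real evaluation: `expected_state` is a hypothetical helper that handles only prefix filters, assumes objects start in S3 Standard, and ignores tags, sizes, versioning, and the delay with which S3 applies lifecycle actions.

```python
def expected_state(rule: dict, key: str, age_days: int):
    """Return 'EXPIRED', a storage class name, or None if the rule does not apply."""
    if rule.get("Status") != "Enabled":
        return None
    if not key.startswith(rule["Filter"].get("Prefix", "")):
        return None
    expiration_days = rule.get("Expiration", {}).get("Days")
    if expiration_days is not None and age_days >= expiration_days:
        return "EXPIRED"
    state = "STANDARD"  # assumption: objects are uploaded to S3 Standard
    for transition in sorted(rule.get("Transitions", []), key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            state = transition["StorageClass"]
    return state


sample_rule = {
    "ID": "logs-tiering",
    "Status": "Enabled",
    "Filter": {"Prefix": "logs/"},
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},
    ],
    "Expiration": {"Days": 365},
}

for age in (10, 45, 120, 400):
    print(age, expected_state(sample_rule, "logs/app.log", age))
```

Feeding a sample of real keys and ages through a check like this gives a quick preview of how much data each rule would touch.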

Step 6: Monitor and Optimize

Regularly review and refine your lifecycle policies, using tools such as AWS Cost Explorer and S3 metrics to measure their impact:

- Analyze cost savings and storage patterns

- Adjust rules based on changing business requirements

- Stay informed about new S3 features and storage classes

Mini-scenario: After implementing your lifecycle policies, you set up monthly reviews of your S3 costs and storage patterns. Three months in, you notice a significant cost reduction in your storage bills. However, you also see that retrieving some archived customer data for a loyalty program promotion is taking longer than ideal. You decide to adjust your policy to keep customer data in S3 Standard-IA for an additional 60 days before moving it to Glacier, balancing cost savings with operational needs.

By following these steps, you can create S3 Lifecycle Policies that effectively balance performance, cost, and compliance requirements.

Advanced Techniques and Considerations

As you become more comfortable with S3 Lifecycle Policies, consider these advanced techniques to further optimize your storage strategy:

Multi-Part Upload Management

For large objects that are uploaded using multi-part uploads, you can use lifecycle policies to:

- Abort incomplete multi-part uploads after a specified time

- Delete expired object delete markers in versioned buckets

This helps prevent unnecessary storage costs for incomplete or abandoned uploads.
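Both cleanup actions can live in one bucket-wide rule, as in this sketch. The rule ID, the 7-day default, and the `cleanup_rule` helper are assumptions for the example; `AbortIncompleteMultipartUpload` and `ExpiredObjectDeleteMarker` are the corresponding lifecycle configuration elements.

```python
import json


def cleanup_rule(days_after_initiation: int = 7) -> dict:
    """Build a bucket-wide housekeeping rule (hypothetical helper)."""
    if days_after_initiation < 1:
        raise ValueError("days_after_initiation must be at least 1")
    return {
        "ID": "housekeeping",
        "Status": "Enabled",
        "Filter": {"Prefix": ""},  # empty prefix: applies to the whole bucket
        # Abort uploads that were started but never completed.
        "AbortIncompleteMultipartUpload": {
            "DaysAfterInitiation": days_after_initiation
        },
        # Remove delete markers that no longer have any versions behind them.
        "Expiration": {"ExpiredObjectDeleteMarker": True},
    }


print(json.dumps({"Rules": [cleanup_rule()]}, indent=2))
```

A rule like this is low risk because it never touches completed, current objects, which makes it a reasonable first rule for most buckets.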

Cross-Region Replication (CRR) and Lifecycle Policies

When using Cross-Region Replication, be aware that:

- Lifecycle policies are not replicated to the destination bucket

- You may need to create separate lifecycle policies for source and destination buckets

- Transition actions do not initiate the replication of objects to the destination bucket

Versioning and Lifecycle Policies

If you're using S3 Versioning, lifecycle policies can help manage multiple versions of objects:

- Apply different rules to current and non-current versions

- Use expiration rules to delete old versions while retaining the current version

- Consider using a separate lifecycle rule to remove expired object delete markers
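As a sketch of version-aware rules, the configuration below moves non-current versions to Standard-IA after 30 days and expires them after 90 days while keeping the three most recent non-current versions. The rule ID and all numbers are illustrative. The helper `versions_to_expire` is a simplified reading of how `NoncurrentDays` and `NewerNoncurrentVersions` interact, offered as an assumption rather than an exact model of S3.

```python
versioning_rule = {
    "ID": "manage-noncurrent-versions",
    "Status": "Enabled",
    "Filter": {"Prefix": ""},
    "NoncurrentVersionTransitions": [
        {"NoncurrentDays": 30, "StorageClass": "STANDARD_IA"},
    ],
    "NoncurrentVersionExpiration": {
        "NoncurrentDays": 90,
        "NewerNoncurrentVersions": 3,  # always retain the 3 newest non-current versions
    },
}


def versions_to_expire(noncurrent_ages, noncurrent_days, keep_newest):
    """Ages (days since becoming non-current) of versions that would be removed.

    Simplified: the `keep_newest` most recent non-current versions are always
    retained; older ones are removed once they reach `noncurrent_days`.
    """
    ordered = sorted(noncurrent_ages)  # smallest age = most recently superseded
    return [age for age in ordered[keep_newest:] if age >= noncurrent_days]


expiration = versioning_rule["NoncurrentVersionExpiration"]
print(versions_to_expire([10, 40, 95, 120, 200],
                         expiration["NoncurrentDays"],
                         expiration["NewerNoncurrentVersions"]))
```

Note that the 95-day-old version survives in this example because it is still among the three newest non-current versions.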

Cost Implications of Transitions

While moving data to lower-cost storage classes can save money, be mindful of:

- Minimum storage duration charges for each storage class

- Retrieval fees for accessing data in colder storage tiers

- Per-request transition charges when lifecycle rules move objects between storage classes

Carefully model these costs to ensure your lifecycle policies are truly cost-effective.
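One way to model these costs is a per-object break-even estimate: how many months of cheaper storage it takes to pay back the one-time transition request. The function and all prices below are illustrative placeholders, not quoted AWS rates, and the estimate ignores retrieval fees and minimum billable object sizes.

```python
def breakeven_months(object_size_gb: float,
                     price_from: float,
                     price_to: float,
                     transition_price_per_1000: float) -> float:
    """Months of storage savings needed to repay one object's transition request."""
    monthly_saving = object_size_gb * (price_from - price_to)
    if monthly_saving <= 0:
        return float("inf")  # the target class is not cheaper, so it never pays back
    transition_cost = transition_price_per_1000 / 1000
    return transition_cost / monthly_saving


# Illustrative prices: $0.02 -> $0.01 per GB/month, $0.01 per 1,000 transitions.
for label, size_gb in (("1 MB object", 0.001), ("10 KB object", 0.00001)):
    months = breakeven_months(size_gb, 0.02, 0.01, 0.01)
    print(f"{label}: about {months:.0f} month(s) to break even")
```

With these placeholder numbers a 1 MB object pays back its transition in about a month, while a 10 KB object takes roughly 100 months, which suggests filtering small objects out of transition rules.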

Compliance and Data Governance

For organizations with strict compliance requirements:

- Use Object Lock to implement WORM (Write Once Read Many) policies

- Combine lifecycle policies with S3 Inventory and S3 Storage Lens for comprehensive data governance

- Consider using AWS Config rules to monitor and enforce S3 bucket policies

Real-World Use Cases

To illustrate the power of S3 Lifecycle Policies, let's explore a few real-world scenarios:

Case Study 1: Log Management for a High-Traffic Web Application

A popular e-commerce platform generates terabytes of log data daily. Their S3 Lifecycle Policy:

- Stores fresh logs in S3 Standard for immediate analysis

- Moves logs to S3 Standard-IA after 30 days

- Transitions logs to S3 Glacier Flexible Retrieval after 90 days

- Deletes logs older than 1 year

This approach balances the need for quick access to recent logs with cost-effective long-term storage for compliance purposes.
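A policy along these lines could be written as the following sketch. The `logs/` prefix and rule ID are assumptions, since the case study does not name them. The script only builds and prints the configuration; applying it is left as a comment because it needs AWS credentials and a real bucket.

```python
import json

log_policy = {
    "Rules": [
        {
            "ID": "log-retention",
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},  # hypothetical prefix
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},  # Glacier Flexible Retrieval
            ],
            "Expiration": {"Days": 365},
        }
    ]
}

policy_json = json.dumps(log_policy, indent=2)
print(policy_json)

# To apply it (not run here), the dictionary could be passed to boto3, e.g.
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="your-bucket-name", LifecycleConfiguration=log_policy)
# where "your-bucket-name" is a placeholder.
```

Keeping the policy in version control as JSON or code makes later adjustments to the day thresholds reviewable and easy to roll back.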

Case Study 2: Media Asset Management for a Content Creation Studio

A digital media company manages large video files throughout their production lifecycle:

- Active projects are stored in S3 Standard

- Completed projects are tagged and moved to S3 Standard-IA after 60 days

- Archive footage is transitioned to S3 Glacier Deep Archive after 1 year

- Different retention periods are applied based on content tags (e.g., stock footage vs. client projects)

This strategy ensures fast access to current projects while minimizing storage costs for older assets.

Case Study 3: Scientific Data Repository

A research institution managing large datasets from various experiments:

- Raw data is initially stored in S3 Standard

- Processed data is tagged and moved to S3 Standard-IA after 90 days

- Data from completed studies is archived to S3 Glacier Flexible Retrieval after 1 year

- Metadata objects are kept in S3 Standard for quick searching

- Dataset versions are managed using S3 Versioning with lifecycle rules to expire old versions

This approach allows researchers to access recent data quickly while maintaining a cost-effective long-term archive.

Best Practices

To ensure S3 Lifecycle Policies deliver maximum impact, consider these advanced practices:

Analyze Storage Patterns with Automation Tools

Leverage S3 Storage Class Analysis to track access patterns over time. Use this data to estimate when transitions to colder storage should occur, especially for high-volume, seasonal workloads.

Align Lifecycle Policies with Business Goals and Compliance Needs

Beyond storage cost savings, map your policies to specific compliance frameworks (e.g., PCI-DSS, GDPR). Combine lifecycle rules with S3 Object Lock for Write Once Read Many (WORM) enforcement, ensuring that your data governance aligns with both internal and regulatory mandates.

Use Dynamic Tagging for Fine-Grained Control

Instead of static tags, automate dynamic tagging via Lambda functions so that objects move in and out of a rule's scope based on real-time parameters such as object size growth trends or access frequency spikes. This allows policies to adapt automatically without manual reconfiguration.

Monitor the Impact with Multi-Dimensional Cost Metrics

Use AWS Cost Explorer, grouped or filtered by usage type, to monitor detailed storage trends and transition costs per storage class. Pair it with CloudWatch metrics to assess the trade-off between storage cost optimization and retrieval performance, ensuring critical workloads aren't compromised by overly aggressive transitions.

Design Policies for Multi-Account Architectures

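Use AWS Organizations with service control policies (SCPs) to keep lifecycle rules consistent across multiple accounts. SCPs restrict which actions an account may perform, so they can block unauthorized changes to lifecycle configurations, but they do not create rules themselves; deploy the rules to each bucket through your provisioning tooling. Because S3 replication does not copy lifecycle configurations, apply matching configurations to source and destination buckets to keep storage consistent across regions.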

Common Pitfalls

Despite their benefits, S3 Lifecycle Policies can introduce challenges if not implemented thoughtfully. Avoid these pitfalls:

Over-Transitioning Objects Across Classes

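Frequent transitions can incur unexpected costs: each transition is charged per request, and objects that leave a class before its minimum storage duration are billed for the remainder. Lifecycle rules only move objects toward colder classes, so bringing data back to a warmer class means a restore or copy with retrieval fees. Evaluate workload predictability before moving objects between classes; for unpredictable access patterns, S3 Intelligent-Tiering may be a better fit than a chain of transitions.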

Underestimating the Performance Trade-Off of Cold Storage

While Glacier tiers provide cost-effective storage, they can degrade performance if retrieval requirements aren’t properly planned. Use lifecycle rules in tandem with retrieval SLAs to ensure that transition rules align with the acceptable latency of your applications.

Neglecting Lifecycle Policy Conflicts with S3 Versioning

When using versioning, make sure you explicitly define rules for both current and non-current versions. Failing to address non-current version management can lead to unnecessary data buildup and increased costs. Consider automated removal of expired object delete markers to keep versioned buckets clean.

Ignoring Cross-Region Replication and Lifecycle Interactions

Remember that lifecycle policies aren't automatically replicated to destination buckets in Cross-Region Replication (CRR). Ensure you apply coordinated policies across both source and replicated buckets, especially for archival workflows where cold storage spans multiple regions.

Failing to Implement Lifecycle Rules for Incomplete Multi-Part Uploads

Incomplete multi-part uploads can accumulate unnoticed and incur unnecessary costs. Set abort rules for incomplete uploads to keep storage lean and ensure proper cost management.

Overlooking Testing in Staging Environments

Implement lifecycle policies gradually by testing on sandbox environments. Use S3 Inventory reports to verify the rules behave as expected before applying them to production workloads. A well-executed test strategy minimizes the risk of accidental deletions or storage misclassifications.

S3 Lifecycle Policies provide powerful automation tools to manage the storage and lifecycle of your data in Amazon S3. By following best practices, leveraging tags, and carefully monitoring storage metrics, you can create policies that optimize costs, performance, and compliance.

FAQs

Q: What is S3 Object Lock, and how does it relate to lifecycle policies?

A: S3 Object Lock is a feature that allows you to store objects using a write-once-read-many (WORM) model. It can be used in conjunction with lifecycle policies to ensure data retention compliance while still enabling cost-effective storage management.

Q: How do lifecycle policies interact with S3 Intelligent-Tiering?

A: S3 Intelligent-Tiering automatically moves objects between access tiers based on usage patterns. Lifecycle policies can be used to move objects into Intelligent-Tiering, but once there, the automatic tiering takes over.

Q: Can I use lifecycle policies with encrypted objects?

A: Yes, lifecycle policies work with both server-side encrypted and unencrypted objects. However, client-side encrypted objects are treated as standard objects since S3 cannot read their contents.

Q: How quickly do lifecycle transitions take effect?

A: Lifecycle transitions are not instantaneous. They typically occur within 24 hours of the specified transition time, but there's no guarantee of immediate execution.

Q: Do lifecycle policies incur additional costs?

A: While lifecycle policies themselves are free to set up, transitions between storage classes incur per-request charges, and colder classes add retrieval fees and minimum storage duration charges. Always review the AWS pricing documentation for the most up-to-date information.