1. Introduction to Cloud-Native Tools for MLOps

1.1 What Are Cloud-Native Tools?

Cloud-native tools are software solutions and platforms designed specifically for cloud environments. They are built to exploit the scalability, flexibility, and cost-efficiency of cloud services, and to integrate directly with cloud infrastructure rather than being adapted to it after the fact.

In the context of MLOps (Machine Learning Operations), cloud-native tools facilitate the automation and management of machine learning workflows, from data ingestion and model training to deployment and monitoring. They enable machine learning teams to build, deploy, and scale models in a cloud environment while ensuring reliability, performance, and ease of integration.

Unlike traditional tools that might need heavy customization to work in the cloud, cloud-native tools are purpose-built for cloud platforms, offering automatic scaling, high availability, and seamless integration with other cloud services.

1.2 Importance of Cloud-Native Tools in MLOps

Cloud-native tools matter in MLOps because they address several persistent challenges in machine learning workflows. Traditional on-premise infrastructure is often limited by resource constraints, maintenance overhead, and scalability issues. Cloud-native tools, by contrast, let teams build and scale machine learning pipelines quickly and efficiently.

Some key reasons for the growing importance of cloud-native tools in MLOps include:

- Scalability: Cloud environments offer on-demand computing resources that can be provisioned elastically, which is crucial for handling the computational demands of training machine learning models, especially with large datasets.

- Automation: Cloud-native tools can automate various aspects of the ML lifecycle, including model training, hyperparameter tuning, and deployment, reducing manual effort and increasing efficiency.

- Cost Efficiency: With cloud-native tools, companies can optimize resource usage by paying only for what they consume. This flexibility helps avoid over-provisioning, which is common with on-premise setups.

- Collaboration: Cloud platforms promote collaboration by providing centralized storage and accessible environments for teams to work together seamlessly, whether they are located in the same office or spread across the globe.

Cloud-native tools enable organizations to manage their ML workflows more effectively, accelerating time-to-market for AI-driven applications.

1.3 Benefits of Using Cloud-Native Tools for MLOps

Adopting cloud-native tools in MLOps provides a variety of benefits that help organizations enhance the efficiency, reliability, and scalability of their machine learning workflows.

- Faster Time to Market: Cloud-native tools allow rapid iteration on models, and through integration with CI/CD pipelines, they streamline the process of continuous deployment. This helps reduce the time it takes to bring ML models from development to production.

- Seamless Integration: Cloud-native tools are built to integrate effortlessly with other cloud services such as data storage, databases, monitoring services, and analytics. This ensures a smoother workflow, enabling the team to focus on developing models rather than managing infrastructure.

- Cost-Effectiveness: These tools typically follow a pay-as-you-go model, meaning companies pay only for the resources they actually use. Traditional on-premise setups, by contrast, may require significant upfront investments and ongoing maintenance costs.

- Scalability and Flexibility: Whether it's handling massive data volumes or scaling to run multiple experiments simultaneously, cloud-native tools are designed to scale dynamically according to demand. This flexibility allows organizations to avoid resource bottlenecks and maintain high-performance levels as the ML pipeline grows.

- Security and Compliance: Major cloud platforms offer built-in security and compliance features, such as data encryption, access control, and auditing. These tools help organizations meet industry-specific regulatory standards without extensive manual intervention.

2. Key Components of Cloud-Native Tools for MLOps

Cloud-native tools for MLOps consist of various components that work together to automate and optimize different stages of the machine learning pipeline. Below are the key components that form the foundation of a robust MLOps pipeline:

2.1 Data Management and Preprocessing Tools

Effective data management is crucial to any ML pipeline, as the quality of the data directly impacts the model's performance. Cloud-native tools provide capabilities for data storage, transformation, and cleaning, which are essential for preparing data for machine learning.

Some important aspects of data management and preprocessing tools include:

- Data Ingestion: Tools that can automatically ingest data from various sources such as databases, APIs, and data lakes.

- Data Transformation: These tools enable data cleaning, normalization, and feature engineering, preparing the data in the right format for training models.

- Data Versioning: Cloud-native tools provide version control for datasets, ensuring that the right version of the data is used at each step of the ML pipeline.
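The transformation and versioning steps above can be sketched in a few lines. This is a minimal illustration, not any particular platform's API: the function names `normalize` and `dataset_version` and the sample records are assumptions made for the example. The idea is that a version identifier derived from the data's content changes whenever the data changes, so a pipeline can record exactly which dataset a model was trained on.

```python
import hashlib
import json


def dataset_version(rows):
    """Return a short, deterministic version id for a list of dict records."""
    # Serialize with sorted keys so logically equal records hash identically.
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def normalize(rows, field):
    """Min-max scale one numeric field into [0, 1] (a simple transformation step)."""
    values = [r[field] for r in rows]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, field: (r[field] - lo) / span} for r in rows]


# Hypothetical sample records standing in for ingested data.
raw = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": 30.0},
    {"id": 3, "amount": 20.0},
]
clean = normalize(raw, "amount")

v_raw = dataset_version(raw)
v_clean = dataset_version(clean)
print("raw version:  ", v_raw)
print("clean version:", v_clean)
```

Because the identifier depends only on content, re-running the same preprocessing on the same input yields the same version, while any change to the data yields a new one.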

2.2 Model Training and Development Tools

Model training is at the core of the MLOps pipeline, and cloud-native tools offer powerful environments to speed up training, from hyperparameter tuning to distributed computing.

Key features of model training and development tools include:

- AutoML: Tools that allow teams to automate the process of selecting and training models, reducing the need for deep expertise.

- Distributed Training: Cloud platforms provide the resources to train models on multiple machines or GPUs, significantly accelerating the process.

- Experiment Tracking: Cloud-native tools support experiment tracking, allowing teams to log hyperparameters, training data, and results, making it easier to track and compare different models.
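To make the experiment-tracking idea concrete, here is a small in-memory sketch. The `ExperimentTracker` and `Run` classes, the parameter names, and the metric values are all hypothetical; managed platforms expose their own tracking APIs, which this does not attempt to reproduce.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    run_id: int
    params: dict
    metrics: dict = field(default_factory=dict)


class ExperimentTracker:
    """Keeps a list of runs so hyperparameters and results can be compared."""

    def __init__(self):
        self.runs = []

    def log_run(self, params, metrics):
        # Copy the dicts so later mutation by the caller cannot alter the log.
        run = Run(run_id=len(self.runs) + 1, params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric, higher_is_better=True):
        candidates = [r for r in self.runs if metric in r.metrics]
        if not candidates:
            raise ValueError(f"no run logged metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


# Illustrative values only; no real training happens here.
tracker = ExperimentTracker()
tracker.log_run({"lr": 0.1, "depth": 3}, {"val_accuracy": 0.81})
tracker.log_run({"lr": 0.01, "depth": 5}, {"val_accuracy": 0.88})
tracker.log_run({"lr": 0.001, "depth": 5}, {"val_accuracy": 0.85})

best_run = tracker.best("val_accuracy")
print(best_run.run_id, best_run.params)
```

Storing parameters and metrics together per run is what makes later comparison possible; a production tracker would also persist the dataset version and code revision alongside them.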

2.3 Deployment and Monitoring Tools

Once a model is trained, it must be deployed and continuously monitored to ensure optimal performance in production. Cloud-native tools streamline the deployment process and provide real-time monitoring capabilities.

- Model Deployment: Cloud tools offer several deployment options, such as real-time (online) inference, batch processing, or edge deployments, allowing flexibility based on the use case.

- Model Monitoring: These tools continuously track the model's performance, alerting teams when there is a drop in accuracy or other issues like data drift, ensuring that the model remains reliable over time.
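The monitoring behavior described above can be illustrated with a rolling-window check. This is a simplified sketch under stated assumptions: the `AccuracyMonitor` class, the window size, and the 0.8 threshold are hypothetical, and it assumes ground-truth labels arrive soon after each prediction, which is not always the case in production.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions and flags drops."""

    def __init__(self, window=5, threshold=0.8):
        # deque with maxlen discards the oldest outcome automatically.
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction, label):
        self.outcomes.append(prediction == label)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        # Only alert once the window is full, to avoid noise from tiny samples.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=5, threshold=0.8)
for _ in range(5):
    monitor.record(prediction=1, label=1)
healthy = monitor.should_alert()   # window holds 5 correct predictions

for _ in range(2):
    monitor.record(prediction=1, label=0)
degraded = monitor.should_alert()  # window now holds 3 correct, 2 wrong

print(healthy, degraded)
```

In a cloud deployment, the alert would typically be routed to the platform's monitoring service rather than printed.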

2.4 Continuous Integration and Continuous Deployment (CI/CD) Tools

CI/CD pipelines are essential for automating the deployment of machine learning models and ensuring that updates and improvements are quickly reflected in production.

- Automated Testing: Cloud-native CI/CD tools enable automated testing of models before deployment, ensuring they meet quality standards.

- Model Retraining: Cloud tools support automated model retraining based on new data or performance issues, ensuring that the model evolves over time without manual intervention.

- Rollback Mechanisms: If a model update causes issues, CI/CD tools allow easy rollback to the previous version, minimizing the impact of errors.
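The automated-testing and rollback ideas above can be combined in one small sketch: a candidate model is promoted only if it passes a quality gate, and the previous version can be restored if the new one misbehaves. The `ModelRegistry` class, the version labels, and the accuracy threshold are hypothetical and do not correspond to any specific CI/CD product.

```python
class ModelRegistry:
    """Tracks which model version is live, with a quality gate and rollback."""

    def __init__(self, min_accuracy):
        self.min_accuracy = min_accuracy
        self.history = []  # versions that have been live, oldest first

    @property
    def live(self):
        return self.history[-1] if self.history else None

    def promote(self, version, accuracy):
        # Quality gate: refuse to deploy a candidate below the threshold.
        if accuracy < self.min_accuracy:
            return False
        self.history.append(version)
        return True

    def rollback(self):
        if len(self.history) < 2:
            raise RuntimeError("no previous version to roll back to")
        self.history.pop()  # drop the current version
        return self.live    # the previous version is live again


registry = ModelRegistry(min_accuracy=0.85)
first = registry.promote("v1", accuracy=0.90)     # passes the gate
rejected = registry.promote("v2", accuracy=0.80)  # fails the gate, v1 stays live
third = registry.promote("v3", accuracy=0.91)     # passes the gate
restored = registry.rollback()                    # v3 caused issues, restore v1

print(first, rejected, third, restored)
```

A real pipeline would run a fuller test suite (schema checks, latency budgets, fairness checks) at the gate, but the control flow is the same.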

3. Overview of Popular Cloud-Native Tools for MLOps

Several cloud-native tools are available to help streamline the machine learning lifecycle. Below are some of the most popular tools for MLOps, each offering a unique set of features tailored for different needs.

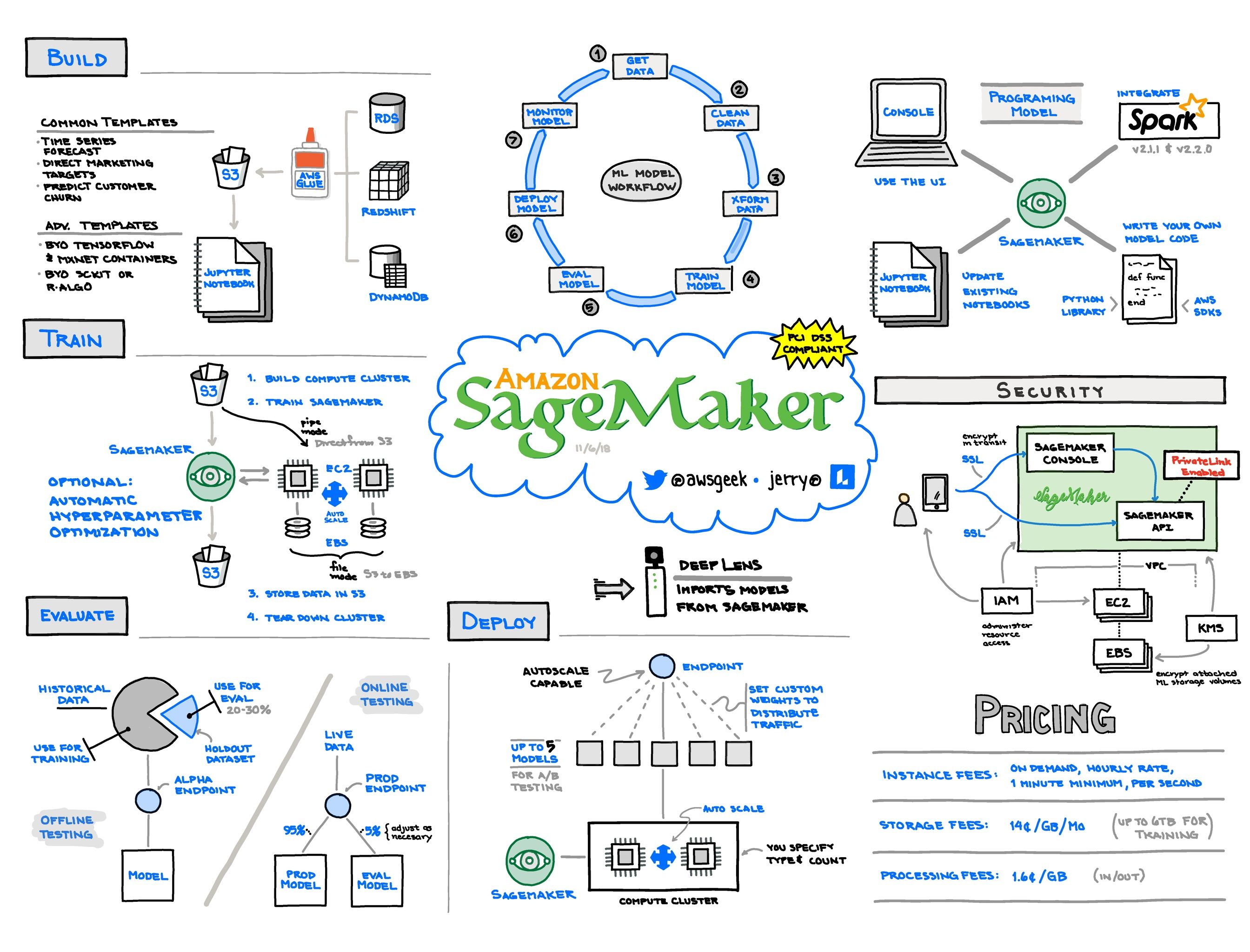

3.1 AWS SageMaker

Source: awsgeek

Amazon SageMaker, from Amazon Web Services (AWS), is a fully managed service that provides a comprehensive suite of tools for building, training, and deploying machine learning models. Its coverage runs from data preprocessing to model monitoring, so most stages of an MLOps pipeline can be handled within one service.

Key Features:

- Built-in Algorithms: SageMaker provides a range of pre-built algorithms, making it easy to get started with machine learning without needing to build models from scratch.

- Automatic Model Tuning: The service allows automated hyperparameter optimization to fine-tune models.

- Integration with Other AWS Services: SageMaker integrates with AWS services like S3 for storage, Lambda for serverless execution, and CloudWatch for monitoring.

3.2 Azure Machine Learning

Microsoft's Azure Machine Learning (Azure ML) is another robust cloud-native tool that offers end-to-end machine learning lifecycle management. It is particularly known for its strong integration with Microsoft's other enterprise services.

Key Features:

- Automated Machine Learning (AutoML): Azure ML offers an AutoML feature that helps users build models without deep expertise in machine learning.

- Model Management: Azure ML includes tools for model versioning and management, allowing users to track, deploy, and monitor models throughout their lifecycle.

- Collaboration and Sharing: Azure ML facilitates collaboration by allowing teams to work on shared projects and notebooks.

3.3 Google AI Platform

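Google AI Platform provides a set of cloud-based services to train, deploy, and manage machine learning models, with a strong focus on TensorFlow integration. Google Cloud has since consolidated these capabilities into Vertex AI, so new projects will generally encounter them under that name.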

Key Features:

- Scalability: Google AI Platform offers powerful infrastructure to scale machine learning workloads efficiently.

- Custom Training: It supports custom training scripts, enabling flexibility for advanced users.

- Pre-built Containers: Google provides containers for popular machine learning frameworks, making it easier to deploy models.

3.4 IBM Watson Studio

IBM Watson Studio offers a set of tools that allows data scientists to build, train, and deploy machine learning models with an emphasis on artificial intelligence and data analytics.

Key Features:

- Collaboration: Watson Studio promotes teamwork by enabling collaboration across different teams of data scientists, developers, and business experts.

- Pre-built AI Models: Watson Studio provides several pre-trained models for various AI use cases like natural language processing and computer vision.

- Integration with IBM Cloud: Watson Studio is tightly integrated with IBM's cloud ecosystem, providing access to powerful data storage and computing tools.

4. Evaluating Cloud-Native Tools for MLOps

When selecting cloud-native tools for MLOps, organizations need to consider a variety of factors that will influence the effectiveness of the tool in their machine learning workflows. Below are some key criteria to consider:

4.1 Criteria for Choosing the Right Tool

Choosing the right cloud-native tool for MLOps depends on the specific needs of the organization, the scale of the ML projects, and the features offered by each tool. Here are key criteria to evaluate when selecting a tool:

| Criteria | Description |
| --- | --- |
| Ease of Use | How intuitive and user-friendly the tool is. A complex interface might increase the learning curve for new users. |
| Feature Set | Does the tool provide the necessary features for your ML pipeline (e.g., data ingestion, model training, deployment, monitoring, versioning)? |
| Scalability | Can the tool scale efficiently to handle large datasets and complex models? Cloud-native tools should handle growing workloads easily. |
| Integration | How easily the tool integrates with other platforms, services, and infrastructure, such as storage, databases, and APIs. |
| Cost | Does the tool offer a cost-efficient solution? Pay-as-you-go models help to optimize cost based on actual usage. |
| Security and Compliance | Ensure the tool adheres to applicable regulations, such as GDPR or HIPAA, and provides necessary security features like data encryption and access control. |
| Support and Documentation | Availability of robust documentation and customer support for troubleshooting, training, and best practices. |
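One way to apply the criteria above is a weighted scoring matrix. The sketch below is illustrative only: the weights, the 1-to-5 scores, and the candidate names `tool_a` and `tool_b` are invented placeholders, not assessments of any real product. Each organization would substitute its own priorities and evaluations.

```python
def weighted_score(scores, weights):
    """Weighted average of per-criterion scores (same scale as the inputs)."""
    total_weight = sum(weights.values())
    return sum(scores[criterion] * w for criterion, w in weights.items()) / total_weight


# Hypothetical weights expressing how much each criterion matters to the team.
weights = {
    "ease_of_use": 2,
    "feature_set": 3,
    "scalability": 3,
    "integration": 2,
    "cost": 2,
    "security": 3,
    "support": 1,
}

# Hypothetical 1-5 scores for two placeholder candidates.
candidates = {
    "tool_a": {"ease_of_use": 4, "feature_set": 5, "scalability": 4,
               "integration": 3, "cost": 2, "security": 5, "support": 4},
    "tool_b": {"ease_of_use": 5, "feature_set": 3, "scalability": 3,
               "integration": 5, "cost": 4, "security": 3, "support": 3},
}

results = {name: weighted_score(scores, weights) for name, scores in candidates.items()}
ranking = sorted(results, key=results.get, reverse=True)

for name in ranking:
    print(f"{name}: {results[name]:.3f}")
```

The numbers matter less than the exercise of agreeing on weights up front, which makes the trade-offs between candidates explicit.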

4.2 Performance and Scalability Considerations

Performance and scalability are essential for cloud-native tools that will manage large-scale ML workflows. The tool should handle the growing complexity of machine learning models and the ever-expanding datasets. Here’s a breakdown of factors to consider:

- Distributed Computing: Ensure the tool can distribute the workload across multiple machines or GPUs to speed up training, especially for large datasets and complex models.

- Elasticity: The ability to automatically scale resources up or down based on workload demands. For example, scaling up during model training and scaling down when idle helps optimize costs.

- Latency: Tools should minimize latency during model inference (real-time predictions) and during model updates and deployment. Lower latency means a more responsive user experience and faster decision-making.

- Throughput: The tool’s ability to handle large volumes of data, both during training and real-time inference, while maintaining acceptable performance levels.
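Two of the factors above lend themselves to a short worked example: summarizing inference latency with percentiles, and deciding how many replicas to run for a given load. The latency samples and load figures are made up for illustration. The scaling rule shown is the proportional formula (desired = ceil(current replicas × current load / target load)) used by autoscalers such as the Kubernetes Horizontal Pod Autoscaler; a given platform's autoscaler may apply additional smoothing or cooldown logic that is not modeled here.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to the allowed replica range."""
    wanted = math.ceil(current_replicas * current_load / target_load)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical per-request inference latencies in milliseconds.
latencies_ms = [12, 15, 11, 14, 90, 13, 16, 12, 15, 14]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print("p50:", p50, "ms  p95:", p95, "ms")

# Load is requests/second per replica; the target is 100 per replica.
scale_up = desired_replicas(current_replicas=2, current_load=180, target_load=100)
scale_down = desired_replicas(current_replicas=4, current_load=20, target_load=100)
capped = desired_replicas(current_replicas=5, current_load=400, target_load=100)
print(scale_up, scale_down, capped)
```

The gap between the median and the 95th percentile in the sample shows why tail latency, not the average, is usually the figure to watch for real-time inference.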

4.3 Integration with Existing Infrastructure and Tools

Cloud-native tools for MLOps should seamlessly integrate with an organization's existing infrastructure. This includes compatibility with:

- Data Sources: Integration with databases, data lakes, object storage, and real-time data streams (e.g., Amazon S3, Google Cloud Storage, Azure Data Lake).

- Model Frameworks: Support for popular machine learning frameworks like TensorFlow, PyTorch, and Scikit-learn, so you can use existing code with minimal changes.

- CI/CD Pipelines: Integration with existing CI/CD systems (e.g., Jenkins, GitLab) to automate model testing and deployment.

- Monitoring Tools: Compatibility with monitoring services (e.g., AWS CloudWatch, Azure Monitor) to track model performance and data drift.

Choosing a tool that supports easy integration with other components in your ecosystem helps streamline the workflow and reduces manual interventions.

5. Best Practices for Implementing Cloud-Native Tools in MLOps

Successfully implementing cloud-native tools in an MLOps pipeline involves a set of best practices that ensure smooth operation and optimal performance.

5.1 Standardizing the Machine Learning Pipeline

Standardization is a key element in reducing errors, improving collaboration, and enhancing the efficiency of machine learning projects. Standardizing the ML pipeline involves:

- Automating Data Preprocessing: Standardize data ingestion, cleaning, and feature engineering to ensure that all datasets are processed in a consistent manner before training.

- Version Control for Models and Data: Use version control to track changes in datasets and models, ensuring that previous versions can be easily retrieved if necessary.

- Reusable Components: Develop reusable components or templates for common tasks, such as model evaluation, training pipelines, or monitoring scripts, so teams can focus on innovation rather than repetitive tasks.

Standardizing processes reduces complexity, makes the workflow more predictable, and enhances collaboration among different teams.

5.2 Automating Model Monitoring and Retraining

Machine learning models can degrade over time due to changes in the data or shifting user behavior. Automating monitoring and retraining ensures that the model remains effective over time.

- Model Drift Detection: Use automated tools to detect when model performance drops due to data drift or concept drift, triggering the need for model retraining.

- Continuous Monitoring: Cloud-native tools can continuously monitor key performance metrics like accuracy, recall, and precision to detect potential issues early on.

- Automated Retraining: Set up automated pipelines that trigger model retraining whenever the model’s performance degrades or when new data becomes available.

By automating these processes, you reduce the manual effort involved in monitoring and retraining models, ensuring timely updates and reducing operational risks.
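As a rough illustration of drift detection feeding a retraining trigger, the sketch below computes the Population Stability Index (PSI), one common drift statistic, between a training sample and a live sample of a single feature. The choice of PSI, the bin edges, the sample values, and the thresholds (0.2 for PSI, a widely quoted rule of thumb, and 0.85 for accuracy) are assumptions for the example; other statistics and limits may suit a given model better.

```python
import bisect
import math


def bin_proportions(values, edges):
    """Share of values falling into each bin defined by consecutive edges."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        # bisect_right locates the bin whose left edge is <= v; clamping puts
        # out-of-range values into the first or last bin.
        i = min(max(bisect.bisect_right(edges, v) - 1, 0), len(counts) - 1)
        counts[i] += 1
    # Floor at a small epsilon so empty bins do not produce log(0).
    return [max(c / len(values), 1e-6) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index between two samples over shared bins."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(psi_value, accuracy, psi_limit=0.2, min_accuracy=0.85):
    """Trigger retraining on input drift or on degraded accuracy."""
    return psi_value > psi_limit or accuracy < min_accuracy


edges = [0, 10, 20, 30]
training = [5] * 4 + [15] * 4 + [25] * 2  # hypothetical training-time feature values
live = [5] * 1 + [15] * 3 + [25] * 6      # hypothetical production values, shifted upward

stable = psi(training, training, edges)
drift = psi(training, live, edges)
print(f"stable PSI: {stable:.3f}  drifted PSI: {drift:.3f}")
print("retrain:", should_retrain(drift, accuracy=0.90))
```

In an automated pipeline, a `True` result would start the retraining job rather than print a message.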

5.3 Ensuring Collaboration Across Teams

Effective collaboration is essential when multiple stakeholders, such as data scientists, software engineers, and business analysts, are involved in the MLOps workflow. Best practices include:

- Centralized Repositories: Use shared repositories (e.g., GitHub, GitLab) for code, data, and model artifacts, allowing teams to collaborate and track changes efficiently.

- Collaborative Notebooks: Tools like Jupyter Notebooks or Google Colab allow data scientists to share and collaboratively work on code and experiments.

- Role-Based Access Control (RBAC): Implement access control to ensure that team members only have access to the resources they need, protecting sensitive data while encouraging collaboration.

Smooth collaboration keeps teams working cohesively and aligns their efforts toward successful deployment and performance monitoring.
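The role-based access control practice listed above reduces to a simple lookup: each role maps to a set of permissions, and a request is allowed if any of the user's roles grants the permission. The role names and permission strings below are hypothetical; cloud platforms implement the same idea through their own identity and access management services.

```python
# Hypothetical mapping from roles to the permissions they grant.
ROLE_PERMISSIONS = {
    "data_scientist": {"dataset:read", "experiment:write", "model:read"},
    "ml_engineer": {"model:read", "model:deploy"},
    "analyst": {"model:read"},
}


def is_allowed(roles, permission):
    """Return True if any of the user's roles grants the permission."""
    # Unknown roles grant nothing, so access is denied by default.
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["data_scientist"], "experiment:write"))         # scientist may log experiments
print(is_allowed(["analyst"], "model:deploy"))                    # analyst may not deploy
print(is_allowed(["analyst", "ml_engineer"], "model:deploy"))     # combined roles may deploy
```

Denying by default, as the lookup does for unknown roles, keeps mistakes on the safe side.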

6. Challenges in Using Cloud-Native Tools for MLOps

While cloud-native tools provide significant advantages for MLOps, they also come with their own set of challenges. Here are some of the common obstacles organizations may face:

6.1 Resource Management and Scalability

While cloud-native tools are designed to scale, managing resources effectively can still be a challenge. Under-provisioning leads to performance bottlenecks, while over-provisioning incurs unnecessary costs.

- Cost Optimization: Scaling up during model training and scaling down during idle times requires careful cost monitoring and the right configuration of auto-scaling mechanisms.

- Resource Planning: Without proper planning, scaling operations might result in resource waste or delays, especially when managing large machine learning jobs with complex models.
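A back-of-the-envelope calculation shows why scaling down during idle periods matters. The hourly rate and usage hours below are hypothetical placeholders, not real cloud prices; substitute figures from your provider's pricing page.

```python
def monthly_cost(hourly_rate, hours_per_day, days=30):
    """Cost of one instance billed only for the hours it actually runs."""
    return hourly_rate * hours_per_day * days


HOURLY_RATE = 3.0  # hypothetical price per hour for a GPU training instance

always_on = monthly_cost(HOURLY_RATE, hours_per_day=24)  # never scaled down
scaled = monthly_cost(HOURLY_RATE, hours_per_day=6)      # runs only while training
savings = 1 - scaled / always_on

print(f"always on: {always_on:.2f}")
print(f"scaled:    {scaled:.2f}")
print(f"savings:   {savings:.0%}")
```

The same arithmetic exposes the opposite failure mode: if auto-scaling is misconfigured and instances never shut down, the bill silently reverts to the always-on figure.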

6.2 Security and Compliance Issues

Security and compliance are critical when using cloud-native tools, especially for industries that handle sensitive data like healthcare, finance, or government. Some common challenges include:

- Data Encryption: Ensuring that data in transit and at rest is encrypted to prevent unauthorized access.

- Compliance with Regulations: Cloud tools must comply with applicable regulations and standards, such as GDPR (General Data Protection Regulation) for personal data or HIPAA (Health Insurance Portability and Accountability Act) for health information.

- Access Management: Proper access control and identity management (e.g., using tools like AWS IAM) to ensure only authorized users can access critical data and models.

6.3 Vendor Lock-in and Multi-Cloud Strategies

Many cloud-native tools are specific to one cloud platform (e.g., AWS SageMaker, Azure ML). This can create a risk of vendor lock-in, where an organization becomes dependent on a single provider for all its cloud-based services.

- Data and Model Portability: Moving models and data across different cloud platforms can be complex and costly.

- Multi-Cloud Strategies: Some organizations implement multi-cloud strategies to avoid vendor lock-in by leveraging multiple cloud providers for different purposes (e.g., AWS for storage, Google Cloud for model training). This allows them to diversify risk but introduces complexity in managing multiple platforms.

Table: Vendor Lock-in Considerations

| Aspect | Single Cloud Provider | Multi-Cloud Strategy |
| --- | --- | --- |
| Cost Efficiency | Optimized for one provider's services and discounts | Higher initial costs due to integration complexity |
| Flexibility | Limited to the tools and services provided by the vendor | Greater flexibility in choosing the best tool for each task |
| Vendor Dependence | High, dependent on one provider’s services | Lower, mitigates risk by diversifying across vendors |
| Data Portability | Potentially easier, but with risks of lock-in | More complex, with added management overhead |
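One common way to limit lock-in is to have pipeline code depend on a small provider-neutral interface, with one adapter per cloud behind it. The sketch below is an assumption-laden illustration: the `ArtifactStore` interface, the `InMemoryStore` stand-in, the `migrate` helper, and the artifact key are all hypothetical, and a real adapter would wrap a provider's storage SDK instead of a dictionary.

```python
from typing import Protocol


class ArtifactStore(Protocol):
    """Provider-neutral interface the rest of the pipeline depends on."""

    def save(self, key: str, data: bytes) -> None: ...

    def load(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in for a provider-specific adapter (a dict instead of a cloud SDK)."""

    def __init__(self):
        self._blobs = {}

    def save(self, key, data):
        self._blobs[key] = data

    def load(self, key):
        return self._blobs[key]


def migrate(source: ArtifactStore, target: ArtifactStore, keys):
    """Copy artifacts between stores using only the shared interface."""
    for key in keys:
        target.save(key, source.load(key))
    return len(keys)


provider_a = InMemoryStore()  # imagine an adapter for one cloud
provider_b = InMemoryStore()  # imagine an adapter for another cloud
provider_a.save("models/churn/v1", b"serialized-model-bytes")

copied = migrate(provider_a, provider_b, ["models/churn/v1"])
print(copied, provider_b.load("models/churn/v1"))
```

The abstraction does not remove migration cost (data transfer and managed-service features still differ between providers), but it confines provider-specific code to the adapters.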

By addressing these challenges proactively, organizations can fully leverage cloud-native tools to improve their MLOps workflows while minimizing risks.

7. Case Studies of Successful Implementation of Cloud-Native Tools for MLOps

To better understand how cloud-native tools for MLOps are transforming industries, let’s explore three case studies from different sectors. These examples highlight how businesses are using cloud-native MLOps solutions to solve complex problems and improve efficiency.

7.1 Case Study 1: Financial Services

The financial services industry deals with vast amounts of sensitive data and requires accurate, real-time decision-making. A prominent global bank adopted AWS SageMaker and integrated it with its existing data pipeline to enhance fraud detection and credit scoring models.

- Challenge: The bank needed to process large datasets quickly and deploy machine learning models in real-time to detect fraudulent transactions.

- Solution: By using AWS SageMaker, the bank was able to streamline its model training and deployment pipelines. The platform allowed them to build custom models, automate retraining based on new data, and deploy models without significant manual intervention.

- Results: The financial institution saw a 30% improvement in fraud detection accuracy and reduced manual intervention by automating the ML lifecycle. The platform also enabled rapid scaling during high transaction volumes.

7.2 Case Study 2: Healthcare Industry

Healthcare providers must process large volumes of medical data while ensuring compliance with strict regulations such as HIPAA. A healthcare company implemented Azure Machine Learning to automate its diagnostic model training and improve patient care delivery.

- Challenge: The company faced difficulties in managing large-scale medical datasets and automating the training of complex diagnostic models.

- Solution: Azure ML was integrated with the company’s existing data sources, including electronic health records (EHR), to facilitate real-time predictive analytics and model training. The company leveraged automated ML to optimize feature engineering and model evaluation.

- Results: The company achieved faster model development cycles, increased prediction accuracy for early disease detection, and enhanced patient outcomes. Automation also improved compliance with regulatory requirements by ensuring secure data processing.

7.3 Case Study 3: Retail and E-commerce

Retailers and e-commerce platforms must adapt to fast-changing customer behaviors. One major e-commerce company implemented Google AI Platform to personalize product recommendations and optimize their inventory management systems.

- Challenge: The company struggled with personalizing product recommendations at scale and managing inventory forecasting across multiple regions.

- Solution: Google AI Platform allowed them to build, deploy, and scale machine learning models for personalized recommendations and demand forecasting. The platform was integrated with their existing cloud storage and customer data systems to provide real-time insights.

- Results: The retailer saw a 25% increase in sales conversion due to improved recommendations and more accurate inventory management, leading to a reduction in stockouts and overstocking.

These case studies highlight how cloud-native tools for MLOps can drive innovation and operational efficiency across diverse industries by enabling organizations to automate, scale, and optimize their machine learning processes.

8. The Future of Cloud-Native Tools for MLOps

As machine learning continues to evolve, cloud-native tools for MLOps are also advancing. The future of these tools will likely bring even more powerful capabilities, greater automation, and smarter integrations across various cloud environments.

8.1 Trends in Cloud-Native MLOps Tools

Several trends are shaping the future of cloud-native tools for MLOps:

- Increased Automation: The focus will shift towards automating more aspects of the ML lifecycle, from data preprocessing to model deployment and monitoring. This will reduce the burden on data scientists and improve the speed of model iteration.

- MLOps as a Service: Cloud providers are increasingly offering MLOps platforms as fully managed services, making it easier for organizations to integrate ML workflows without managing the underlying infrastructure.

- Serverless Architectures: Serverless computing models will gain traction, allowing businesses to run ML models without worrying about the underlying hardware infrastructure. This will help reduce operational overhead and improve scalability.

- Integration of AI and Machine Learning Services: Cloud platforms will continue to enhance integrations between AI and MLOps tools, providing a more seamless experience for users who want to develop, train, and deploy AI-powered models.

8.2 The Role of Automation and AI in Future MLOps Workflows

The future of MLOps will be increasingly driven by automation and AI. The growing adoption of AI tools and advanced algorithms will lead to:

- Autonomous Model Training and Optimization: Tools will become more intelligent in optimizing model parameters automatically, reducing the need for manual tuning and experimentation. This will allow data scientists to focus on higher-level tasks.

- Predictive Analytics for Model Management: Future MLOps tools will leverage predictive analytics to forecast model degradation or potential failure, enabling proactive actions like model retraining or adjustments.

- End-to-End Automation: End-to-end automation will cover everything from data ingestion and model training to deployment and monitoring, ensuring that ML workflows are faster and more reliable.

8.3 The Evolution of Multi-Cloud and Hybrid Environments for MLOps

As organizations become more complex and diverse, they increasingly adopt multi-cloud and hybrid environments to avoid vendor lock-in and enhance redundancy. Cloud-native tools for MLOps will evolve to support:

- Multi-Cloud Integration: Tools will allow organizations to integrate and manage their MLOps workflows across multiple cloud providers, offering the flexibility to choose the best tool for each task while mitigating risks associated with relying on a single provider.

- Hybrid Cloud Environments: The rise of hybrid cloud environments, where organizations use both on-premise and public cloud resources, will necessitate MLOps tools that can seamlessly operate in such environments, offering the best of both worlds in terms of performance and cost-efficiency.

- Cross-Platform Interoperability: Future MLOps platforms will offer more robust interoperability across clouds, enabling seamless data movement, model deployment, and monitoring across various environments.

These trends indicate that cloud-native MLOps tools will continue to evolve towards more automation, flexibility, and integration, helping organizations accelerate their ML development and operationalize AI models more effectively.

9. Conclusion

As businesses and industries increasingly adopt machine learning for a wide range of applications, the role of cloud-native tools for MLOps becomes ever more significant. These tools empower organizations to manage their ML workflows efficiently, automate key tasks, and deploy models at scale.

9.1 Summary of Key Insights

- Cloud-native tools for MLOps simplify and accelerate machine learning workflows, from model development to deployment.

- The choice of the right tool depends on criteria such as ease of use, scalability, integration, and cost-efficiency.

- Best practices include standardizing the pipeline, automating monitoring and retraining, and ensuring collaboration across teams to optimize the ML lifecycle.

- Popular tools like AWS SageMaker, Azure ML, Google AI Platform, and IBM Watson Studio are widely used across industries, each offering specific advantages.

- The future of cloud-native MLOps tools points toward greater automation, multi-cloud support, and smarter AI-driven workflows.

9.2 Final Thoughts on Cloud-Native Tools for MLOps

The adoption of cloud-native tools for MLOps has changed how organizations scale their machine learning efforts. These tools simplify ML workflows, support better collaboration, and shorten time to market, and their monitoring and retraining capabilities help keep models accurate in production. As the technology continues to evolve, cloud-native MLOps tools are likely to automate more complex processes and make machine learning accessible to a wider range of industries.