Organizations are increasingly moving away from traditional monolithic architectures toward more flexible, scalable microservices architectures. Simultaneously, cloud platforms have emerged as the preferred hosting environment for these distributed systems. This integration of microservices and cloud computing represents a powerful combination that enables businesses to build resilient, scalable applications that can adapt quickly to changing requirements.

In this blog, we will explore microservices on cloud platforms, from foundational concepts to advanced implementation strategies.

What Are Microservices?

Microservices architecture is an approach to software development where an application is built as a collection of small, independent services that communicate with each other through well-defined APIs. Unlike traditional monolithic applications, where all functionality is packaged into a single unit, microservices break down applications into smaller, loosely coupled components.

This architectural style has gained widespread adoption because it enables organizations to build complex applications that can evolve rapidly. Each microservice handles one specific business function, can be developed and deployed independently, and allows teams to choose the most suitable technology for their specific requirements.

Benefits of Microservices over Monolithic Architectures

Microservices architecture offers clear advantages when building systems that need to evolve, scale, and stay reliable over time. Each service is designed to handle a specific responsibility, allowing teams and systems to operate more effectively.

- Independent Scaling - Scale only the services that need more capacity, reducing cost and improving performance during high-load periods.

- Faster Development Cycles - Teams can build, test, and release features without waiting on other parts of the system, speeding up delivery.

- Technology Flexibility - Each service can use the tech stack best suited to its function, so there is no need to force-fit a single language or framework.

- Fault Isolation - Issues stay contained within individual services, minimizing the impact on the overall system and improving reliability.

- Simpler Maintenance - Smaller, focused codebases are easier to understand and update, reducing bugs and development overhead.

- Targeted Deployments - Push updates to one service without touching the rest of the system, ideal for quick fixes and gradual rollouts.

- Team Ownership - Teams manage services tied to specific business functions, increasing accountability and domain expertise.

Why Cloud Platforms Are Ideal for Microservices

Cloud platforms provide a computing environment that matches the core requirements of microservices: flexibility, speed, scale, and reliability. They allow teams to build and operate distributed systems without managing physical infrastructure, while still maintaining control over performance, availability, and cost.

Understanding Cloud Computing

Cloud computing gives organizations on-demand access to essential computing resources such as virtual machines, storage, databases, and networking, all delivered over the internet. These resources can be quickly allocated or scaled down as needed, supporting fast-moving development cycles and dynamic application needs.

There are three main service models:

- Infrastructure as a Service (IaaS): Provides virtual servers, networking, and storage. Teams manage operating systems and applications, while the provider manages the hardware and virtualization layer.

- Platform as a Service (PaaS): Delivers a managed environment for building and deploying applications. The cloud provider handles infrastructure, runtime, and scaling, while developers focus on code and configuration.

- Software as a Service (SaaS): Offers ready-to-use software delivered over the web. Users access features via subscription, with the provider managing everything behind the scenes.

How Cloud Platforms Support Microservices

Microservices operate best in systems that can adapt quickly, grow gradually, and remain resilient under variable conditions. Cloud platforms are designed to support these exact characteristics.

- Elastic scalability

Microservices often face unpredictable traffic patterns. Cloud platforms can automatically adjust capacity by adding or removing instances in real time so each service remains responsive without manual scaling.

- Rapid resource provisioning

Cloud environments allow teams to deploy new services or replicate existing ones within minutes. This speed supports continuous delivery, testing, and system expansion without delays.

- Global distribution

Applications can be deployed across multiple geographic locations. This improves latency, ensures compliance with regional requirements, and delivers consistent performance to users regardless of their location.

- Access to managed services

Cloud providers offer a wide range of services such as managed databases, message queues, service meshes, logging, and monitoring. These reduce the time and effort required to maintain operational infrastructure.

- Usage-based pricing

Costs are based on actual resource consumption. This model is well-suited to microservices, where each service may have different usage patterns. Organizations can optimize spending based on demand, rather than provisioning for peak capacity.

- Resilience and redundancy

Built-in features like multi-zone deployment, failover configurations, and automated backups help protect services from infrastructure failures, ensuring higher system uptime and reliability.

Selecting the Right Cloud Platform

The choice of cloud provider should align with both the technical needs of your architecture and the practical realities of your organization.

Service ecosystem maturity

Evaluate the availability and depth of services relevant to microservices, such as container orchestration, monitoring, identity management, and networking. AWS offers broad service coverage, Google Cloud emphasizes Kubernetes-native design, and Azure provides strong integration with enterprise tools.

Internal capabilities and integration needs

Factor in your team’s existing experience with cloud technologies and your current technology stack. Azure integrates seamlessly with Microsoft environments, while Google Cloud may be a natural fit for teams already working with containers and open-source tooling.

Geographic presence and compliance

Consider data residency, latency, and regulatory requirements. While all major platforms offer global infrastructure, the availability of specific regions, certifications, and compliance guarantees may influence your choice.

Cloud platforms are not just infrastructure providers. They are strategic foundations for microservices, offering the tools and environment needed to build systems that are scalable, resilient, and aligned with business needs.

Cloud-Native Tools for Managing Microservices Effectively

Managing microservices at scale on cloud platforms demands tools that simplify deployment, communication, and infrastructure management. Choosing the right tools improves reliability, reduces operational complexity, and accelerates delivery.

- Kubernetes for Container Orchestration:

Kubernetes automates deployment, scaling, and management of containerized microservices. Its declarative configuration model ensures your services maintain their desired state, reducing manual errors and configuration drift, a major cause of system downtime. Features like rolling updates enable zero-downtime deployments, critical for continuous delivery in microservices environments.

- Service Meshes (e.g., Istio, Linkerd):

Service meshes add a transparent infrastructure layer to manage service-to-service communication. They provide secure connections through automatic mutual TLS encryption, advanced traffic routing (e.g., canary releases), and observability through distributed tracing. This helps identify latency or failure points quickly across your microservices, improving reliability and security without changing application code.

- Infrastructure as Code (IaC) Tools (Terraform, CloudFormation):

Defining infrastructure via code enables consistent, repeatable provisioning and easier rollback of infrastructure changes. IaC allows infrastructure versioning alongside application code, helping teams maintain alignment and reduce deployment errors, a common challenge when managing many microservices and environments.

Practical Insight: Stick to these main tools first before using specialized ones to keep things simple and manageable. A streamlined toolset reduces training overhead and operational risks.
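
The declarative model that Kubernetes and IaC tools rely on can be pictured as a reconciliation loop: compare the declared state with the observed state and emit the actions that close the gap. The following is a minimal Python sketch of that idea under simplifying assumptions, not Kubernetes' or Terraform's actual logic; the `reconcile` function and the service names are hypothetical.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], actual: Dict[str, int]) -> List[str]:
    """Return the actions needed to move `actual` replica counts to `desired`."""
    actions = []
    for service in sorted(set(desired) | set(actual)):
        want = desired.get(service, 0)
        have = actual.get(service, 0)
        if want > have:
            actions.append(f"start {want - have} x {service}")
        elif want < have:
            actions.append(f"stop {have - want} x {service}")
    return actions


# Hypothetical state: "orders" is under-replicated and "legacy" is no longer declared.
desired_state = {"orders": 3, "payments": 2}
actual_state = {"orders": 1, "payments": 2, "legacy": 1}

plan = reconcile(desired_state, actual_state)
print(plan)  # ['stop 1 x legacy', 'start 2 x orders']
```

Running the same function against a system that already matches its declaration returns an empty plan, which is what makes repeated runs safe and keeps configuration drift visible.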

Comparison of Cloud Providers for Microservices

While many cloud platforms support microservices, certain capabilities distinguish how effectively they enable microservices management at scale. The profiles and comparison table below summarize how the three major providers differ in the areas that most affect development velocity, operational efficiency, and service resilience:

Amazon Web Services (AWS)

Known for the broadest and most mature service ecosystem. Offers deep container orchestration (EKS, ECS), extensive serverless options (Lambda), and comprehensive API management. Its global infrastructure and managed services reduce operational overhead, making it a versatile choice for diverse microservices architectures.

Microsoft Azure

Excels in hybrid cloud scenarios and enterprise integration, especially for organizations heavily invested in Microsoft technologies. Azure Kubernetes Service (AKS) is tightly integrated with Azure DevOps and security tools, providing smooth workflows and governance for microservices development and deployment.

Google Cloud Platform (GCP)

Focuses on container-native innovation, with Google Kubernetes Engine (GKE) considered a market leader in orchestration. GCP’s strengths lie in developer productivity and advanced data services, including AI/ML capabilities, which can augment microservices with intelligent features.

| Feature / Provider | AWS | Azure | GCP |
| --- | --- | --- | --- |
| Container Orchestration | EKS (managed Kubernetes), ECS | AKS (managed Kubernetes) | GKE (managed Kubernetes) |
| Serverless Computing | Lambda | Azure Functions | Cloud Functions |
| API Management | API Gateway | Azure API Management | Apigee / Cloud Endpoints |
| Service Mesh Support | AWS App Mesh | Istio-based service mesh add-on for AKS | Istio on GKE |
| Global Infrastructure | Largest global footprint | Strong presence, especially in hybrid scenarios | Strong in key global regions |
| Enterprise Integration | Broad 3rd-party ecosystem | Seamless Microsoft ecosystem | Open-source, cloud-native focus |
| Data Services & Analytics | DynamoDB, Aurora, Redshift | Cosmos DB, Synapse Analytics | Spanner, BigQuery, AI/ML |

Key Components of Microservices in the Cloud

Understanding the essential components that make microservices work effectively on cloud platforms is crucial for successful implementation.

Managing Service Communication

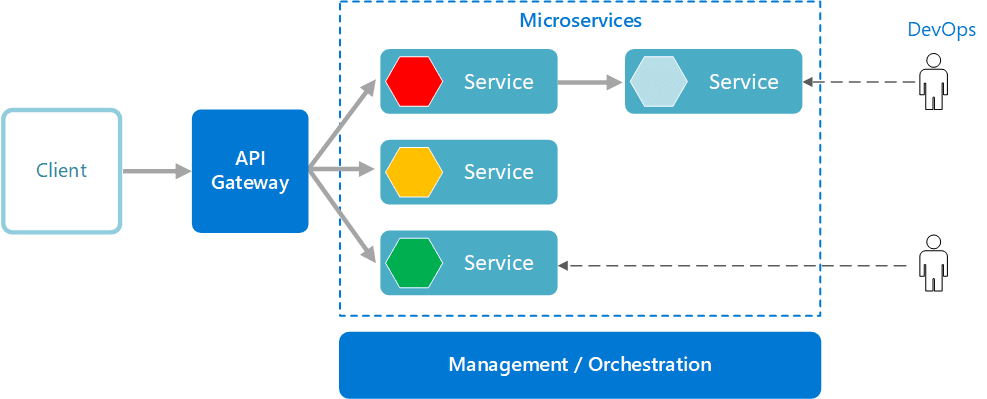

Services need to find each other and communicate reliably. This requires three key capabilities working together:

- Service Discovery maintains a registry of available services, allowing them to locate each other dynamically without hardcoded addresses.

- Load Balancing distributes incoming requests across multiple service instances to prevent any single instance from becoming overwhelmed. Cloud providers offer sophisticated load balancers that can route traffic based on geographic location, service health, and custom rules.

- API Gateway serves as the entry point for all client requests, handling authentication, rate limiting, and request routing. This single point of entry simplifies client interactions while providing consistent security and monitoring across all services.

Modern solutions include AWS API Gateway for serverless scenarios, NGINX for high-performance requirements, and Kong for extensive customization needs.
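
To make the interplay between service discovery and load balancing concrete, here is a minimal in-memory sketch in Python. It is illustrative only: the `ServiceRegistry` class, the service name, and the addresses are hypothetical, and production registries add health checks, expiry, and replication that are omitted here.

```python
from typing import Dict, List


class ServiceRegistry:
    """Maps a service name to its registered instances and picks one round-robin."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._next: Dict[str, int] = {}

    def register(self, name: str, address: str) -> None:
        self._instances.setdefault(name, []).append(address)
        self._next.setdefault(name, 0)

    def deregister(self, name: str, address: str) -> None:
        self._instances[name].remove(address)

    def resolve(self, name: str) -> str:
        """Return the next instance for `name`; callers never hardcode addresses."""
        instances = self._instances.get(name)
        if not instances:
            raise LookupError(f"no instances registered for {name!r}")
        index = self._next[name] % len(instances)
        self._next[name] = index + 1
        return instances[index]


registry = ServiceRegistry()
registry.register("orders", "10.0.0.1:8080")  # hypothetical addresses
registry.register("orders", "10.0.0.2:8080")

picks = [registry.resolve("orders") for _ in range(3)]
print(picks)  # ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.1:8080']

# When an instance goes away, callers transparently get the remaining one.
registry.deregister("orders", "10.0.0.1:8080")
after_removal = registry.resolve("orders")
```

An API gateway would sit in front of this lookup, authenticating the request and choosing the target service name before resolving an instance.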

Containerization and Orchestration

Containers provide consistent, lightweight environments for microservices. Docker is the standard for packaging applications with their dependencies, ensuring services run identically across development, testing, and production environments.

Kubernetes orchestrates these containers at scale, providing automated deployment, scaling, and management capabilities. It handles rolling updates, health checks, and rollbacks to ensure continuous availability during changes.

Cloud providers offer managed Kubernetes services that handle the complexity of maintaining the control plane, allowing teams to focus on application development rather than infrastructure management.

Database Management in Microservices

Each microservice typically manages its own database, enabling teams to choose the most appropriate data storage solution for their specific needs. This approach provides several advantages:

- Services can evolve their data schemas independently without affecting other services.

- Teams can select between relational databases for complex queries, NoSQL databases for flexible schemas, or specialized databases for specific use cases like time-series data or full-text search.

Cloud providers offer managed database services that handle backups, scaling, and maintenance, reducing operational overhead while providing enterprise-grade reliability and performance.

Smart Scaling Strategies

Managing microservices at scale requires more than adding compute capacity - it involves designing systems that scale predictably, cost-effectively, and with minimal operational overhead.

Horizontal vs. Vertical Scaling

- Horizontal Scaling

This is the default and most effective method in cloud-native microservices. New service instances are deployed behind load balancers to meet demand surges. For instance, with Kubernetes Horizontal Pod Autoscaler, services can scale out in response to metrics like CPU or request rate, typically reacting within 60–120 seconds. In AWS, ECS or EKS can integrate with CloudWatch to trigger scale-out actions based on custom metrics. This elasticity ensures services maintain performance during sudden traffic spikes without requiring permanent overprovisioning.

- Vertical Scaling

Vertical scaling increases resources (CPU, memory) within an instance. While this is easier to configure in VM-based services, it introduces downtime or restarts and has hard resource limits. It’s suitable only for legacy components or services with stateful workloads that can’t be distributed.
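
The core of the Kubernetes Horizontal Pod Autoscaler decision is a simple ratio: desired replicas = ceil(current replicas × current metric / target metric). The sketch below expresses that rule in Python. It is a simplification: the real controller also applies a tolerance band and stabilization windows, and the `min_replicas` and `max_replicas` defaults here are assumptions chosen for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to how far the metric is from target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a metric spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


scale_out = desired_replicas(4, current_metric=90, target_metric=60)
scale_in = desired_replicas(4, current_metric=30, target_metric=60)
capped = desired_replicas(8, current_metric=150, target_metric=50)
print(scale_out, scale_in, capped)  # 6 2 10
```

With four replicas at 90% CPU against a 60% target, the rule asks for six replicas; at 30% it asks for two, which is how the same mechanism handles both scale-out and scale-in.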

Automated Scaling Techniques

- Reactive Auto-Scaling

Triggers scaling actions based on observed metrics such as CPU usage, memory consumption, request rate, or queue depth. For example, Google Cloud's autoscaler can adjust VM instance groups when CPU utilization exceeds 60% over a rolling five-minute window. This approach ensures resources match demand closely, but may lag during traffic bursts.

- Predictive Auto-Scaling

Some cloud providers use historical data and forecasting models to scale ahead of anticipated demand. AWS Predictive Scaling uses machine learning models trained on 24+ hours of application usage data to plan scale-out events up to 48 hours in advance. This is valuable for services with cyclical or event-driven traffic, such as e-commerce checkouts or media streaming during live events.

- Scheduled Auto-Scaling

For known traffic patterns (e.g., weekday usage spikes, nightly batch jobs), scheduled scaling avoids cold starts by pre-warming instances. Azure Virtual Machine Scale Sets and Kubernetes CronJobs support this approach, reducing latency during predictable load windows while conserving resources during off-hours.
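
The lag that reactive scaling shows during bursts follows from averaging over a window. The sketch below is a hedged illustration of a rolling-window trigger, not any provider's actual algorithm; the `RollingCpuTrigger` class, the one-sample-per-minute cadence, and the sample values are assumptions.

```python
from collections import deque


class RollingCpuTrigger:
    """Signals scale-out when mean CPU over a full window exceeds a threshold."""

    def __init__(self, threshold: float = 60.0, window: int = 5) -> None:
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # oldest sample drops off automatically

    def observe(self, cpu_percent: float) -> bool:
        self.samples.append(cpu_percent)
        window_full = len(self.samples) == self.samples.maxlen
        mean = sum(self.samples) / len(self.samples)
        return window_full and mean > self.threshold


# Hypothetical per-minute CPU samples during a steadily growing load.
ramp = RollingCpuTrigger()
ramp_decisions = [ramp.observe(cpu) for cpu in [40, 55, 70, 80, 90, 95]]
print(ramp_decisions)  # [False, False, False, False, True, True]

# A single one-minute spike is averaged away and does not trigger scaling.
spike = RollingCpuTrigger()
spike_decisions = [spike.observe(cpu) for cpu in [30, 30, 30, 30, 100]]
```

In the first run CPU is already at 70% by the third sample, yet the trigger fires only at the fifth. That delay is the gap that predictive and scheduled scaling aim to close.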

Scaling in Microservices Context

- Granular Scaling

Each microservice can scale independently. A stateless user-authentication service may scale rapidly, while a backend reporting service may not require dynamic scaling at all. This decoupled behavior helps control cost and isolate performance tuning.

- Cost Efficiency

Services like AWS Fargate, Azure Container Apps, and GCP Cloud Run offer per-request or per-second billing, so the cost of each microservice tracks its actual usage as it scales.

- Cold Start Mitigation

Serverless and container-native microservices must handle startup latency. Provisioned concurrency in AWS Lambda or pre-warmed pods in Kubernetes can reduce cold start impact for latency-sensitive APIs, improving user experience without always-on costs.
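
A small worked example shows why usage-based billing rewards granular scaling. The hourly demand figures below are hypothetical, and the comparison counts instance-hours rather than dollars so that no real price is assumed.

```python
from typing import List


def instance_hours(demand_profile: List[int], elastic: bool) -> int:
    """Instance-hours billed for an hourly demand profile.

    elastic=True bills only what each hour needs;
    elastic=False bills peak capacity for every hour.
    """
    if elastic:
        return sum(demand_profile)
    return max(demand_profile) * len(demand_profile)


# Hypothetical number of instances one service needs in eight consecutive hours.
hourly_demand = [2, 2, 2, 4, 8, 10, 6, 2]

fixed = instance_hours(hourly_demand, elastic=False)
scaled = instance_hours(hourly_demand, elastic=True)
savings = 1 - scaled / fixed
print(fixed, scaled, f"{savings:.0%}")  # 80 36 55%
```

The saving depends entirely on how spiky the profile is: a service with flat demand gains nothing from elasticity, which is why scaling policy is best decided per service.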

Performance Optimization Strategies for Cloud Microservices

Performance directly impacts user satisfaction and cloud cost efficiency. Optimizing microservices performance requires balancing resource allocation, latency reduction, and global scalability.

- Right-Sizing Resources and Autoscaling: Use cloud-native monitoring (e.g., Prometheus, AWS CloudWatch) to analyze CPU and memory usage patterns. Set resource requests and limits appropriately to avoid overprovisioning, which increases costs, or underprovisioning, which causes outages. Kubernetes vertical pod autoscalers can dynamically adjust resource allocation, increasing efficiency and availability.

- Caching to Reduce Latency: Implement caching at multiple levels (client-side, API gateway, and distributed caches like Redis) to decrease load on backend services and improve response times. The gain depends on hit rate and workload, but for read-heavy endpoints every cache hit avoids a backend call entirely, which directly enhances user experience.

- Network and Communication Optimization: Place interdependent services within the same availability zone to reduce latency and cross-zone data transfer costs. Use efficient protocols such as gRPC, which has lower overhead than REST and is especially beneficial for chatty microservices architectures.

- Global Distribution for Scalability and Resilience: Distribute services across multiple regions close to users to minimize latency and ensure availability in case of regional failures. Cloud providers’ global load balancers intelligently route traffic to the nearest healthy instance, balancing speed and fault tolerance.
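
As an illustration of the caching strategy above, here is a minimal time-to-live cache in Python. It is an in-process sketch under stated assumptions, not a substitute for a distributed cache like Redis; the `TTLCache` class, the `load_profile` loader, and the injected clock are hypothetical names used to keep the example deterministic.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Caches loader results per key and reloads them after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the backend is not called
        value = loader(key)  # miss or expired: call the backend once
        self._entries[key] = (now + self._ttl, value)
        return value


backend_calls = []


def load_profile(user_id: str) -> dict:
    """Stand-in for a slow call to a hypothetical profile service."""
    backend_calls.append(user_id)
    return {"id": user_id}


fake_now = [0.0]  # controllable clock so the example does not need to sleep
cache = TTLCache(ttl_seconds=30, clock=lambda: fake_now[0])

cache.get_or_load("u1", load_profile)  # miss
cache.get_or_load("u1", load_profile)  # hit
fake_now[0] = 31.0
cache.get_or_load("u1", load_profile)  # expired, reloaded
print(len(backend_calls))  # 2
```

Three reads resulted in two backend calls. The TTL is the main design choice: a longer value raises the hit rate but lets clients see staler data.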

Best Practices for Managing Microservices on Cloud Platforms

Effectively managing microservices on the cloud requires aligning operational practices with the scale, complexity, and speed of distributed systems. The following principles support reliability, agility, and cost-efficiency.

- CI/CD for Distributed Delivery

A strong CI/CD pipeline is foundational for managing many independent services.

- Automated Testing at Every Level: Unit, integration, contract, and end-to-end tests ensure changes do not break system behavior.

- Containerized Builds: Build pipelines should produce consistent, versioned container images for repeatable deployments.

- GitOps or Pipeline as Code: Define deployments declaratively to support auditability, rollback, and automation.

Cloud-native CI/CD services like GitHub Actions, GitLab CI, and ArgoCD (for Kubernetes) enable scalable release workflows across cloud environments.

- Observability: Monitoring, Tracing, and Logging

To operate reliably, teams need full visibility into system behavior.

- Track Core Metrics: Monitor latency, traffic, error rates, and resource saturation (known as the “Four Golden Signals”).

- Enable Distributed Tracing: Identify performance bottlenecks across services.

- Centralized Logging with Context: Use correlation IDs to follow a single request across systems.

Popular cloud-native observability tools include Prometheus, Grafana, and Jaeger, along with logging backends such as the ELK stack or the cloud provider's native logging service.
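
Correlation IDs are easiest to adopt when the logging layer attaches them automatically. The following sketch uses Python's standard `logging` and `contextvars` modules; the logger name, the log format, and the `handle_request` function are assumptions made for illustration.

```python
import contextvars
import io
import logging
import uuid

# Holds the ID of the request currently being handled; "-" means no request.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationFilter(logging.Filter):
    """Copies the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def build_logger(stream) -> logging.Logger:
    logger = logging.getLogger("orders")  # hypothetical service name
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(correlation_id)s %(levelname)s %(message)s"))
    handler.addFilter(CorrelationFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def handle_request(logger: logging.Logger, incoming_id=None) -> None:
    # Reuse the caller's ID when present so the trail spans services.
    token = correlation_id.set(incoming_id or uuid.uuid4().hex)
    try:
        logger.info("order received")
        logger.info("payment requested")
    finally:
        correlation_id.reset(token)


buffer = io.StringIO()
log = build_logger(buffer)
handle_request(log, incoming_id="req-123")
log_lines = buffer.getvalue().splitlines()
print(log_lines)
```

Both log lines carry `req-123`, so a centralized log search for that ID returns the full path of the request. In a real system the ID would typically arrive in a request header and be forwarded on every outgoing call.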

- Resilience and Fault Tolerance

Microservices must handle partial failure gracefully.

- Circuit Breakers and Retries with Backoff prevent cascading failures.

- Timeouts and Fallbacks ensure degraded services don’t halt the system.

- Bulkheads isolate workloads to prevent one failure from exhausting shared resources.

Resilience frameworks like Resilience4j, Polly, or built-in service mesh features (e.g., Istio) support these patterns.
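
The sketch below shows how retries with exponential backoff and a circuit breaker can work together. It is a simplified illustration in Python, not the implementation used by Resilience4j, Polly, or Istio; the class and function names, the thresholds, and the failing `flaky_inventory_call` are hypothetical.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without trying the dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0
        return result


def retry_with_backoff(func, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Retry `func`, doubling the wait after each failure."""
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # do not keep hammering a dependency the breaker has isolated
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


def flaky_inventory_call():
    raise ConnectionError("inventory service unavailable")


breaker = CircuitBreaker(failure_threshold=3, reset_after=30.0)
delays = []  # record waits instead of sleeping so the example runs instantly
try:
    retry_with_backoff(lambda: breaker.call(flaky_inventory_call),
                       attempts=5, base_delay=0.1, sleep=delays.append)
    outcome = "succeeded"
except CircuitOpenError:
    outcome = "failed fast"
except ConnectionError:
    outcome = "retries exhausted"
print(outcome, delays)  # failed fast [0.1, 0.2, 0.4]
```

After three consecutive failures the breaker opens, so the fourth attempt is rejected immediately instead of waiting on a dependency that is known to be down. This is how the pattern stops one failing service from tying up its callers.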

- Security Across Layers

Security should be enforced at every boundary and integrated into service communication.

- Authentication & Authorization: Use OAuth2, OpenID Connect, and RBAC for service and user access.

- Secure Service Communication: Enforce mutual TLS and limit exposure with network segmentation.

- Container Hardening: Scan images, drop unnecessary privileges, and use minimal base images.

- Data Protection: Encrypt sensitive data at rest and in transit, and apply least privilege access to storage and APIs.

Tools like Vault, AWS Security Hub, and Prisma Cloud help apply consistent security practices across environments.
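
Least-privilege access between services comes down to a deny-by-default permission check. The sketch below is a deliberately small illustration in Python; the service names and permission strings are hypothetical, and a real deployment would derive the caller identity from a verified token or mTLS certificate rather than a plain string.

```python
from typing import Dict, Set

# Hypothetical service identities and the only permissions each one needs.
SERVICE_PERMISSIONS: Dict[str, Set[str]] = {
    "orders-service": {"inventory:read", "payments:charge"},
    "reporting-service": {"orders:read"},
}


def is_allowed(caller: str, permission: str) -> bool:
    """Deny by default: unknown callers and unlisted permissions are rejected."""
    return permission in SERVICE_PERMISSIONS.get(caller, set())


checks = [
    is_allowed("orders-service", "payments:charge"),     # granted explicitly
    is_allowed("reporting-service", "payments:charge"),  # not in its role
    is_allowed("unknown-service", "orders:read"),        # unknown caller
]
print(checks)  # [True, False, False]
```

Keeping the mapping explicit and small per service makes it easy to audit what a compromised service could reach.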

Practical Challenges and Pitfalls in Managing Microservices on the Cloud

Running microservices on cloud platforms introduces distinct challenges. These are not flaws of the model, but realities that must be addressed through deliberate design and operational maturity.

- Service Fragmentation and Complexity

Complexity grows with the number of microservices. Overly fine-grained services create unnecessary network overhead, increase latency, and complicate deployments. Begin with broader service boundaries and refine them only when justified by scaling needs or business logic. Each service should have a clear purpose and deliver value independently.

- Operational Overhead at Scale

Each new service adds configuration, deployment, and monitoring effort. Without standardized automation, teams quickly become overwhelmed. Use infrastructure as code to manage deployments, implement consistent service ownership, and centralize logging and configuration management early.

- Limited Observability in Distributed Systems

In cloud-native systems, services are deployed across zones, containers, and sometimes multiple platforms. Tracing issues becomes difficult without integrated observability. Set up distributed tracing and service-level monitoring from the start. Focus on actionable metrics - latency, errors, saturation - rather than infrastructure-level noise.

- Unreliable Service Communication

Inter-service calls are vulnerable to network failures, version mismatches, and inconsistent APIs. Use service meshes or API gateways to manage traffic, enforce versioning, and isolate failing components before they cascade. Design APIs with backward compatibility and clear contracts.

- Waste of Resources and High Expenses

Cloud platforms make it easy to create resources and just as easy to forget them. Use infrastructure as code and tagging strategies to track assets, enforce quotas, and prevent unused resources from accumulating. Automate cleanup of temporary environments and monitor usage to control cost.

- Inadequate Testing for Real-World Conditions

Testing isolated units of code isn’t enough in distributed systems. Add contract tests, integration tests, and resilience testing, such as fault injection or simulated traffic spikes, to verify behavior under failure. Chaos engineering tools help expose weak points early.
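
A contract test checks that a provider's response still contains what its consumers rely on. The sketch below is a minimal hand-rolled version in Python to show the idea; dedicated contract-testing tools do considerably more. The `CONTRACT` fields and the sample responses are hypothetical.

```python
from typing import Any, Dict, List

# What a hypothetical consumer of the orders API depends on: field name -> type.
CONTRACT = {"order_id": str, "status": str, "total_cents": int}


def contract_violations(response: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """List every way `response` breaks `contract`; extra fields are allowed."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(f"wrong type for {field}: expected {expected_type.__name__}")
    return problems


# Adding a field is backward compatible, so this version passes.
additive_change = {"order_id": "o-1", "status": "paid", "total_cents": 1250, "currency": "EUR"}
# Renaming a field breaks every consumer that reads the old name.
breaking_change = {"order_id": "o-1", "status": "paid", "total": 1250}

additive_result = contract_violations(additive_change, CONTRACT)
breaking_result = contract_violations(breaking_change, CONTRACT)
print(additive_result, breaking_result)  # [] ['missing field: total_cents']
```

Running a check like this in the provider's pipeline catches breaking API changes before deployment, without requiring the consumer services to be running.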

Cloud-Native Microservices in Action

Organizations in different industries are using microservices on cloud platforms to solve real operational challenges. While their goals vary, the benefits are often measurable and tied to specific improvements in performance, reliability, and delivery speed.

- Financial Services Provider: Improved Scalability and Compliance

A large financial institution transitioned critical banking services to a microservices architecture on the cloud. This migration enabled them to isolate services by region to meet regulatory compliance requirements. The move reduced service deployment time by 45% and improved system availability to 99.9%. Enhanced monitoring and automation reduced incident response times by 30%, ensuring regulatory audits were completed with zero non-compliance findings.

Key benefits:

- Faster, region-specific service deployment meeting compliance needs

- Increased availability supporting critical financial transactions

- Reduced incident response times improving operational resilience

- Logistics Company: Cost Efficiency and Dynamic Scaling

A global logistics provider restructured its tracking and delivery platform into microservices deployed on a cloud platform with automated scaling. This approach cut cloud infrastructure costs by 35% through dynamic resource allocation during demand peaks and troughs. Service reliability improved, with a 20% decrease in downtime during high-traffic periods, ensuring consistent customer satisfaction.

Key benefits:

- Optimized cloud costs aligned with actual demand

- Improved uptime during peak logistics operations

- Enhanced customer experience through stable services

- Healthcare Platform: Enhanced Security and Operational Agility

A healthcare technology company migrated patient management and diagnostic services to microservices on the cloud to address privacy regulations and data volume growth. By implementing service-level access controls and centralized logging, they reduced security incident investigation times by 60%. The modular architecture accelerated feature delivery by 50%, allowing quicker responses to evolving healthcare needs.

Key benefits:

- Stronger security with detailed access controls and audit trails

- Faster feature deployment responding to market changes

- Accelerated incident resolution protecting sensitive health data

- Software Development Firm: Increased Developer Productivity and Deployment Speed

A software company decomposed a legacy monolithic application into microservices hosted on a Kubernetes platform. This transformation enabled autonomous teams to deploy independently, increasing deployment frequency by over 400%. Automated CI/CD pipelines and monitoring reduced rollback times from hours to under 10 minutes, significantly improving service reliability and developer efficiency.

Key benefits:

- Dramatic increase in deployment speed and frequency

- Reduced downtime through faster rollback capabilities

- Enhanced team productivity with independent service ownership

Microservices are not a one-size-fits-all solution, but when applied with clear intent and domain understanding, they allow organizations to adapt and grow with greater precision. The cloud offers the flexibility to evolve these systems over time, if the foundation is built with care.