In today's digital world, businesses rely on three core systems to handle their data effectively: OLTP (Online Transaction Processing) for processing daily transactions, OLAP (Online Analytical Processing) for analyzing business performance, and ETL (Extract, Transform, Load) for moving and transforming data between systems. Together, these systems enable seamless data flow, allowing organizations to maintain real-time operations while gaining actionable insights from their data.

OLTP acts as a digital storefront for businesses, managing immediate tasks like order processing, payment transactions, and inventory updates. Meanwhile, OLAP operates behind the scenes, helping businesses identify trends and patterns through analytical queries. ETL serves as the crucial bridge between these systems, extracting data from OLTP systems, transforming it for consistency and accuracy, and loading it into OLAP systems for in-depth analysis.

The Role of Cloud in Data Management

The shift to cloud computing has revolutionized how organizations deploy and manage OLTP, OLAP, and ETL systems. Cloud platforms offer distinct advantages:

- Cost Efficiency: Pay only for the resources used, eliminating large upfront hardware costs.

- Scalability: Automatically scale up or down based on demand, supporting growth and seasonal spikes.

- Reliability: Leverage enterprise-grade infrastructure with built-in redundancy for high availability.

- Accessibility: Access systems from anywhere, empowering remote and global teams.

- Automatic Updates: Benefit from continuous improvements without the need for manual maintenance.

This evolution allows businesses to be more agile and responsive, but it also requires a careful balance of performance, data consistency, and cost. This blog will explore OLTP, OLAP, and ETL in depth, providing practical approaches to implementing and optimizing these systems in cloud environments. Whether planning new infrastructure or upgrading existing systems, understanding these core components is crucial for informed decision-making in today’s fast-paced, data-driven world.

1. Understanding OLTP (Online Transaction Processing)

OLTP (Online Transaction Processing) systems are essential for real-time business operations, managing high volumes of short, atomic transactions, such as order processing, financial transactions, and customer account updates. Designed for speed, OLTP systems prioritize efficient transaction processing to support environments where data needs to be updated instantly and consistently.

Key Features and Use Cases of OLTP

OLTP systems are distinguished by their ACID compliance (Atomicity, Consistency, Isolation, Durability), ensuring each transaction is reliably processed in full. Common use cases include e-commerce transactions, financial transfers, and inventory updates, where real-time data integrity and speed are paramount. These systems must handle numerous concurrent transactions, making them indispensable for businesses that rely on rapid data updates and accuracy.
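To make atomicity concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `accounts` table, the `transfer` function, and the overdraft rule are illustrative assumptions, not part of any particular product; the point is only that a failed step undoes the whole transaction.

```python
import sqlite3

def transfer(conn, src, dst, amount):
    """Move `amount` from `src` to `dst` atomically; return True on success."""
    try:
        # `with conn` commits if the block succeeds and rolls back if it raises.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected an overdraft, so the credit above is undone too.
        return False

# Hypothetical schema: balances may never go negative.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()

ok = transfer(conn, 1, 2, 30)       # succeeds: balances become 70 and 80
failed = transfer(conn, 1, 2, 500)  # would overdraw account 1, so nothing changes
balances = dict(conn.execute("SELECT id, balance FROM accounts"))
```

After the failed transfer, account 2 has not kept the credit it briefly received, which is the "all or nothing" behavior ACID describes.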

OLTP Systems: Traditional vs. Cloud Environments

Historically, OLTP systems have been deployed in on-premises environments with dedicated hardware to ensure performance and reliability. However, cloud-native OLTP solutions like Amazon Aurora and Google Cloud SQL now provide managed, scalable alternatives with reduced infrastructure demands and a pay-as-you-go model. Additionally, hybrid OLTP architectures are common for organizations requiring a balance of on-premises control with the scalability benefits of cloud infrastructure, ideal for phased cloud migrations.

Decision Matrix for Choosing an OLTP System

For organizations deciding between on-premises, cloud-native, and hybrid OLTP systems, the following decision matrix offers a comparative overview based on critical factors like scalability, cost, and performance optimization:

| Criteria | On-Premises OLTP | Cloud-Native OLTP (e.g., Aurora, Cloud SQL) | Hybrid OLTP |
| --- | --- | --- | --- |
| Scalability | Limited; requires hardware upgrades | Highly scalable; auto-scaling options available | Moderate; limited by on-premises capacity |
| Cost | High upfront cost, lower ongoing costs | Pay-as-you-go; reduces capital expenditure | Mixed; pay-as-you-go for cloud, capex for on-premises |
| Maintenance | Requires dedicated IT team for updates | Minimal; handled by cloud provider | Requires both in-house and cloud management |
| High Availability | Achieved with redundant hardware setup | Built-in redundancy across zones | Depends on configuration |
| Compliance | Easier to control for strict requirements | Compliant with most standards, depending on provider | Suitable for gradual migration of sensitive data |
| Performance Optimization | Customizable; optimized for specific needs | Provider offers built-in optimization features | Mixed; may require separate optimizations |
| Data Residency | Full control over data location | Dependent on provider’s data centers | Can maintain some data on-premises |

Examples of OLTP Systems

OLTP systems commonly use databases like MySQL and PostgreSQL for their reliability, scalability, and extensive support. Cloud-native counterparts, such as Amazon Aurora and Google Cloud SQL, provide managed services that simplify deployment and scaling, making them increasingly popular for organizations adopting cloud infrastructure.

Challenges in OLTP

Despite their strengths, OLTP systems face several challenges, especially as organizations scale. Managing transactional workloads while maintaining ACID compliance can be complex and resource-intensive. Additionally, high availability is critical, as downtime can lead to business disruptions. Ensuring optimal performance often involves strategies like indexing, caching, and query optimization to maintain speed and efficiency under heavy loads.

2. Understanding OLAP (Online Analytical Processing)

OLAP (Online Analytical Processing) is a key technology that allows organizations to perform complex queries and analyze large volumes of data quickly and efficiently. OLAP systems are designed to support business intelligence (BI), data mining, and reporting by providing powerful analytical capabilities, which make them well-suited for data-driven decision-making.

Key Features and Use Cases of OLAP

- Dimensional Modeling Concepts: OLAP structures data using a multi-dimensional model, which organizes data into dimensions and measures. This structure, often visualized as a data cube, enables fast retrieval of data for analysis, even on a large scale.

- Example: A retail company may use OLAP to analyze sales by different dimensions, such as time (daily, weekly), geography (regions, stores), and product categories.

- Analysis Capabilities: OLAP allows users to perform complex calculations, aggregations, and comparisons across different data dimensions.

- Example: A financial analyst might use OLAP to view revenue changes across quarters, broken down by regions and customer segments.

- Reporting Functions: OLAP systems support robust reporting tools, allowing organizations to create custom reports, dashboards, and visualizations. These reports aid in trend analysis, forecasting, and identifying business insights.
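The retail example above can be sketched as a tiny star schema: one fact table of sales joined to dimension tables, then rolled up along whichever dimensions the analyst picks. This is only an illustration using Python's built-in `sqlite3`; the table names, the sample rows, and the `sales_by` helper are assumptions made for the sketch, and a production OLAP engine would do the same thing at far larger scale.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_store   (store_id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE fact_sales  (sale_date TEXT, store_id INTEGER, product_id INTEGER, amount REAL);
INSERT INTO dim_store VALUES (1, 'North'), (2, 'South');
INSERT INTO dim_product VALUES (10, 'Books'), (11, 'Toys');
INSERT INTO fact_sales VALUES
  ('2024-01-05', 1, 10, 20.0),
  ('2024-01-06', 1, 11, 35.0),
  ('2024-01-06', 2, 10, 15.0),
  ('2024-02-01', 2, 11, 40.0);
""")

# Whitelist of dimensions an analyst may group by (keeps the SQL safe to build).
DIMENSIONS = {
    "region": "s.region",
    "category": "p.category",
    "month": "substr(f.sale_date, 1, 7)",
}

def sales_by(conn, *dims):
    """Total the sales measure along the requested dimensions."""
    cols = ", ".join(f"{DIMENSIONS[d]} AS {d}" for d in dims)
    positions = ", ".join(str(i + 1) for i in range(len(dims)))
    sql = f"""
        SELECT {cols}, SUM(f.amount) AS total
        FROM fact_sales f
        JOIN dim_store s ON s.store_id = f.store_id
        JOIN dim_product p ON p.product_id = f.product_id
        GROUP BY {positions}
        ORDER BY {positions}
    """
    return conn.execute(sql).fetchall()

by_region = sales_by(conn, "region")
by_month_and_category = sales_by(conn, "month", "category")
```

Calling `sales_by` with different dimension names corresponds to slicing the same cube along different axes.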

OLAP Systems: How They Differ from OLTP

OLAP and OLTP systems have different architectural designs and purposes:

- Architectural Differences: OLAP systems are built for read-intensive workloads with large data sets, while OLTP systems are designed for high-speed, transactional updates.

- Data Organization: OLAP stores data in a multi-dimensional format for easy analysis, whereas OLTP relies on relational databases with tables optimized for transactions.

- Query Patterns and Optimization: OLAP queries often involve complex aggregations and joins, requiring extensive optimization for speed, while OLTP queries are usually simpler and focused on individual records.

Cloud-Based OLAP Systems

Modern OLAP systems are often deployed in the cloud to leverage scalability and flexibility. Key cloud-based OLAP solutions include:

- Amazon Redshift: Amazon Redshift provides a massively parallel processing (MPP) architecture, making it efficient for large-scale data analytics. It supports columnar storage and allows for quick query performance on terabyte-scale datasets.

- Google BigQuery: BigQuery is a serverless, highly scalable OLAP solution with built-in machine learning capabilities. Its pay-as-you-go pricing model and support for SQL-based queries make it accessible for businesses of all sizes.

- Azure Synapse Analytics: Microsoft’s Synapse Analytics combines big data and data warehousing capabilities, allowing users to query both structured and unstructured data with optimized analytics performance.

Challenges in OLAP

- Query Performance Optimization: Running complex queries on large datasets can impact performance. Techniques like indexing, partitioning, and caching can help optimize query response times.

- Cost Management Strategies: OLAP systems, especially in the cloud, can become costly due to data storage and processing requirements. Solutions include auto-scaling, data archiving, and tiered storage.

- Data Freshness vs. Performance: Balancing data freshness and query speed is challenging. Many companies use a data staging area or hybrid approach to manage data freshness.

- Resource Allocation: Allocating the right amount of computational resources is essential for ensuring performance without overspending. This involves configuring resources based on query frequency and load patterns.

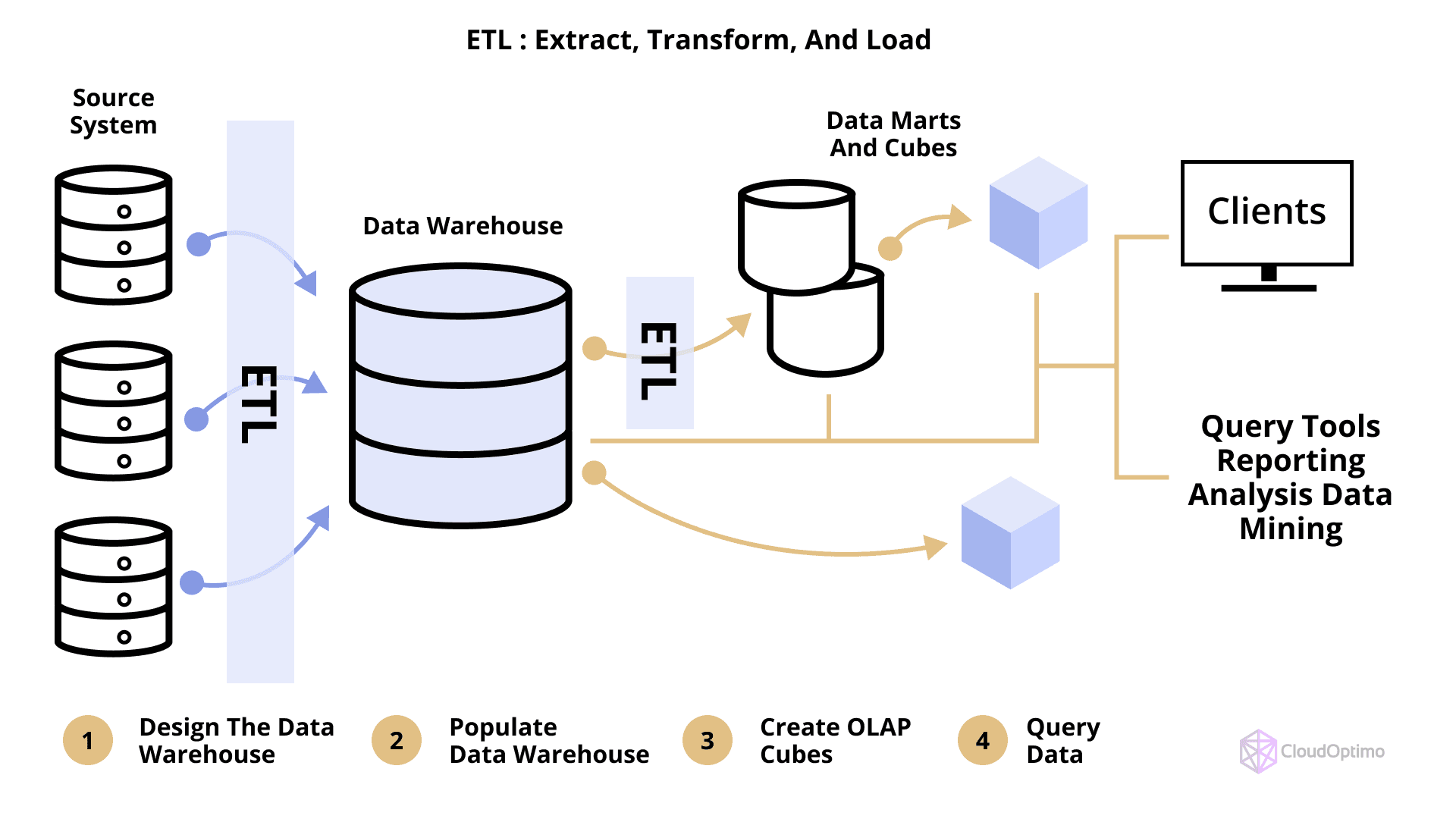

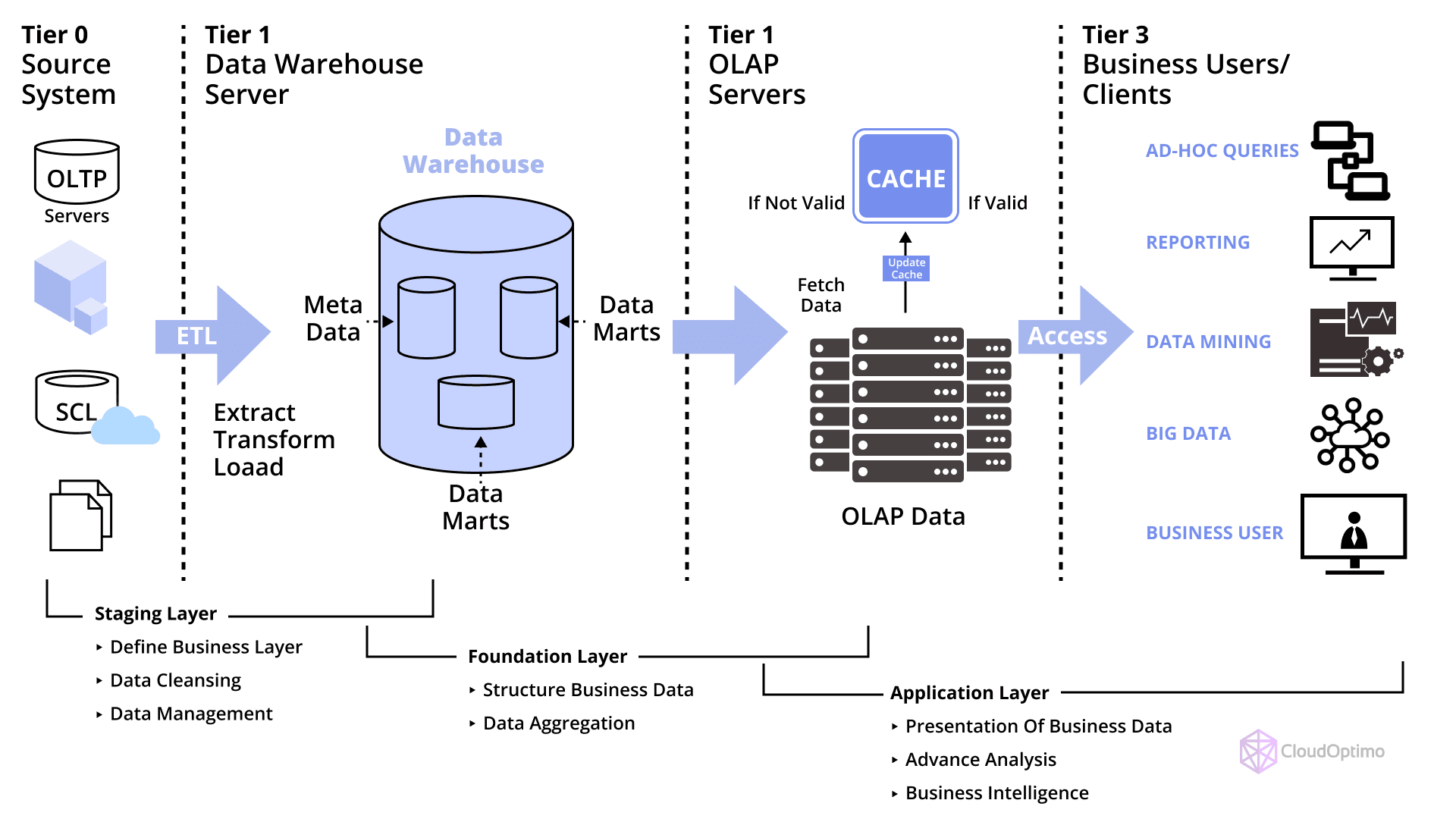

3. The Role of ETL in Data Pipelines

ETL (Extract, Transform, Load) is the backbone of data pipelines, enabling the smooth flow of data between OLTP and OLAP systems. ETL processes extract data from various sources, transform it into a suitable format, and load it into a data warehouse or other storage solution.

What is ETL (Extract, Transform, Load)?

- ETL Process Components:

- Extract: Data is pulled from multiple sources, which could include databases, applications, APIs, or files.

- Transform: The extracted data is cleansed, filtered, and transformed to align with the target system’s structure and requirements.

- Load: The transformed data is then loaded into a target data store, such as a data warehouse, where it becomes accessible for analytics and reporting.

- Data Transformation Patterns: Transformations can include data cleansing, aggregation, and format conversions, which help to standardize data for analytical queries.

- Loading Strategies: ETL processes can employ batch loading for periodic data updates or streaming for real-time data requirements, depending on the analytical needs.
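The three stages can be sketched in a few lines of Python. This is a minimal batch example under stated assumptions: both databases are in-memory SQLite, and the `orders` and `fact_orders` tables and their columns are hypothetical stand-ins for a real source and warehouse.

```python
import sqlite3

def extract(source):
    """Extract: read raw order rows from the transactional source."""
    return source.execute(
        "SELECT order_id, customer, amount_cents, status FROM orders"
    ).fetchall()

def transform(rows):
    """Transform: keep completed orders, tidy names, convert cents to units."""
    return [
        (order_id, customer.strip().title(), amount_cents / 100)
        for order_id, customer, amount_cents, status in rows
        if status == "completed"
    ]

def load(target, rows):
    """Load: write the cleaned rows into the analytical table in one transaction."""
    with target:
        target.executemany(
            "INSERT OR REPLACE INTO fact_orders VALUES (?, ?, ?)", rows
        )

# Hypothetical source (OLTP) and target (warehouse) databases.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, "
    "amount_cents INTEGER, status TEXT)"
)
source.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", [
    (1, "  alice ", 1999, "completed"),
    (2, "BOB", 500, "cancelled"),
    (3, "carol", 250, "completed"),
])

target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE fact_orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)

load(target, transform(extract(source)))
loaded = target.execute("SELECT * FROM fact_orders ORDER BY order_id").fetchall()
```

Keeping each stage as its own function makes it easier to test the transformation logic without touching either database.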

ETL's Connection Between OLTP and OLAP

ETL serves as the link between OLTP and OLAP, enabling data to move seamlessly from transactional systems to analytical environments.

- Data Flow Architectures: ETL ensures data flows in a structured way from OLTP to OLAP, supporting BI and analytics.

- Integration Patterns: ETL can integrate data across various formats and systems, allowing OLAP to aggregate data from multiple OLTP sources.

- Synchronization Strategies: ETL processes keep data synchronized by updating OLAP data stores based on changes in OLTP systems, typically on a scheduled or near-real-time basis.
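One common way to keep the analytical copy in sync on a schedule is a high-water mark: each run copies only rows whose change timestamp is newer than anything already loaded. The sketch below assumes a hypothetical `orders` table with an `updated_at` column stored as ISO 8601 text; it does not capture deletes, and rows sharing the exact watermark timestamp could be missed, so real pipelines often use change data capture instead.

```python
import sqlite3

def sync_changes(source, target):
    """Copy rows changed since the last run; return how many were copied."""
    (watermark,) = target.execute(
        "SELECT COALESCE(MAX(updated_at), '') FROM orders_copy"
    ).fetchone()
    changed = source.execute(
        "SELECT order_id, status, updated_at FROM orders WHERE updated_at > ?",
        (watermark,),
    ).fetchall()
    with target:
        # Upsert so that an updated order replaces its older copy.
        target.executemany(
            "INSERT OR REPLACE INTO orders_copy VALUES (?, ?, ?)", changed
        )
    return len(changed)

source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)"
)
target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE orders_copy (order_id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)"
)

source.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, "placed", "2024-01-01T10:00:00"),
    (2, "placed", "2024-01-01T10:05:00"),
])
first_run = sync_changes(source, target)   # copies both rows

source.execute(
    "UPDATE orders SET status = 'shipped', updated_at = '2024-01-01T11:00:00' "
    "WHERE order_id = 1"
)
source.execute("INSERT INTO orders VALUES (3, 'placed', '2024-01-01T11:30:00')")
second_run = sync_changes(source, target)  # copies only the changed and new rows
third_run = sync_changes(source, target)   # nothing new to copy
```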

Cloud-Based ETL Tools

- AWS Glue: AWS Glue is a serverless ETL service that simplifies data preparation and movement in AWS environments. It features a built-in data catalog, automated schema discovery, and machine-learning-powered transformations.

- Azure Data Factory: Azure’s ETL tool provides a drag-and-drop interface for building ETL workflows, with connectors for various data sources, both cloud and on-premises.

- Google Cloud Dataflow: Dataflow offers real-time ETL capabilities, supporting both stream and batch processing. It is fully managed and scales automatically to accommodate large data volumes.

Challenges in ETL

- Data Quality Management: Ensuring high data quality is crucial, as ETL involves merging data from different sources. ETL tools often include data validation and cleansing features to address this.

- Performance Optimization: Large-scale ETL processes can become time-consuming. Techniques like parallel processing, partitioning, and incremental loading can improve efficiency.

- Error Handling: ETL jobs can encounter errors during data extraction, transformation, or loading. Modern ETL tools include error-handling mechanisms to log and address issues.

- Monitoring and Alerting: ETL processes require continuous monitoring to detect delays or failures. Cloud-based ETL tools offer built-in monitoring and alerting capabilities, ensuring reliable data movement across systems.
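As a rough illustration of the data quality checks mentioned above, the sketch below separates valid records from rejected ones and records why each was rejected. The field names and the three rules (missing value, duplicate key, negative amount) are assumptions chosen for the example; real pipelines would define rules per dataset.

```python
def validate(records, required=("order_id", "amount")):
    """Split records into (valid, rejected), attaching reasons to rejects."""
    seen_ids = set()
    valid, rejected = [], []
    for record in records:
        problems = [f"missing {field}" for field in required if record.get(field) is None]
        if not problems:
            if record["order_id"] in seen_ids:
                problems.append("duplicate order_id")
            elif record["amount"] < 0:
                problems.append("negative amount")
        if problems:
            rejected.append((record, problems))
        else:
            seen_ids.add(record["order_id"])
            valid.append(record)
    return valid, rejected

records = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 1, "amount": 20.0},   # duplicate of the first record
    {"order_id": 2, "amount": None},   # missing value
    {"order_id": 3, "amount": -5.0},   # fails the range check
    {"order_id": 4, "amount": 12.5},
]
valid, rejected = validate(records)
valid_ids = [r["order_id"] for r in valid]
reasons = [problems for _, problems in rejected]
```

Returning the rejects with their reasons, rather than silently dropping them, gives the monitoring step something concrete to alert on.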

4. How OLTP, OLAP, and ETL Work Together

In modern data architectures, OLTP, OLAP, and ETL systems are interconnected to facilitate seamless data processing and analysis. By integrating these three systems, businesses can gather, process, and analyze data effectively, enabling real-time decision-making and long-term strategic insights.

ETL as the Bridge Between OLTP and OLAP

ETL (Extract, Transform, Load) is the critical process that links OLTP (Online Transaction Processing) systems, where data is created, to OLAP (Online Analytical Processing) systems, where data is analyzed. ETL extracts transactional data from OLTP systems, transforms it into a format suitable for analysis, and loads it into an OLAP data warehouse.

Integration Architecture and Data Flow Patterns

ETL processes follow several architectural patterns depending on the data requirements:

- Batch Processing: Large volumes of data are processed at scheduled intervals, suitable for non-urgent reports (e.g., daily sales reports).

- Streaming ETL: For real-time insights, data flows continuously from OLTP to OLAP systems, enabling immediate analysis. This pattern is beneficial for real-time customer insights in sectors like e-commerce and finance.

- Micro-Batch Processing: A blend of batch and real-time processing, where data is loaded at shorter intervals, allowing near real-time insights.
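Micro-batching can be sketched as grouping a continuous stream of events into small chunks and processing each chunk as a unit. The version below batches by count for simplicity; batching by a time window is equally common, and the `micro_batches` helper is a hypothetical name for this sketch.

```python
from itertools import islice

def micro_batches(events, batch_size):
    """Yield successive lists of at most `batch_size` events from any iterable."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

# Seven incoming events, processed three at a time.
events = range(1, 8)
batches = list(micro_batches(events, 3))
batch_totals = [sum(batch) for batch in batches]
```

A batch size of one approximates streaming, while a very large batch size approximates a scheduled batch job, which is why micro-batching sits between the two.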

Example Data Pipeline

Seeing how OLTP, OLAP, and ETL work in real-world scenarios clarifies their collaborative role. Here are two typical pipelines, one from e-commerce and one from financial services:

- E-commerce System Architecture: An OLTP system records each customer transaction (e.g., purchases, returns) in real-time. The ETL process extracts this data periodically and loads it into an OLAP system.

- Financial Data Processing: In financial services, multiple OLTP systems capture transaction data (e.g., deposits, withdrawals). ETL consolidates this data into a centralized OLAP system, where it's analyzed for insights like fraud detection or customer trends.

Table: OLTP, OLAP, and ETL Data Flow Example

| Component | E-commerce Pipeline | Financial Services Pipeline |
| --- | --- | --- |
| OLTP | Real-time orders, inventory updates | Account transactions, fund transfers |
| ETL | Periodic extraction, data transformation | Consolidated data integration, anomaly checks |
| OLAP | Customer buying trends, inventory forecasting | Trend analysis, fraud detection |

Real-Time Data Processing with ETL

With real-time ETL processing, data is transformed and analyzed as soon as it’s generated, making it essential for applications requiring instant feedback.

- Streaming Architectures: Tools like Apache Kafka or Amazon Kinesis enable high-speed data streaming, ideal for processing continuous data.

- Real-Time ETL Patterns: Using tools like Google Cloud Dataflow, businesses can perform real-time data transformations as new data arrives.

- Lambda and Kappa Architectures:

- Lambda Architecture: Divides data processing between batch and real-time layers, ideal for applications needing both historical and real-time insights.

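- Kappa Architecture: Treats all data as a stream and uses a single stream-processing layer; historical results are recomputed by replaying the event log rather than by a separate batch layer. It suits applications where immediate feedback is critical, such as fraud detection.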
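The difference between the two architectures can be shown with a toy aggregation. In this sketch (the event shape and the `count_orders` function are assumptions for illustration), the Lambda approach merges a precomputed batch view with a speed-layer view, while the Kappa approach runs one stream job over the whole event log.

```python
from collections import Counter

def count_orders(events):
    """Aggregate order counts per product from an iterable of events."""
    return Counter(event["product"] for event in events)

historical = [{"product": "book"}, {"product": "toy"}, {"product": "book"}]
recent = [{"product": "toy"}, {"product": "game"}]

# Lambda: a batch layer covers history, a speed layer covers events that
# arrived since the last batch run, and the serving layer merges both views.
batch_view = count_orders(historical)
speed_view = count_orders(recent)
lambda_result = batch_view + speed_view

# Kappa: a single stream-processing job consumes the entire log in order.
kappa_result = count_orders(historical + recent)
```

Both approaches arrive at the same answer here; the trade-off is that Lambda maintains two code paths, while Kappa relies on being able to replay the log.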

5. Cloud Optimization

With cloud-based OLTP, OLAP, and ETL systems, organizations gain access to scalability, flexibility, and cost efficiency. Cloud optimization techniques ensure these systems remain high-performing without excessive costs.

Benefits of Cloud for Data Systems

The cloud introduces significant advantages, particularly for scaling data pipelines and managing complex workloads.

- Scalability Patterns: Cloud services offer both vertical scaling (adding resources to a single node) and horizontal scaling (adding more nodes). This flexibility supports fluctuating workloads, enabling OLTP systems to handle high transaction rates and OLAP systems to process large data volumes.

- Flexibility Options: Organizations can choose from a variety of cloud services, each optimized for specific workloads, whether for transactional data (OLTP), analytical data (OLAP), or transformation tasks (ETL).

- Cost Optimization Strategies: Cloud providers like AWS, Google Cloud, and Azure offer a pay-as-you-go model, making it easy to avoid upfront infrastructure costs and scale resources based on demand.

Table: Cloud Benefits for OLTP, OLAP, and ETL

| Benefit | OLTP Impact | OLAP Impact | ETL Impact |
| --- | --- | --- | --- |
| Scalability | Supports high transaction volumes | Manages large datasets | Processes high-volume data quickly |
| Flexibility | Adapts to transaction spikes | Adds/modifies analytical tools | Handles various data sources easily |
| Cost Savings | Reduced hardware expenses | Optimized storage costs | Cost-effective data movement |

Serverless and Scalable Architectures

Serverless architectures allow businesses to focus on code and configuration without managing infrastructure. This approach is particularly valuable for ETL and OLAP tasks that require resource elasticity.

- AWS Lambda: Ideal for running lightweight ETL functions, such as cleaning or transforming data as it’s loaded.

- Google Cloud Functions: Supports a range of ETL tasks, including API integrations and data validation.

- Azure Functions: Useful for processing transactional data in real time or for running ETL tasks that respond to file uploads.

Performance and Cost Optimization

Optimizing performance and managing costs are essential to maintaining efficient cloud-based data architectures.

- Resource Management: Effective resource allocation involves setting thresholds to prevent excessive use and configuring autoscaling for high-demand periods.

- Cost Monitoring: Using tools like AWS Cost Explorer, Google Cloud Billing, or Azure Cost Management, businesses can track spending, set budgets, and analyze usage.

- Performance Tuning: Key strategies for tuning include:

- OLTP: Implementing database indexing, caching, and optimizing queries.

- OLAP: Partitioning data, setting data retention policies, and using columnar storage.

- ETL: Configuring batch sizes, minimizing transformation steps, and leveraging in-memory processing for fast transformations.
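As a small demonstration of the indexing advice for OLTP, the sketch below asks SQLite for its query plan before and after adding an index. The `orders` table and the `plan` helper are hypothetical, and the exact wording of the plan text varies between SQLite versions, so treat the output as indicative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

def plan(conn, sql, params=()):
    """Return SQLite's description of how it would execute `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " ".join(row[3] for row in rows)  # column 3 holds the detail text

query = "SELECT total FROM orders WHERE customer_id = ?"

before = plan(conn, query, (42,))   # typically reports a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(conn, query, (42,))    # typically reports a search using the index

print(before)
print(after)
```

Most relational databases offer a comparable `EXPLAIN` facility, which is a useful first stop before adding or removing indexes.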

6. Key Differences Between OLTP and OLAP

OLTP (Online Transaction Processing) and OLAP (Online Analytical Processing) systems serve different purposes and operate under distinct design principles. Here’s a closer look at their differences in terms of data structure, query types, and storage performance.

Data Structure

The data structure in OLTP and OLAP systems varies significantly to optimize their respective workflows.

- Relational Schemas:

- OLTP: Uses highly normalized schemas, typically in the form of relational tables to avoid data redundancy. This structure supports frequent insertions, updates, and deletions efficiently.

- OLAP: Uses dimensional models like star and snowflake schemas, which support complex queries on large data volumes. The denormalized structure reduces join operations and is optimized for reading.

- Dimensional Modeling:

- OLAP relies on dimensional modeling to organize data into dimensions (e.g., time, product) and facts (e.g., sales, revenue) for intuitive reporting and analysis.

- Storage Optimizations:

- OLTP systems use row-based storage to quickly retrieve single rows, optimizing transactional performance.

- OLAP systems use columnar storage to retrieve large datasets for analysis, enhancing query performance for aggregated results.
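The row-versus-column distinction can be pictured with plain Python data structures. This is a conceptual sketch only (real engines add compression, encoding, and on-disk layouts), and the sample records are made up for the example.

```python
# Row-oriented layout: each record is stored together (OLTP style).
rows = [
    {"order_id": 1, "region": "North", "amount": 20.0},
    {"order_id": 2, "region": "South", "amount": 35.0},
    {"order_id": 3, "region": "North", "amount": 15.0},
]

def to_columns(rows):
    """Pivot a list of records into one list of values per column (OLAP style)."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns

columns = to_columns(rows)

# Row access: fetch one complete record, as a transactional lookup would.
order_2 = next(row for row in rows if row["order_id"] == 2)

# Column access: aggregate one attribute without reading the others.
total_amount = sum(columns["amount"])
```

The aggregation touches only the `amount` list, which is the reason columnar storage reads less data for analytical queries.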

Query Types

The types of queries in OLTP and OLAP systems differ due to their distinct roles:

- Transaction Processing Patterns:

- OLTP: Focuses on CRUD operations (Create, Read, Update, Delete) that are short and frequent, such as customer purchases and account updates.

- OLAP: Optimized for complex analytical queries requiring large data scans, aggregations, and joins for reporting and analysis.

- Analytical Query Optimization:

- OLAP queries benefit from indexing, partitioning, and caching to retrieve large datasets quickly, while OLTP queries prioritize latency and data integrity.

- Hybrid Workload Management:

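- Some platforms aim to serve mixed workloads from one system, an approach often called HTAP (hybrid transactional/analytical processing); TiDB and SingleStore are examples. Google BigQuery, by contrast, is an analytical warehouse and is not designed for high-rate transactional updates.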

Table: Query Type Differences

| Feature | OLTP | OLAP |
| --- | --- | --- |
| Query Type | Short, frequent transactions | Complex aggregations, long-running |
| Focus | CRUD operations | Summarization, trend analysis |
| Optimization | Indexing, normalization | Partitioning, caching, indexing |

Storage and Performance

Storage and performance strategies differ to cater to the needs of OLTP and OLAP systems.

- Storage Architecture Choices:

- OLTP: Uses traditional relational databases (e.g., MySQL, PostgreSQL) with row-based storage for efficient transactional data handling.

- OLAP: Relies on data warehouses or columnar databases (e.g., Amazon Redshift, Snowflake) to handle large datasets and provide fast analytics.

- Performance Optimization Techniques:

- OLTP: Prioritizes response time through indexing and caching, ensuring ACID compliance.

- OLAP: Optimizes query performance through partitioning and compression, enhancing data retrieval speeds.

- Caching Strategies:

- OLTP: Implements write-through and read-through caching to handle frequent updates.

- OLAP: Uses in-memory caching for accelerating data retrieval in analytics.
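Read-through and write-through caching can be sketched with a small wrapper class. Here a plain dictionary stands in for the database, and the class name and the `store_reads` counter are illustrative assumptions; a real deployment would more likely place Redis or Memcached in front of the database.

```python
class ReadWriteThroughCache:
    """Cache that loads on miss (read-through) and writes to both layers (write-through)."""

    def __init__(self, store):
        self.store = store      # stands in for the backing database
        self.cache = {}
        self.store_reads = 0    # counts how often the backing store is read

    def get(self, key):
        # Read-through: on a miss, load from the store and remember the value.
        if key not in self.cache:
            self.store_reads += 1
            self.cache[key] = self.store[key]
        return self.cache[key]

    def put(self, key, value):
        # Write-through: update the store and the cache together so they agree.
        self.store[key] = value
        self.cache[key] = value

inventory_db = {"sku-1": 10}
cache = ReadWriteThroughCache(inventory_db)

first = cache.get("sku-1")    # miss: reads the store
second = cache.get("sku-1")   # hit: served from memory
cache.put("sku-1", 9)         # stock changes; both layers are updated
third = cache.get("sku-1")    # hit: reflects the new value
```

Write-through keeps the cache consistent at the cost of making every write touch both layers.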

7. Choosing the Right Technology

Selecting the right technology depends on understanding business needs and workload characteristics.

Selection Criteria

- Workload Analysis: Identify whether the primary workload is transactional (OLTP) or analytical (OLAP) and select systems optimized for that workload.

- Scaling Requirements: Consider future growth and select systems that offer scalability options, whether horizontal or vertical.

- Integration Needs: Choose tools that easily integrate with your existing tech stack, including ETL tools and cloud services.

Tools Comparison

Several tools from major cloud providers offer OLTP, OLAP, and ETL services, each with unique features and cost considerations.

Table: Tools Comparison

| Provider | OLTP Solution | OLAP Solution | ETL Tool |
| --- | --- | --- | --- |
| AWS | Amazon RDS | Amazon Redshift | AWS Glue |
| Google Cloud | Cloud SQL | BigQuery | Dataflow |
| Azure | Azure SQL Database | Azure Synapse | Azure Data Factory |

Implementation Considerations

- Maintenance Requirements: Cloud-native services reduce maintenance demands but may involve monitoring and tuning.

- Vendor Lock-In Risks: Avoid relying solely on one provider to reduce risk. Consider tools with multi-cloud support to prevent dependency.

- Migration Strategies: For existing systems, plan for data migration to the cloud, using incremental data transfer and hybrid configurations.

A decision matrix for choosing between OLTP (Online Transaction Processing) and OLAP (Online Analytical Processing) technology should consider factors that match each system’s strength with your organization’s needs in areas like workload, integration, and cost.

| Criteria | OLTP | OLAP | Decision |
| --- | --- | --- | --- |
| Primary Workload Needs | High volume of short, real-time transactions (e.g., updates, inserts) | High volume of complex, read-heavy queries (e.g., reporting) | Select OLTP for frequent updates; OLAP for analysis |
| Data Storage | Row-based storage, optimized for quick access to specific records | Columnar storage, optimized for aggregations | Choose OLTP for transactional accuracy; OLAP for aggregations |
| Integration with Applications | Suited for front-end applications needing real-time access | Suited for BI tools and analytical dashboards | OLTP for operational use; OLAP for reporting use |
| Data Volume | Many small transactions; typically holds current operational data | Designed for large data volumes and historical data | OLAP is better for high-volume historical data |
| Data Consistency | Strict ACID compliance for transactional accuracy | Strict transactional guarantees are less critical; some staleness between loads is usually acceptable | OLTP for transactional consistency needs |
| Latency Requirements | Low latency, real-time processing | Higher latency acceptable for batch processing | OLTP for real-time needs; OLAP for batch processing |
| Scalability Needs | Horizontal scaling may be limited; vertical scaling often necessary | Highly scalable horizontally, can handle large data growth | OLAP for high growth; OLTP for moderate growth |
| Cost Considerations | Typically more cost-efficient for low to moderate data storage | Costlier due to large storage and processing power | OLTP for cost-sensitive environments; OLAP if budget allows |
| Operational Complexity | Simpler setup, easier to manage for transactional systems | Requires complex ETL (Extract, Transform, Load) pipelines | OLTP for simplicity; OLAP if capable resources are available |

- Choose OLTP if your focus is on managing real-time transactional data, with low latency and high consistency, and if integration with transactional applications is a priority.

- Choose OLAP if you need extensive data analysis, reporting, and business intelligence, with large historical datasets and complex querying capabilities.

8. Emerging Trends

Real-Time Analytics

Real-time analytics enables organizations to make decisions quickly and improve responsiveness.

- Stream Processing: Tools like Apache Kafka, Amazon Kinesis, and Google Pub/Sub handle continuous data streams for real-time ETL and analytics.

- Data Lake Integration: By combining data lakes with data warehouses, businesses can store both structured and unstructured data for comprehensive analysis.

- Real-Time Visualization: Tools like Tableau and Power BI provide instant insights, helping businesses react to trends in real time.

AI and ML Integration

AI and machine learning are increasingly integrated with data processing pipelines for predictive insights.

- Automated ETL: Machine learning models optimize ETL processes, automating tasks like data cleaning, anomaly detection, and categorization.

- Predictive Analytics: Predictive models provide insights into future trends, making them invaluable for risk assessment and demand forecasting.

- Machine Learning Pipelines: Managed services such as Google Vertex AI (the successor to AI Platform), Amazon SageMaker, and Azure Machine Learning simplify building and deploying ML pipelines.

Hybrid Architectures

Hybrid architectures are gaining popularity as businesses seek flexibility across cloud and on-premises systems.

- Multi-Cloud Strategies: Businesses avoid vendor lock-in and achieve redundancy by distributing their workloads across multiple cloud providers.

- Hybrid Deployment Patterns: Integrating on-premises and cloud-based systems helps organizations balance control and scalability.

- Data Sovereignty Considerations: For industries with data residency requirements, hybrid architectures ensure data remains within regulatory boundaries.

Table: Emerging Trends in Data Management

| Trend | Description | Example Tools |
| --- | --- | --- |
| Real-Time Analytics | Continuous data processing | Apache Kafka, Amazon Kinesis |
| AI and ML Integration | Automated insights and predictions | Amazon SageMaker, Google Vertex AI (formerly AI Platform) |
| Hybrid Architectures | Multi-cloud and hybrid deployments | Anthos, Azure Arc, AWS Outposts |

9. Common Challenges and Best Practices

In the complex world of data management, OLTP, OLAP, and ETL systems are foundational, yet they come with their own set of challenges. Below, we explore these challenges in detail and offer actionable best practices to tackle them effectively.

Data Integrity: Ensuring Consistency and Accuracy

Data integrity is vital for maintaining consistency, accuracy, and trustworthiness across databases. In both OLTP and OLAP systems, ensuring high data integrity guarantees that data remains reliable, especially when making critical business decisions.

- Consistency Mechanisms:

- OLTP systems rely on ACID properties (Atomicity, Consistency, Isolation, Durability) to ensure that transactions are processed reliably. This is crucial for real-time transactional systems, where even a minor error can lead to significant business disruptions (e.g., a failed payment transaction).

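- OLAP systems usually tolerate some staleness: data arrives through batch or streaming loads, so the warehouse can lag behind the source systems, and some distributed analytical stores offer only eventual consistency. This trade-off allows for higher scalability and performance, but load windows must be managed carefully to avoid reporting on partially loaded data.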

- Validation Procedures:

- Automated data validation during ETL processes ensures that only clean data is loaded into analytical systems. For example, implementing data quality checks at each step of the pipeline can detect issues like duplicate records, missing values, or outliers.

- Real-time validation using tools like AWS Glue and Azure Data Factory ensures that issues are detected and resolved quickly before they affect reporting and decision-making.

- Error Handling:

- Graceful error handling is crucial in maintaining system integrity. In OLTP systems, implementing rollback mechanisms ensures that incomplete or failed transactions do not corrupt the database.

- For ETL systems, retry mechanisms and dead-letter queues ensure that failed data processing tasks do not get lost but are retried or logged for later resolution.
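The retry and dead-letter pattern can be sketched as follows. The `process_with_retry` function, the item names, and the simulated failures are all hypothetical; real pipelines would typically add a delay or exponential backoff between attempts and persist the dead-letter queue somewhere durable.

```python
def process_with_retry(items, handler, max_attempts=3):
    """Run `handler` on each item, retrying failures; return (processed, dead_letters)."""
    processed, dead_letters = [], []
    for item in items:
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(item))
                break
            except Exception as exc:
                if attempt == max_attempts:
                    # Out of attempts: park the item for later inspection.
                    dead_letters.append(
                        {"item": item, "error": str(exc), "attempts": attempt}
                    )
    return processed, dead_letters

# Simulated transformation: "flaky" fails once, "bad" always fails.
attempts_seen = {}

def flaky_transform(item):
    attempts_seen[item] = attempts_seen.get(item, 0) + 1
    if item == "bad":
        raise ValueError("unparseable record")
    if item == "flaky" and attempts_seen[item] < 2:
        raise TimeoutError("transient failure")
    return item.upper()

processed, dead_letters = process_with_retry(["ok", "flaky", "bad"], flaky_transform)
```

The transient failure is absorbed by the retry, while the permanently bad record ends up in the dead-letter list instead of blocking the rest of the batch.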

Query Performance: Optimizing Data Retrieval

As systems scale, query performance becomes crucial to the success of both OLTP and OLAP systems. Slow queries can drastically affect user experience and decision-making speed. Below are strategies to optimize query performance.

- Optimization Techniques:

- Partitioning: Breaking large datasets into smaller, manageable chunks allows queries to run faster. In OLAP systems, this technique is essential when dealing with time-series data or large data warehouses.

- Sharding: For OLTP systems handling high transaction volumes, sharding (splitting data across multiple machines) can distribute the load evenly, reducing response times.

- Indexing Strategies:

- Indexing speeds up query execution by allowing faster data lookups. For OLTP systems, B-tree indexes are common for transactional queries, while bitmap indexes suit OLAP workloads that filter on low-cardinality columns (such as region or status) across large tables.

- Columnar storage and columnstore indexes in OLAP are particularly useful for analytical queries, where a few columns need to be aggregated over large volumes of rows.

- Caching Mechanisms:

- In-memory caching (using tools like Redis or Memcached) is critical for real-time applications and reporting systems. Frequently queried data, such as product details or user preferences, can be stored in memory, ensuring lightning-fast responses.

- Query result caching in OLAP systems can also significantly improve performance, particularly when dealing with complex aggregation queries.

Table: Common Query Performance Optimization Techniques

| Technique | Description | Best Use Case |
|---|---|---|
| Partitioning | Splitting large datasets into smaller parts for faster access. | OLAP systems dealing with massive datasets like time-series data. |
| Sharding | Distributing data across multiple servers to improve scalability and speed. | High-volume OLTP systems like e-commerce. |
| Indexing | Creating data indexes for faster retrieval. | OLTP systems and OLAP systems with large data warehouses. |
| Caching | Storing frequently accessed data in memory. | Real-time applications and frequent queries in OLAP. |
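
As a rough illustration of the first two rows, the sketch below routes a key to a shard with a stable hash and derives a monthly partition name from a date. Both function names, the `orders_YYYY_MM` naming scheme, and the shard count are assumptions made for the example.

```python
import hashlib


def shard_for(key, num_shards):
    """Map a key to a shard index using a stable hash."""
    # Python's built-in hash() is randomized per process for strings,
    # so a digest is used to keep routing stable across restarts.
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition_for(order_date):
    """Name the monthly partition for an ISO date string like '2024-03-15'."""
    return f"orders_{order_date[:4]}_{order_date[5:7]}"


shard = shard_for("customer-1001", num_shards=4)
partition = partition_for("2024-03-15")  # 'orders_2024_03'
```

One caveat with modulo-based routing: changing the number of shards remaps most keys, which is why consistent hashing is a common alternative when shards are added often.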

ETL Automation: Streamlining Data Pipelines

Automating ETL pipelines is essential for ensuring that data flows seamlessly from source to target without manual intervention. Here are key best practices for automating ETL processes:

- Pipeline Automation:

- Tools like AWS Glue, Azure Data Factory, and Google Cloud Dataflow automate the extraction, transformation, and loading of data, allowing data engineers to focus on business logic rather than manual data movement.

- Automated scheduling ensures that data is processed in real-time or batch mode, depending on the use case. For instance, a pipeline might run a daily refresh cycle for analytics or near-real-time updates for financial data processing.

- Error Handling:

- Implement retry logic and dead-letter queues for failed tasks in the ETL pipeline. This ensures that if a task fails, it can be retried or flagged for manual inspection.

- Centralized logging systems (e.g., AWS CloudWatch, Azure Monitor) can help track failed ETL jobs, making it easier to pinpoint errors and resolve them swiftly.

- Monitoring Systems:

- Integrate real-time monitoring for ETL processes. Tools like Google Cloud Monitoring (formerly Stackdriver) or Amazon CloudWatch provide insights into the health of data pipelines, alerting teams to failures before they impact downstream applications.

- Using data lineage tools ensures that data transformations are traceable, making it easier to audit and debug the pipeline when things go wrong.
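
The retry and dead-letter pattern from the error-handling points above can be sketched in a few lines. This is an in-memory illustration only: `run_with_retry` and `parse_amount` are hypothetical names, and a managed pipeline would normally push failed records to a real queue rather than a Python list.

```python
import time


def run_with_retry(task, records, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Apply `task` to each record; park records that keep failing."""
    processed, dead_letters = [], []
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(task(record))
                break
            except Exception as exc:  # broad on purpose for this sketch
                if attempt == max_attempts:
                    # Out of attempts: keep the record and the reason for review.
                    dead_letters.append({"record": record, "error": repr(exc)})
                else:
                    # Exponential backoff: base_delay, then 2x, 4x, ...
                    sleep(base_delay * 2 ** (attempt - 1))
    return processed, dead_letters


def parse_amount(row):
    # Hypothetical transformation step that fails on malformed input.
    return float(row["amount"])


rows = [{"amount": "9.99"}, {"amount": "n/a"}]
ok, failed = run_with_retry(parse_amount, rows, sleep=lambda seconds: None)
```

Here `ok` holds the parsed value and `failed` holds the malformed row along with its error, so nothing is silently dropped.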

10. Real-World Use Cases

Let's explore some real-world scenarios where OLTP, OLAP, and ETL systems are leveraged to drive business outcomes across different industries.

E-commerce Implementation

E-commerce platforms are high-transaction environments that rely heavily on OLTP for processing real-time purchases while using OLAP for customer insights and sales analytics.

- Transaction Processing:

- OLTP systems manage fast and frequent transactions, such as order placements, inventory updates, and payment processing. Databases like MySQL or PostgreSQL are commonly used for their speed and reliability in transactional operations.

- Customer Analytics:

- OLAP systems enable businesses to gain valuable insights into customer behavior, such as which products are most popular or which customers are likely to make repeat purchases. Tools like Google BigQuery or Amazon Redshift are used to aggregate data from OLTP systems and provide detailed insights into sales trends.

- Inventory Management:

- Real-time inventory management ensures that stock levels are updated instantaneously when products are purchased. OLTP databases are paired with OLAP systems to track inventory patterns and predict demand spikes.
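
A toy version of this flow, assuming two in-memory SQLite databases in place of a real OLTP store and warehouse, might look like the following. The `orders` and `daily_sales` tables and the sample rows are invented for the example.

```python
import sqlite3
from collections import defaultdict


def extract(oltp):
    """Read raw order lines from the transactional store."""
    return oltp.execute(
        "SELECT product_id, quantity, unit_price_cents, ordered_at FROM orders"
    ).fetchall()


def transform(rows):
    """Roll order lines up to one row per (day, product)."""
    totals = defaultdict(lambda: [0, 0])  # (day, product) -> [units, revenue]
    for product_id, quantity, unit_price_cents, ordered_at in rows:
        day = ordered_at[:10]  # 'YYYY-MM-DD' prefix of an ISO timestamp
        totals[(day, product_id)][0] += quantity
        totals[(day, product_id)][1] += quantity * unit_price_cents
    return [(day, pid, units, revenue)
            for (day, pid), (units, revenue) in sorted(totals.items())]


def load(olap, facts):
    """Upsert the aggregates so that re-running the job is safe."""
    with olap:
        olap.executemany(
            "INSERT OR REPLACE INTO daily_sales VALUES (?, ?, ?, ?)", facts
        )


oltp = sqlite3.connect(":memory:")
oltp.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, product_id TEXT, "
    "quantity INTEGER, unit_price_cents INTEGER, ordered_at TEXT)"
)
oltp.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?, ?)",
    [
        (1, "A", 2, 500, "2024-03-01T09:15:00"),
        (2, "A", 1, 500, "2024-03-01T17:40:00"),
        (3, "B", 3, 1200, "2024-03-02T11:05:00"),
    ],
)

olap = sqlite3.connect(":memory:")
olap.execute(
    "CREATE TABLE daily_sales (day TEXT, product_id TEXT, units INTEGER, "
    "revenue_cents INTEGER, PRIMARY KEY (day, product_id))"
)

load(olap, transform(extract(oltp)))
```

Keying the target table on `(day, product_id)` makes the load idempotent, so a retried job overwrites its earlier output instead of double counting.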

Financial Services

The financial sector, dealing with high volumes of sensitive data, requires OLTP for transaction processing, OLAP for risk analysis, and ETL for data integration and reporting.

- Real-Time Trading Systems:

- OLTP systems process large volumes of trades, stock prices, and transaction data in real time. PostgreSQL and Oracle Database are commonly used to handle transactional workloads.

- Risk Analysis:

- OLAP systems analyze historical data to identify potential financial risks. These systems use large datasets to build predictive models, leveraging tools like Azure Synapse Analytics for deep data analysis.

- Regulatory Reporting:

- OLAP systems, coupled with ETL tools, ensure accurate and timely reporting for compliance with financial regulations (e.g., SEC reporting rules, MiFID II). AWS Glue is often used to orchestrate the transformation and loading of data into regulatory reporting systems.

Healthcare Data Management

In healthcare, OLTP systems store critical patient data, OLAP systems provide insights into patient outcomes, and ETL systems ensure that data is compliant with regulations.

- Patient Data Integration:

- OLTP systems manage patient records, medical histories, and appointments. SQL Server and MySQL are often used for this purpose because of their robustness in handling sensitive data.

- Clinical Analytics:

- OLAP systems aggregate patient data to improve clinical outcomes by analyzing trends in patient care. Systems like Google BigQuery are ideal for storing and analyzing large volumes of healthcare data.

- Compliance Requirements:

- ETL tools ensure that data is transformed and loaded in compliance with healthcare regulations (e.g., HIPAA). Azure Data Factory can help automate the extraction of data from multiple sources and ensure it is properly anonymized and compliant.
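
One transformation step that often appears in such pipelines is replacing direct identifiers before data reaches the analytics side. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library; the field names and the `scrub` helper are hypothetical. Note that this is pseudonymization, and it does not by itself satisfy HIPAA de-identification requirements.

```python
import hashlib
import hmac

# Hypothetical list of fields to drop outright before loading.
DIRECT_IDENTIFIERS = {"name", "phone"}


def pseudonymize(patient_id, secret_key):
    """Replace an identifier with a keyed hash so records can still be joined."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub(record, secret_key):
    """Drop direct identifiers and swap the patient ID for its pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


key = b"example-key-kept-in-a-secrets-manager"  # placeholder, not a real key
row = {"patient_id": "P-001", "name": "Jane Doe", "phone": "555-0100", "diagnosis": "J45"}
safe_row = scrub(row, key)
```

Because the hash is keyed, the same patient maps to the same pseudonym across loads, while someone without the key cannot recompute it from a known ID.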

11. Frequently Asked Questions (FAQ)

Q1. What are the key differences between OLTP and OLAP systems?

Answer: OLTP (Online Transaction Processing) systems are designed to handle real-time transactional data. These systems support frequent, small transactions like placing orders, updating inventories, or processing payments. OLTP databases are highly normalized and optimized for fast read/write operations.

OLAP (Online Analytical Processing) systems, on the other hand, focus on analyzing large volumes of historical data for business intelligence and reporting. These systems are optimized for complex queries, aggregations, and multidimensional analysis, often using a star or snowflake schema for organizing data.
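
The contrast in access patterns can be seen in a tiny star schema. The sketch below uses SQLite purely for convenience (it is not an OLAP engine), and the `fact_sales` and `dim_product` tables are made-up examples.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    sale_date TEXT,
    amount_cents INTEGER
);
INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO fact_sales VALUES
    (1, '2024-01-05', 1500),
    (1, '2024-01-20', 2500),
    (2, '2024-01-11', 6000);
""")

# Point lookup of a single row: the typical OLTP access pattern.
one_sale = db.execute(
    "SELECT amount_cents FROM fact_sales WHERE rowid = ?", (1,)
).fetchone()

# Scan, join to a dimension, and aggregate: the typical OLAP access pattern.
revenue_by_category = db.execute("""
    SELECT p.category, SUM(f.amount_cents)
    FROM fact_sales AS f
    JOIN dim_product AS p USING (product_key)
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
```

The first query touches one row; the second reads the whole fact table, which is why analytical engines favor columnar layouts and pre-aggregation.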

Q2. Why is cloud-based ETL more advantageous than traditional ETL solutions?

Answer: Cloud-based ETL solutions provide several advantages over traditional on-premises systems, including:

- Scalability: Cloud services like AWS Glue or Azure Data Factory allow dynamic scaling to handle increased data volumes.

- Cost efficiency: Pay-per-use models help avoid upfront hardware costs.

- Ease of integration: These solutions integrate seamlessly with other cloud services such as data storage, analytics tools, and machine learning platforms.

- Serverless options: Services like AWS Glue run ETL jobs without server management, and functions such as AWS Lambda can trigger or run lightweight transformation steps.

Q3. What are some common performance challenges in OLAP systems, and how can they be addressed?

Answer: OLAP systems often face performance challenges like:

- Slow query performance: This can be mitigated through the use of indexing, query optimization, and pre-aggregated data.

- High storage costs: OLAP databases tend to store large amounts of historical data, making efficient storage management essential. Partitioning data and using columnar storage can help optimize costs.

- Data freshness: OLAP systems rely on periodic updates, which can lead to stale data. Implementing real-time data streaming or hybrid systems can help achieve near-real-time analytics.

Q4. How do I ensure data integrity and consistency in an OLTP system?

Answer: Maintaining data integrity in OLTP systems requires robust consistency mechanisms:

- ACID compliance: Ensuring that transactions meet the Atomicity, Consistency, Isolation, and Durability properties helps maintain the accuracy and reliability of transactional data.

- Validation procedures: Implementing validation checks during data entry ensures that only correct and accurate data is written to the database.

- Error handling: Proper error handling routines prevent partial or corrupted transactions, ensuring system reliability.
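
For the validation point, a simple application-level check might look like this. The `validate_order` function and its field names are hypothetical, and in practice such checks complement database constraints rather than replace them.

```python
def validate_order(order):
    """Return a list of problems; an empty list means the order can be written."""
    errors = []
    customer_id = order.get("customer_id")
    if not isinstance(customer_id, str) or not customer_id.strip():
        errors.append("customer_id is required")
    quantity = order.get("quantity")
    if not isinstance(quantity, int) or quantity <= 0:
        errors.append("quantity must be a positive integer")
    price = order.get("unit_price_cents")
    if not isinstance(price, int) or price < 0:
        errors.append("unit_price_cents must be a non-negative integer")
    return errors


good = {"customer_id": "c-17", "quantity": 2, "unit_price_cents": 1999}
bad = {"customer_id": "", "quantity": 0, "unit_price_cents": 1999}

good_errors = validate_order(good)  # no problems found
bad_errors = validate_order(bad)    # two problems reported
```

Returning every problem at once, rather than stopping at the first, lets the caller report them all to the user in one round trip.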

Q5. What are the best practices for automating ETL pipelines in the cloud?

Answer: Key practices include:

- Modular design: Break down ETL processes into smaller, reusable components to increase maintainability.

- Error handling: Implement robust error-catching mechanisms with automated retries and alerting to minimize manual intervention.

- Monitoring: Utilize monitoring tools (e.g., AWS CloudWatch, Azure Monitor) to ensure the ETL pipelines run smoothly and scale according to demand.

- Version control: Version control your ETL code (e.g., using Git) to maintain traceability and enable easier updates.
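
Modular design is easiest to see in code. In this sketch each step is a small function, and a hypothetical `pipeline` helper chains them; the step names and the customer records are invented for illustration.

```python
from functools import reduce


def pipeline(*steps):
    """Compose single-purpose steps into one callable, applied left to right."""
    return lambda rows: reduce(lambda acc, step: step(acc), steps, rows)


def drop_missing_email(rows):
    return [r for r in rows if r.get("email")]


def normalize_email(rows):
    return [{**r, "email": r["email"].strip().lower()} for r in rows]


def dedupe_by_email(rows):
    seen, unique = set(), []
    for r in rows:
        if r["email"] not in seen:
            seen.add(r["email"])
            unique.append(r)
    return unique


clean_customers = pipeline(drop_missing_email, normalize_email, dedupe_by_email)

raw = [{"email": " A@x.com "}, {"email": "a@x.com"}, {"email": None}, {"name": "n"}]
cleaned = clean_customers(raw)
```

Because each step takes and returns a plain list of rows, steps can be unit tested on their own and reordered or reused across pipelines.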

Q6. How do cloud-based data systems handle large-scale data ingestion?

Answer: Cloud platforms such as AWS, Azure, and Google Cloud offer tools like Amazon Kinesis, Azure Stream Analytics, and Google Cloud Pub/Sub to facilitate the real-time ingestion and processing of large datasets. These tools enable high-throughput data streaming and can integrate with OLTP and OLAP systems to provide seamless data flow for real-time analytics.

Q7. What is the role of machine learning (ML) in modern ETL pipelines?

Answer: Machine learning is increasingly integrated into ETL processes in several ways:

- Data cleansing: ML models can automatically detect anomalies and outliers, improving data quality during the extraction and transformation phases.

- Predictive analytics: ML models can be used within the transformation phase to derive insights from historical data, enhancing decision-making.

- Automated transformation: ML algorithms can automate complex data transformations, making ETL processes more efficient.
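
As a simple stand-in for the anomaly detection mentioned under data cleansing, the sketch below flags outliers with a robust statistical rule (the modified z-score based on the median absolute deviation) rather than a trained ML model. The function name, the 3.5 cutoff, and the sample values are assumptions for the example.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return indexes of values whose modified z-score exceeds `threshold`."""
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


order_totals = [10, 12, 11, 13, 12, 11, 500]
suspicious = flag_outliers(order_totals)  # index of the 500 entry
```

A rule like this is a reasonable baseline during the transformation phase; a learned model becomes worthwhile when normal behavior varies by season, customer segment, or other context that a single threshold cannot capture.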

Q8. What factors should be considered when choosing between OLTP and OLAP for a project?

Answer: Consider the following factors:

- Workload type: OLTP is ideal for systems requiring real-time transaction processing, while OLAP is suited for heavy data analysis and reporting.

- Data volume: OLTP handles smaller, more frequent transactions, while OLAP works with large datasets for deeper analytical insights.

- Query complexity: OLTP queries tend to be simple, focusing on quick updates, whereas OLAP queries are more complex and require aggregations and joins.