Overview and Importance of Caching in Modern Applications

In the modern world of web applications, speed and scalability are crucial factors for success. Whether it's a simple e-commerce platform or a complex social media site, delivering a fast and responsive user experience is key. This is where caching plays an essential role.

Caching is a technique where frequently accessed data is stored in temporary storage, making it quicker to retrieve than fetching from the original source. It reduces the load on databases, improves application speed, and enhances overall system performance. For example, caching the results of a heavy database query means that subsequent requests can fetch the cached result, cutting down response times significantly.

Without caching, applications would need to repeatedly query databases or external APIs for the same data, creating bottlenecks, higher latency, and degraded user experience.

Why Choose a Managed Caching Solution?

When you’re building large-scale applications, managing your caching infrastructure manually can be cumbersome and resource-intensive. This is where a managed caching solution like Amazon ElastiCache becomes invaluable. Rather than spending time and resources on infrastructure maintenance, scaling, and patching, Amazon ElastiCache provides a fully managed service for in-memory caching.

Some key advantages of using a managed caching service include:

- Automatic Scaling: ElastiCache can scale your cache clusters to match your application's demand, including through configurable auto scaling policies.

- Managed Backups: Automatic backups and snapshots (for Redis) ensure your cache data can be recovered.

- Ease of Use: AWS handles the complexity of maintaining the cache, leaving you free to focus on building your application.

With ElastiCache, you can leverage powerful in-memory caching engines, like Redis and Memcached, while AWS takes care of availability, failover, and security.

What is Amazon ElastiCache?

Core Features and Benefits

Amazon ElastiCache is a fully managed service that simplifies the deployment, management, and scaling of an in-memory cache in the cloud. It enables faster application performance by reducing the need to access slower disk-based storage or external services, providing low-latency data access.

Here are the core features and benefits of using Amazon ElastiCache:

- Fully Managed: AWS takes care of provisioning, patching, scaling, and monitoring.

- Performance and Low Latency: ElastiCache operates in-memory, making data retrieval extremely fast compared to traditional databases.

- Scalability: ElastiCache clusters can scale horizontally, supporting varying levels of demand.

- High Availability: Multi-AZ deployment options provide fault tolerance and improve the resilience of the cache.

- Security: Built-in encryption protects data both in transit and at rest.

- Cost-Efficiency: By caching frequently accessed data, ElastiCache reduces the load on databases, improving overall cost-effectiveness.

Overview of Supported Caching Engines (Redis vs. Memcached)

Amazon ElastiCache supports two of the most popular open-source in-memory caching engines: Redis and Memcached. While both serve similar purposes, they have different features that make them suitable for different use cases.

Redis: More feature-rich, supporting a variety of complex data types and offering persistence and clustering. It’s ideal for use cases that go beyond simple caching, such as real-time analytics or pub/sub messaging.

Memcached: Optimized for simple key-value caching, and well suited to lightweight caching needs where high throughput matters more than advanced data types.

How Does Amazon ElastiCache Work?

Architectural Overview

The architecture of Amazon ElastiCache is designed to be highly available, scalable, and performant. The system is built on a cluster model, where you can deploy one or more nodes that store cached data in memory.

Key Components of ElastiCache Architecture:

- Cache Nodes: These are the individual servers that store cached data. Nodes can be added or removed as needed, making the system highly scalable.

- Cache Cluster: A collection of cache nodes that work together to provide a unified caching layer.

- Primary and Replica Nodes: In a Redis setup, there is a primary node that handles read and write operations and one or more replica nodes that replicate the data for read scalability and high availability.

- Endpoint: Clients interact with ElastiCache via an endpoint that points to a specific cache cluster.

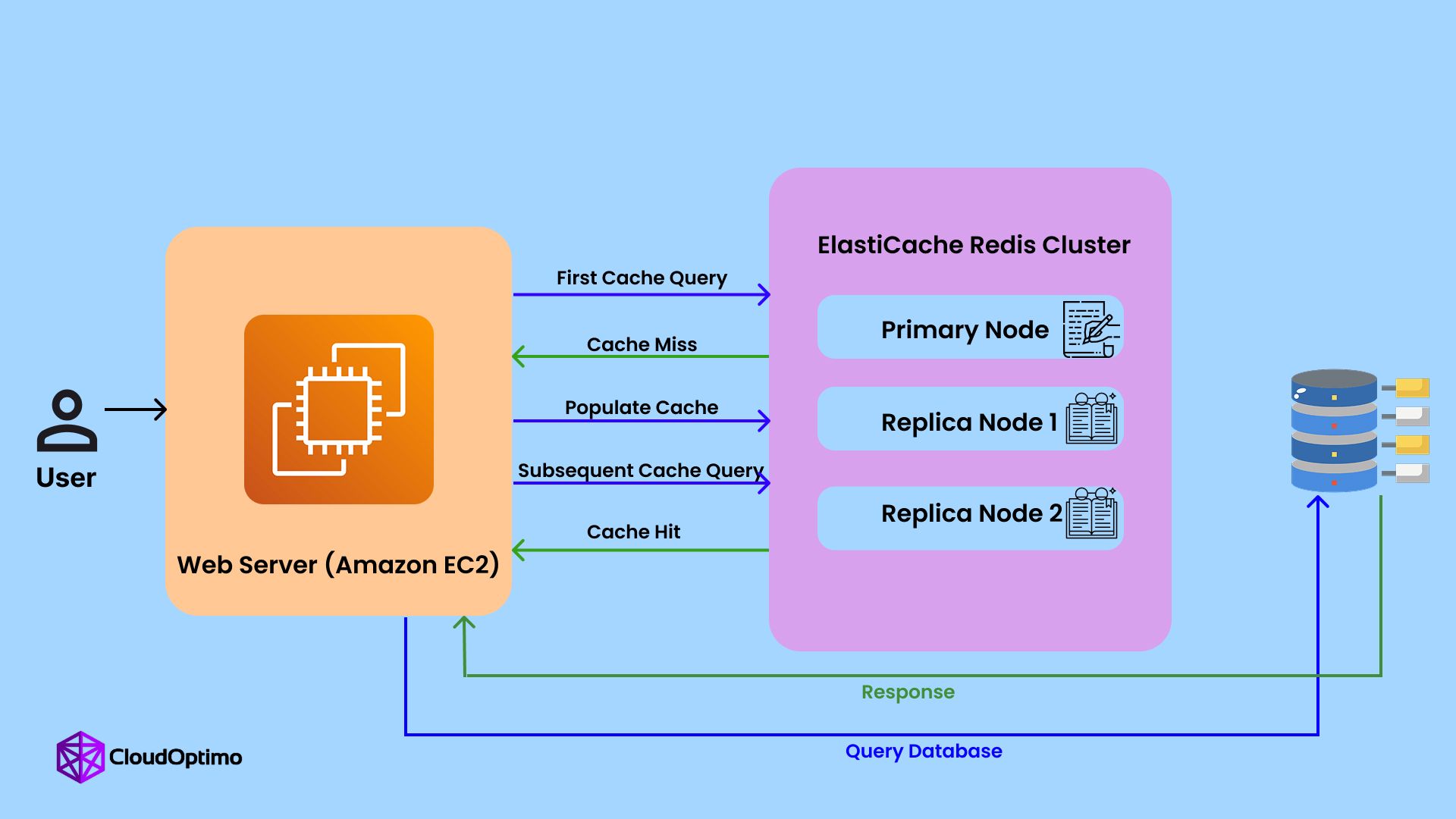

Here’s a high-level diagram of the typical architecture of an Amazon ElastiCache Redis setup:

In this architecture:

- The application server sends requests to the ElastiCache Redis Cluster.

- A cache miss occurs when the requested data is not found in the cache, prompting the application to fetch it from the database.

- A cache hit happens when the requested data is already in the cache, and the data is served directly from ElastiCache, reducing response time and database load.

Data Flow and Cache Miss/Hit Concept

When an application requests data, it first checks ElastiCache to see whether the data is already cached. If it is, the data is served from memory; this is called a cache hit. Cache hits result in low latency and improved performance, since reading from memory is much faster than querying a database.

However, if the requested data is not found in the cache, the result is a cache miss. In this case, the application retrieves the data from the original source (e.g., a relational database or an API), stores it in the cache, and returns the result. ElastiCache does not fetch missing data from your backend on its own; populating the cache on a miss is the application's job. Future requests for the same data are then served from the cache, reducing load on the backend systems and improving performance.

Example of Cache Miss and Cache Hit in Code:

```python
import redis

# Connect to the Redis instance; decode_responses=True returns str instead of bytes,
# so a cache hit and a cache miss return the same type
r = redis.StrictRedis(host='your-redis-endpoint', port=6379, db=0, decode_responses=True)

def fetch_data_from_cache(key):
    # Try to get data from the Redis cache
    data = r.get(key)
    if data is not None:
        print("Cache Hit: Returning data from cache")
        return data
    print("Cache Miss: Fetching data from source")
    data = fetch_data_from_source(key)  # Function to fetch from DB
    r.set(key, data)  # Store data in cache for future use
    return data

def fetch_data_from_source(key):
    # Simulate fetching data from a database or external service
    return f"Data for {key}"
```

In this code:

- The function fetch_data_from_cache first checks the cache for the requested data. If the data is not found (a cache miss), it retrieves the data from the source (e.g., a database), stores it in Redis, and returns it. On subsequent calls, it serves the data directly from the cache (cache hit).

Integrating with AWS Services (EC2, Lambda, RDS, etc.)

One of the major advantages of using Amazon ElastiCache is its seamless integration with other AWS services. ElastiCache is optimized for use with applications running on Amazon EC2, as well as with Amazon RDS for database-driven applications.

Example Integration with EC2:

Applications hosted on EC2 instances can easily communicate with ElastiCache to fetch or store data. Here’s how:

- EC2 instances make requests to ElastiCache, reducing the load on RDS or other databases.

- ElastiCache provides faster access to commonly accessed data.

Example Integration with AWS Lambda:

For serverless applications, AWS Lambda can interact with ElastiCache to retrieve cached data or store the results of computations in the cache; the function must be configured with access to the cluster's VPC to reach it. This is especially useful for stateless, event-driven applications, where caching frequently accessed data cuts repeated calls to databases and downstream services, lowering cost and improving speed.

Why Use Amazon ElastiCache?

Performance Benefits: Speed, Low Latency

Caching accelerates data retrieval times by storing frequently requested data in memory, allowing applications to access it much faster than querying databases or other slower data sources.

Amazon ElastiCache dramatically reduces the time it takes to serve requests, making your application highly responsive. By utilizing in-memory data stores like Redis and Memcached, it allows for microsecond-level access to frequently accessed data. This is ideal for workloads such as session storage, leaderboard management, or real-time data processing.

For instance, with Redis the data is held in memory and a lookup typically completes in well under a millisecond, whereas a query against a traditional disk-based database can take tens or hundreds of milliseconds, especially for complex queries.

Code Example for Redis Performance:

Here’s how Redis improves performance when storing session data for a web application:

```python
import redis

# Connect to Redis
r = redis.StrictRedis(host='your-redis-endpoint', port=6379, db=0)

# Store user session data with a timeout
def store_session(user_id, session_data):
    r.setex(f"session:{user_id}", 3600, session_data)  # Expires in 1 hour

# Retrieve session data
def get_session(user_id):
    session_data = r.get(f"session:{user_id}")
    if session_data:
        return session_data
    return None  # Cache miss
```

In this case, fetching user session data from Redis is extremely fast, significantly reducing response time compared to a traditional database lookup.

Scalability and Flexibility

Amazon ElastiCache enables horizontal scaling, allowing you to add more cache nodes as your application grows. With ElastiCache, you can easily scale your cache cluster by adding new nodes or partitions, ensuring that your caching layer can handle ever-growing traffic demands without performance degradation.

ElastiCache supports both Redis clustering and Memcached sharding, enabling you to distribute data across multiple nodes and improve cache performance as your application grows. Redis clustering, in particular, allows data to be partitioned across multiple shards, increasing the capacity and availability of your cache.

ElastiCache helps you keep up with the growing demand of your applications by:

- Adding or removing nodes as needed.

- Handling peak loads with minimal intervention.

- Balancing workloads across multiple cache nodes for optimal performance.

Example of Scaling:

For example, if your web application traffic doubles, you can scale your Redis cluster by adding nodes to meet the demand. With cluster mode enabled, ElastiCache supports online resharding, so shards can be added while the cluster keeps serving requests, which helps ensure a smooth user experience.
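Sizing a scale-out can start with simple arithmetic before calling the API. As a sketch, assuming Redis with cluster mode enabled, the first function estimates how many shards are needed to hold a dataset plus headroom. The dataset size, usable memory per shard, and 25% headroom are hypothetical inputs that you would take from your node type and CloudWatch metrics. The second function builds keyword arguments for boto3's `modify_replication_group_shard_configuration`, the API that performs online resharding.

```python
import math

def shards_needed(dataset_gb, usable_gb_per_shard, headroom=0.25):
    """Smallest shard count whose combined memory holds the dataset plus headroom."""
    return max(1, math.ceil(dataset_gb * (1 + headroom) / usable_gb_per_shard))

def reshard_request(replication_group_id, target_shards):
    """Keyword arguments for boto3's ElastiCache modify_replication_group_shard_configuration."""
    return {
        "ReplicationGroupId": replication_group_id,
        "NodeGroupCount": target_shards,
        "ApplyImmediately": True,  # this API only accepts True
    }
```

With AWS credentials configured, `boto3.client("elasticache").modify_replication_group_shard_configuration(**reshard_request("my-cache", shards_needed(40, 12)))` would request five shards. Scaling in also requires naming the shards to remove or retain (`NodeGroupsToRemove` or `NodeGroupsToRetain`).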

Cost Efficiency and Managed Services

While caching might seem like an additional cost, in reality, it can save your organization a significant amount in infrastructure costs. ElastiCache helps reduce the load on databases, which can otherwise become the bottleneck of your application. With a reduced database load, you can use smaller, less expensive database instances and still meet your performance requirements.

Managed Services mean that Amazon handles the complexity of infrastructure management, such as patching, upgrades, backups, and scaling. You don’t need to invest in costly personnel or infrastructure to manage caching systems, saving both time and money.

With pay-as-you-go pricing, you only pay for the cache nodes you use. This pricing model makes it easy to match your caching needs to your budget.

High Availability, Fault Tolerance, and Security

Amazon ElastiCache offers high availability by deploying clusters across multiple availability zones (AZs). With Redis replication, you can have a primary node that performs both read and write operations, and one or more replica nodes that can serve read requests. If the primary node fails, one of the replicas automatically becomes the new primary, ensuring your application remains available.

- Automatic failover ensures that your application continues running smoothly even in the event of a failure.

- Data replication improves fault tolerance by storing copies of your cache data across multiple AZs.
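When the primary fails, ElastiCache promotes a replica and repoints the primary endpoint's DNS name to it, so clients can see connection errors for a short time. Below is a minimal, library-agnostic sketch of client-side retries with capped exponential backoff and jitter. It assumes the wrapped operation is safe to repeat; the delays and attempt count are hypothetical defaults.

```python
import random
import time

def with_retries(operation, attempts=5, base_delay=0.05, max_delay=1.0,
                 retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Run operation(), retrying transient errors with capped exponential backoff."""
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Full jitter: wait a random time up to the capped exponential delay
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, delay))
```

With redis-py, pass its own exception types, which do not inherit from Python's built-in `ConnectionError` and `TimeoutError`. For example: `with_retries(lambda: r.get("key"), retry_on=(redis.exceptions.ConnectionError, redis.exceptions.TimeoutError))`. Retry only idempotent operations such as reads.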

Additionally, security is tightly integrated with AWS services. ElastiCache supports encryption at rest and encryption in transit (TLS), and you can control access using AWS Identity and Access Management (IAM) roles and policies.

Amazon ElastiCache vs. Other Caching Solutions

ElastiCache vs. Local Caching

While caching data locally on application servers (i.e., local caching) can provide a performance boost, it does have limitations. Local caching stores data in the memory of the application server itself, which can lead to issues when multiple application instances are running or when servers are scaled up or down.

Here’s a quick comparison of local caching vs. ElastiCache:

- Local Caching: Data is stored in-memory on the application server, which means it’s limited to the server’s memory. This makes scaling and managing large applications more complex.

- ElastiCache: ElastiCache, being a managed, distributed caching service, allows for scaling across multiple nodes and servers. It provides data replication, automatic failover, and easy integration with other AWS services.

Local caching might suffice for small applications, but for large-scale, distributed systems, ElastiCache provides better reliability, flexibility, and performance.

Redis vs. Memcached: A Detailed Comparison

When you choose Amazon ElastiCache, you must decide between Redis and Memcached as your caching engine. Let’s dive deeper into the differences between them:

| Feature | Redis | Memcached |
| --- | --- | --- |
| Data Structure Support | Strings, hashes, sets, lists, sorted sets, and more | Simple key-value store |
| Persistence | Persistent (snapshots and backups; open-source Redis also offers AOF) | No persistence |
| Replication | Built-in replication and automatic failover | No replication |
| Clustering | Redis Cluster (sharding) | Data partitioned across nodes by the client; no built-in clustering |
| Use Cases | Real-time analytics, pub/sub, queues | Simple caching, session storage |

- Redis is more feature-rich and supports a variety of advanced data types and persistence, making it suitable for complex use cases.

- Memcached is simpler, offering high performance for use cases that need fast, simple key-value storage.

Redis is generally recommended for most use cases, especially when you need data persistence or advanced data structures. Memcached, however, might be preferable when your caching needs are straightforward.

ElastiCache vs. Other AWS Solutions (e.g., DAX)

Amazon DynamoDB Accelerator (DAX) is another caching solution provided by AWS, specifically designed for DynamoDB. While ElastiCache is a more general-purpose caching solution, DAX is tightly integrated with DynamoDB to accelerate read performance for workloads dependent on DynamoDB.

Here’s a comparison between ElastiCache and DAX:

- ElastiCache: Supports both Redis and Memcached and is more versatile, as it can be used with any application requiring a caching layer.

- DAX: Optimized specifically for DynamoDB and doesn’t offer the flexibility or engine choices that ElastiCache provides. It’s a specialized service for DynamoDB use cases.

Key Features of Amazon ElastiCache

High Availability and Multi-AZ Deployment

ElastiCache ensures your cache layer is resilient and available by supporting Multi-AZ deployment. This feature allows you to replicate your Redis data across multiple availability zones, ensuring that even in the event of a zone failure, your application can continue to function normally.

For example, in Redis, you can have a primary node in one AZ and replica nodes in others. If the primary node fails, one of the replicas is promoted to primary, and the system continues running after only a brief interruption while the failover completes.

Automated Backups and Snapshots

Amazon ElastiCache also offers automatic backups and snapshots for Redis, ensuring that your data is safely stored and recoverable. You can configure a backup schedule and retention period for your Redis clusters, and ElastiCache will create backups without requiring manual intervention.

Snapshots are particularly useful for disaster recovery or maintaining data consistency during large updates or maintenance activities.

Security and Encryption (at Rest and in Transit)

Security is a top priority when using cloud services, and Amazon ElastiCache ensures that your cached data is protected using multiple layers of security:

- Encryption at rest protects your cached data when stored on disk.

- Encryption in transit ensures that data traveling between your application and ElastiCache is secure, using TLS/SSL protocols.

- VPC Integration allows you to place your cache clusters in a private virtual network, enhancing security further.

ElastiCache also integrates with AWS Identity and Access Management (IAM), allowing you to enforce access controls and limit who can access your cache.

Monitoring and Logging Integration

ElastiCache integrates seamlessly with Amazon CloudWatch, enabling you to monitor the health and performance of your cache clusters in real time. You can track key metrics such as cache hits, cache misses, memory usage, and more.

Additionally, ElastiCache for Redis can deliver the Redis slow log and engine log to CloudWatch Logs, allowing deeper analysis and troubleshooting.
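CloudWatch reports hits and misses as separate metrics (for Redis, `CacheHits` and `CacheMisses`), so the hit rate is usually derived from them. Below is a small sketch of that arithmetic. The inputs are assumed to be the metric sums over the same period, and the 80% threshold is a hypothetical target, not an AWS recommendation.

```python
def hit_rate(hits, misses):
    """Fraction of lookups served from the cache, or None if there were no lookups."""
    total = hits + misses
    if total == 0:
        return None
    return hits / total

def hit_rate_too_low(hits, misses, threshold=0.8):
    """True when the hit rate falls below the (hypothetical) alerting threshold."""
    rate = hit_rate(hits, misses)
    return rate is not None and rate < threshold
```

A persistently low hit rate usually points to TTLs that are too short, keys that are rarely reused, or memory pressure causing evictions.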

Use Cases of Amazon ElastiCache

Web and Mobile Application Caching

Caching is critical for web and mobile applications, where users demand fast, responsive experiences. By reducing the time spent on retrieving data from databases, Amazon ElastiCache improves the responsiveness of your application, thus enhancing the overall user experience.

Example Use Case: A popular e-commerce website could use ElastiCache to cache product details, search results, and pricing information. Instead of querying the backend database every time a user requests product data, the application fetches it from the cache. This reduces the load on the database and speeds up response times for users.

Benefits:

- Faster Load Times: By caching frequently accessed data like homepage content, product listings, or social media feeds, you reduce the time needed to load content.

- Reduced Database Load: Caching helps reduce the number of queries hitting your database, ensuring better performance and scalability.

For mobile applications, caching is especially useful when the backend must serve large amounts of data through an API. With Redis or Memcached in front of the backend's data sources, repeated requests can be answered much faster. ElastiCache runs on the server side, so offline access still requires storing data on the device itself.

Code Example: Caching Web Data Using Redis (Python)

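```python
import json

import redis

# Connect to Redis; decode_responses=True returns str instead of bytes
cache = redis.StrictRedis(host='your-redis-endpoint', port=6379, db=0, decode_responses=True)

def fetch_from_database(product_id):
    # Placeholder for the real database query
    return {"id": product_id, "name": f"Product {product_id}", "price": 9.99}

# Fetch product details, first checking if data exists in cache
def get_product(product_id):
    key = f"product:{product_id}"
    cached_product = cache.get(key)
    if cached_product is not None:
        return json.loads(cached_product)  # Cache hit
    # Cache miss: fetch from the database
    product = fetch_from_database(product_id)
    cache.setex(key, 3600, json.dumps(product))  # Store in cache for 1 hour
    return product
```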

Real-time Analytics and Leaderboards

ElastiCache excels in use cases requiring real-time data access, such as real-time analytics and leaderboards. When handling large amounts of real-time data, such as tracking user scores in a game or monitoring social media engagement, caching ensures that data is accessible with low latency.

- Redis is commonly used in scenarios like leaderboards for games, where scores are updated and displayed in real-time. By storing the leaderboard in Redis sorted sets, the system can quickly update and rank user scores.

Example Use Case: A mobile gaming application could leverage Redis to track and update players' scores. Each player is stored as a member of a single sorted set, with their score as the sort value, allowing efficient ranking and real-time leaderboard updates.

Code Example: Leaderboard with Redis Sorted Sets (Python)

```python
import redis

# Connect to Redis
cache = redis.StrictRedis(host='your-redis-endpoint', port=6379, db=0)

# Update player score in leaderboard
def update_score(player_id, score):
    cache.zadd("leaderboard", {player_id: score})  # Adds player score to sorted set

# Get top 10 players from the leaderboard
def get_top_players():
    return cache.zrevrange("leaderboard", 0, 9)  # Top 10 players, ranked highest to lowest
```

Session Store and User State Management

Session management is another critical use case where Amazon ElastiCache shines. Redis is particularly effective in this regard, offering quick access to session data, such as user authentication states, preferences, or shopping cart contents.

- Web applications can use ElastiCache to store session data, reducing the time taken to re-authenticate users and ensuring consistent user experiences across multiple requests.

Example Use Case: An online store may use Redis to store a user's session, such as login state and cart contents. When a user navigates between pages, Redis quickly provides session information, minimizing the need for repeated authentication calls.

Code Example: Session Management with Redis (Python)

```python
import redis

# Connect to Redis
session_cache = redis.StrictRedis(host='your-redis-endpoint', port=6379, db=0)

# Store session data
def store_user_session(user_id, session_data):
    session_cache.setex(f"session:{user_id}", 3600, session_data)  # Session expires in 1 hour

# Retrieve session data
def get_user_session(user_id):
    return session_cache.get(f"session:{user_id}")  # Retrieve session data for user
```
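The example above gives every session a fixed one-hour lifetime. A common variation is a sliding (idle) timeout that resets each time the session is read. Below is a minimal sketch. It assumes a redis-py-style client passed in as `client` and JSON-serializable session data; the 30-minute timeout is a hypothetical value.

```python
import json

SESSION_TTL_SECONDS = 1800  # hypothetical 30-minute idle timeout

def save_session(client, user_id, session):
    # Serialize the session dict and store it with an expiration
    client.setex(f"session:{user_id}", SESSION_TTL_SECONDS, json.dumps(session))

def load_session(client, user_id):
    key = f"session:{user_id}"
    raw = client.get(key)
    if raw is None:
        return None  # expired or never created: the user must sign in again
    # Sliding expiration: each access resets the idle timer
    client.expire(key, SESSION_TTL_SECONDS)
    return json.loads(raw)
```

Call it with the `session_cache` client above, for example `load_session(session_cache, user_id)`. On Redis 6.2 or later, the GETEX command can read a key and reset its expiration in a single round trip.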

Pub/Sub Messaging with Redis

Redis is often used for pub/sub (publish/subscribe) messaging, which enables real-time messaging between different application components. This is particularly useful in event-driven architectures where one component needs to notify others about a specific event (e.g., new user registration, stock updates).

- Redis provides publish and subscribe commands, allowing applications to publish messages to a channel and subscribe to receive updates.

Example Use Case: A live chat application may use Redis Pub/Sub to broadcast messages to all connected users. When one user sends a message, Redis publishes it to a channel, and all subscribed users receive the message instantly.

Code Example: Pub/Sub Messaging with Redis (Python)

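```python
import redis

# Connect to Redis
cache = redis.StrictRedis(host='your-redis-endpoint', port=6379, db=0)

# Publisher (sending a message); returns the number of subscribers that received it
def send_message(channel, message):
    return cache.publish(channel, message)

# Subscriber (receiving messages); blocks and handles messages as they arrive.
# Pub/Sub is fire-and-forget: subscribers that are offline miss the message.
def receive_messages(channel):
    pubsub = cache.pubsub()
    pubsub.subscribe(channel)
    for message in pubsub.listen():
        # listen() also yields subscription confirmations; skip those
        if message['type'] == 'message':
            print(message['data'])  # Print received message
```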

Key Considerations Before Deploying AWS ElastiCache

Before implementing AWS ElastiCache, it's important to assess several factors that can impact performance, cost, and architecture. Here are the key considerations:

Cost Implications

- Pricing Structure: ElastiCache pricing depends on instance type, size, and usage duration. Over-provisioning resources can lead to unnecessary costs. Carefully select instances based on your specific workload requirements to avoid overpaying.

- Reserved vs. On-Demand Nodes: Reserved nodes offer up to 60% savings in exchange for a one- or three-year commitment, while on-demand nodes can be significantly more expensive for predictable workloads. Performing a Total Cost of Ownership (TCO) analysis is essential to choose the most cost-effective option.

- Network Transfer Costs: Data transfer within the same availability zone is free, but transferring data across AZs or regions incurs additional costs. Plan for data transfer charges, especially if your application frequently interacts with ElastiCache across multiple zones or regions.

Performance Considerations

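- Cache Eviction Policies: Redis offers various eviction policies, such as volatile-lru and allkeys-random. Choosing the wrong eviction policy can result in important data being evicted. For instance, in an e-commerce application that caches both product information and session data, the policy decides which of the two is evicted first under memory pressure. With the volatile-* policies, only keys that have a TTL are eligible for eviction, so data stored without a TTL is never evicted (and if no key has a TTL, writes fail once memory is full). Carefully analyze your data access patterns to select the most appropriate policy.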

- Cache Invalidation: Proper cache invalidation is necessary to avoid serving outdated data. Event-based invalidation provides the best consistency but requires a more complex setup. Ensure that your invalidation strategy matches the needs of your application.

- Cold Cache: When ElastiCache is first deployed or after a scaling event, the cache may start empty, causing temporary performance degradation. Implement cache pre-warming strategies or route traffic gradually to avoid overwhelming the database during these periods.
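Below is a minimal sketch of event-based invalidation. It assumes a redis-py-style `client` and uses a plain dict as a hypothetical stand-in for the database. Every write updates the source of truth first and then deletes the cached copy, so the next read reloads fresh data.

```python
import json

def read_product(client, db, product_id, ttl=3600):
    """Cache-aside read: serve from the cache, falling back to the database."""
    key = f"product:{product_id}"
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)
    product = db[product_id]  # hypothetical database lookup
    client.setex(key, ttl, json.dumps(product))
    return product

def update_product(client, db, product_id, changes):
    """Write to the source of truth first, then invalidate the cached copy."""
    db[product_id] = {**db[product_id], **changes}  # hypothetical database write
    client.delete(f"product:{product_id}")  # the next read misses and reloads fresh data
```

Deleting the key, rather than writing the new value into it, keeps the write path simple. The TTL still acts as a safety net if an invalidation is ever missed. A brief window remains between the database write and the delete, during which a reader can still see the old value.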

Architectural Challenges

- Single Point of Failure: Without a Multi-AZ configuration, ElastiCache can become a single point of failure. In the event of an AZ failure, the cache may become unavailable until recovery. Multi-AZ deployments with automatic failover can mitigate this risk by ensuring quick recovery and high availability.

- Network Latency: Placing ElastiCache in a different region or VPC from your application servers can introduce network latency, affecting performance. Minimize latency by ensuring that ElastiCache is deployed in the same region or VPC as your application.

- Connection Management: To avoid exhausting connection limits, implement connection pooling at the application level. Tuning pool sizes, timeouts, and retry settings ensures stable connections and prevents failures during traffic spikes.

Data Management Challenges

- Data Consistency: Ensuring consistency between the cache and the database is critical. Strategies like write-through and write-behind offer different trade-offs in performance and consistency. Choose the best method to avoid serving stale data.

- TTL Management: Setting the right Time-to-Live (TTL) values for cached data is essential for balancing cache hits and data freshness. TTLs that are too short lead to frequent cache misses and higher database load, while TTLs that are too long result in outdated data. Adjust TTL dynamically based on data volatility.

- Large Object Storage: Storing large objects (>100 KB) directly in Redis can cause memory fragmentation and reduce performance. It's better to store large objects in services like Amazon S3 and keep only references or metadata in ElastiCache.
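One simple way to tie TTLs to data volatility is sketched below under stated assumptions. It tracks roughly how often each kind of data changes and expires cached copies after a fraction of that interval, clamped to sensible bounds. The fraction and bounds are hypothetical defaults to tune against your own hit-rate and staleness targets.

```python
def ttl_for(avg_seconds_between_updates, fraction=0.5, min_ttl=30, max_ttl=86_400):
    """Pick a TTL proportional to how often the underlying data changes."""
    ttl = int(avg_seconds_between_updates * fraction)
    # Clamp so very volatile data still gets some caching benefit and
    # very stable data is still refreshed at least once a day
    return max(min_ttl, min(max_ttl, ttl))
```

For example, a price that changes about every ten minutes would be cached for five minutes, while rarely edited product descriptions would hit the one-day ceiling.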

Operational Considerations

- Monitoring and Alerts: Set up CloudWatch alarms to monitor critical metrics such as memory usage, CPU utilization, and cache hit rates. Proactive monitoring helps detect performance degradation before it affects users.

- Scaling Impact: Scaling Redis clusters, either vertically or horizontally, can temporarily impact performance. Use Multi-AZ deployments with automatic failover to minimize downtime during scaling operations.

- Backup Strategies: Implement a solid backup strategy, including automated backups with appropriate retention periods. Regularly test backup restoration processes to ensure data integrity in case of failure.

- Version Upgrades: Major Redis version upgrades can introduce compatibility issues. Test upgrades in a staging environment and ensure a rollback plan is in place to avoid extended downtime in production.

Setting Up Amazon ElastiCache

Setup Guide for Redis and Memcached

Setting up Amazon ElastiCache involves creating a cluster of either Redis or Memcached nodes. Amazon simplifies the process by providing a managed service, meaning that AWS handles administrative tasks like patching, monitoring, and backups.

Here’s a high-level overview of the setup process:

- Create a Cluster: From the AWS Management Console, you can create an ElastiCache cluster by selecting Redis or Memcached. You will be prompted to choose parameters like node type, number of nodes, and security settings.

- Configure Access Control: Use VPC (Virtual Private Cloud) settings to control who can access your ElastiCache cluster. Ensure that you restrict access to trusted application servers and users.

- Set Up Security Groups: Ensure that the ElastiCache security groups allow traffic from your application servers and prevent unauthorized access.

- Monitoring and Backups: Enable CloudWatch metrics to monitor the health and performance of your cache clusters, and set up automatic backups (for Redis) so data can be restored if needed.
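The console steps above map onto parameters of the ElastiCache `CreateReplicationGroup` API. As a sketch, the function below builds keyword arguments for boto3's `create_replication_group` for a Redis replication group with one primary and a configurable number of replicas. The group name, node type, subnet group, and security group ID are hypothetical placeholders, and the subnet group and security group are assumed to exist already.

```python
def replication_group_request(group_id, node_type="cache.t3.micro", replicas=1,
                              subnet_group="my-cache-subnets",
                              security_group_ids=("sg-0123456789abcdef0",)):
    """Keyword arguments for boto3's ElastiCache create_replication_group (Redis)."""
    num_nodes = 1 + replicas  # one primary plus its read replicas
    failover = replicas >= 1  # automatic failover needs at least one replica
    return {
        "ReplicationGroupId": group_id,
        "ReplicationGroupDescription": f"Redis cache for {group_id}",
        "Engine": "redis",
        "CacheNodeType": node_type,
        "NumCacheClusters": num_nodes,
        "AutomaticFailoverEnabled": failover,
        "MultiAZEnabled": failover,
        "CacheSubnetGroupName": subnet_group,  # places nodes in your VPC subnets
        "SecurityGroupIds": list(security_group_ids),  # restricts who can connect
        "AtRestEncryptionEnabled": True,
        "TransitEncryptionEnabled": True,
        "SnapshotRetentionLimit": 7,  # keep automatic daily backups for 7 days
    }
```

With AWS credentials configured, `boto3.client("elasticache").create_replication_group(**replication_group_request("orders-cache"))` would submit the request. Keeping the parameters in a function like this makes the encryption, failover, and backup settings easy to review before anything is created.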

Configuring Caching Best Practices

Once your cluster is set up, configuring caching best practices will ensure that your cache is efficient and scalable. Some tips include:

- Use expiration times to control how long data stays in the cache.

- Enable data persistence (Redis) for critical data that needs recovery.

- Avoid over-caching: Cache only the most frequently accessed data.

Connecting ElastiCache with EC2, Lambda, RDS

To fully integrate ElastiCache into your architecture, you’ll need to connect it to other AWS services like EC2, Lambda, and RDS. Here’s how:

- EC2: Your application running on EC2 instances can interact with ElastiCache by connecting to the cache cluster through the provided endpoint.

- Lambda: Use Lambda functions to process data and interact with ElastiCache to cache the results of operations or API calls.

- RDS: If you’re using RDS for data storage, you can cache frequently queried data from RDS using ElastiCache, reducing database load.

Advanced Configuration and Best Practices

Redis Clustering and Sharding

For large-scale applications, Redis clustering and sharding allow you to distribute data across multiple nodes. Redis clustering partitions data into hash slots, and each node in the cluster is responsible for a subset of those slots.

Sharding can significantly increase the capacity of your cache by dividing the data across multiple nodes, ensuring that your application can scale horizontally.
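The Redis Cluster specification maps each key to one of 16,384 hash slots by taking the CRC16 (XMODEM variant) of the key modulo 16384. It also supports hash tags, so related keys land in the same slot. Below is a self-contained sketch of that mapping, for illustration only; cluster-aware clients compute it for you.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM (polynomial 0x1021, initial value 0), as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc

def key_slot(key: str) -> int:
    """Map a key to one of the 16,384 Redis Cluster hash slots."""
    data = key.encode("utf-8")
    # Hash tags: if the key contains "{...}" with a non-empty body, hash only that body
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:
            data = data[start + 1:end]
    return crc16_xmodem(data) % 16384
```

This explains why multi-key commands in cluster mode work only when all keys share a slot. Naming keys `{user1000}.following` and `{user1000}.followers` makes both hash on `user1000`.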

Memory Management and Eviction Policies

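ElastiCache lets you choose an eviction policy through the maxmemory-policy parameter in a cache parameter group, controlling how Redis frees memory when it reaches its limit. Some common eviction strategies include:

- LRU (Least Recently Used): Removes the least recently used data to make space for new data (allkeys-lru, or volatile-lru for keys with an expiration).

- TTL (Time-to-Live): Keys with a TTL expire automatically once their time limit passes; the volatile-ttl policy additionally evicts the keys closest to expiring first when memory runs out.

- Volatile: The volatile-* policies evict only keys that have an expiration time set.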

You can configure eviction policies based on your use case, whether you want to prioritize low-latency data access or manage memory more efficiently.
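On ElastiCache, the eviction policy is the Redis `maxmemory-policy` parameter, set in a cache parameter group. Default parameter groups cannot be modified, so you create a custom group and attach it to the cluster. Below is a sketch that builds the keyword arguments for boto3's `modify_cache_parameter_group`; the group name in the usage line is hypothetical.

```python
# Eviction policies accepted by Redis 4.0 and later
REDIS_EVICTION_POLICIES = {
    "noeviction", "allkeys-lru", "allkeys-lfu", "allkeys-random",
    "volatile-lru", "volatile-lfu", "volatile-random", "volatile-ttl",
}

def eviction_policy_request(parameter_group_name, policy):
    """Keyword arguments for boto3's ElastiCache modify_cache_parameter_group."""
    if policy not in REDIS_EVICTION_POLICIES:
        raise ValueError(f"unknown eviction policy: {policy}")
    return {
        "CacheParameterGroupName": parameter_group_name,
        "ParameterNameValues": [
            {"ParameterName": "maxmemory-policy", "ParameterValue": policy},
        ],
    }
```

With AWS credentials configured, `boto3.client("elasticache").modify_cache_parameter_group(**eviction_policy_request("my-redis-params", "allkeys-lru"))` would apply the change. Validating the name locally catches typos before the API call.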

Optimizing Cache Performance

To ensure that your cache performs optimally, consider:

- Using connection pooling to minimize connection overhead.

- Configuring TTL (Time-to-Live) for cached objects to ensure that stale data is evicted.

- Using clustered Redis for large datasets to distribute the load across multiple nodes.

Caching Strategies (Lazy Loading, Write-Through)

When deciding how to populate the cache, consider the following strategies:

- Lazy Loading: Only load data into the cache when it’s requested. This keeps the cache limited to data that is actually used, but the first request for each item (and the first request after it expires) is a cache miss.

- Write-Through: When data is written to the database, it’s also written to the cache. This ensures the cache is always up-to-date, but it can lead to higher write latency.

Both strategies have their place depending on the requirements of your application.
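Both strategies can be sketched with a redis-py-style `client` and a plain dict as a hypothetical stand-in for the database. `get_user` lazy-loads on a miss, while `save_user` writes through to the cache on every update.

```python
import json

def get_user(client, db, user_id, ttl=3600):
    """Lazy loading: populate the cache only when a read misses."""
    key = f"user:{user_id}"
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)
    user = db[user_id]  # hypothetical database read
    client.setex(key, ttl, json.dumps(user))
    return user

def save_user(client, db, user_id, user, ttl=3600):
    """Write-through: update the database, then the cache, in the same request."""
    db[user_id] = user  # hypothetical database write (source of truth)
    client.setex(f"user:{user_id}", ttl, json.dumps(user))
```

The two are often combined. Write-through keeps frequently updated items fresh, lazy loading fills in everything else, and a TTL on every key stops rarely read items from occupying memory indefinitely.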

Security Best Practices

Securing ElastiCache with VPC, IAM, and Encryption

Securing your Amazon ElastiCache instances is paramount, especially when dealing with sensitive or mission-critical data. Amazon provides several mechanisms to secure your ElastiCache clusters, including VPC (Virtual Private Cloud), IAM (Identity and Access Management), and encryption.

VPC (Virtual Private Cloud)

By placing your ElastiCache cluster within a VPC, you ensure that it is isolated from the public internet and only accessible from trusted resources within your network. This isolation minimizes the risk of unauthorized access.

- Private Subnets: For extra security, ensure that your ElastiCache nodes are in private subnets within your VPC, which prevents direct internet access.

- Security Groups and NACLs (Network Access Control Lists): Use security groups and NACLs to control traffic between your EC2 instances, Lambda functions, and ElastiCache. Only allow access from trusted IPs or services.

IAM (Identity and Access Management)

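With IAM roles and policies, you can control which AWS users and roles can manage your ElastiCache resources, for example who can create, modify, or delete clusters. With Redis 7 and later, IAM authentication can also control which identities may connect to a cluster, through the elasticache:Connect action. This is useful for limiting access to only authorized entities, such as your application servers.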

- Create an IAM role with the least privileges, ensuring it has only the necessary permissions to interact with ElastiCache.
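As a sketch of least privilege, the policy below grants only `elasticache:Connect`, the action ElastiCache uses for IAM authentication (supported for Redis OSS 7 and later), on one replication group and one ElastiCache user. The helper function is hypothetical, and the account ID, Region, and resource names are placeholders. Management actions such as creating or modifying clusters are deliberately left out.

```python
import json
from typing import Any, Dict


def connect_only_policy(region: str, account_id: str,
                        replication_group: str, user_id: str) -> Dict[str, Any]:
    """Hypothetical helper: IAM policy allowing only elasticache:Connect on one group and user."""
    prefix = f"arn:aws:elasticache:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["elasticache:Connect"],
                "Resource": [
                    f"{prefix}:replicationgroup:{replication_group}",
                    f"{prefix}:user:{user_id}",
                ],
            }
        ],
    }


policy = connect_only_policy("us-east-1", "123456789012", "my-cache", "app-user")
print(json.dumps(policy, indent=2))
```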

Encryption

To protect the data at rest and in transit, Amazon ElastiCache offers encryption capabilities:

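- Encryption at Rest: ElastiCache supports encryption of data stored on disk, such as data written during sync and backup operations. At-rest encryption is enabled when you create a cluster; to encrypt an existing unencrypted cluster, you generally create a new encrypted cluster from a backup.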

- Encryption in Transit: For Redis, you can enable TLS encryption to secure data exchanged between your clients and ElastiCache. This ensures that data is protected during transmission over the network.

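Access Control: Redis AUTH and Role-Based Access Control

Redis AUTH and Redis ACLs (Access Control Lists), which ElastiCache exposes as Role-Based Access Control (RBAC), provide mechanisms for controlling access to cache data.

Redis AUTH: Redis supports the AUTH command, which requires clients to authenticate with a password (an AUTH token) before they can interact with the Redis instance. On ElastiCache, an AUTH token can be set only when in-transit encryption is enabled, so clients must connect over TLS. This adds a layer of security, ensuring that only authorized clients can access your Redis data.

Code Example: Redis AUTH (Python)

```python
import redis

# Connect to an ElastiCache for Redis endpoint that requires an AUTH token.
# ElastiCache allows AUTH only with in-transit encryption, so TLS (ssl=True) is needed.
cache = redis.Redis(
    host="your-redis-endpoint",
    port=6379,
    password="your-redis-password",  # the cluster's AUTH token
    ssl=True,
)

# Example of reading a key; returns None if the key does not exist.
value = cache.get("key")
print(value)
```

Redis ACLs: With Redis 6 and later, ElastiCache supports RBAC, in which users belong to user groups and each user has an access string that limits which commands and keys it can use, for example letting a reporting service read data without being able to write it. ElastiCache for Memcached does not provide Redis-style ACLs, so access to Memcached clusters is controlled mainly at the network level with security groups and private subnets.

By combining authentication, ACLs, and network controls, you can strengthen security and block unauthorized access.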

Pricing and Cost Management

Understanding the Pricing Model

Amazon ElastiCache offers a pay-as-you-go pricing model, with charges based on several factors, such as:

- Node Type: The cost depends on the node size (memory capacity, vCPUs, and network performance). Larger nodes with more memory and processing power cost more.

- Node Count: Each node is billed, so cost grows with the number of nodes in the cluster. You can scale vertically by moving to larger node types or horizontally by adding more nodes.

- Data Transfer: Data transfer between Amazon EC2 and ElastiCache within the same Availability Zone is free, but traffic that crosses Availability Zones or AWS Regions incurs additional charges.

- Backup and Snapshots: If you enable backups, you’ll be charged for the storage used by the backup data.

Pricing Breakdown:

| Cost Factor | Description | Example Cost (on-demand) |
|---|---|---|
| Node Type | Price depends on the size and capacity of the node. | $0.03 per hour for a cache.t2.micro node |
| Data Transfer | Charges for data transferred out of ElastiCache (transfer within the same Availability Zone is free). | $0.09 per GB transferred to the internet |
| Backup and Snapshots | Charges for backup storage. | $0.10 per GB stored monthly |
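To see how these factors combine, the sketch below estimates a monthly on-demand bill using the example rates from the table. The rates are the table's illustrative figures rather than current AWS prices, and both the 730-hour month and the helper function are assumptions. Data transfer is left out because it depends on traffic patterns.

```python
def monthly_on_demand_cost(hourly_rate: float, node_count: int, backup_gb: float = 0.0,
                           backup_rate_per_gb: float = 0.0, hours: float = 730.0) -> float:
    """Hypothetical estimate: node-hours plus backup storage (data transfer excluded)."""
    node_cost = hourly_rate * hours * node_count
    backup_cost = backup_gb * backup_rate_per_gb
    return round(node_cost + backup_cost, 2)


# Three cache.t2.micro nodes at the example rate of $0.03/hour,
# plus 20 GB of backups at the example rate of $0.10 per GB-month.
print(monthly_on_demand_cost(0.03, 3, backup_gb=20, backup_rate_per_gb=0.10))  # 67.7
```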

Cost Optimization Tips for ElastiCache

To manage and optimize costs, consider the following strategies:

- Choose Appropriate Node Types: Select the right node size based on your application’s needs. Over-provisioning can lead to unnecessary costs, while under-provisioning can cause performance degradation.

- Scaling: Use ElastiCache Auto Scaling (available for Redis with cluster mode enabled) to add or remove shards or replicas as demand changes, ensuring you're not paying for unused capacity.

- Use Reserved Nodes: For long-term workloads, reserved nodes let you commit to a particular node type for a one- or three-year term in exchange for a significant discount compared with on-demand pricing.

- Manage Backup Retention: Enable automatic backups and snapshots for disaster recovery, but set retention periods so that you don't store excessive amounts of backup data.

- Avoid Over-Caching: Only cache frequently accessed data. Too much cached data can lead to increased memory usage and higher costs.

Additionally, you can optimize your ElastiCache costs by integrating intelligent scheduling and automation with OptimoScheduler. It automates the start/stop of ElastiCache nodes, ensuring resources are only running when necessary, helping reduce costs during off-hours. With custom schedules, you can optimize resource usage and reduce storage costs by retaining only the necessary backup and snapshot data. OptimoScheduler enables you to implement automated cost-saving strategies, allowing your team to focus on more strategic tasks.


Monitoring and Troubleshooting Amazon ElastiCache

Using CloudWatch Metrics for Performance Monitoring

Amazon CloudWatch provides robust monitoring tools to track the performance and health of your ElastiCache clusters. Key metrics to monitor include:

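- CPU Utilization: Measures the percentage of host CPU in use. Sustained high utilization suggests that your nodes may need scaling. Because Redis executes commands on a single main thread, also watch EngineCPUUtilization, which reports the CPU used by the Redis engine thread itself.

- Memory Usage: Tracks memory usage and can help detect when the cache is nearing its memory limit.

- Evictions: Counts keys removed because the cache reached its memory limit (expired keys are not counted). A high eviction rate may indicate that your cache is full and needs additional capacity.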

Example CloudWatch Metrics:

| Metric Name | Description |
|---|---|
| CPUUtilization | Percentage of host CPU capacity in use |
| DatabaseMemoryUsagePercentage | Percentage of available memory in use (Redis) |
| Evictions | Number of keys evicted because of the memory limit |

Setting CloudWatch Alarms allows you to receive notifications when any of these metrics exceed a predefined threshold. This helps you stay proactive in resolving performance issues.
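As an example of such an alarm, the sketch below builds the parameters for a CloudWatch alarm on the `Evictions` metric in the `AWS/ElastiCache` namespace, in the form boto3's `put_metric_alarm` accepts. The helper function is hypothetical, and the cluster ID, SNS topic ARN, threshold, and period are placeholders to tune for your workload.

```python
from typing import Any, Dict


def evictions_alarm(cluster_id: str, sns_topic_arn: str, threshold: float = 100.0) -> Dict[str, Any]:
    """Hypothetical helper: alarm when evictions exceed a threshold in two 5-minute periods."""
    return {
        "AlarmName": f"{cluster_id}-high-evictions",
        "Namespace": "AWS/ElastiCache",
        "MetricName": "Evictions",
        "Dimensions": [{"Name": "CacheClusterId", "Value": cluster_id}],
        "Statistic": "Sum",
        "Period": 300,            # seconds per evaluation period
        "EvaluationPeriods": 2,   # must breach in two consecutive periods
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # notify an SNS topic when the alarm fires
    }


params = evictions_alarm("my-cache-001", "arn:aws:sns:us-east-1:123456789012:cache-alerts")
print(params["AlarmName"])  # my-cache-001-high-evictions

# With AWS credentials configured:
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**params)
```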

Troubleshooting Common Issues (Connectivity, Latency, etc.)

When working with ElastiCache, some common issues may arise, such as:

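- Connectivity Issues: If your application cannot connect to ElastiCache, check the security group rules, subnet and VPC settings, and, if you use IAM authentication, IAM permissions. ElastiCache endpoints are not reachable from the public internet, so your application must run in the same VPC or in a network connected to it (for example, through VPC peering). Also confirm that the client's TLS setting matches the cluster: a cluster that requires in-transit encryption rejects plain-text connections.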

- Latency: High latency may occur if your cache is located in a different region than your application servers. Ensure that both ElastiCache and your application are in the same AWS region.

- Memory Issues: If your cache is running out of memory, consider optimizing the cache eviction strategy (e.g., using LRU eviction) or scaling the nodes to increase capacity.

Diagnosing Cache Misses and Memory Issues

Cache Misses: A cache miss occurs when the requested data is not in the cache, and it needs to be fetched from the original data store. To minimize cache misses, ensure:

- Appropriate cache population strategies like write-through or lazy loading are implemented.

- TTL (Time to Live) values are long enough that popular items are not expired needlessly, yet short enough that stale data is not served.
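A practical way to track cache misses is the hit ratio: hits divided by total lookups, computed from the `CacheHits` and `CacheMisses` CloudWatch metrics that ElastiCache publishes for Redis. The function below is a hypothetical helper. What ratio counts as healthy depends on your workload.

```python
def hit_ratio(cache_hits: int, cache_misses: int) -> float:
    """Fraction of lookups served from the cache; returns 0.0 when there were no lookups."""
    total = cache_hits + cache_misses
    if total == 0:
        return 0.0
    return cache_hits / total


# For example, 9,000 hits and 1,000 misses over the same period:
print(hit_ratio(9_000, 1_000))  # 0.9
```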

Memory Issues: If your cache is using too much memory, it could lead to slower performance or eviction of critical data. To address memory issues:

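- Adjust the eviction policy (the maxmemory-policy parameter for Redis) to better manage memory, for example allkeys-lru to evict the least recently used keys, or volatile-ttl to evict keys with the shortest remaining TTL first.

- Monitor memory metrics such as DatabaseMemoryUsagePercentage (Redis) and scale your nodes if needed.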

By leveraging CloudWatch and AWS support tools, you can troubleshoot common issues and optimize your ElastiCache setup.

Amazon ElastiCache in Real-World Scenarios

Case Study 1: E-commerce Website Performance Enhancement

In the world of e-commerce, speed and responsiveness are critical to user satisfaction. Imagine a popular online store that faces performance issues during peak traffic times, leading to slow page loads and poor user experience. By integrating Amazon ElastiCache into their architecture, the store can significantly enhance its performance.

How It Works:

- Caching Product Data: Frequently accessed product information, such as pricing, availability, and images, is stored in ElastiCache (e.g., Redis). This reduces the need to query the backend database repeatedly for the same data.

- Caching User Sessions: User session data is also cached in ElastiCache, ensuring that user preferences, cart items, and other session-based information are available instantly.
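A minimal session-caching sketch follows. It assumes sessions are stored as JSON strings under hypothetical `session:<id>` keys with a TTL that is refreshed on each read (a sliding expiration). The functions accept any client with redis-py-style `get(name)`, `set(name, value, ex=...)`, and `expire(name, time)` methods, such as `redis.Redis(..., decode_responses=True)`. The in-memory `FakeClient` exists only so the example runs without a cache, and it does not actually expire keys.

```python
import json
from typing import Any, Dict, Optional

SESSION_TTL_SECONDS = 1800  # assumed 30-minute idle timeout


def save_session(client: Any, session_id: str, data: Dict[str, Any]) -> None:
    # Serialize the session and set its TTL.
    client.set(f"session:{session_id}", json.dumps(data), ex=SESSION_TTL_SECONDS)


def load_session(client: Any, session_id: str) -> Optional[Dict[str, Any]]:
    key = f"session:{session_id}"
    raw = client.get(key)
    if raw is None:
        return None  # expired or never created
    client.expire(key, SESSION_TTL_SECONDS)  # sliding expiration: refresh the TTL
    return json.loads(raw)


class FakeClient:
    """Stand-in for a Redis client; stores strings in a dict and only records TTLs."""

    def __init__(self) -> None:
        self.store: Dict[str, str] = {}
        self.last_ttl: Optional[int] = None

    def get(self, name: str) -> Optional[str]:
        return self.store.get(name)

    def set(self, name: str, value: str, ex: Optional[int] = None) -> bool:
        self.store[name] = value
        self.last_ttl = ex
        return True

    def expire(self, name: str, time: int) -> bool:
        self.last_ttl = time
        return name in self.store


client = FakeClient()
save_session(client, "abc123", {"user_id": 7, "cart": ["sku-1", "sku-2"]})
print(load_session(client, "abc123"))  # {'user_id': 7, 'cart': ['sku-1', 'sku-2']}
```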

Results:

- Improved Page Load Times: Cached product data reduces the load on the database, making page loads faster.

- Reduced Database Load: By offloading frequent read queries to the cache, the backend database can focus on more complex operations, such as order processing or inventory management.

Case Study 2: Real-Time Data Caching for Analytics

For real-time analytics platforms, speed is critical for delivering insights. In this scenario, a real-time analytics platform relies on frequent updates to dashboards and live data visualizations. Storing this data directly in a database could result in delays due to the sheer volume of queries.

How It Works:

- Storing Real-Time Metrics: Metrics like web traffic, user interactions, or social media engagement are stored temporarily in ElastiCache, providing quick access to up-to-date data.

- Aggregating Data: Complex analytics queries that are frequently accessed are cached, improving the speed of data aggregation and reducing database load.

Results:

- Lower Latency: Caching real-time data allows users to receive instant analytics without the need to wait for queries to run.

- Scalability: As data volume grows, ElastiCache provides the flexibility to scale the cache infrastructure seamlessly.

Case Study 3: Multiplayer Game State Management

In the gaming world, especially for multiplayer online games, managing player states and real-time game progress is critical. An online game may have thousands or even millions of players interacting in real-time, and caching their game state in memory can significantly reduce server load and improve player experience.

How It Works:

- Caching Player States: Player positions, scores, in-game inventory, and other frequently accessed game data are stored in ElastiCache, reducing the need to continuously query the primary database.

- Handling High Load: During peak gaming sessions, the cache handles the rapid influx of requests, ensuring that the game performs without delays.
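For player scores specifically, a common pattern is a Redis sorted set used as a leaderboard. The sketch assumes a redis-py client created with `decode_responses=True` and uses its `zadd` and `zrevrange` methods. The `leaderboard:<game>` key name and the helper functions are hypothetical, and the in-memory `FakeClient`, which mimics only these two calls, lets the example run without a server.

```python
from typing import Any, Dict, List, Tuple


def record_score(client: Any, game_id: str, player: str, score: float) -> None:
    # ZADD keeps one score per player; the set stays ordered by score.
    client.zadd(f"leaderboard:{game_id}", {player: score})


def top_players(client: Any, game_id: str, n: int) -> List[Tuple[str, float]]:
    # ZREVRANGE returns members from highest to lowest score (end index is inclusive).
    return client.zrevrange(f"leaderboard:{game_id}", 0, n - 1, withscores=True)


class FakeClient:
    """Stand-in that mimics redis-py's zadd/zrevrange within a single process."""

    def __init__(self) -> None:
        self.zsets: Dict[str, Dict[str, float]] = {}

    def zadd(self, name: str, mapping: Dict[str, float]) -> int:
        zset = self.zsets.setdefault(name, {})
        added = sum(1 for member in mapping if member not in zset)
        zset.update(mapping)
        return added

    def zrevrange(self, name: str, start: int, end: int, withscores: bool = False) -> List[Any]:
        # Highest score first; ties ordered by member in reverse, as Redis does.
        items = sorted(self.zsets.get(name, {}).items(),
                       key=lambda kv: (kv[1], kv[0]), reverse=True)
        stop = end + 1 if end >= 0 else len(items) + end + 1
        selected = items[start:stop]
        return selected if withscores else [member for member, _ in selected]


client = FakeClient()
for player, score in [("ana", 1200.0), ("ben", 950.0), ("cai", 1430.0)]:
    record_score(client, "match-1", player, score)
print(top_players(client, "match-1", 2))  # [('cai', 1430.0), ('ana', 1200.0)]
```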

Results:

- Improved Player Experience: Real-time updates with minimal latency ensure players experience smooth gameplay.

- Reduced Database Bottlenecks: Game state data stored in memory allows the primary database to handle more critical backend processes without overload.

Alternatives to Amazon ElastiCache

While Amazon ElastiCache is a powerful caching solution, it’s not the only option available in the market. Depending on your specific use case and architecture, there are several alternatives worth considering.

Third-Party Solutions

Several third-party solutions can provide caching mechanisms with different sets of features, performance characteristics, and pricing models. Popular third-party caching solutions include:

- Redis Cloud: A fully managed Redis service from Redis Ltd. (formerly Redis Labs) offering enhanced features such as multi-region replication and automated scaling. It can be an option for users who need advanced Redis capabilities but prefer a solution outside the AWS ecosystem.

- Memcached on DigitalOcean: For users seeking a simpler, more cost-effective setup, Memcached can be run on DigitalOcean infrastructure for caching, though self-managing it requires more hands-on work than ElastiCache.

- KeyDB: KeyDB is a high-performance fork of Redis that focuses on multi-threaded performance. If you need a caching solution with strong performance and flexibility, KeyDB might be worth considering.

Other AWS Services for Caching

While ElastiCache is Amazon’s dedicated caching solution, AWS provides other services that may overlap with caching in certain use cases:

- Amazon DynamoDB Accelerator (DAX): DAX is a fully managed, highly available in-memory cache for DynamoDB. It is specifically designed for use with DynamoDB, offering ultra-fast read performance for workloads that require low-latency access to data.

When to Choose DAX:

- If you're heavily invested in DynamoDB and need caching to speed up query results.

- If you're building serverless applications that require seamless integration with AWS Lambda.

- Amazon S3 with CloudFront: For static content like images, videos, and downloadable files, Amazon S3 combined with CloudFront can provide an efficient caching mechanism by caching content closer to end users, reducing the load on origin servers.

When to Choose CloudFront:

- When delivering static content to global audiences with minimal latency.

- If you need edge caching for static files (e.g., media content).

Conclusion

In conclusion, Amazon ElastiCache is a powerful managed caching solution that enhances application performance with low latency, scalability, and high availability. It offers flexibility with Redis and Memcached, and integrates seamlessly with other AWS services like EC2, Lambda, and RDS.

With strong security features, cost-efficiency, and effective monitoring, ElastiCache is ideal for real-time data processing, session management, and more. Whether for web apps, analytics, or game state management, ElastiCache simplifies caching while ensuring performance and reliability, making it an excellent choice for modern cloud architectures.