A Brief History

In the early days of computing, CPUs (Central Processing Units) were the backbone of all computational tasks. They were highly versatile, handling a broad range of functions, but they were not optimized for parallel processing tasks such as graphics rendering, artificial intelligence (AI) computations, and machine learning. As industries like gaming, AI, and video rendering began demanding faster, more efficient computing, the limitations of CPUs became evident.

To meet this demand, the Graphics Processing Unit (GPU) was developed. Initially designed for rendering 3D graphics in gaming, GPUs quickly proved invaluable in fields like deep learning, big data analytics, and scientific simulations. Their ability to process multiple tasks simultaneously made them the ideal choice for compute-heavy, parallel workloads.

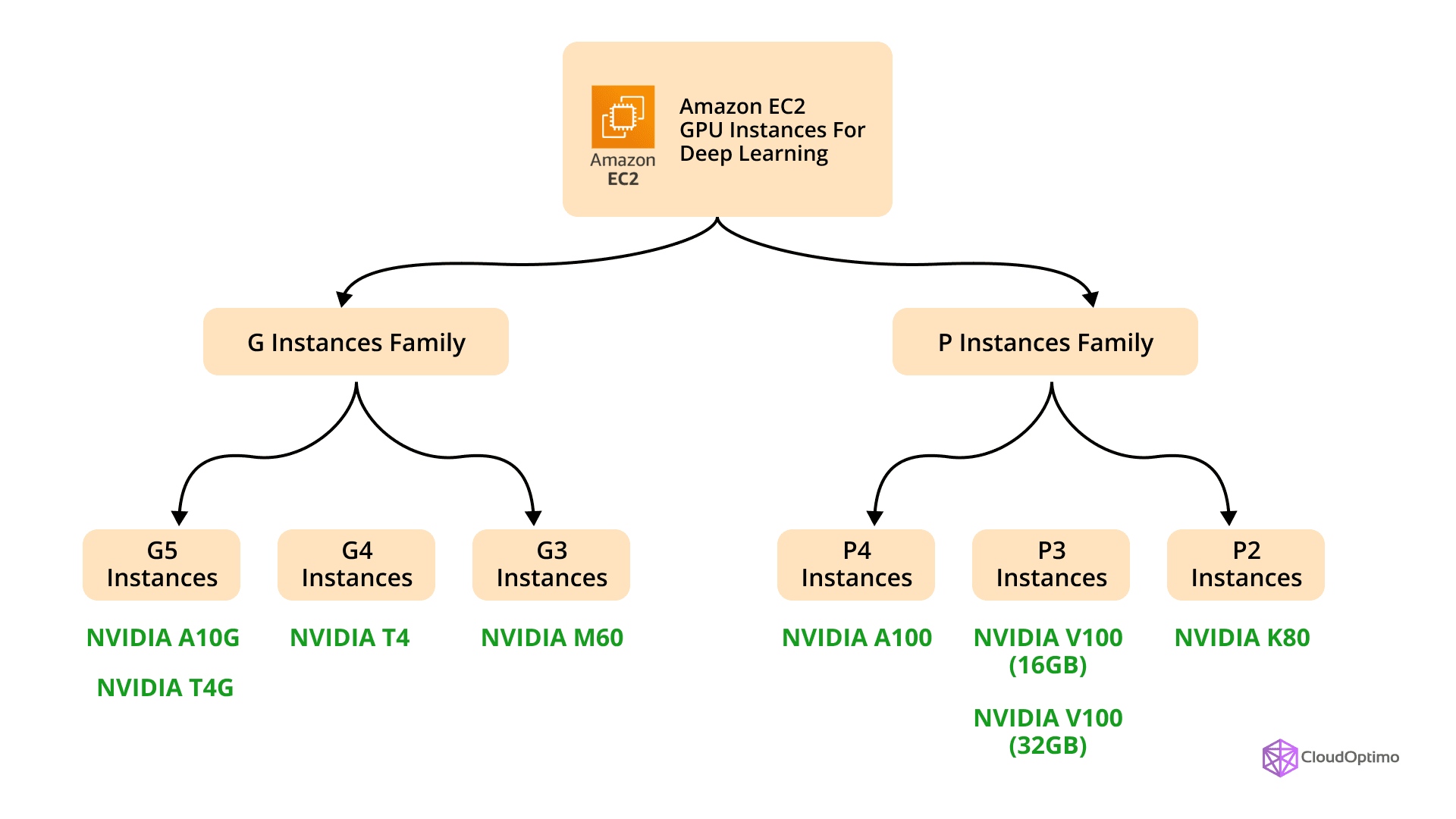

AWS's First GPU-Powered Instances: The P2 and P3 Families

Recognizing the growing demand for specialized computing power, AWS introduced GPU-powered EC2 instances with the P2 and P3 families, built for high-performance tasks like machine learning, AI training, and 3D rendering. These instances revolutionized the cloud computing landscape by making GPU-powered performance accessible on demand. No longer did businesses have to invest in expensive, on-premises infrastructure to leverage the power of GPUs.

However, while these instances offered cutting-edge performance, they were designed for the most demanding workloads, and with that came a high price tag. Many businesses needed a solution that balanced performance with cost, especially for workloads like AI inference, cloud gaming, and video transcoding—tasks that didn’t require the ultra-high performance of P3 instances but still needed powerful GPU capabilities.

The Gap: The Need for a Cost-Effective GPU Solution

As AWS continued to expand its range of instances, it became clear that there was a gap in the market. Businesses sought a cost-effective solution that could handle demanding workloads but didn’t require the extreme capabilities of the P3 instances. These businesses needed powerful GPU instances that were affordable and optimized for applications like AI inference and video transcoding, which required substantial GPU performance, but not at the highest level.

The Introduction of the G4 Family: A Game-Changer

AWS addressed this gap with the introduction of the G4 instance family, powered by NVIDIA T4 Tensor Core GPUs. Designed to offer a perfect balance of performance and cost-efficiency, G4 instances quickly became the go-to solution for businesses looking for high-performance GPU capabilities without the high price tag of P3 instances. These instances were optimized for a range of applications, including machine learning inference, cloud gaming, video transcoding, and graphics-intensive workloads.

Since their launch, G4 instances have enabled organizations to scale AI-driven innovations, deliver stunning real-time graphics, and optimize video workloads, setting new benchmarks for GPU-powered cloud computing. With their cost-effectiveness and high-performance capabilities, they have become an essential tool for industries ranging from healthcare to gaming, providing businesses with the resources they need to grow and innovate.

But what exactly sets the G4 family apart from previous instances like the P2 and P3 families? The following comparison helps clarify how each of these families differs in terms of performance, pricing, and suitability for different workloads.

P2, P3, and G4 Instances Comparison

Here’s a detailed comparison of the P2, P3, and G4 instances, highlighting the differences in key features, performance, and cost:

| Feature | P2 Family | P3 Family | G4 Family |
| --- | --- | --- | --- |
| GPU Type | NVIDIA K80 GPUs | NVIDIA V100 GPUs | NVIDIA T4 Tensor Core GPUs |
| Use Case | Deep learning, High-performance computing | Deep learning training, Data analytics, Research | AI inference, Video transcoding, Cloud gaming, ML inference |
| Performance Focus | General-purpose GPU tasks | High-performance computing, AI model training | Inference workloads, Cloud gaming, Graphics rendering |
| Example On-Demand Price | ~$0.90 per hour (p2.xlarge) | ~$3.06 per hour (p3.2xlarge) | ~$0.526 per hour (g4dn.xlarge) |
| GPU Memory | 12 GB per GPU | 16 GB per GPU | 16 GB per GPU |
| GPU Architecture | Kepler (older generation) | Volta (highly specialized for deep learning) | Turing (optimized for inference workloads) |
| vCPUs | 4-64 vCPUs per instance | 8-64 vCPUs per instance (96 on p3dn.24xlarge) | 4-64 vCPUs per instance (96 on g4dn.metal) |
| Networking | 10 Gbps | 25 Gbps | 25 Gbps |
| EBS Throughput | Up to 4,750 Mbps | Up to 8,500 Mbps | Up to 14,000 Mbps |
| Key Advantage | Good for training AI models, large-scale simulations | Ideal for deep learning, AI model training with massive datasets | Cost-effective with high GPU performance for inference and graphics rendering |
| Target Users | Enterprises and research organizations requiring high GPU power | Businesses focused on AI and machine learning training at scale | Startups, gaming companies, video editors, businesses scaling AI inference |

Pricing Details:

- P2 Family: On-demand pricing for a p2.xlarge instance is approximately $0.90 per hour. Larger instances, such as p2.8xlarge, cost significantly more, around $7.20 per hour.

- P3 Family: On-demand pricing for a p3.2xlarge instance is around $3.06 per hour, with higher-end options like p3.8xlarge and p3.16xlarge being much more expensive (around $24.48 per hour for p3.16xlarge).

- G4 Family: On-demand pricing for g4dn.xlarge starts at $0.526 per hour, making it a much more cost-effective solution for businesses needing GPU power for tasks like AI inference, cloud gaming, and 3D rendering.

Notes:

- Spot pricing can be significantly lower, and Reserved instances offer a pricing discount for long-term commitment.

- Prices for different instance types (e.g., p2.8xlarge, p3.16xlarge, g4dn.12xlarge) vary, so selecting the right instance depends on the workload and scale needed.

Understanding AWS EC2 G4 Instances

To truly understand the value of G4 instances, it's important to grasp their core design and intended use. The AWS EC2 G4 family is optimized for graphics-intensive and compute-heavy tasks, making it a powerful solution for workloads that require substantial GPU resources.

- Built for AI and graphics tasks: Equipped with NVIDIA T4 Tensor Core GPUs, G4 instances deliver exceptional performance for AI inference, 3D rendering, video processing, and more.

- Balancing performance and cost: G4 instances provide a more affordable alternative compared to higher-end GPU instances, making them ideal for businesses that need GPU power without overspending.

- Versatility for diverse workloads: From scaling AI models to running complex graphics, G4 instances are suitable for a wide range of industries and use cases.

G4 instances are designed to deliver a balanced solution that allows businesses to manage both performance and cost, whether you're working on cutting-edge AI or advanced graphics rendering.

Key Features of G4 Instances

G4 instances are tailored for businesses needing high-performance GPU power, with a focus on both affordability and efficiency. The combination of cutting-edge technology and scalability makes G4 instances an ideal choice for GPU-intensive workloads. Here are the key features that set G4 instances apart:

NVIDIA T4 Tensor Core GPUs

G4 instances are equipped with NVIDIA T4 Tensor Core GPUs, which deliver strong performance for demanding applications like real-time AI inference, graphics rendering, and high-performance computing. The T4 is also a low-power part (about 70 W per GPU), so it offers high performance per watt. Whether you're running machine learning models or rendering complex graphics, the T4 balances throughput and energy efficiency.

Horizontal and Vertical Scalability

One of the standout features of G4 instances is their scalability. Businesses can scale up or down easily to meet varying workload demands. Horizontal scalability allows you to add more instances to handle increases in workload, while vertical scalability ensures that you can adjust the instance size based on resource requirements. This flexibility ensures that businesses can optimize performance based on their current needs while avoiding over-provisioning and unnecessary costs.

Cost-effective Performance

While GPU-intensive workloads are traditionally expensive, G4 instances provide high GPU performance at a more accessible cost compared to other GPU instance families. G4 instances are a more budget-friendly option for businesses looking to integrate GPU power into their operations without the need to invest in costly hardware. By offering a perfect balance between performance and pricing, G4 instances make GPU-powered cloud computing viable for small and medium-sized businesses as well as large enterprises.

Pre-configured Frameworks

When launched from an AWS Deep Learning AMI or a similar pre-built image, G4 instances come with NVIDIA drivers and libraries already installed, eliminating the need for developers to spend time on manual setup. With this software in place, deploying GPU workloads becomes faster and easier, allowing businesses to focus on their core tasks rather than the technicalities of instance configuration. The pre-configured images also integrate with popular machine learning frameworks, such as TensorFlow and PyTorch, so developers can start working right away.

Detailed Technical Specifications

The instances come in various configurations, each tailored to meet specific business needs. This ensures that users can select the right option based on their performance requirements and budget.

The following table summarizes the key configurations of G4 instances, highlighting their specs and use cases:

| Instance Type | vCPUs | GPU | GPU Memory | RAM | Storage | Use Case |
| --- | --- | --- | --- | --- | --- | --- |
| g4dn.xlarge | 4 | 1 NVIDIA T4 GPU | 16 GB | 16 GB | 125 GB SSD | Entry-level AI inference, Graphics rendering |
| g4dn.2xlarge | 8 | 1 NVIDIA T4 GPU | 16 GB | 32 GB | 225 GB SSD | Medium-scale AI inference, Video processing |
| g4dn.4xlarge | 16 | 1 NVIDIA T4 GPU | 16 GB | 64 GB | 225 GB SSD | High-performance AI inference, 3D rendering |
| g4dn.8xlarge | 32 | 1 NVIDIA T4 GPU | 16 GB | 128 GB | 900 GB SSD | Large-scale AI inference, Deep learning training |
| g4dn.12xlarge | 48 | 4 NVIDIA T4 GPUs | 64 GB | 192 GB | 900 GB SSD | Training AI models, Advanced visualization |
| g4dn.16xlarge | 64 | 1 NVIDIA T4 GPU | 16 GB | 256 GB | 900 GB SSD | Rendering ultra-HD graphics, High-end AI training |

The variety of configurations available ensures that G4 instances are a flexible, scalable solution for businesses with varying levels of GPU needs. From entry-level tasks to complex high-end applications, AWS EC2 G4 instances deliver consistent, high-performance results across different workloads.
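Choosing a size from the table can be sketched as a simple lookup. The snippet below is a minimal illustration, not an AWS tool: the `G4Size` type and `smallest_fit` function are hypothetical names, and the vCPU and RAM figures are copied from the table above. It ignores GPU count, storage, and price, which a real selection would also weigh.

```python
from typing import NamedTuple, Optional


class G4Size(NamedTuple):
    name: str
    vcpus: int
    ram_gb: int


# vCPU and RAM figures copied from the table above, ordered smallest to largest.
G4DN_SIZES = [
    G4Size("g4dn.xlarge", 4, 16),
    G4Size("g4dn.2xlarge", 8, 32),
    G4Size("g4dn.4xlarge", 16, 64),
    G4Size("g4dn.8xlarge", 32, 128),
    G4Size("g4dn.12xlarge", 48, 192),
    G4Size("g4dn.16xlarge", 64, 256),
]


def smallest_fit(min_vcpus: int, min_ram_gb: int) -> Optional[str]:
    """Return the first (smallest) size meeting both minimums, or None."""
    for size in G4DN_SIZES:
        if size.vcpus >= min_vcpus and size.ram_gb >= min_ram_gb:
            return size.name
    return None


print(smallest_fit(6, 24))    # g4dn.2xlarge
print(smallest_fit(16, 100))  # g4dn.8xlarge
print(smallest_fit(128, 16))  # None
```

Because the list is ordered from smallest to largest, the first match is also the least over-provisioned option for the two dimensions checked.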

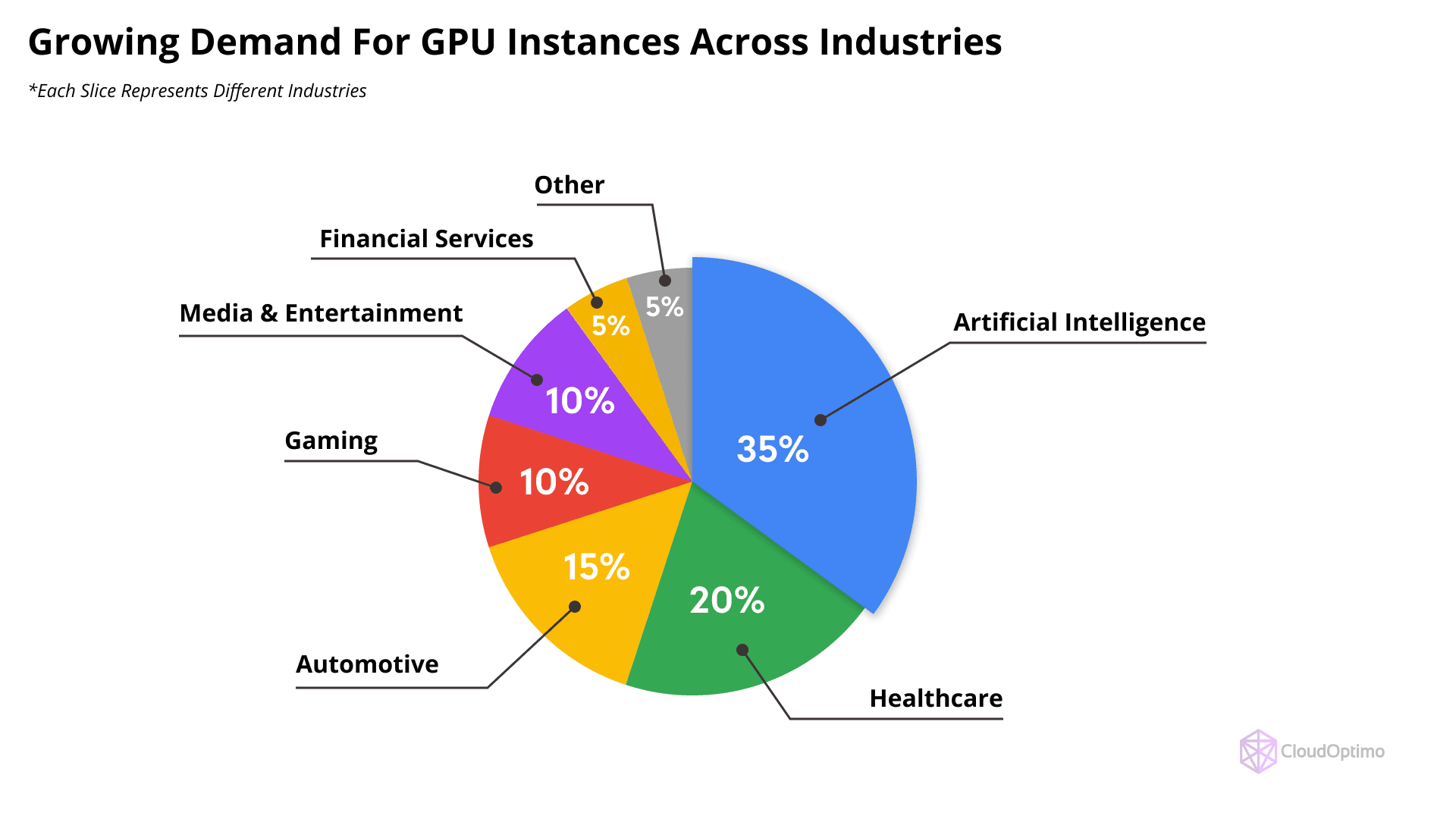

Why G4 Instances Matter Today

AWS EC2 G4 instances are revolutionizing industries by offering a cost-effective, scalable, and high-performance GPU solution. These instances strike the perfect balance for businesses seeking powerful GPU capabilities without the hefty price tag of high-end GPU instances like the P3 family. Here’s why G4 instances are gaining momentum:

- Cost-Efficiency: G4 instances deliver powerful GPU performance without overspending on unnecessary resources.

- Performance: With NVIDIA T4 Tensor Core GPUs, they provide excellent performance for workloads such as AI inference, video transcoding, and cloud gaming.

- Flexible and Scalable: G4 instances allow businesses to scale GPU resources based on fluctuating demands, making them ideal for both startups and established enterprises.

By offering GPU power at a price that meets the needs of budget-conscious businesses, G4 instances continue to lead the way in GPU-powered cloud computing, making them an indispensable tool for companies striving to stay ahead in the competitive digital world.

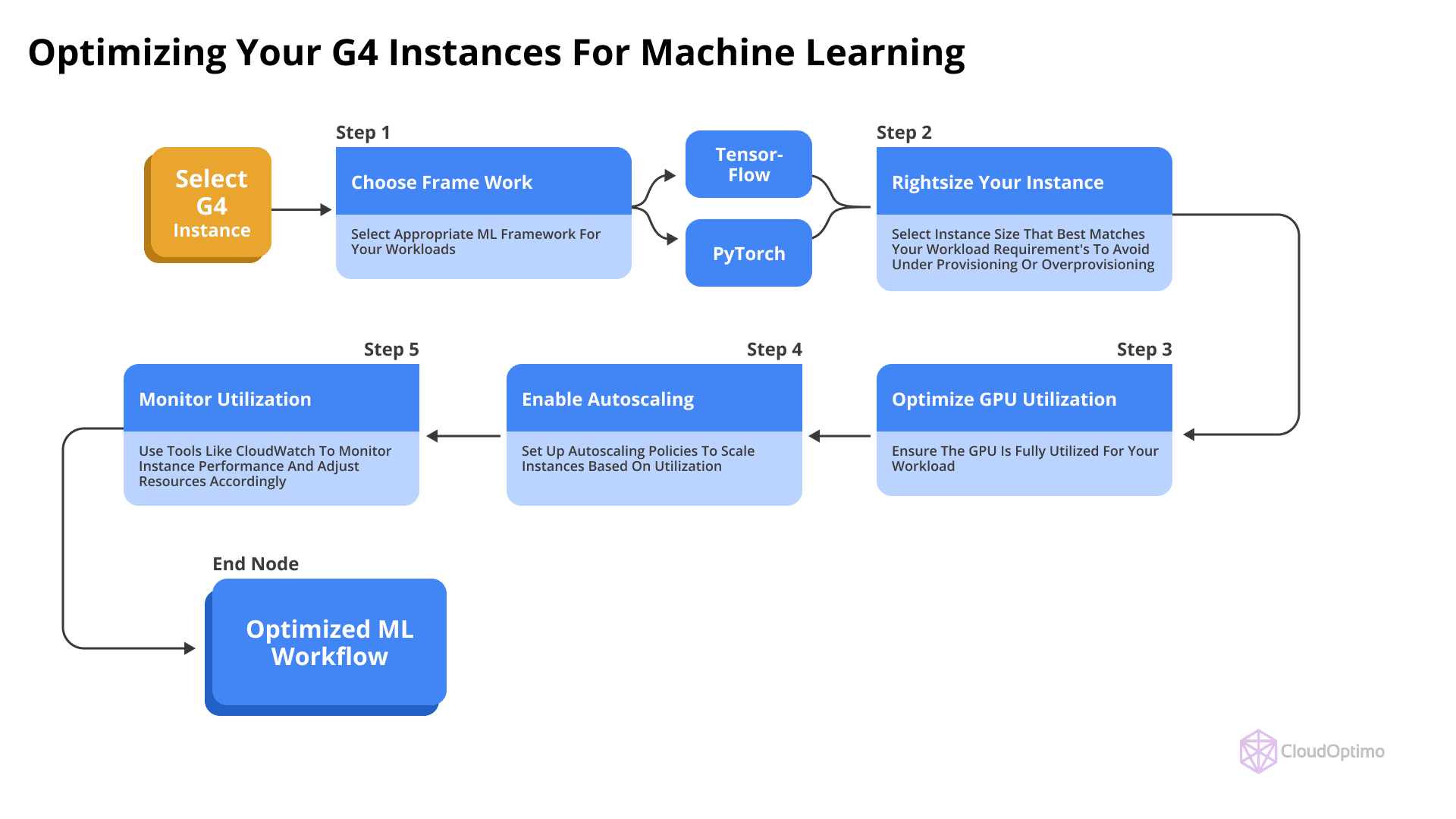

Optimizing Your AWS EC2 G4 Instances for Machine Learning

Now that you understand the core features and technical specifications of G4 instances, let’s explore how to optimize them for machine learning (ML) workloads, especially inference tasks.

Using TensorFlow and PyTorch

G4 instances are particularly well-suited for popular ML frameworks like TensorFlow and PyTorch. The NVIDIA T4 GPUs’ Tensor Cores accelerate mixed-precision calculations, enabling faster and more efficient inference and training.

AWS makes it easy to get started with pre-configured Deep Learning AMIs (Amazon Machine Images). These AMIs come with optimized versions of TensorFlow, PyTorch, MXNet, and other frameworks, along with GPU drivers and libraries like CUDA and cuDNN.

Tips for Using TensorFlow and PyTorch on G4 Instances:

- Enable Mixed Precision: Use mixed-precision training with Tensor Cores to speed up computations. In TensorFlow, you can use tf.keras.mixed_precision to enable this feature.

- Leverage NVIDIA TensorRT: TensorRT is an SDK for optimizing and deploying ML models on NVIDIA GPUs. It helps reduce latency and improve throughput during inference.

- Batch Inference Requests: For inference workloads, batching multiple requests together can improve GPU utilization and reduce overhead.
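The batching tip can be illustrated without any ML framework. The sketch below groups pending requests into fixed-size batches; `make_batches` is a hypothetical helper, and a real serving stack would typically also flush partial batches after a timeout, which is omitted here.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def make_batches(requests: Sequence[T], max_batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive batches of at most max_batch_size requests."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    for start in range(0, len(requests), max_batch_size):
        yield list(requests[start:start + max_batch_size])


# Ten pending requests grouped into batches of up to four.
pending = [f"req-{i}" for i in range(10)]
batch_sizes = [len(batch) for batch in make_batches(pending, 4)]
print(batch_sizes)  # [4, 4, 2]
```

Each batch would then be passed to the model in a single call, so the GPU processes several inputs per launch instead of one.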

Best Practices for Model Training

While G4 instances excel at inference, they are also effective for training smaller models or fine-tuning pre-trained models. Here are some best practices:

- Choose the Right Instance Size: For small to medium models, g4dn.xlarge or g4dn.2xlarge may be sufficient. For larger models, consider g4dn.12xlarge or g4dn.metal for multi-GPU capabilities.

- Multi-GPU Configurations: If your workload can benefit from parallel processing, use G4 instances with multiple GPUs (like g4dn.12xlarge or g4dn.metal) to accelerate training.

- Distributed Training: Utilize frameworks like Horovod for distributed training to scale across multiple instances.

AWS Pricing Models for G4 Instances

AWS offers multiple pricing options for G4 instances, allowing businesses to tailor costs to their operational needs. Here’s how they break down:

On-Demand Pricing (Approximate, per hour)

Features:

- Pay per hour or second (depending on the instance type).

- No upfront payments or commitments.

Use Cases:

- Development and testing environments.

- Temporary workloads with uncertain durations.

| Instance Type | vCPUs | GPUs (T4) | Memory (GB) | Instance Storage | Price (USD/hour) |
| --- | --- | --- | --- | --- | --- |
| g4dn.xlarge | 4 | 1 | 16 | 125 GB SSD | $0.53 |
| g4dn.2xlarge | 8 | 1 | 32 | 225 GB SSD | $0.75 |
| g4dn.4xlarge | 16 | 1 | 64 | 225 GB SSD | $1.20 |
| g4dn.8xlarge | 32 | 1 | 128 | 900 GB SSD | $2.18 |
| g4dn.12xlarge | 48 | 4 | 192 | 900 GB SSD | $3.91 |
| g4dn.16xlarge | 64 | 1 | 256 | 900 GB SSD | $4.35 |

Note: Prices are approximate and depend on the AWS region. For instance, costs in the US East (N. Virginia) region may differ slightly from those in Europe or Asia-Pacific regions.
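To turn the hourly rates into a budget figure, multiply by the hours an instance actually runs. The sketch below uses the approximate rates from the table above; `on_demand_cost` is a hypothetical helper and the usage patterns are illustrative assumptions.

```python
# Approximate On-Demand rates (USD/hour) from the table above.
ON_DEMAND_USD_PER_HOUR = {
    "g4dn.xlarge": 0.53,
    "g4dn.2xlarge": 0.75,
    "g4dn.4xlarge": 1.20,
    "g4dn.8xlarge": 2.18,
    "g4dn.12xlarge": 3.91,
    "g4dn.16xlarge": 4.35,
}


def on_demand_cost(instance_type: str, hours: float, instance_count: int = 1) -> float:
    """Estimated On-Demand cost in USD, rounded to cents."""
    rate = ON_DEMAND_USD_PER_HOUR[instance_type]
    return round(rate * hours * instance_count, 2)


# A development box used 8 hours a day for 22 working days.
print(on_demand_cost("g4dn.xlarge", hours=8 * 22))  # 93.28
# The same instance left running for a 730-hour month.
print(on_demand_cost("g4dn.xlarge", hours=730))     # 386.9
```

The gap between the two figures shows why stopping idle On-Demand instances is usually the first cost lever to pull.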

Spot Instances (Approximate, per hour)

Spot Instances provide massive cost savings, making them a preferred choice for fault-tolerant and flexible workloads.

- Key Features:

- Up to 90% savings compared to On-Demand pricing.

- Priced dynamically based on AWS capacity availability.

- Use Cases:

- Non-critical workloads like batch processing, data analytics, and model training.

- Short-term rendering jobs.

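| Instance Type | Price Range at ~80%-90% Savings (USD/hour) | Savings |
| --- | --- | --- |
| g4dn.xlarge | $0.0526 – $0.104 | ~80%-90% |
| g4dn.2xlarge | $0.0752 – $0.150 | ~80%-90% |
| g4dn.4xlarge | $0.1204 – $0.240 | ~80%-90% |
| g4dn.8xlarge | $0.2176 – $0.400 | ~80%-90% |
| g4dn.12xlarge | $0.3912 – $0.750 | ~80%-90% |
| g4dn.16xlarge | $0.4352 – $0.800 | ~80%-90% |

These ranges reflect best-case discounts. Actual Spot prices fluctuate with available capacity and can be higher, so check current prices for your region before planning around them.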

Tip: Use Spot Instance Advisor to monitor pricing trends and plan effectively.

Reserved Instances (1-Year or 3-Year Commitments)

Reserved Instances (RIs) are ideal for consistent, long-term workloads, offering significant savings over On-Demand rates.

- Features:

- 1- or 3-year commitment options with varying payment methods.

- Upfront payment options: All Upfront, Partial Upfront, or No Upfront.

- Savings:

- Up to 72% for 3-year commitments.

- Best for:

- Workloads with predictable, stable demands like backend services.

| Instance Type | 1-Year Savings (%) | 3-Year Savings (%) | Approx. Effective Hourly Cost, 1-Year (USD) |
| --- | --- | --- | --- |
| g4dn.xlarge | ~30% | ~60%-72% | $0.37 |
| g4dn.2xlarge | ~30% | ~60%-72% | $0.53 |
| g4dn.4xlarge | ~30% | ~60%-72% | $0.84 |

Savings Plans

Savings Plans offer flexibility across AWS services, making them an excellent option for multi-service architectures.

- Features:

- Commit to hourly usage across services for 1 or 3 years.

- Save up to 66% on EC2 instances.

- Best Use Cases:

- Architectures that span several AWS compute services, such as EC2, Lambda, and Fargate.

Additional Cost Considerations

When using G4 instances, it’s essential to account for additional costs that can impact overall expenditure.

Data Transfer Costs:

Data transfer pricing depends on inbound and outbound traffic volume.

- Inbound Data: Free for all AWS regions.

- Outbound Data:

- $0.09/GB for the first 10 TB/month.

- Lower rates for higher usage tiers.

Storage Costs (EBS Volumes):

Amazon EBS is the preferred storage option for G4 instances.

- Options:

- General Purpose SSD (gp3): $0.08/GB per month.

- Provisioned IOPS SSD (io2): $0.125/GB per month + $0.065/provisioned IOPS per month.

Elastic IP Addresses:

- Charged at $0.005 per hour when not associated with a running instance.

Management and Monitoring:

- Amazon CloudWatch Basic Monitoring: Free.

- Amazon CloudWatch Detailed Metrics: Starts at $0.01 per metric per hour.
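The transfer and storage rates above can be combined into a rough monthly estimate. This is a minimal sketch using only the figures quoted in this section: the function names are hypothetical, only the first outbound tier is modeled because the higher-tier rates are not given here, and the 1 TB = 1,024 GB conversion is an assumption.

```python
# Rates quoted in this section (USD); confirm against current AWS pricing.
DATA_OUT_USD_PER_GB = 0.09        # first 10 TB/month tier only
FIRST_TIER_LIMIT_GB = 10 * 1024   # assumes 1 TB = 1,024 GB
GP3_USD_PER_GB_MONTH = 0.08
IO2_USD_PER_GB_MONTH = 0.125
IO2_USD_PER_IOPS_MONTH = 0.065


def data_out_cost(gb_out: float) -> float:
    """Outbound transfer cost for volumes inside the first pricing tier."""
    if gb_out > FIRST_TIER_LIMIT_GB:
        raise ValueError("higher tiers use lower rates not modeled here")
    return round(gb_out * DATA_OUT_USD_PER_GB, 2)


def gp3_cost(size_gb: float) -> float:
    """Monthly gp3 capacity cost (extra provisioned IOPS/throughput not modeled)."""
    return round(size_gb * GP3_USD_PER_GB_MONTH, 2)


def io2_cost(size_gb: float, provisioned_iops: int) -> float:
    """Monthly io2 cost: capacity charge plus provisioned IOPS charge."""
    return round(
        size_gb * IO2_USD_PER_GB_MONTH + provisioned_iops * IO2_USD_PER_IOPS_MONTH, 2
    )


print(data_out_cost(500))   # 45.0
print(gp3_cost(200))        # 16.0
print(io2_cost(200, 3000))  # 220.0
```

For the same 200 GB volume, the io2 estimate is dominated by the IOPS charge, which is why gp3 is usually the default unless a workload needs provisioned IOPS.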

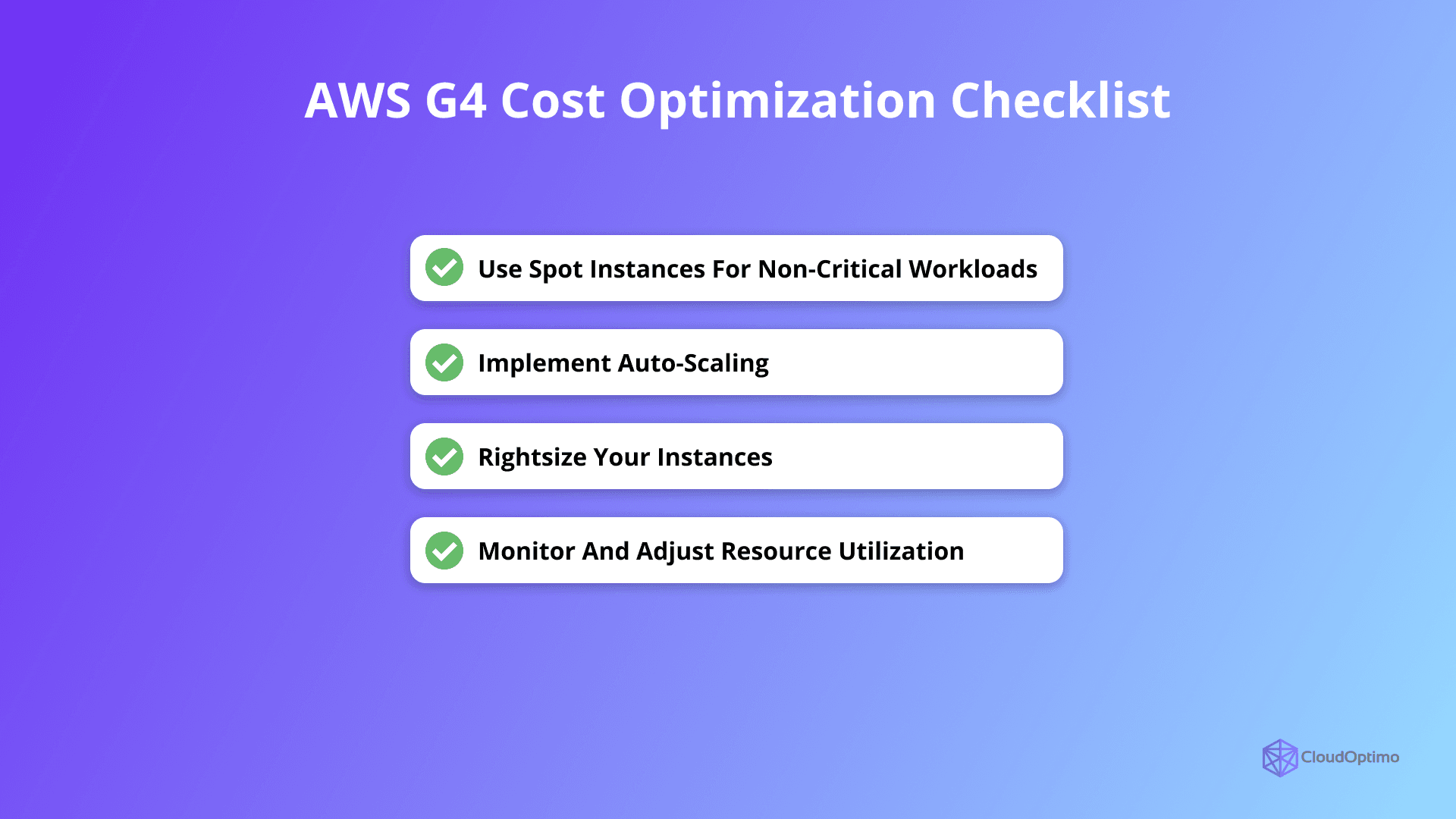

Optimizing Costs for G4 Instances

Cost optimization for G4 instances requires a multi-faceted approach. While AWS provides various tools and pricing models, choosing the right mix that aligns with your workload needs is essential for reducing operational costs. Below are key areas to focus on:

Leverage the Right Pricing Model

AWS G4 instances offer different pricing models that can help businesses save on operational costs. Choosing the right model based on workload needs is critical for efficient cost optimization.

- On-Demand Pricing: Ideal for short-term or unpredictable workloads, but more expensive if used long-term.

- Optimization Tip: Use On-Demand instances for burst workloads, but consider switching to Spot or Reserved Instances for longer, more predictable workloads to reduce costs.

- Spot Instances: Perfect for non-critical tasks or flexible workloads, offering discounts of up to 90% compared to On-Demand pricing.

- Optimization Tip: Use Spot Instances for tasks like machine learning model training or batch jobs. These workloads can tolerate interruptions, which makes Spot Instances highly cost-effective.

- Reserved Instances: Best for steady, predictable workloads with significant savings (up to 72%).

- Optimization Tip: Commit to Reserved Instances for long-term workloads to benefit from major discounts. Choose the "All Upfront" payment option for the highest savings.

- Savings Plans: Offer flexibility across multiple AWS services like EC2, Lambda, and Fargate, and provide a more flexible, cost-effective way to commit to AWS usage for 1 or 3 years.

- Optimization Tip: Choose Savings Plans if you use several AWS services, allowing you to optimize costs across your infrastructure.
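One way to decide between On-Demand and a commitment is a break-even calculation. The sketch below assumes a committed instance is billed for every hour of the month whether or not it runs, while On-Demand is billed only for hours used; under that assumption the commitment wins once utilization exceeds one minus the discount. The function names, the 730-hour month, and the 30% discount (the approximate 1-year figure cited earlier) are illustrative.

```python
HOURS_PER_MONTH = 730  # assumption: average month length in hours


def break_even_utilization(discount: float) -> float:
    """Fraction of the month an instance must run before a commitment wins."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return 1 - discount


def cheaper_option(on_demand_rate: float, discount: float, hours_used: float) -> str:
    """Compare pay-per-use On-Demand with an always-billed discounted rate."""
    on_demand_cost = on_demand_rate * hours_used
    reserved_cost = on_demand_rate * (1 - discount) * HOURS_PER_MONTH
    return "reserved" if reserved_cost < on_demand_cost else "on-demand"


print(round(break_even_utilization(0.30), 2))       # 0.7
print(cheaper_option(0.526, 0.30, hours_used=300))  # on-demand
print(cheaper_option(0.526, 0.30, hours_used=650))  # reserved
```

With a 30% discount, an instance needs to run about 70% of the month (roughly 511 of 730 hours) before the commitment pays off.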

Rightsizing Your Instances

Right-sizing ensures that your G4 instances match the specific requirements of your workload. Oversized instances lead to wasted resources, while undersized instances can degrade performance.

- Optimization Tip:

- Use CloudOptimo’s OptimoSizing, a solution that provides data-driven rightsizing recommendations based on actual resource usage. While this tool doesn't automatically rightsize cloud resources, it provides highly actionable insights, allowing you to optimally size your resources and improve cost efficiency without risking under- or over-provisioning.

- Example:

If a g4dn.4xlarge instance regularly uses only 50% of its CPU capacity, consider downsizing to a smaller instance (like g4dn.2xlarge) to optimize cost. Both sizes have one T4 GPU, so only the vCPU and memory allocation changes.
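The rightsizing check can be sketched as a small rule. This is a hypothetical heuristic, not the logic of any particular tool: `downsize_suggestion` and its 40% default threshold are assumptions, and it looks only at CPU, whereas a real review should also consider GPU and memory utilization.

```python
from typing import Optional

# Single-GPU g4dn sizes, smallest first; each step down halves the vCPU count.
SINGLE_GPU_SIZES = ["g4dn.xlarge", "g4dn.2xlarge", "g4dn.4xlarge", "g4dn.8xlarge"]


def downsize_suggestion(
    instance_type: str, avg_cpu_pct: float, threshold_pct: float = 40.0
) -> Optional[str]:
    """Suggest the next smaller size when average CPU is at or below the threshold.

    Raises ValueError for instance types outside SINGLE_GPU_SIZES.
    """
    index = SINGLE_GPU_SIZES.index(instance_type)
    if index == 0 or avg_cpu_pct > threshold_pct:
        return None
    return SINGLE_GPU_SIZES[index - 1]


print(downsize_suggestion("g4dn.4xlarge", avg_cpu_pct=35))  # g4dn.2xlarge
print(downsize_suggestion("g4dn.4xlarge", avg_cpu_pct=70))  # None
print(downsize_suggestion("g4dn.xlarge", avg_cpu_pct=10))   # None
```

Halving the vCPU count roughly doubles CPU utilization for the same load, so a threshold somewhat below 50% leaves headroom after the move.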

Auto-Scaling for Efficiency

Auto-scaling dynamically adjusts the number of instances based on demand, preventing over-provisioning during low usage and ensuring adequate resources during high usage.

- Optimization Tip:

- Set Up Auto Scaling Groups: Configure auto-scaling policies based on CPU, GPU, or memory utilization to automatically scale up or down as needed.

- Fine-tune Scaling Policies: Avoid over-provisioning by setting granular scaling triggers. For example, scale up based on a sudden traffic spike and scale down after a defined idle period. This allows for cost-efficient scaling, while CloudOptimo’s OptimoGroup helps manage the transitions to Spot Instances seamlessly to maximize savings.
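The effect of a utilization-based scaling policy can be approximated with a proportional rule. The sketch below is a simplified model for reasoning about capacity, not AWS's actual scaling algorithm; `desired_capacity` and its parameters are hypothetical. Note that GPU utilization is not a built-in EC2 metric, so scaling on it generally requires publishing it to CloudWatch as a custom metric.

```python
import math


def desired_capacity(
    current: int,
    avg_utilization_pct: float,
    target_pct: float,
    min_size: int,
    max_size: int,
) -> int:
    """Scale the fleet so average utilization moves toward the target."""
    if target_pct <= 0:
        raise ValueError("target_pct must be positive")
    raw = math.ceil(current * avg_utilization_pct / target_pct)
    # Clamp to the group's configured bounds.
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, 90, 60, min_size=1, max_size=10))  # 6
print(desired_capacity(4, 15, 60, min_size=1, max_size=10))  # 1
print(desired_capacity(8, 95, 60, min_size=1, max_size=10))  # 10
```

The minimum and maximum bounds are what prevent both runaway scale-out during a spike and scaling to zero during idle periods.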

Minimize Data Transfer and Storage Costs

While not directly related to instance costs, data transfer and storage expenses can add up quickly. Optimizing these can result in considerable savings.

- Optimization Tip:

- Efficient Storage Choices: Use General Purpose SSD (gp3) for cost-effective storage unless high IOPS are needed, in which case, use Provisioned IOPS SSD (io2).

- Optimize Data Transfer:

- Leverage AWS Direct Connect for reduced transfer costs between on-premises systems and AWS.

- Avoid Cross-Region Transfers: Keep your instances and storage in the same region to minimize expensive cross-region data transfer.

Common Challenges and Solutions

Despite the numerous advantages of AWS EC2 G4 instances, users may encounter specific challenges when deploying and managing GPU workloads. Below, we explore common issues and provide detailed solutions to help you get the most out of your G4 instances.

GPU Driver Compatibility

Challenge:

One of the frequent issues users face is GPU driver compatibility, particularly when using different operating systems, machine learning frameworks, or CUDA versions. Mismatched or outdated drivers can lead to poor performance or even failure to recognize the GPU.

Solutions:

- Use Pre-Configured AMIs: AWS provides Deep Learning AMIs (Amazon Machine Images) that come with pre-installed, optimized GPU drivers and ML frameworks (TensorFlow, PyTorch, MXNet, etc.). Using these AMIs reduces the risk of compatibility issues.

- Manual Driver Updates: If you are managing your own AMIs, ensure you’re installing the correct GPU drivers.

  - Check for the latest NVIDIA drivers from the NVIDIA website and follow AWS's documentation for installation steps.

  - Verify your driver installation using the nvidia-smi command. It will display the GPU status, driver version, and CUDA version.

- Ensure CUDA Compatibility: Make sure the CUDA version you’re using is compatible with your driver and machine learning frameworks. NVIDIA provides a CUDA compatibility matrix that can guide your installation process.

- Automate Driver Management: Automate driver installation and updates with configuration management tools like AWS Systems Manager or Ansible to maintain consistency across instances.

Best Practice:

- Regularly check and update your drivers to the latest stable versions to benefit from performance improvements and security patches.
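Driver verification with nvidia-smi can be scripted so it runs as part of instance bootstrapping or a health check. The sketch below is one possible approach: it uses nvidia-smi's CSV query mode and parses the result; `parse_gpu_csv` and `query_gpus` are hypothetical helper names, and the sample row is illustrative rather than captured from a real instance.

```python
import shutil
import subprocess
from typing import Dict, List

QUERY = [
    "nvidia-smi",
    "--query-gpu=name,driver_version,memory.total",
    "--format=csv,noheader",
]


def parse_gpu_csv(output: str) -> List[Dict[str, str]]:
    """Parse `nvidia-smi --format=csv,noheader` rows into dictionaries."""
    gpus = []
    for line in output.strip().splitlines():
        name, driver, memory = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "driver_version": driver, "memory_total": memory})
    return gpus


def query_gpus() -> List[Dict[str, str]]:
    """Run nvidia-smi if it is installed; return an empty list otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    # check=True raises CalledProcessError if the driver cannot talk to the GPU.
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


# Sample row in the shape this query produces (values are illustrative).
sample = "Tesla T4, 535.104.05, 15360 MiB\n"
print(parse_gpu_csv(sample))
```

An empty result from `query_gpus()` means the tool is not installed, while a raised error usually points to a driver problem worth investigating.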

Leverage Multi-GPU Configurations

For intensive tasks, use instances with multiple GPUs to distribute the computation load efficiently. Ensure your ML framework supports multi-GPU training, for example through TensorFlow’s tf.distribute.Strategy or PyTorch’s DistributedDataParallel (which PyTorch recommends over the older DataParallel).

- Best Practice:

- Periodically review instance performance metrics and adjust configurations based on evolving workload needs. This proactive approach helps prevent performance degradation.

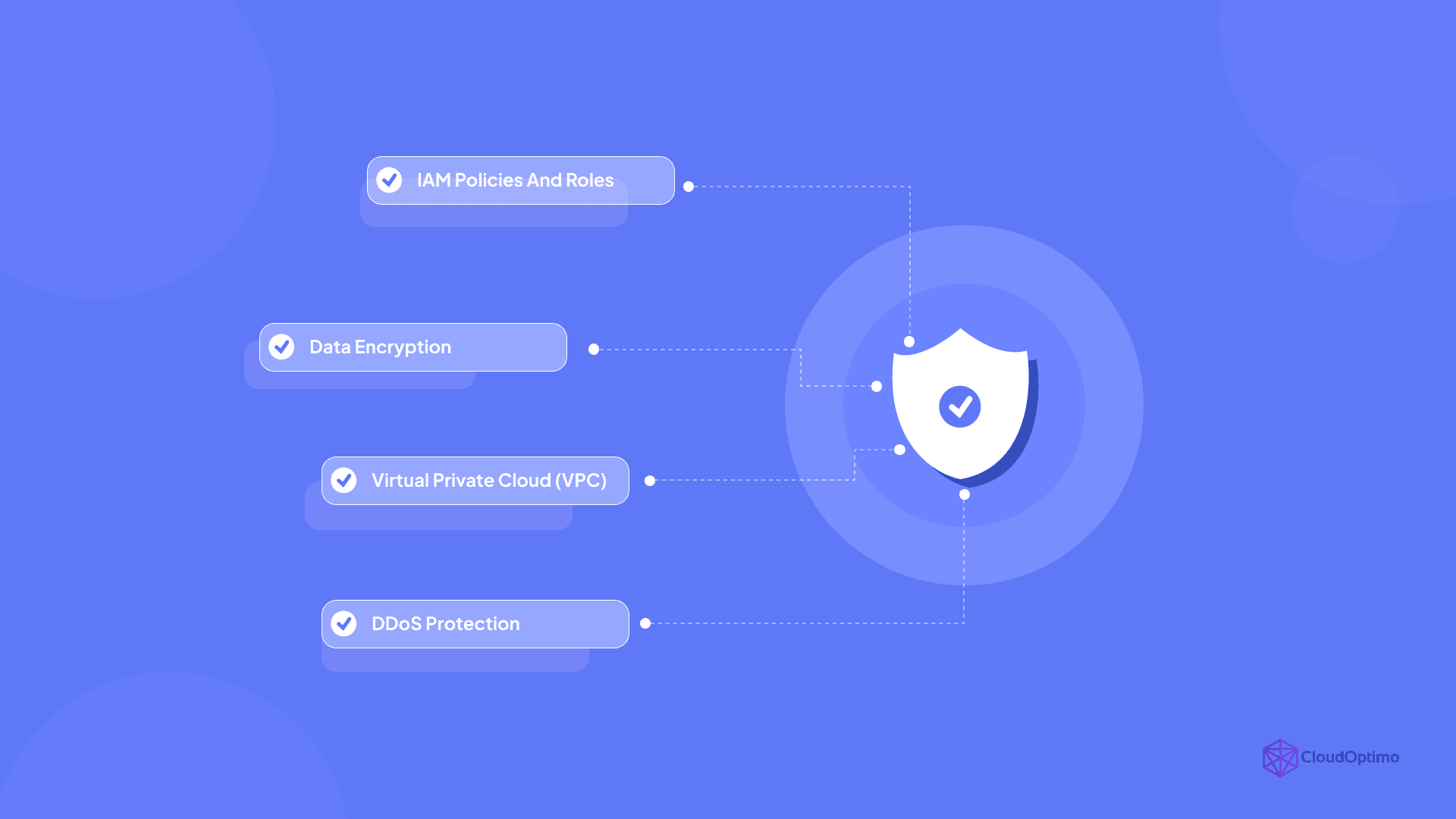

Security and Compliance Considerations

Ensuring security and meeting compliance standards is crucial when deploying workloads on the cloud, especially for industries like healthcare, finance, and e-commerce that handle sensitive data. AWS EC2 G4 instances offer several robust security features and comply with industry standards to protect your workloads and maintain regulatory requirements.

Key Security Challenges and Solutions

IAM Policies and Roles

- Challenge: Ensuring only authorized users can access and modify G4 instances.

- Solution:

- Implement least-privilege access using IAM policies to control permissions.

- Use IAM roles to grant temporary permissions to applications running on your instances, avoiding the need to store credentials directly on the machines.

- Role-Based Access Control (RBAC) reduces the risk of unauthorized access by assigning specific roles to users.
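A least-privilege policy for a single G4 instance might look like the sketch below, built as a Python dictionary and printed as JSON. The function name, statement IDs, region, account ID, and instance ID are all placeholders, and the chosen actions are only an example of a narrow operator role.

```python
import json


def g4_operator_policy(region: str, account_id: str, instance_id: str) -> dict:
    """Build an IAM policy allowing start/stop on one instance plus read-only describe."""
    instance_arn = f"arn:aws:ec2:{region}:{account_id}:instance/{instance_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "StartStopOneInstance",
                "Effect": "Allow",
                "Action": ["ec2:StartInstances", "ec2:StopInstances"],
                "Resource": instance_arn,
            },
            {
                "Sid": "DescribeInstances",
                "Effect": "Allow",
                "Action": "ec2:DescribeInstances",
                # Describe actions do not support resource-level permissions.
                "Resource": "*",
            },
        ],
    }


# Placeholder identifiers for illustration only.
policy = g4_operator_policy("us-east-1", "123456789012", "i-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```

Scoping the start and stop actions to one instance ARN means a compromised credential cannot affect other instances in the account.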

Data Encryption

- Challenge: Protecting sensitive data both at rest and in transit.

- Solution:

- Encryption at Rest: Use AWS Key Management Service (KMS) to encrypt data stored on your G4 instances.

- Encryption in Transit: Protect data in transit using SSL/TLS protocols to ensure secure communication.

Virtual Private Cloud (VPC)

- Challenge: Isolating instances to prevent unauthorized access.

- Solution:

- Deploy G4 instances within a VPC (Virtual Private Cloud) to isolate resources.

- Use strict security group rules and Network ACLs to manage inbound and outbound traffic.

- Place instances in private subnets for workloads that don’t require direct internet access.

Compliance Standards and Measures

GDPR, HIPAA, and PCI-DSS

- Use Case: When handling personal data, healthcare information, or payment details, adherence to regulations like GDPR, HIPAA, and PCI-DSS is mandatory.

- Solution:

- G4 instances support encryption and access controls to protect sensitive data.

- Follow AWS best practices for data handling and security to ensure compliance with these standards.

- For ongoing compliance management and security best practices, CloudOptimo’s OptimoSecurity provides continuous monitoring and automated checks to ensure your infrastructure remains aligned with regulatory requirements and industry standards.

AWS Shield for DDoS Protection

- Use Case: Protecting public-facing services and AI models from Distributed Denial of Service (DDoS) attacks.

- Solution:

- Use AWS Shield to automatically detect and mitigate DDoS attacks, ensuring service availability and minimizing disruptions.

Best Practices for Security and Compliance

- Conduct periodic audits to identify vulnerabilities and ensure adherence to security policies.

- Set up alerts for suspicious activities to respond promptly to potential threats.

By integrating these security measures and following compliance guidelines, AWS EC2 G4 instances provide a secure and reliable environment for your workloads, safeguarding sensitive data and meeting industry regulations.

Use Cases

G4 instances are versatile, catering to multiple GPU-intensive applications across industries. Here’s a detailed exploration of how they revolutionize workflows:

- Cloud Gaming

- Problem: Gamers demand high-quality, low-latency experiences on devices with limited processing power.

- Solution: G4 instances process graphics-intensive games in the cloud, streaming them to devices in real time.

- Example: Services like NVIDIA GeForce NOW leverage similar setups to deliver AAA gaming experiences to users without requiring high-end gaming PCs.

- Benefits:

- Seamless gaming experiences with high-quality graphics.

- Scalable infrastructure to support fluctuating user demand.

- AI Inference

- Problem: Real-time AI applications require low-latency inference with high accuracy.

- Solution: G4 instances accelerate the deployment of pre-trained machine learning models, optimizing tasks like object detection, voice recognition, and personalized recommendations.

- Use Case Example: Retailers use G4 instances to run recommendation systems during online shopping, enhancing customer experiences.

- Benefits:

- Faster AI model inference.

- Cost-effective scaling for AI-driven applications.

- 3D Rendering and Visualization

- Problem: Rendering photorealistic images and simulations demands significant GPU power.

- Solution: G4 instances provide the necessary GPU resources for industries such as architecture, film production, and automotive design.

- Use Case Example: Architectural firms use G4 instances for real-time rendering of virtual walkthroughs for clients.

- Benefits:

- Reduced rendering times.

- Ability to handle complex scenes with ease.

- Video Encoding and Transcoding

- Problem: With increasing video content consumption, media companies need efficient video processing pipelines.

- Solution: G4 instances accelerate encoding, transcoding, and streaming of high-resolution videos.

- Use Case Example: OTT platforms use G4 instances to process large volumes of video content in multiple formats.

- Benefits:

- Faster video processing and delivery.

- Support for high-definition formats like 4K and 8K.

- Virtual Desktops and Remote Workstations

- Problem: Creative professionals working remotely need access to powerful GPUs.

- Solution: G4 instances enable cloud-based workstations, providing the GPU performance required for tasks like video editing and CAD modeling.

- Use Case Example: Media companies use virtual workstations powered by G4 instances for distributed teams working on post-production.

- Benefits:

- Secure, high-performance workstations accessible from anywhere.

- Reduced dependency on expensive physical hardware.

Performance Benchmarks and Metrics

Understanding the performance benchmarks of AWS G4 instances is critical for businesses aiming to evaluate their real-world capabilities. These benchmarks highlight the advantages of NVIDIA T4 Tensor Core GPUs in handling a wide range of workloads.

Key Performance Highlights:

- AI Inference: NVIDIA T4 GPUs significantly reduce inference latency for machine learning models compared to previous-generation GPUs.

  - Ideal for real-time applications like recommendation engines and facial recognition systems.

  - Benchmarks indicate up to 4x faster inference speeds in optimized deep learning frameworks.

- Graphics Rendering: G4 instances deliver ultra-HD rendering capabilities with minimal latency, enabling fluid performance in cloud gaming, 3D visualization, and simulation.

  - Benchmarks demonstrate that G4 instances render 4K graphics with an average latency of less than 30 milliseconds.

- Video Processing: G4 instances accelerate video encoding and transcoding tasks, offering up to 40% better performance compared to prior generation instances.

- Energy Efficiency: The NVIDIA T4 GPU architecture is designed for energy-efficient computing, reducing operational costs without sacrificing performance.

Why These Metrics Matter

These performance benchmarks demonstrate that G4 instances are not only powerful but also optimized for efficiency, making them a reliable choice for GPU-intensive applications.

Licensing and Software Compatibility

AWS EC2 G4 instances are built for seamless integration into diverse workflows, ensuring users spend less time setting up and more time leveraging GPU power.

Key Features and Benefits

- Pre-installed NVIDIA Drivers and Libraries:

G4 instances are pre-configured with essential software, including NVIDIA drivers, CUDA libraries, and cuDNN, which are critical for GPU-based workloads.- This eliminates manual installation steps, reducing setup time for AI models, video processing tasks, and rendering pipelines.

- Flexible Licensing with BYOL (Bring Your Own License):

Businesses can use their existing software licenses on G4 instances, ensuring compliance with licensing agreements and saving on additional costs.- Ideal for companies that rely on licensed software for applications like CAD, 3D modeling, or machine learning.

- Compatibility with Leading Frameworks and Tools:

G4 instances support a wide array of software and frameworks, ensuring developers can work with familiar tools without compatibility concerns:

- AI and ML Frameworks: TensorFlow, PyTorch, Keras, Apache MXNet, and more. These are essential for training and deploying neural networks.

- Data Science and Analytics: RAPIDS, NVIDIA's open-source suite of libraries for data science, accelerates tasks like ETL, model training, and inference.

- Graphics and Rendering Tools: Unreal Engine, Unity, Blender, Autodesk Maya, and 3ds Max run on G4 instances for high-performance rendering and 3D visualization tasks.

- Video Processing Software: FFmpeg, Adobe Premiere Pro, and DaVinci Resolve can leverage GPU acceleration for encoding, transcoding, and editing.

- Deep Learning AMIs (Amazon Machine Images):

AWS provides pre-configured AMIs tailored for G4 instances, equipped with popular deep learning frameworks, drivers, and tools.

- This feature simplifies deployment for AI practitioners and researchers.
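
After launching an instance from one of these images, a quick sanity check is to confirm that the NVIDIA driver can actually see the GPU. The sketch below shells out to `nvidia-smi`, which ships with the NVIDIA driver, and parses its CSV output. The helper names `parse_gpu_csv` and `query_gpus` are hypothetical, introduced here only for illustration.

```python
import shutil
import subprocess
from typing import Dict, List, Optional


def parse_gpu_csv(output: str) -> List[Dict[str, str]]:
    """Parse `nvidia-smi --format=csv,noheader` output into one dict per GPU."""
    gpus = []
    for line in output.strip().splitlines():
        if not line.strip():
            continue
        name, driver, memory = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "driver_version": driver, "memory_total": memory})
    return gpus


def query_gpus() -> Optional[List[Dict[str, str]]]:
    """Return GPU details, or None when nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=name,driver_version,memory.total",
            "--format=csv,noheader",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if gpus is None:
        print("nvidia-smi not found: the NVIDIA driver does not appear to be installed")
    else:
        for gpu in gpus:
            print(gpu)
```

A `None` result usually means the instance was launched from an image without the NVIDIA driver, in which case the driver has to be installed manually or the instance relaunched from a GPU-ready AMI.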

Why Licensing and Compatibility Matter

Licensing flexibility and broad software compatibility make G4 instances adaptable across industries, ensuring they meet diverse operational needs without unnecessary overhead.

Seamless Integration with the AWS Ecosystem

One of the key strengths of AWS G4 instances is their ability to integrate seamlessly with other AWS services. This integration enables organizations to build, deploy, and manage GPU-intensive workloads efficiently, further enhancing the power of G4 instances for machine learning, AI, and other data-heavy tasks.

Key Integrations for AWS G4 Instances

- Amazon S3:

- Use Case: Store large datasets, model weights, and training data for AI and ML projects. S3’s high durability and scalability ensure you never run out of storage.

- Integration: Easily connect G4 instances to S3 buckets for quick data access, enabling efficient data handling during model training or inference.

- Amazon SageMaker:

- Use Case: Build, train, and deploy machine learning models using the power of G4 instances for intensive GPU computations.

- Integration: SageMaker supports G4-based instance types (the ml.g4dn family) for training jobs and inference endpoints, shortening training times and making it easier for data scientists to manage workflows.

- Amazon ECS:

- Use Case: Run containerized applications that require GPU resources with minimal setup.

- Integration: ECS integrates with AWS G4 instances to support containerized GPU workloads, making it easy to scale GPU-intensive applications without complex configurations.
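
As a sketch of what the ECS integration above looks like in practice, the snippet below builds a task definition that reserves a GPU for a container through the `resourceRequirements` field. It assumes the EC2 launch type and container instances running a GPU-enabled ECS AMI. The function name, family name, image URI, and memory size are placeholders chosen for illustration.

```python
import json
from typing import Any, Dict


def gpu_task_definition(
    family: str, image: str, gpus: int = 1, memory_mib: int = 8192
) -> Dict[str, Any]:
    """Build an ECS task definition that reserves `gpus` GPUs for one container."""
    return {
        "family": family,
        # GPU tasks run on EC2 container instances (for example, G4 instances).
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [
            {
                "name": f"{family}-container",
                "image": image,
                "memory": memory_mib,
                "essential": True,
                # ECS expects the GPU count as a string value.
                "resourceRequirements": [{"type": "GPU", "value": str(gpus)}],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder names; substitute your own family and image URI.
    task_def = gpu_task_definition("gpu-inference", "example.com/inference:latest")
    print(json.dumps(task_def, indent=2))
```

The resulting dictionary can be saved as JSON and registered with the AWS CLI, or passed as keyword arguments to the `register_task_definition` call of the boto3 ECS client. ECS then places the task only on container instances with enough free GPUs.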

This seamless integration with AWS services provides flexibility and scalability, allowing users to take full advantage of the AWS cloud ecosystem for their GPU-accelerated workloads.

Sustainability and Energy Efficiency of G4 Instances

As sustainability becomes an increasingly important factor in cloud computing, AWS G4 instances contribute to energy efficiency and sustainability goals. AWS is committed to reducing its environmental impact, and G4 instances are designed to support this initiative.

Energy Efficiency of G4 Instances

- NVIDIA T4 GPUs:

- Efficiency: The T4 GPUs used in G4 instances are known for their energy efficiency, consuming significantly less power compared to previous-generation GPUs.

- Benefit: This translates to lower energy consumption for machine learning, AI, and rendering workloads, contributing to reduced carbon emissions.

- AWS’s Renewable Energy Initiatives:

- Efficiency: AWS has committed to powering its data centers with 100% renewable energy. G4 instances, being part of the AWS ecosystem, are aligned with these green initiatives.

- Benefit: Users of G4 instances benefit from AWS’s renewable energy sources, making their GPU workloads more sustainable.

By choosing AWS G4 instances, users can not only leverage high-performance GPU capabilities but also contribute to a greener future through sustainable cloud computing.

Future Outlook for GPU-Powered Instances

The future of GPU-powered instances is poised for transformative advancements across several key areas. These developments promise to expand the capabilities of GPU-powered computing, making it an even more powerful tool for businesses and organizations.

AI at Scale

- Trend: As AI applications grow in complexity and scale, GPUs will play an even more critical role in enabling faster training and inference.

- Future: GPU performance improvements will facilitate the execution of large-scale AI workloads, driving faster insights, real-time decision-making, and smarter automation.

Edge Computing

- Trend: The push toward edge computing will bring computing closer to where data is generated, reducing latency and enabling real-time processing.

- Future: GPU-powered instances will be deployed in edge environments, providing the computational power needed for AI and machine learning applications right at the source, ensuring ultra-low latency and enhanced user experiences.

Advancements in GPU Technology

- Trend: Innovations like NVIDIA’s Hopper architecture are set to revolutionize GPU technology.

- Future: With each new architecture, we can expect significant performance leaps, optimizing workloads like AI, graphics rendering, and data processing, pushing the boundaries of GPU capabilities.

In summary, AWS G4 instances provide a powerful, efficient, and scalable platform that is well-suited for modern applications. Whether for AI, graphics rendering, or high-performance computing, G4 instances deliver the performance needed to drive innovation.