Launched to simplify AI model management, AWS Bedrock provides easy access to a variety of powerful, pre-trained foundation models from top-tier providers like Anthropic, Meta, and Stability AI, all within AWS’s secure ecosystem. But how does it work? What are the pricing models, and how can businesses ensure cost efficiency while maximizing the potential of AWS Bedrock?

In this blog, we’ll dive deep into AWS Bedrock, explaining its core features, pricing structure, how to fine-tune models, real-world use cases, and how to integrate it with other AWS services.

What is AWS Bedrock?

AWS Bedrock is Amazon Web Services' managed platform designed for simplifying the deployment of AI models in the cloud. Whether you’re a developer, data scientist, or enterprise IT leader, Bedrock provides a seamless environment to run machine learning (ML) models at scale. What makes AWS Bedrock stand out is its access to multiple state-of-the-art AI models, provided by various vendors, making it a one-stop platform for all your AI and machine learning needs.

Unlike traditional machine learning tools where you have to manage everything from data pipelines to model deployment and scaling, AWS Bedrock abstracts much of this complexity. It allows developers to focus on building and integrating AI-powered applications without worrying about underlying infrastructure.

Some of the key features of AWS Bedrock include:

- Pre-trained models: Access to a range of powerful AI foundation models, including language models for natural language processing (NLP), image generation models, and more.

- Seamless scaling: AWS Bedrock leverages the massive scalability of the AWS cloud to deploy AI models efficiently across a wide variety of applications.

- Flexible integration: Integrates smoothly with other AWS services like Amazon SageMaker, EC2, and S3, enabling end-to-end AI solutions.

- Cost optimization: Flexible pricing models that allow businesses to choose the best cost structure for their use case (e.g., per token, per image, per-hour model pricing).

Whether you're building chatbots, predictive analytics engines, or AI-driven content generation tools, AWS Bedrock makes deploying and scaling machine learning models as straightforward as possible.

What Makes AWS Bedrock Different?

AWS Bedrock competes in a crowded market of AI and machine learning platforms, with major players like Google Cloud AI, Microsoft Azure AI, and IBM Watson offering similar capabilities. So, why choose AWS Bedrock?

- Independence from Consumer AI Products

Unlike Microsoft, which is closely tied to OpenAI's models (like ChatGPT), or Google, which is developing its own AI products such as Bard, AWS Bedrock is more focused on empowering developers rather than competing directly in the consumer-facing AI space. For companies looking to build AI solutions without contributing to a competitor’s products, AWS offers a neutral ground.

- Access to Multiple Top AI Models

AWS Bedrock gives developers access to a variety of pre-trained foundation models from leading AI vendors (such as Anthropic, Stability AI, and AI21 Labs), unlike Google’s Vertex AI or Azure AI, which are centered around their own proprietary models. This allows users to pick the best model for their specific needs.

- Cost Efficiency with AWS’s Custom Chips

Bedrock leverages Amazon’s Trainium and Inferentia chips, designed to optimize AI training and inference. These specialized chips help reduce computing costs, which can make AWS a more cost-effective option compared to competitors like Microsoft or Google, which also have AI-specific hardware but may not offer the same level of optimization for certain workloads.

- Seamless Integration with AWS Ecosystem

For businesses already using AWS, Bedrock seamlessly integrates with a wide range of AWS services like SageMaker, EC2, and S3, creating an easy-to-use, end-to-end platform for AI development. Competitors also offer integration within their respective ecosystems, but AWS’s vast array of services may be particularly appealing to organizations already embedded in AWS.

- Scalability and Reliability

Built on AWS’s global cloud infrastructure, Bedrock offers scalability that’s hard to match. Whether scaling a small AI prototype or rolling out enterprise-wide applications, AWS ensures that AI models run efficiently across the globe.

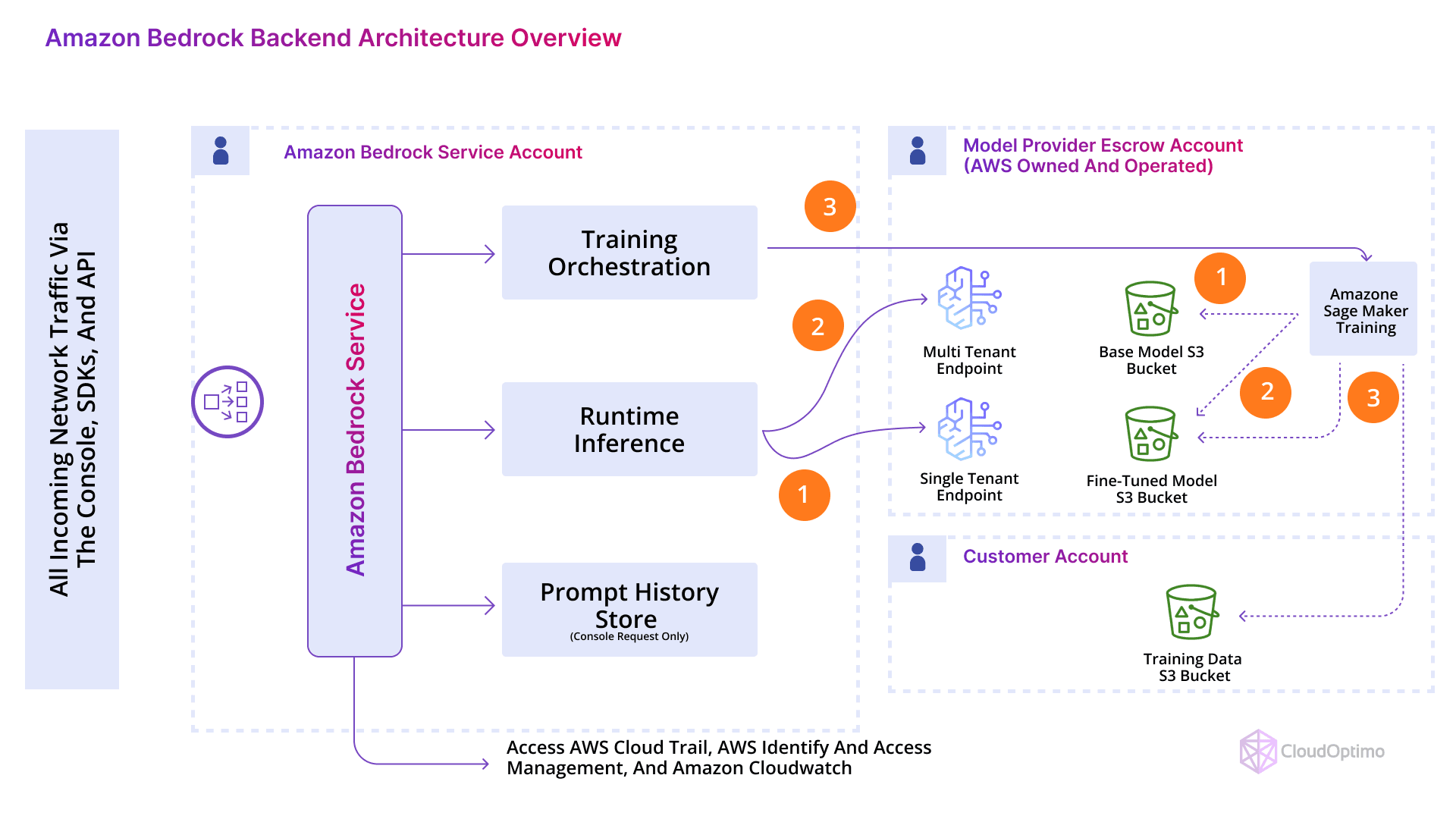

How Does AWS Bedrock Work?

Source: AWS Community

AWS Bedrock leverages the power of pre-trained models and makes them easily accessible to developers through a managed interface. This service is designed to minimize the complexity associated with using AI models in production environments, helping businesses save time and reduce operational overhead. Let’s break down how AWS Bedrock works, from integration to model deployment:

- Model Access and Integration

AWS Bedrock allows users to access top-tier models from leading providers, including Meta, Anthropic, and Stability AI. These models are pre-trained, meaning businesses don’t have to spend significant resources on data preparation or model training. AWS manages the complexities of hosting and scaling these models, allowing developers to focus on integration.

- Model Deployment

AWS Bedrock simplifies model deployment through its native integration with Amazon SageMaker and AWS Lambda. For instance, you can use SageMaker to fine-tune a pre-trained model, and then deploy it with minimal effort using AWS Bedrock's easy-to-use API. The service takes care of scaling, load balancing, and monitoring.

Using the AWS SDK for Model Deployment

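Here’s a basic code snippet demonstrating how to use the AWS SDK to invoke a model:

```python
import json

import boto3

# invoke_model is exposed by the "bedrock-runtime" client,
# not the "bedrock" control-plane client
client = boto3.client("bedrock-runtime")

# Specify model details ("model-xyz" is a placeholder; use a model ID
# that is enabled in your account)
model_id = "model-xyz"
# The request body schema differs per model; this shape is illustrative
input_data = {"text": "This is a test input for the AI model."}

# Make a request to AWS Bedrock (the body must be a JSON string or bytes)
response = client.invoke_model(
    modelId=model_id,
    body=json.dumps(input_data),
)

# Process the response (the "body" field is a streaming object)
result = response["body"].read()
print(f"Model Output: {result.decode('utf-8')}")
```

This Python code demonstrates a basic use case of invoking a model in AWS Bedrock. The SDK simplifies interacting with AWS's infrastructure, and Bedrock scales the model automatically depending on the load. Note that the request body must match the schema expected by the model you choose.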

- Data Storage and Security

AWS Bedrock integrates seamlessly with Amazon S3 for storing input and output data. Security is a major concern when dealing with AI models, and AWS provides several layers of security including encryption, access controls, and compliance certifications. Bedrock ensures that your data is stored securely and only accessible by authorized personnel.

AWS Bedrock Models and Providers

One of the most powerful features of AWS Bedrock is its access to a variety of pre-trained AI models from leading providers. These models span different use cases, including natural language processing (NLP), image generation, and text generation. By leveraging AWS Bedrock, businesses can easily integrate these advanced models into their workflows without needing to train them from scratch.

Here’s a breakdown of the main foundation model providers available on AWS Bedrock:

1. Anthropic Claude Models

Anthropic’s Claude is an advanced language model designed to generate human-like text. It's particularly well-suited for NLP tasks like chatbot development, content creation, and customer service automation. Claude is available in multiple variants, each optimized for specific workloads.

- Claude Instant: A fast, cost-effective version of Claude, optimized for low-latency tasks such as chatbots and content generation.

- Claude 1.0 & 2.0: The more powerful variants are ideal for long-form content creation, sentiment analysis, and complex conversational agents.

2. Meta’s Llama 2

Meta’s Llama 2 is a family of open-weight language models that come in several configurations, ranging from small to large. They are designed to handle a wide range of NLP tasks, such as text summarization, translation, and document classification.

- Llama 2 Chat: Fine-tuned specifically for conversational applications, making it perfect for interactive assistants and intelligent chatbots.

3. Stability AI’s Stable Diffusion

Stability AI brings Stable Diffusion, a highly regarded model for generating images from textual descriptions. This model is a game-changer for industries that rely on creative content, such as gaming, marketing, and advertising. It can generate high-quality images based on specific prompts, saving businesses valuable time and resources.

- Image Generation: Stable Diffusion can produce realistic images, artwork, and visual content from textual descriptions, with applications in advertising, design, and media production.

4. AI21 Labs’ Jurassic-2

AI21 Labs provides the Jurassic-2 model family, which excels in generating natural, human-like text for a variety of NLP applications. The Jurassic-2 models are known for their large scale and high-quality language generation, making them ideal for tasks like document drafting, report generation, and creative writing.

- Jurassic-2 Ultra: The most advanced version, providing superior language generation capabilities for complex and nuanced content creation.

By offering access to multiple providers like Anthropic, Meta, Stability AI, and AI21 Labs, AWS Bedrock ensures that businesses can select the right model for their specific AI use case.
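Because each provider defines its own request schema, switching models usually means changing the request body as well as the model ID. The sketch below shows one way to keep that difference in a single helper. The function name `build_request_body` is hypothetical, and the field names reflect our understanding of each provider's Bedrock schema for the models discussed above; treat them as assumptions and confirm them against the current model documentation before use.

```python
import json


def build_request_body(model_id: str, prompt: str, max_tokens: int = 256) -> str:
    """Return a JSON request body shaped for the provider behind model_id.

    Field names are assumptions based on each provider's Bedrock schema
    for Claude, Llama 2, Jurassic-2, and Stable Diffusion.
    """
    if model_id.startswith("anthropic."):
        # Claude text completions expect the Human/Assistant prompt format
        body = {
            "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
            "max_tokens_to_sample": max_tokens,
        }
    elif model_id.startswith("meta."):
        body = {"prompt": prompt, "max_gen_len": max_tokens}
    elif model_id.startswith("ai21."):
        body = {"prompt": prompt, "maxTokens": max_tokens}
    elif model_id.startswith("stability."):
        # Image models take a list of text prompts rather than a token limit
        body = {"text_prompts": [{"text": prompt}]}
    else:
        raise ValueError(f"No request template for model: {model_id}")
    return json.dumps(body)


print(build_request_body("anthropic.claude-v2", "Summarize this ticket."))
```

The returned string can be passed as the `body` argument of `invoke_model`, so application code only needs to change the model ID when comparing providers.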

AWS Bedrock vs. Amazon SageMaker

AWS offers two powerful services for machine learning: AWS Bedrock and Amazon SageMaker. While both support building and deploying AI models, they are tailored to different needs. Here’s a quick comparison to help you understand the key differences:

| Feature | AWS Bedrock | Amazon SageMaker |
| --- | --- | --- |
| Target Users | Developers needing pre-trained models with minimal setup | Data scientists and ML engineers needing full control over ML workflows |
| Primary Use Case | Leveraging foundation models (e.g., Claude, Llama 2) for quick integration | Custom model development, training, and deployment |
| Model Control | Limited to fine-tuning pre-trained models | Full flexibility in creating and training custom models |
| Ease of Use | Highly simplified and fully managed | More complex, with granular control over every stage of the ML lifecycle |
| Cost Structure | Pay-as-you-go for model use and fine-tuning | Pay-as-you-go for compute, training, and model deployment |
| Customization | Fine-tuning only on pre-trained models | Full customization from data prep to model training and deployment |
| Best for | Quick deployment of AI applications (chatbots, text generation, image generation) | Complex ML workflows, large-scale model training, real-time predictions |

When to Choose Which?

- Choose AWS Bedrock if you need quick access to pre-trained models with minimal setup for common AI tasks like text generation or customer service automation.

- Choose Amazon SageMaker if you need to build custom machine learning models from scratch, require advanced control over training, or need to deploy models at scale.

AWS Bedrock Pricing Explained

When adopting a new service like AWS Bedrock, it's important to understand its pricing structure in order to optimize your costs. AWS offers a flexible pricing model for its AI services, with various options based on token usage, image generation, and hourly processing. Here's a breakdown of how AWS Bedrock pricing works for different models.

1. Pricing by Tokens and Images

AWS charges for AI models based on tokens for text-based models and images for visual models. Let’s go over the details for both:

Text Models:

AWS charges for both input tokens (the tokens you send to the model) and output tokens (the tokens the model generates in response). Pricing is typically based on the number of tokens used, and rates are given per 1,000 tokens.

For example:

- Claude Instant:
  - $0.00163 per 1,000 input tokens
  - $0.00551 per 1,000 output tokens

- Jurassic-2 Ultra:
  - $0.0188 per 1,000 tokens (input and output combined)

Image Models:

For image generation models like Stable Diffusion, the pricing is based on the number of images generated. The cost varies based on the model and the image resolution.

For example:

- Stable Diffusion:
  - $0.005 per image
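To see how these rates turn into a bill, here is a minimal cost estimate in Python. The rates are the example figures quoted above; the workload (10,000 requests of 500 input and 200 output tokens each, plus 1,000 images) is a hypothetical assumption chosen for illustration, and the function names are ours.

```python
# Example rates quoted in this article, in USD
CLAUDE_INSTANT_INPUT_PER_1K = 0.00163
CLAUDE_INSTANT_OUTPUT_PER_1K = 0.00551
STABLE_DIFFUSION_PER_IMAGE = 0.005


def text_cost(input_tokens: int, output_tokens: int,
              input_rate_per_1k: float, output_rate_per_1k: float) -> float:
    """Cost of a text workload billed per 1,000 input and output tokens."""
    return (input_tokens / 1000) * input_rate_per_1k \
        + (output_tokens / 1000) * output_rate_per_1k


def image_cost(images: int, rate_per_image: float) -> float:
    """Cost of an image workload billed per generated image."""
    return images * rate_per_image


# Hypothetical workload: 10,000 requests, 500 input and 200 output tokens each
requests = 10_000
chat_total = text_cost(requests * 500, requests * 200,
                       CLAUDE_INSTANT_INPUT_PER_1K, CLAUDE_INSTANT_OUTPUT_PER_1K)
image_total = image_cost(1_000, STABLE_DIFFUSION_PER_IMAGE)

print(f"Claude Instant chat workload: ${chat_total:.2f}")   # $19.17
print(f"Stable Diffusion, 1,000 images: ${image_total:.2f}")  # $5.00
```

Output tokens cost more than three times as much as input tokens at these rates, so capping response length tends to matter more than trimming prompts.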

2. Hourly Model Usage

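For real-time applications or high-demand use cases, AWS Bedrock also offers hourly pricing, which AWS calls Provisioned Throughput. You reserve dedicated model capacity and pay for each hour it is reserved, which suits models that run continuously or require consistently high performance.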

Examples of hourly usage pricing:

- Claude 1.0 and 2.0: $39.60 per hour (non-committed)

- Llama 2: $21.18 per hour (non-committed)

If you opt for a committed pricing plan (e.g., 1-month or 6-month commitment), the hourly rate becomes cheaper.
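A quick calculation shows what hourly pricing means over a month. This sketch uses the Llama 2 non-committed rate quoted above and the 1-month committed rate listed in the pricing table later in this article; the 730-hour month is an averaging assumption, and the names are illustrative.

```python
HOURS_PER_MONTH = 730  # assumption: average hours in a month (8,760 / 12)

# Llama 2 hourly rates from this article, in USD
LLAMA2_NON_COMMITTED = 21.18
LLAMA2_ONE_MONTH_COMMIT = 13.08


def monthly_cost(hourly_rate: float, hours: int = HOURS_PER_MONTH) -> float:
    """Cost of keeping one hourly-priced model running for the given hours."""
    return hourly_rate * hours


on_demand = monthly_cost(LLAMA2_NON_COMMITTED)
committed = monthly_cost(LLAMA2_ONE_MONTH_COMMIT)
savings = on_demand - committed

print(f"Non-committed:  ${on_demand:,.2f} per month")   # $15,461.40
print(f"1-month commit: ${committed:,.2f} per month")   # $9,548.40
print(f"Savings:        ${savings:,.2f} ({savings / on_demand:.1%})")  # $5,913.00 (38.2%)
```

Because the capacity is billed for every hour whether or not it is used, hourly pricing only pays off when traffic is steady enough to keep the model busy.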

3. Fine-Tuning Costs

AWS Bedrock allows businesses to fine-tune certain models to fit their specific needs. Fine-tuning involves additional computation costs and is charged based on the number of tokens used for training.

For example:

- Fine-tuning: $0.0004 per 1,000 tokens

Note that not all models offer fine-tuning options, and pricing for training can vary depending on the model and its capabilities.

4. Cost Optimization Strategies

To optimize costs while using AWS Bedrock, consider the following strategies:

- Token-based optimization: Be mindful of both input and output token usage. You can batch requests or use shorter prompts to reduce token consumption.

- Select the appropriate model: For simple tasks, consider using a more cost-efficient model like Claude Instant rather than the more powerful Claude 2.0.

- Commitment plans: AWS offers discounts for long-term commitments. For example, signing up for a 1-month or 6-month commitment plan can save you money on hourly usage.

5. Pricing Table for AWS Bedrock Models

Here is a detailed pricing table for the AWS Bedrock models, broken down by model type and pricing categories:

| Model Provider | Model Name | Price per 1,000 Input Tokens | Price per 1,000 Output Tokens | Price to Fine-Tune (per 1,000 Tokens) | Price per Hour (Non-committed) | Price per Hour (1-Month Commit) | Price per Hour (6-Month Commit) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Amazon Titan | Titan Text Lite | $0.00 | $0.00 | $0.00 | $7.10 | $6.40 | $5.10 |
| Amazon Titan | Titan Text Express | $0.00 | $0.00 | $0.01 | $20.50 | $18.40 | $14.80 |
| Amazon Titan | Titan Text Embeddings | $0.00 | N/A | N/A | $6.40 | $5.10 | N/A |
| Anthropic | Claude Instant | $0.00 | $0.01 | N/A | $39.60 | $22.00 | N/A |
| Anthropic | Claude | $0.01 | $0.02 | N/A | $63.00 | $35.00 | N/A |
| Meta | Llama 2 Chat (13B) | $0.00 | $0.00 | $0.00 | $21.18 | $13.08 | N/A |
| Meta | Llama 2 Chat (70B) | $0.00 | $0.00 | $0.01 | $21.18 | $13.08 | N/A |
| Stability AI | SDXL 0.8/1.0 | $0.018 (512px) / $0.036 (1024px) | $0.00195 (<=512px) / $0.00799 (>1024px) | N/A | $21.18 | $13.08 | N/A |
| Stability AI | SDXL 1.0 (Premium) | $0.036 (1024px) | $0.01 | N/A | $21.18 | $13.08 | N/A |
| Amazon Titan | Titan Image Generator | N/A | $0.005 per image | N/A | $16.20 | $13.00 | N/A |
| Cohere | Command-Light | $0.00 | $0.00 | $0.00 | $6.85 | $4.11 | N/A |

Explanations:

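- Price per 1,000 Tokens: This is the cost of using a model to process 1,000 tokens, both for input (data you send to the model) and output (the model's response). Values shown as $0.00 in the table are rounded to two decimal places; the rates quoted earlier (for example, $0.00163 per 1,000 input tokens for Claude Instant) show that they are small rather than zero.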

- Price to Fine-Tune (per 1,000 Tokens): This reflects the cost to fine-tune a model using your own dataset, if applicable. Fine-tuning prices are typically for text-based models and can vary by model.

- Price per Hour: This is the hourly cost for running a model. Non-committed pricing is for on-demand usage, while committed pricing provides discounts for longer-term contracts (1-month or 6-month commitments).

- Image Models: For image generation models like Titan Image Generator and SDXL, pricing is based on the number of images generated, and the cost varies depending on image resolution.

Notes:

- Fine-Tuning: Some models like Claude Instant or Claude do not offer fine-tuning options, while others like Llama 2 and Titan Text Lite offer fine-tuning for text-based tasks.

- Pricing for Image Models: Image models like SDXL and Titan Image Generator charge based on image generation rather than token usage. Additionally, image resolution (e.g., 512px vs. 1024px) can affect pricing.

- Variable Pricing: Some models, such as Jurassic-2 from AI21 Labs and Cohere, have more variable pricing. It is recommended to check specific pricing details on the AWS Pricing page or the respective model provider's website.

AWS Bedrock Challenges

While AWS Bedrock is a powerful platform for building generative AI applications, it’s not without its challenges. Here are the key obstacles organizations may face and strategies to address them:

1. Integration Complexity

Integrating AWS Bedrock with existing systems can be complex, especially for organizations with legacy infrastructure. Aligning Bedrock’s models with your data pipelines and cloud services often requires custom configurations, and the integration process may require considerable technical expertise.

Solution: Ensure your team is familiar with AWS services or consider professional consulting to streamline integration. AWS’s extensive documentation and support resources can also aid in reducing setup complexity.

2. Model Selection Overload

AWS Bedrock offers access to a variety of pre-trained models from top AI providers like Amazon, Anthropic, and Stability AI. While this provides flexibility, the sheer number of choices can overwhelm users unfamiliar with AI model nuances.

Solution: Narrow down your selection by clearly defining your AI application’s goals and conducting small-scale experiments to evaluate model performance. AWS’s documentation and customer support can guide you in selecting the best-fit model.

3. Data Compatibility and Preprocessing

Data is key to effective AI models, but ensuring compatibility with Bedrock’s models can be challenging. Each model may have specific data requirements, and preprocessing data to meet these needs can be time-consuming and complex.

Solution: Invest in robust data preprocessing tools or leverage AWS services like SageMaker to automate data preparation. A well-structured data pipeline can significantly reduce friction in model deployment.

4. Cost Management and Predictability

AWS Bedrock’s pay-as-you-go pricing model can lead to unpredictable costs, particularly for large-scale AI workloads. Usage-based pricing can make it difficult to manage budgets, especially if the workload scales unexpectedly.

Solution: Regularly monitor usage through AWS cost management tools and set up budget alerts. Optimizing API calls, batch processing, and using smaller models for testing can help keep costs under control.
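As a sketch of the budget alert idea, the helper below builds a request for the AWS Budgets `create_budget` API that emails a contact when actual spend passes a percentage of a monthly limit. The function names, budget name, and sample values are hypothetical, and the optional service filter value is an assumption: check the exact service name shown in your own billing data before relying on it.

```python
def build_budget_request(account_id, monthly_limit_usd, alert_email,
                         threshold_percent=80.0, service_name=None):
    """Build keyword arguments for the AWS Budgets create_budget call."""
    budget = {
        "BudgetName": "bedrock-monthly-budget",  # hypothetical name
        "BudgetLimit": {"Amount": str(monthly_limit_usd), "Unit": "USD"},
        "TimeUnit": "MONTHLY",
        "BudgetType": "COST",
    }
    if service_name:
        # Assumption: the service name must match the label used in billing
        budget["CostFilters"] = {"Service": [service_name]}
    notification = {
        "Notification": {
            "NotificationType": "ACTUAL",
            "ComparisonOperator": "GREATER_THAN",
            "Threshold": threshold_percent,
            "ThresholdType": "PERCENTAGE",
        },
        "Subscribers": [{"SubscriptionType": "EMAIL", "Address": alert_email}],
    }
    return {
        "AccountId": account_id,
        "Budget": budget,
        "NotificationsWithSubscribers": [notification],
    }


def create_budget(request):
    """Send the request to AWS Budgets (requires credentials and boto3)."""
    import boto3  # imported lazily so the builder works without AWS access
    return boto3.client("budgets").create_budget(**request)


request = build_budget_request("123456789012", 500, "ops@example.com")
print(request["Budget"]["BudgetLimit"])
```

Calling `create_budget(request)` with real account details registers the alert; keeping the builder separate makes the thresholds easy to review before anything is sent to AWS.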

Fine-Tuning AWS Bedrock Models

Fine-tuning a model in AWS Bedrock allows businesses to customize AI models for specific tasks, such as creating a specialized chatbot or enhancing language processing capabilities for a niche domain.

Step-by-Step Guide to Fine-Tuning a Model

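- Select a Pre-trained Model: Choose a model from the available AWS Bedrock providers that best fits your needs and supports fine-tuning. For instance, you might start with Llama 2 or Titan Text Lite for a text generation task; as noted in the pricing section, Claude models do not offer fine-tuning.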

- Prepare Your Dataset: Fine-tuning requires a dataset that is closely aligned with your specific use case. For example, you may want to fine-tune a model to respond in a certain way in customer support scenarios. Clean and format your data, ensuring it's in the appropriate format for training.

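- Use the AWS SDK for Fine-Tuning: Below is a basic Python code snippet to start a fine-tuning job using the AWS SDK. The training data is read from Amazon S3 rather than passed inline, and the job name, model name, role ARN, base model ID, and bucket paths are placeholders:

```python
import boto3

# Fine-tuning (model customization) is managed through the "bedrock" client
client = boto3.client("bedrock")

# Example dataset for fine-tuning (simplified). Upload it to S3 as JSONL,
# one record per line, for example:
# {"prompt": "Customer: Where is my order?", "completion": "Support Bot: I can assist with your queries about orders."}

# Start fine-tuning the model
response = client.create_model_customization_job(
    jobName="support-bot-finetune",         # placeholder
    customModelName="support-bot-model",    # placeholder
    roleArn="arn:aws:iam::123456789012:role/YourBedrockRole",  # placeholder IAM role
    baseModelIdentifier="your-base-model-id",  # placeholder: a model that supports fine-tuning
    trainingDataConfig={"s3Uri": "s3://your-bucket/train.jsonl"},
    outputDataConfig={"s3Uri": "s3://your-bucket/output"},
    hyperParameters={"epochCount": "1"},    # names and values depend on the base model
)

# The response contains the ARN used to check the job status later
print(f"Fine-tuning job started with job ARN: {response['jobArn']}")
```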

- Monitor and Adjust: After fine-tuning, it’s essential to evaluate the model’s performance. You can test it with real-world inputs and adjust your dataset and hyperparameters as needed.
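The dataset preparation and monitoring steps above can be sketched as two small helpers. This is a minimal illustration under stated assumptions: `to_jsonl` and `wait_for_job` are hypothetical names, the prompt/completion record layout is the JSONL format commonly used for text model fine-tuning, and the `client` argument is expected to be a boto3 `bedrock` client (its `get_model_customization_job` call reports the job status).

```python
import json
import time


def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as JSONL, one record per line."""
    lines = [json.dumps({"prompt": p, "completion": c}) for p, c in examples]
    return "\n".join(lines) + "\n"


def wait_for_job(client, job_arn, poll_seconds=60.0, max_polls=120):
    """Poll a fine-tuning job until it reaches a terminal status.

    `client` is assumed to be boto3.client("bedrock"); it is passed in
    so the helper can also be exercised with a stub.
    """
    terminal = {"Completed", "Failed", "Stopped"}
    for _ in range(max_polls):
        status = client.get_model_customization_job(jobIdentifier=job_arn)["status"]
        if status in terminal:
            return status
        time.sleep(poll_seconds)
    raise TimeoutError(f"Job {job_arn} did not finish after {max_polls} polls")


# Build a tiny example dataset (hypothetical support conversation)
sample = to_jsonl([
    ("Customer: Where is my order?", "Support Bot: I can look that up for you."),
])
print(sample, end="")
```

Upload the JSONL output to the S3 location referenced by the fine-tuning job, then call `wait_for_job` with the job ARN before evaluating the customized model.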

AWS Bedrock Use Cases and Applications

AWS Bedrock is suitable for a wide range of AI applications across various industries. The flexibility of pre-trained models and easy deployment means businesses can quickly scale AI capabilities without having to invest in extensive infrastructure.

1. Natural Language Processing (NLP)

- Chatbots and Virtual Assistants: Using models like Claude and Llama 2, businesses can build intelligent chatbots that handle customer queries, support requests, and more.

- Text Summarization and Content Generation: Generate high-quality, readable content for blogs, reports, or social media posts. Jurassic-2 Ultra is particularly strong in this area.

2. Image Generation

- Creative Content: Stable Diffusion enables the generation of realistic images from text prompts. This is useful for industries like advertising, marketing, and game design.

- Product Prototyping: Businesses can quickly generate visual prototypes for product concepts without needing a graphic designer.

3. Predictive Analytics and Data Insights

- Using AWS Bedrock for predictive analytics, companies can analyze large datasets and generate insights on trends, customer behavior, and market forecasts.

Frequently Asked Questions (FAQ) on AWS Bedrock

1. How is AWS Bedrock priced?

AWS Bedrock’s pricing is based on:

- Tokens: You are charged for input and output tokens when using text-based models like Claude or Llama 2.

- Images: For image generation models like Stable Diffusion, pricing is based on the number of images generated.

- Hourly usage: Models like Claude and Jurassic-2 can also be charged by the hour depending on whether you are using a no-commit or commitment-based plan (e.g., 1-month or 6-month plans).

- Fine-tuning costs: Fine-tuning a model to better suit your needs incurs additional costs based on the amount of data processed.

To optimize costs, you can leverage AWS pricing calculators, use shorter prompts for text models, and select the most cost-efficient model for your needs.

2. How can I fine-tune models in AWS Bedrock?

Fine-tuning a model in AWS Bedrock allows you to tailor pre-trained models to specific business use cases. The process involves:

- Selecting a base model: Choose a model that aligns with your desired task.

- Preparing your dataset: Upload a dataset that is closely aligned with your specific use case (e.g., customer support conversations, medical terminology, etc.).

- Fine-tuning the model: You can initiate fine-tuning via AWS SDK or the AWS console. This involves adjusting hyperparameters and training the model on your dataset.

- Deploying the model: After fine-tuning, deploy the model through AWS Bedrock’s APIs for integration with your application.

Fine-tuning costs are generally based on the number of tokens processed, and the more specialized the fine-tuning, the more computational resources it requires.

3. Can I use AWS Bedrock for production applications?

Yes, AWS Bedrock is designed to handle both development and production environments. With its fully managed infrastructure, automatic scaling, and integration with AWS services (like Lambda for serverless deployments and SageMaker for further model training), it is highly suitable for deploying AI models into production workloads. Many companies use AWS Bedrock to power AI-driven applications like chatbots, document processing, customer service automation, and more.

4. What are the main benefits of using AWS Bedrock for AI model deployment?

- Access to Top-Tier Models: You can access pre-trained models from leading providers such as Claude (Anthropic), Llama 2 (Meta), and Stable Diffusion (Stability AI), all through one platform.

- Seamless Scaling: AWS Bedrock runs on AWS infrastructure, allowing you to scale your AI models as your business needs grow, without worrying about infrastructure management.

- Cost Optimization: The flexible pricing model allows you to control costs based on your usage—whether you’re processing tokens for text models or generating images.

- Rapid Deployment: AWS Bedrock abstracts away the complexities of model training and infrastructure management, enabling you to deploy AI models quickly and efficiently.

- Integration with AWS Services: It integrates well with other AWS services like SageMaker, Lambda, and S3, making it easier to build end-to-end AI workflows.

5. How do I integrate AWS Bedrock with other AWS services like Lambda and SageMaker?

AWS Bedrock is designed to work seamlessly with other AWS services:

- Lambda: Use AWS Lambda to trigger AWS Bedrock model inference automatically in response to events, such as changes in data stored in S3.

- SageMaker: Use Amazon SageMaker alongside AWS Bedrock when you need advanced tools for custom model training, deployment, and monitoring beyond Bedrock's built-in fine-tuning.

- CloudWatch: For monitoring and logging, Amazon CloudWatch can be integrated to track model performance and usage metrics.

Here’s a simple example of integrating AWS Lambda with AWS Bedrock to call an AI model:

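```python
import json

import boto3

# Initialize the Bedrock runtime client once, outside the handler,
# so warm Lambda invocations can reuse it
client = boto3.client("bedrock-runtime")


def lambda_handler(event, context):
    # Example input data (modify based on use case); Claude v2 text
    # completions expect the Human/Assistant prompt format
    input_data = {
        "prompt": "\n\nHuman: Please summarize this text.\n\nAssistant:",
        "max_tokens_to_sample": 300,
    }

    # Invoke the model
    response = client.invoke_model(
        modelId="anthropic.claude-v2",
        body=json.dumps(input_data),
    )

    # Process and return the result; the generated text is in "completion"
    model_output = json.loads(response["body"].read())["completion"]
    return {
        "statusCode": 200,
        "body": json.dumps({"output": model_output}),
    }
```

This AWS Lambda function calls AWS Bedrock to process text and returns the output in real time. The function's execution role needs permission to invoke the model, and you can customize this integration further based on your specific use case.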
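When the Lambda function is triggered by changes in S3, as mentioned above, the handler first needs to work out which objects changed. The helper below is a minimal sketch of that step; `extract_s3_objects` is a hypothetical name, and the event layout follows the standard S3 notification format, in which object keys arrive URL-encoded.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record.get("s3")
        if not s3_info:
            continue  # skip records that did not come from S3
        bucket = s3_info["bucket"]["name"]
        # Keys are URL-encoded in the event (spaces arrive as "+")
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


# Trimmed-down sample event for illustration
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "your-bucket"},
                "object": {"key": "reports/q1+summary.txt"}}}
    ]
}
print(extract_s3_objects(sample_event))
```

Inside `lambda_handler`, each returned pair can be read from S3 and its text passed to the model as the prompt.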

6. What are some real-world use cases for AWS Bedrock?

Here are a few real-world use cases where AWS Bedrock excels:

- Customer Support Chatbots: Use Claude models to create intelligent chatbots that handle customer queries 24/7, reducing operational costs.

- Content Generation: Generate blog posts, product descriptions, and social media content with models like Jurassic-2 and Claude.

- Image Generation for Marketing: Generate high-quality marketing materials, logos, or creative images from text prompts using Stable Diffusion.

- Sentiment Analysis: Analyze customer feedback, product reviews, or social media posts to gain insights into public sentiment using Llama 2.

7. How do I monitor my usage and costs on AWS Bedrock?

To manage your AWS Bedrock usage and optimize costs, you can use tools like:

- AWS Cost Explorer: Visualize and track your AWS spending across services, including AWS Bedrock.

- AWS Budgets: Set custom cost and usage budgets to receive notifications when your usage exceeds predefined thresholds.

- CloudWatch: Use CloudWatch to monitor model performance, usage metrics, and set alerts for unusual activities.
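For the CloudWatch option, token usage can be pulled programmatically. The sketch below builds a `get_metric_statistics` query and sums the result. The helper names are hypothetical, and the `AWS/Bedrock` namespace, the `InputTokenCount` metric, and the `ModelId` dimension are assumptions based on the metrics Bedrock publishes; confirm them in your CloudWatch console.

```python
from datetime import datetime, timedelta, timezone


def build_token_metric_query(model_id, metric_name="InputTokenCount",
                             hours=24, now=None):
    """Build keyword arguments for CloudWatch get_metric_statistics."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Bedrock",  # assumed namespace for Bedrock metrics
        "MetricName": metric_name,
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 3600,  # one datapoint per hour
        "Statistics": ["Sum"],
    }


def total_from_datapoints(datapoints):
    """Add up the hourly sums returned by CloudWatch."""
    return sum(point["Sum"] for point in datapoints)


def fetch_total(query):
    """Run the query against CloudWatch (requires credentials and boto3)."""
    import boto3  # imported lazily so the helpers work without AWS access
    response = boto3.client("cloudwatch").get_metric_statistics(**query)
    return total_from_datapoints(response["Datapoints"])


query = build_token_metric_query("anthropic.claude-v2", hours=24)
print(query["MetricName"], query["Period"])
```

Multiplying the token totals by the per-1,000-token rates gives a running estimate that can be compared against the figures in Cost Explorer.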