Databricks is a powerful platform for data analytics, machine learning, and big data processing, but its pricing structure can be challenging to navigate.

Understanding how Databricks charges for various services is crucial for businesses looking to optimize their cloud costs. In this guide, we'll explore the key factors that influence Databricks pricing, including compute costs, storage, and cluster management.

By breaking down these components, we’ll provide actionable insights to help you manage and reduce your Databricks expenses effectively.

The Evolution of Data Platforms: A Brief History

The Era of Big Data

The early 2000s witnessed an unprecedented surge in data generation, driven by the internet boom and rapid digitization. Organizations struggled to manage and analyze these growing datasets using traditional relational databases. The challenges were many:

- Scalability Issues: Traditional relational databases struggled to scale horizontally to handle large datasets.

- Unstructured Data: Emerging data types (text, images, logs) didn’t fit well into structured schemas.

- Processing Speed: Batch processing methods were too slow for real-time insights.

Apache Spark's Introduction

In 2010, Apache Spark emerged as a transformative solution. Unlike the Hadoop MapReduce framework, Spark introduced:

- In-Memory Computing: Spark’s ability to keep data in memory drastically improved processing speed.

- Flexibility: Support for multiple languages (Python, Scala, Java) expanded its usability.

- Rich Libraries: Over the following years, Spark added MLlib for machine learning, GraphX for graph processing, and Spark Streaming for real-time data processing.

The Birth of Databricks

Recognizing the potential of Spark, its creators founded Databricks in 2013. Their mission was to simplify big data processing and make Spark more accessible to enterprises. Key goals included:

- Abstracting Complexity: Managing Spark clusters manually was intricate and error-prone.

- Enabling Collaboration: Bridging the gap between data engineers, data scientists, and business analysts.

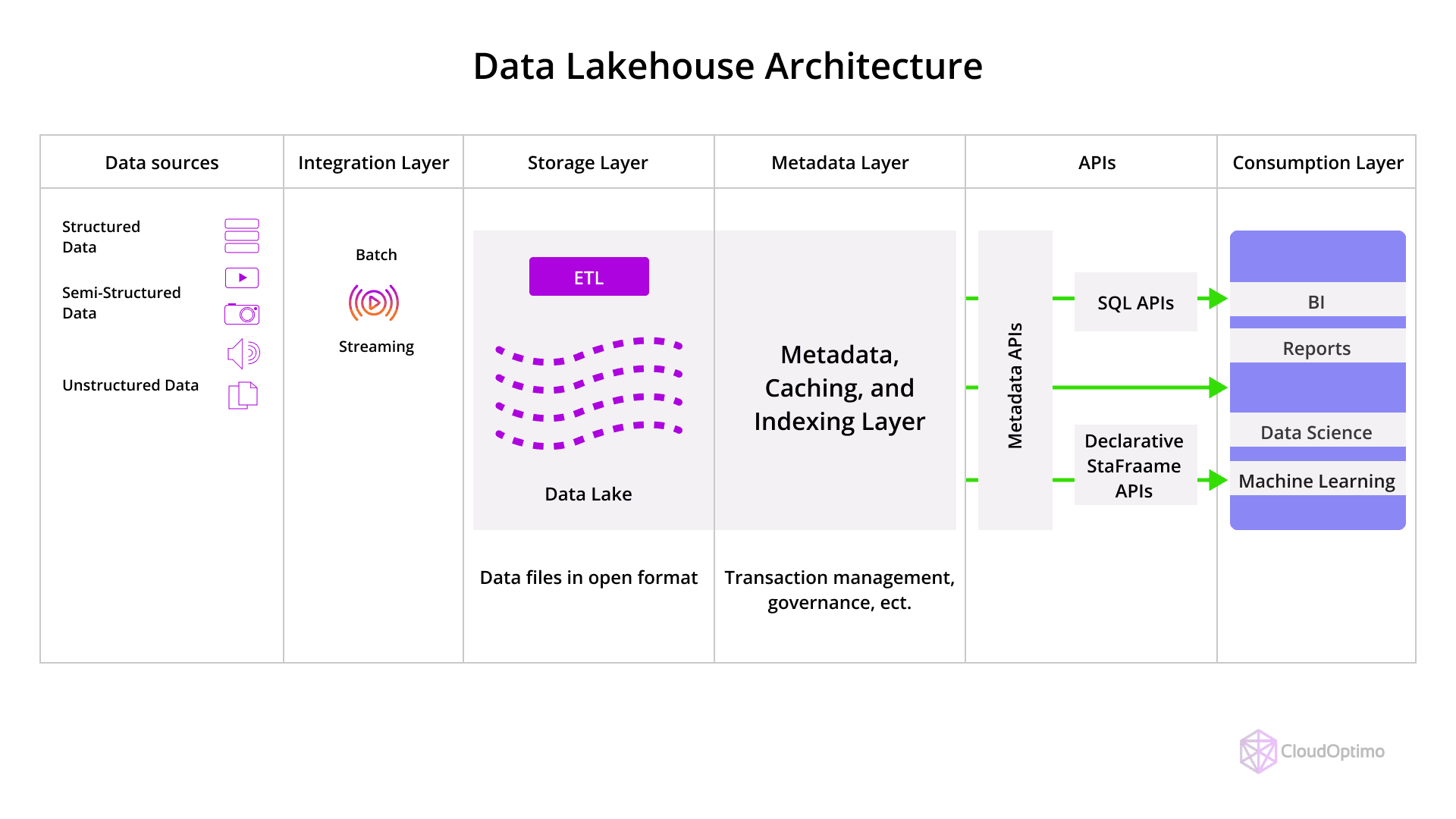

- Promoting the Lakehouse Concept: Combining the best of data lakes and warehouses for a more flexible, scalable architecture.

Why Was Databricks Introduced?

Databricks aimed to solve several challenges:

- Simplifying Spark Deployment: Managing Spark clusters manually was cumbersome and error-prone.

- Unified Data Workflows: There was a gap between data engineering, data science, and business analytics.

- Lakehouse Architecture: Combining the benefits of data lakes and data warehouses to support both structured and unstructured data.

How Does Databricks Solve Data Challenges?

Unified Data Analytics Platform

Databricks isn’t just about running Spark—it’s a comprehensive platform that unifies data engineering, analytics, and machine learning. Its core components include:

- Collaborative Notebooks: These support multiple languages (Python, Scala, SQL, R) in a single interface, fostering teamwork and experimentation.

- Automated Cluster Management: Databricks handles cluster provisioning, autoscaling, and auto-termination, so teams spend less time on manual cluster administration.

- Job Scheduling: Automate workflows, run recurring tasks, and integrate with CI/CD pipelines.

The Lakehouse Architecture

Databricks pioneered the Lakehouse architecture, merging data lakes' scalability with data warehouses' reliability. Benefits include:

- Unified Storage: Support for structured and unstructured data within a single repository.

- ACID Transactions: Ensure data integrity, enabling complex analytical operations without compromising reliability.

- Schema Enforcement: Delta Lake tables enforce their schema on write, while raw files in the lake can still be read schema-on-read when flexibility matters more.

Built-in Governance and Security

Databricks incorporates enterprise-grade security features:

- Role-Based Access Control (RBAC): Fine-grained control over data access.

- Audit Logging: Track user activities for compliance purposes.

- Version Control: Delta Lake keeps a version history of each table (time travel), and notebooks can be versioned through Git integration.

Key Components of the Databricks Pricing Model

Understanding the Databricks pricing model is crucial for cost optimization. Here’s a breakdown of the key components:

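Workspaces and Collaboration Costs

- Workspaces: The primary interface where users create and manage notebooks, jobs, and data. Databricks does not charge per user or per workspace; costs come from the compute (DBUs) consumed in the workspace, and the pricing tier determines which features are enabled and the price per DBU.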

- Collaboration Tools: Advanced tools like role-based permissions and secure sharing are available in Premium and Enterprise tiers.

Cluster Pricing

Clusters are central to Databricks' compute power. Costs depend on:

- Cluster Types:

- Standard Clusters: Best for development and testing.

- High-Concurrency Clusters: Support multiple concurrent users, ideal for shared environments.

- Job Clusters: Temporary clusters for running scheduled jobs that terminate automatically after completion; jobs compute is also billed at a lower price per DBU than all-purpose (interactive) compute.


Compute Costs

Compute costs are determined by virtual machine (VM) type, node count, and usage duration. The cloud provider bills the instances for as long as they run, and Databricks charges DBUs for the same period.

Compute Costs Breakdown

Compute costs are influenced by:

- Instance Types:

- On-Demand Instances: Reliable but more expensive.

- Spot Instances: Cheaper but may be terminated based on availability.

- Cluster Size: The number and type of nodes.

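- Runtime Hours: Total duration of usage. On classic compute, Databricks measures its share of the bill in Databricks Units (DBUs) consumed over that time, while the cloud provider bills the underlying VMs separately.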

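The factors above combine into a single hourly figure for a classic cluster. The sketch below assumes a simple model: every node accrues the cloud VM price plus a per-node DBU charge, and a spot discount lowers only the VM portion, because Databricks' DBU charge does not depend on how the VM was purchased. `NodeSpec` and `cluster_hourly_cost` are hypothetical helpers; the usage example borrows the m5.xlarge on-demand price and the AWS Premium per-DBU price quoted later in this guide, while the 0.75 DBU/hour node rate is a made-up value (look up the published rate for your instance type).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical per-node figures for one instance type."""
    vm_price_per_hour: float  # cloud provider's on-demand price for the VM
    dbus_per_hour: float      # DBUs charged per node-hour (assumed value)


def cluster_hourly_cost(node: NodeSpec, nodes: int, price_per_dbu: float,
                        spot_nodes: int = 0, spot_discount: float = 0.0) -> float:
    """Estimate the hourly cost of a classic cluster (VM cost + DBU cost).

    A spot discount reduces only the VM portion; DBUs are charged the same
    way for spot and on-demand nodes.
    """
    if not 0 <= spot_nodes <= nodes:
        raise ValueError("spot_nodes must be between 0 and nodes")
    if not 0.0 <= spot_discount < 1.0:
        raise ValueError("spot_discount must be in [0, 1)")
    on_demand_vm = (nodes - spot_nodes) * node.vm_price_per_hour
    spot_vm = spot_nodes * node.vm_price_per_hour * (1 - spot_discount)
    dbu_cost = nodes * node.dbus_per_hour * price_per_dbu
    return on_demand_vm + spot_vm + dbu_cost


if __name__ == "__main__":
    node = NodeSpec(vm_price_per_hour=0.19, dbus_per_hour=0.75)  # 0.75 DBU/hour is hypothetical
    all_on_demand = cluster_hourly_cost(node, nodes=4, price_per_dbu=0.55)
    mostly_spot = cluster_hourly_cost(node, nodes=4, price_per_dbu=0.55,
                                      spot_nodes=3, spot_discount=0.70)
    print(f"on-demand: ${all_on_demand:.2f}/hour, 3 of 4 nodes on spot: ${mostly_spot:.2f}/hour")
```

In this example the DBU portion ($1.65/hour) is identical in both cases, which is why moving to spot instances cuts the total bill by less than the VM discount alone suggests.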

Storage Costs

Databricks integrates with external cloud storage (AWS S3, Azure Blob Storage, Google Cloud Storage). Storage costs are based on:

- Data Volume: The total data stored.

- Storage Classes: Costs vary between hot (frequent access) and cold (archival) storage.

- Retention Policies: Efficient lifecycle rules help manage long-term storage costs.

Data Transfer and Networking Costs

Moving data between cloud regions or out of the cloud incurs network charges from the cloud provider, especially:

- Cross-Region Transfers: Expensive when moving data across cloud regions.

- Egress Costs: Higher fees for outbound data transfers compared to inbound.

Understanding Databricks Units (DBUs)

DBUs (Databricks Units) are the foundation of Databricks pricing, serving as the standardized measurement of computational resources consumed.

What is a DBU?

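A Databricks Unit is a normalized unit of processing power that reflects the compute resources (such as CPU and memory) a workload uses. Think of it as similar to how a kilowatt-hour measures electricity usage: it's a standardized way to measure computational work, and your Databricks bill is the number of DBUs consumed multiplied by the price per DBU.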

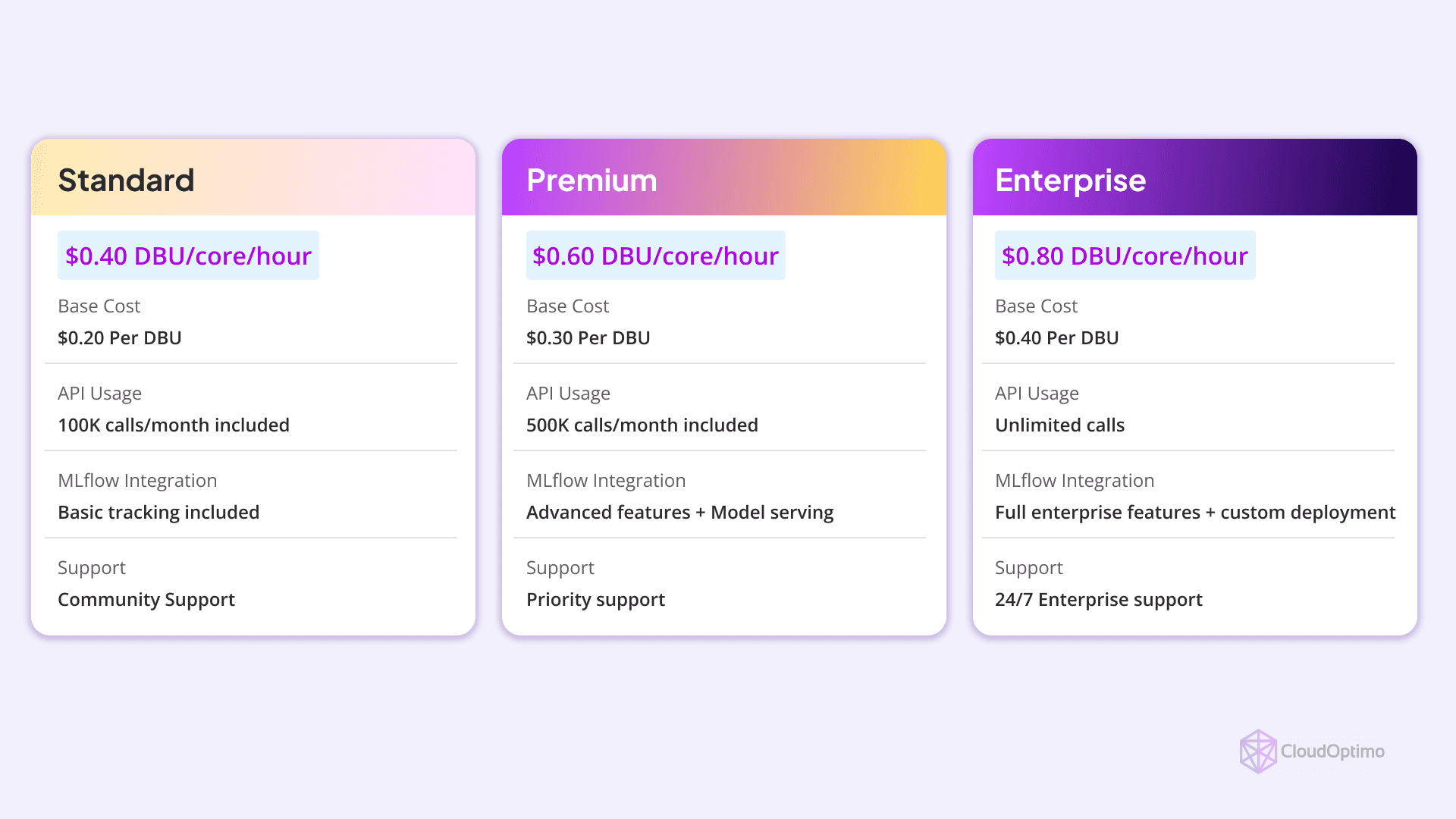

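DBU Rates by Tier

The examples in this guide use the following simplified per-core consumption rates. In practice, Databricks publishes a DBU-per-hour rate for each instance type and compute type, and the pricing tier mainly changes the price you pay per DBU, so treat these figures as illustrative:

- Standard Tier: 0.40 DBU/hour per core

- Premium Tier: 0.60 DBU/hour per core

- Enterprise Tier: 0.80 DBU/hour per core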

Databricks Pricing Tiers Explained

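Databricks offers three main pricing tiers, each tailored to different organizational needs. The per-DBU prices below are the simplified figures used in this guide's worked examples; actual list prices vary by cloud provider, region, and compute type, as the provider breakdown later in this guide shows: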

Standard Tier ($0.20/DBU)

It serves as the entry point, offering basic support and standard security features. This tier is ideal for teams starting their data journey, including basic MLflow tracking capabilities.

Premium Tier ($0.30/DBU)

It steps up with advanced security features and Unity Catalog integration. Notable additions include priority support ($2,000/month) and enhanced MLflow capabilities, making it suitable for growing organizations with more sophisticated data needs.

Enterprise Tier ($0.40/DBU)

It delivers the full suite of features with custom SLAs and advanced governance. This tier includes enterprise-grade support and is designed for organizations requiring the highest level of service and security.

- Additional costs to consider:

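- Storage: Delta Lake tables live in your cloud object storage and are billed at the provider's rates (about $23/TB/month on S3 Standard); Unity Catalog metadata ($0.10/GB/month)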

- MLflow Model Serving starts at $0.35/DBU across all tiers

- Delta Live Tables add 20% to compute costs

- Feature Store pricing is customized based on usage

Quick Reference: Converting DBU Consumption into Costs

To help you understand how DBUs translate into actual compute costs, the table below shows hourly and monthly estimates for several cluster sizes and pricing tiers:

| Cluster Size (Cores) | DBU Rate (per core-hour) | Hourly DBU Consumption (total) | Hourly Cost | Monthly Cost (8 hours/day, 22 days/month) |
|---|---|---|---|---|
| 1 core | Standard: 0.40 DBU | 0.40 DBU | $0.08 (at $0.20/DBU) | $14.08 |
| 4 cores | Standard: 0.40 DBU | 1.6 DBU | $0.32 (at $0.20/DBU) | $56.32 |
| 16 cores | Premium: 0.60 DBU | 9.6 DBU | $2.88 (at $0.30/DBU) | $506.88 |
| 32 cores | Premium: 0.60 DBU | 19.2 DBU | $5.76 (at $0.30/DBU) | $1,013.76 |

How to Use This Table:

- Identify the cluster size you plan to use (e.g., 4 cores, 16 cores).

- Find the DBU rate for the corresponding pricing tier (Standard, Premium, or Enterprise).

- Multiply the DBU rate by the number of cores to get your hourly DBU consumption.

- Multiply the hourly DBU consumption by your tier's price per DBU to get the hourly cost.

- Multiply the hourly cost by your usage hours (e.g., 8 hours/day) and the number of working days in the month (e.g., 22 working days).

- The result is an estimated monthly cost for your configuration.
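The steps above can be sketched as a small function. It hard-codes this guide's illustrative per-core DBU rates and per-DBU tier prices (Standard $0.20, Premium $0.30, Enterprise $0.40), which are assumptions rather than Databricks list prices; `estimate_monthly_cost` is a hypothetical helper name.

```python
# Illustrative rates from this guide's examples (assumptions, not Databricks list prices).
DBU_PER_CORE_HOUR = {"standard": 0.40, "premium": 0.60, "enterprise": 0.80}
PRICE_PER_DBU = {"standard": 0.20, "premium": 0.30, "enterprise": 0.40}


def estimate_monthly_cost(cores: int, tier: str,
                          hours_per_day: float = 8, days_per_month: int = 22) -> dict[str, float]:
    """Follow the table's steps: cores -> DBUs/hour -> $/hour -> $/month."""
    hourly_dbus = cores * DBU_PER_CORE_HOUR[tier]
    hourly_cost = hourly_dbus * PRICE_PER_DBU[tier]
    monthly_cost = hourly_cost * hours_per_day * days_per_month
    return {
        "hourly_dbus": round(hourly_dbus, 2),
        "hourly_cost": round(hourly_cost, 2),
        "monthly_cost": round(monthly_cost, 2),
    }


for cores, tier in [(1, "standard"), (4, "standard"), (16, "premium"), (32, "premium")]:
    print(cores, tier, estimate_monthly_cost(cores, tier))
```

With the defaults (8 hours/day, 22 days/month), the 4-core Standard configuration comes out to $56.32 per month; pass `hours_per_day=24, days_per_month=30` to model an always-on cluster.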

Real-World DBU Cost Examples

Let's break down estimated Databricks costs for two common scenarios that organizations typically encounter. Understanding these examples will help you better predict and plan your own Databricks expenses.

Scenario 1: Development Environment (A Typical Data Science Team Setup)

Environment Configuration:

- Cluster Size: 4 cores

- Usage Pattern: Standard working hours (8 hours/day)

- Pricing Tier: Standard (0.40 DBU/hour/core)

- Working Days: 22 days/month

Cost Breakdown:

- Hourly DBU Consumption

- Per Core: 0.40 DBU/hour

- Total Cores: 4

- Hourly DBUs: 4 × 0.40 = 1.6 DBUs/hour

- Daily Cost Calculation

- Hours Active: 8

- Daily DBUs: 1.6 DBUs/hour × 8 hours = 12.8 DBUs

- At $0.20 per DBU: 12.8 × $0.20 = $2.56/day

- Monthly Cost

- Working Days: 22

- Total Cost: $2.56 × 22 = $56.32/month

Cost-Saving Tip: With auto-termination enabled, this cluster shuts down after a period of inactivity, preventing unnecessary overnight charges.

Scenario 2: Production Analytics (Enterprise-Grade Analytics Environment)

Environment Configuration:

- Cluster Size: 16 cores

- Usage Pattern: 24/7 operation

- Pricing Tier: Premium (0.60 DBU/hour/core)

- Running Days: 30 days/month

Cost Breakdown:

- Hourly DBU Consumption

- Per Core: 0.60 DBU/hour

- Total Cores: 16

- Hourly DBUs: 16 × 0.60 = 9.6 DBUs/hour

- Daily Cost Calculation

- Hours Active: 24

- Daily DBUs: 9.6 DBUs/hour × 24 hours = 230.4 DBUs

- At $0.30 per DBU (Premium tier price): 230.4 × $0.30 = $69.12/day

- Monthly Cost

- Running Days: 30

- Total Cost: $69.12 × 30 = $2,073.60/month

Cost-Optimization Tip: For 24/7 workloads, enable autoscaling with a lower minimum worker count so the cluster can shrink during low-usage periods (typically midnight to early morning).
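To gauge what the tip above might save, the sketch below compares a fixed 16-core day with a hypothetical schedule that drops to 8 cores for 6 overnight hours. Databricks autoscaling reacts to load rather than the clock, so the fixed schedule is a simplifying assumption; `daily_dbus` is an illustrative helper using this guide's Premium rate of 0.60 DBU per core-hour.

```python
PREMIUM_DBU_PER_CORE_HOUR = 0.60  # this guide's illustrative Premium rate


def daily_dbus(schedule: list[tuple[float, int]], dbu_per_core_hour: float) -> float:
    """Sum DBUs for one day described as (hours, cores) segments covering 24 hours."""
    total_hours = sum(hours for hours, _ in schedule)
    if abs(total_hours - 24) > 1e-9:
        raise ValueError(f"schedule covers {total_hours} hours, expected 24")
    return sum(hours * cores * dbu_per_core_hour for hours, cores in schedule)


fixed = daily_dbus([(24, 16)], PREMIUM_DBU_PER_CORE_HOUR)            # about 230.4 DBUs/day
scaled = daily_dbus([(18, 16), (6, 8)], PREMIUM_DBU_PER_CORE_HOUR)   # about 201.6 DBUs/day
savings_share = 1 - scaled / fixed                                    # about 0.125
print(f"{fixed:.1f} vs {scaled:.1f} DBUs/day, {savings_share:.1%} fewer DBUs")
```

Multiply either figure by your tier's price per DBU to convert it to dollars; the 12.5% reduction holds regardless of that price.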

Key Insights from These Examples

- Scale Impact

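- 4x increase in cores (4 to 16), combined with the Premium tier's higher per-core rate, led to a 6x increase in DBU consumption (1.6 to 9.6 DBUs/hour)

- Premium tier's per-core DBU rate is 50% higher than Standard's (0.60 vs. 0.40)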

- Usage Pattern Impact

- 8-hour vs 24-hour operation creates a 3x difference in daily runtime

- Working days (22) vs calendar days (30) significantly affects monthly costs

- Cost Control Levers

- Core count has the largest impact on cost

- Operating hours are the second biggest factor

- Pricing tier selection can increase costs by 50% or more
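The 6x jump in DBU consumption factors into the two changes between the scenarios (more cores and a higher per-core tier rate), and the difference in monthly runtime multiplies on top of it:

```latex
\frac{\text{DBUs/hour (Scenario 2)}}{\text{DBUs/hour (Scenario 1)}}
  = \frac{16 \times 0.60}{4 \times 0.40}
  = \frac{9.6}{1.6}
  = \underbrace{4}_{\text{cores}} \times \underbrace{1.5}_{\text{tier rate}}
  = 6,
\qquad
\frac{\text{monthly hours (Scenario 2)}}{\text{monthly hours (Scenario 1)}}
  = \frac{24 \times 30}{8 \times 22}
  = \frac{720}{176}
  \approx 4.09
```

Together, Scenario 2 consumes about 6 × 4.09 ≈ 24.5 times as many DBUs per month as Scenario 1 (6,912 vs. 281.6), before any difference in the price per DBU.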

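Remember: These calculations assume consistent usage patterns and cover only Databricks DBU charges; on classic (non-serverless) compute, your cloud provider bills the underlying VMs separately.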

Databricks Pricing Across Cloud Providers

Databricks pricing structures vary across AWS, Azure, and GCP, influenced by compute, storage, and data transfer factors. Here's a comprehensive breakdown for each provider:

AWS Pricing:

- Standard Plan: Starts at $0.40 per DBU for all-purpose clusters.

- Premium Plan: $0.55 per DBU for interactive workloads.

- Serverless Compute (Preview): $0.75 per DBU under the Premium plan.

- Enterprise Plan: $0.65 per DBU for all-purpose compute.

Azure Pricing:

- Standard Plan: $0.40 per DBU for all-purpose clusters.

- Premium Plan: $0.55 per DBU for typical workloads.

- Serverless Compute (Premium Plan): $0.95 per DBU.

GCP Pricing:

- Premium Plan: $0.55 per DBU for all-purpose clusters.

- Serverless Compute (Premium Plan): $0.88 per DBU, which includes the underlying cloud instance cost.

Key Considerations:

- Region and Workload: Pricing may fluctuate depending on the region, type of workload, and specific configurations.

- Discounts: Databricks may offer discounts based on usage volume or long-term commitment.

- DBU Calculator: To get a more accurate estimate of your costs based on your specific requirements, use the Databricks DBU Calculator.
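To compare the per-DBU list prices above side by side, the sketch below multiplies a hypothetical monthly consumption of 1,000 DBUs by each listed rate. The rates are copied from this section; classic (non-serverless) rates cover only Databricks' charge, with VM costs billed separately by the cloud provider, so they are not directly comparable with serverless rates. `dbu_charges` is a hypothetical helper.

```python
# Per-DBU prices (USD) as listed in this section; verify against current pricing pages.
DBU_PRICES = {
    ("AWS", "Standard, all-purpose"): 0.40,
    ("AWS", "Premium, interactive"): 0.55,
    ("AWS", "Premium, serverless (preview)"): 0.75,
    ("AWS", "Enterprise, all-purpose"): 0.65,
    ("Azure", "Standard, all-purpose"): 0.40,
    ("Azure", "Premium"): 0.55,
    ("Azure", "Premium, serverless"): 0.95,
    ("GCP", "Premium, all-purpose"): 0.55,
    ("GCP", "Premium, serverless"): 0.88,
}


def dbu_charges(monthly_dbus: float) -> list[tuple[str, str, float]]:
    """Return (cloud, plan, monthly DBU charge) rows, cheapest first."""
    rows = [(cloud, plan, round(monthly_dbus * price, 2))
            for (cloud, plan), price in DBU_PRICES.items()]
    return sorted(rows, key=lambda row: row[2])


for cloud, plan, cost in dbu_charges(1_000):  # hypothetical 1,000 DBUs per month
    print(f"{cloud:<6} {plan:<30} ${cost:,.2f}")
```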

Cloud Provider Pricing Breakdown

AWS

AWS is a top choice for Databricks, known for its extensive instance offerings and robust infrastructure.

- Compute Costs:

- On-Demand Instances: Start at $0.072/hour for basic instances.

- Reserved Instances: Save up to 72% with 1- or 3-year commitments.

- Spot Instances: Offer up to 90% savings for fault-tolerant jobs.


| Instance Type | On-Demand Cost (per hour) | Reserved Instances (1-year commitment) | Spot Instances Cost | Savings for Spot Instances |
|---|---|---|---|---|
| General Purpose (m5.xlarge) | $0.19 | $0.115 (40% off) | $0.06 | Up to 70% off |
| Compute Optimized (c5.xlarge) | $0.17 | $0.098 (42% off) | $0.05 | Up to 80% off |
| Memory Optimized (r5.xlarge) | $0.25 | $0.138 (45% off) | $0.08 | Up to 70% off |
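To see what the table's discounts mean over a month, the sketch below converts the hourly prices into 24/7 monthly VM costs (720 hours) and recomputes each option's saving against on-demand. The prices are copied from the table and cover the VM only: Databricks DBU charges come on top, and spot prices fluctuate, so treat the spot figures as a snapshot. `monthly_vm_costs` is a hypothetical helper.

```python
# Hourly USD prices copied from the AWS compute table; verify for your region.
AWS_HOURLY = {
    "m5.xlarge": {"on_demand": 0.19, "reserved_1yr": 0.115, "spot": 0.06},
    "c5.xlarge": {"on_demand": 0.17, "reserved_1yr": 0.098, "spot": 0.05},
    "r5.xlarge": {"on_demand": 0.25, "reserved_1yr": 0.138, "spot": 0.08},
}
HOURS_PER_MONTH = 720  # 24 hours x 30 days


def monthly_vm_costs(instance: str, nodes: int = 1) -> dict[str, float]:
    """Monthly VM cost per purchasing option, plus each option's saving vs. on-demand."""
    prices = AWS_HOURLY[instance]
    costs = {option: round(price * HOURS_PER_MONTH * nodes, 2)
             for option, price in prices.items()}
    savings = {f"{option}_saving": round(1 - price / prices["on_demand"], 3)
               for option, price in prices.items() if option != "on_demand"}
    return {**costs, **savings}


print(monthly_vm_costs("m5.xlarge"))
```

For a single m5.xlarge this gives about $137 on-demand, $83 reserved, and $43 spot per month, savings of roughly 39% and 68%, in line with the table's discount columns.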

- Storage Costs:

- Amazon S3 Standard: $0.023/GB-month.

- Amazon S3 Glacier (Cold Storage): $0.004/GB-month.

| Storage Type | Cost (per GB/month) |
|---|---|
| Amazon S3 Standard | $0.023 |
| Amazon S3 Intelligent-Tiering | $0.005 - $0.023 |
| Amazon S3 Glacier | $0.004 |
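Lifecycle rules pay off when most data can move to a colder storage class. This sketch prices a hypothetical 10 TB lake (10,000 GB) at the flat S3 Standard and Glacier rates quoted above ($0.023 and $0.004 per GB-month), first entirely in Standard and then with 80% archived. It deliberately ignores S3 Standard's volume tiers and the retrieval fees and minimum storage durations that apply to Glacier classes; `monthly_storage_cost` is a hypothetical helper.

```python
# Flat per-GB-month rates quoted above (simplified: no volume tiers or retrieval fees).
S3_RATES = {"standard": 0.023, "glacier": 0.004}


def monthly_storage_cost(gb_by_class: dict[str, float]) -> float:
    """Sum GB x rate across storage classes."""
    return round(sum(gb * S3_RATES[cls] for cls, gb in gb_by_class.items()), 2)


all_standard = monthly_storage_cost({"standard": 10_000})
mostly_archived = monthly_storage_cost({"standard": 2_000, "glacier": 8_000})
print(f"all Standard: ${all_standard:.2f}/month, 80% in Glacier: ${mostly_archived:.2f}/month")
```

That works out to about $230 versus $78 per month for the same 10 TB.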

- Data Transfer Costs:

- Inbound: Free.

- Outbound: Starts at $0.09/GB for the first 10TB

| Transfer Type | Cost |
|---|---|
| Inbound Data Transfer | Free |
| Outbound (first 10TB) | $0.09 per GB |
| Inter-region Transfer | $0.02 - $0.09 per GB |

- Key Advantages:

- Extensive range of instance types for diverse workloads.

- Flexible, scalable infrastructure.

- Challenges:

- High data transfer costs, especially for cross-region transfers.

- Complex pricing structure requires careful monitoring.

Azure

Azure integrates seamlessly with Microsoft tools and offers strong hybrid cloud capabilities.

- Compute Costs:

- On-Demand Instances: Start at $0.085/hour for basic VMs.

- Reserved Instances: Save up to 72% with 1- or 3-year commitments.

| Instance Type | On-Demand Cost (per hour) | Reserved Instances (1-year commitment) | Spot Instances Cost | Savings for Spot Instances |
|---|---|---|---|---|
| General Purpose (D4s v5) | $0.17 | $0.102 (38% off) | $0.05 | Up to 70% off |
| Compute Optimized (F4s v2) | $0.17 | $0.101 (40% off) | $0.06 | Up to 80% off |
| Memory Optimized (E4s v5) | $0.23 | $0.125 (45% off) | $0.08 | Up to 70% off |

- Storage Costs:

- Azure Blob Storage (Hot): $0.022/GB-month.

- Azure Blob Storage (Cool): $0.0184/GB-month.

| Storage Type | Cost (per GB/month) |
|---|---|
| Azure Blob Storage (Hot) | $0.022 |
| Azure Blob Storage (Cool) | $0.0184 |
| Azure Archive Storage | $0.0018 |

- Data Transfer Costs:

- Inbound: Free.

- Outbound: Starts at $0.087/GB for the first 10TB.

| Transfer Type | Cost |
|---|---|
| Inbound Data Transfer | Free |
| Outbound (first 10TB) | $0.087 per GB |
| Inter-region Transfer | $0.02 - $0.08 per GB |

- Key Advantages:

- Deep integration with Microsoft tools (e.g., Power BI, Azure Synapse).

- Ideal for enterprises leveraging hybrid cloud solutions.

- Challenges:

- Costs can escalate with complex or high-performance workloads.

- Limited Spot Instance availability compared to AWS.

GCP

GCP offers competitive pricing and is especially well suited to analytics and machine learning workloads.

- Compute Costs:

- On-Demand Instances: Start at $0.0475/hour.

- Committed Use Discounts: Save up to 70% with 1- or 3-year commitments.

| Instance Type | On-Demand Cost (per hour) | Committed Use Discount (1-year) | Preemptible VMs Cost | Savings for Preemptible VMs |
|---|---|---|---|---|
| General Purpose (n2-standard-4) | $0.15 | $0.095 (37% off) | $0.03 | Up to 80% off |
| Compute Optimized (c2-standard-4) | $0.19 | $0.114 (39% off) | $0.04 | Up to 80% off |
| Memory Optimized (m1-ultramem-40) | $5.32 | $3.19 (40% off) | $1.64 | Up to 70% off |
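The general-purpose rows of the three compute tables describe comparable machines (4 vCPUs and 16 GB of memory each), so they can be ranked directly. This sketch treats AWS and Azure reserved instances and GCP committed use discounts as one 1-year option, and spot and preemptible VMs as another; the prices are the table values, exclude Databricks DBU charges, and vary by region. `monthly_ranking` is a hypothetical helper.

```python
# General-purpose, 4-vCPU rows from the AWS, Azure, and GCP compute tables (USD per hour).
GENERAL_PURPOSE = {
    "AWS m5.xlarge": {"on_demand": 0.19, "committed_1yr": 0.115, "spot": 0.06},
    "Azure D4s v5": {"on_demand": 0.17, "committed_1yr": 0.102, "spot": 0.05},
    "GCP n2-standard-4": {"on_demand": 0.15, "committed_1yr": 0.095, "spot": 0.03},  # spot = preemptible
}


def monthly_ranking(option: str, hours: float = 720) -> list[tuple[str, float]]:
    """Rank VMs by cost for one purchasing option over `hours` (720 = 24/7 for 30 days)."""
    costs = [(vm, round(prices[option] * hours, 2)) for vm, prices in GENERAL_PURPOSE.items()]
    return sorted(costs, key=lambda item: item[1])


for option in ("on_demand", "committed_1yr", "spot"):
    print(option, monthly_ranking(option))
```

On these list prices GCP is cheapest in all three purchasing options, but the gap narrows once Databricks DBU charges, which do not depend on the VM price, are added.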

- Storage Costs:

- Google Cloud Storage (Standard): $0.020/GB-month.

- Nearline Storage: $0.010/GB-month.

| Storage Type | Cost (per GB/month) |
|---|---|
| Standard Storage | $0.02 |
| Nearline Storage | $0.01 |
| Coldline Storage | $0.004 |

- Data Transfer Costs:

- Inbound: Free.

- Outbound: Starts at $0.085/GB for the first 10TB.

| Transfer Type | Cost |
|---|---|
| Inbound Data Transfer | Free |
| Outbound (first 10TB) | $0.085 per GB |
| Inter-region Transfer | $0.01 - $0.08 per GB |
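Because the inter-region rates in the three transfer tables are ranges, a monthly transfer estimate is best expressed as a low/high pair. This sketch uses each provider's first-10TB internet egress rate and inter-region range; inbound transfer is free on all three, so it is omitted. It models only the first egress tier (assuming 10 TB = 10,240 GB), and `monthly_transfer_cost` is a hypothetical helper.

```python
FIRST_TIER_GB = 10 * 1024  # assumption: 10 TB = 10,240 GB

# USD per GB, from the AWS, Azure, and GCP transfer tables.
EGRESS = {
    "AWS": {"internet": 0.09, "inter_region": (0.02, 0.09)},
    "Azure": {"internet": 0.087, "inter_region": (0.02, 0.08)},
    "GCP": {"internet": 0.085, "inter_region": (0.01, 0.08)},
}


def monthly_transfer_cost(provider: str, internet_gb: float,
                          inter_region_gb: float) -> tuple[float, float]:
    """Return a (low, high) monthly estimate for internet egress plus inter-region transfer."""
    if internet_gb > FIRST_TIER_GB:
        raise ValueError("only the first 10 TB egress tier is modeled")
    rates = EGRESS[provider]
    low_rate, high_rate = rates["inter_region"]
    internet = internet_gb * rates["internet"]
    return (round(internet + inter_region_gb * low_rate, 2),
            round(internet + inter_region_gb * high_rate, 2))


for provider in EGRESS:
    print(provider, monthly_transfer_cost(provider, internet_gb=500, inter_region_gb=2_000))
```

In this example the low/high spread driven by inter-region rates (for AWS, $85 to $225) dwarfs the differences between the providers' internet egress rates.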

- Key Advantages:

- Cost-effective for analytics and machine learning tasks.

- Simplified pricing and strong native integrations with BigQuery.

- Challenges:

- Limited variety of instance types compared to AWS.

- Less mature in enterprise-grade hybrid solutions.

Note:

Pricing can vary based on region, instance type, usage commitment, and additional service features. The figures provided reflect current rates as of November 2024.

For the most accurate and up-to-date pricing, refer to the official pricing pages and calculators from AWS, Azure, and GCP.

Cost Optimization Strategies for Databricks

- Use Databricks Cost Management Tools

- Usage Dashboards and System Tables: Use Databricks' native usage reporting, such as the account console's usage dashboards and the `system.billing.usage` system table, to track cluster usage and spending over time.

- Cost Insights: Regularly monitor usage patterns directly within Databricks to identify areas of inefficiency, and address any high-cost activities before they scale.

- Optimize Resource Tagging and Management

- Job and Cluster Tagging: Tag Databricks clusters, jobs, and notebooks to accurately track usage and cost allocation across teams and projects.

- Cost Attribution Reports: Leverage Databricks’ built-in cost attribution features to generate reports that break down costs by tag, helping you pinpoint expensive processes or underutilized resources.

- Use Spot Instances for Non-Critical Workloads

- Spot Instances: For non-essential batch jobs or development work, switch to Spot Instances to significantly reduce compute costs, as they are much cheaper compared to On-Demand instances.

- On-Demand Instances for Production: Use On-Demand Instances for critical workloads that require guaranteed availability and stability, like production-level ML training.

- Optimize Cluster Configuration

- Right-Sizing Clusters: Match your Databricks cluster configuration to the specific workload. Use small clusters for development and testing and scale up as necessary for production tasks.

- Auto-Termination Settings: Set up auto-termination policies for clusters to automatically shut down idle clusters, preventing you from paying for unused resources.

- Optimize Delta Lake Performance

- Efficient Data Partitioning: Partition Delta Lake tables appropriately based on query patterns to minimize unnecessary compute overhead.

- Z-Ordering: Use Z-ordering (`OPTIMIZE <table> ZORDER BY (<columns>)`) on frequently filtered columns to colocate related data in the same files, so queries skip more data and use less compute.
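Several of these strategies (tagging, spot instances, right-sizing, and auto-termination) come together in a cluster definition. The sketch below builds an AWS cluster spec in the shape of a Databricks Clusters API create request; the field names come from that API, but every value is a hypothetical example, and Azure and GCP workspaces use `azure_attributes` and `gcp_attributes` in place of `aws_attributes`. The `missing_cost_controls` check is a hypothetical guardrail, not a Databricks feature; cluster policies are the built-in way to enforce rules like these.

```python
import json

# Hypothetical all-purpose cluster for a development team, shaped like a
# Databricks Clusters API create request (AWS). Values are examples only.
cluster_spec = {
    "cluster_name": "team-a-dev",                     # hypothetical name
    "spark_version": "15.4.x-scala2.12",              # check runtime versions available in your workspace
    "node_type_id": "m5.xlarge",                      # right-size to the workload
    "autoscale": {"min_workers": 1, "max_workers": 4},
    "autotermination_minutes": 30,                    # shut down after 30 idle minutes
    "custom_tags": {"team": "team-a", "project": "churn-model"},  # used for cost attribution
    "aws_attributes": {
        "availability": "SPOT_WITH_FALLBACK",         # prefer spot, fall back to on-demand
        "first_on_demand": 1,                         # keep the first node (the driver) on-demand
    },
}


def missing_cost_controls(spec: dict) -> list[str]:
    """Hypothetical guardrail: list cost controls missing from a cluster spec."""
    problems = []
    if spec.get("autotermination_minutes", 0) <= 0:  # 0 disables auto-termination
        problems.append("auto-termination disabled")
    if not spec.get("custom_tags"):
        problems.append("no custom tags for cost attribution")
    if "autoscale" not in spec and "num_workers" not in spec:
        problems.append("no worker count or autoscale range")
    return problems


print(missing_cost_controls(cluster_spec))  # []
print(json.dumps(cluster_spec, indent=2))   # request body you could send to the API
```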

Future Trends

- Evolving Pricing Models: Databricks may introduce more flexible pricing models that align more closely with specific workloads, allowing users to pay only for the exact compute power they use. Stay updated on new pricing models to make sure your team is using the most cost-effective option.

- Sustainability Incentives: As the industry shifts towards sustainability, cloud providers (and possibly Databricks) may offer cost incentives for using green or eco-friendly compute resources. This is an emerging trend worth exploring to balance cost efficiency with sustainability.

Understanding Databricks pricing is essential for maximizing ROI in data projects. By breaking down costs, comparing cloud providers, and implementing optimization strategies, technical teams can harness the full power of Databricks without overspending. As the platform evolves, staying informed about pricing models will ensure continued cost efficiency and performance.